LLMs Fail at Minecraft Collab: New Benchmark Exposes AI Teamwork Weakness

This is a Plain English Papers summary of a research paper called LLMs Fail at Minecraft Collab: New Benchmark Exposes AI Teamwork Weakness. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Why Collaboration Matters in AI Systems

Most real-world tasks require collaboration. Yet AI research has traditionally focused on optimizing individual agents to achieve human-level performance rather than creating systems that work well together. This approach misses an important reality: both humans and AI systems can accomplish more when they effectively collaborate.

Researchers from UC San Diego, Latitude Games, and Emergent Garden address this gap with MINDcraft, a platform for studying multi-agent collaboration through natural language in embodied environments, specifically Minecraft. Their accompanying benchmark, MineCollab, tests how well large language models (LLMs) collaborate on cooking, crafting, and construction tasks.

Task suites and challenges showing various collaborative and embodied reasoning challenges in MineCollab.

Their findings reveal significant limitations in how current LLMs handle collaborative tasks. Even advanced models like Claude 3.5 Sonnet struggle, placing less than 40% of blocks correctly in construction tasks. Performance drops dramatically when tasks involve four or five agents, and when agents must explicitly communicate detailed plans.

The primary bottleneck isn't the agents' ability to perform individual tasks, but rather their capability to effectively communicate and coordinate with other agents—an essential skill for productive collaboration. This suggests we need to develop new methods beyond standard prompting and fine-tuning to create truly collaborative AI systems.

How MINDcraft Compares to Other Platforms

The researchers position MINDcraft as a comprehensive platform for studying multi-agent collaboration, offering advantages over existing frameworks.

| Platform | Multi-Turn Chat | Partial Observability | Long Horizon | Embodied | Quantitative |
|---|---|---|---|---|---|
| Overcooked [3] | ✓ | ✓ | | | |
| CerealBar [4] | ✓ | ✓ | ✓ | ✓ | |
| Habitat AI [5] | ✓ | ✓ | ✓ | | |
| LLM-Coord [6] | ✓ | ✓ | ✓ | | |
| Generative Agents [7] | ✓ | ✓ | ✓ | ✓ | |
| PARTNR [8] | ✓ | ✓ | ✓ | | |
| MineLand [9] | ✓ | ✓ | ✓ | | |
| MINDcraft (ours) | ✓ | ✓ | ✓ | ✓ | ✓ |

Comparison to Other Platforms, illustrating the difference between MINDcraft and other popular platforms for studying multi-agent coordination or embodied agents.

Minecraft was chosen as the environment because it offers a vast, open-ended world with complex dynamics and sparse rewards. Unlike previous research platforms, MINDcraft uniquely combines:

- Multi-turn chat capabilities

- Partial observability (agents don't see everything)

- Long-horizon tasks (requiring 20+ steps)

- Embodied interactions

- Quantitative evaluation measures

Previous work on multi-agent collaboration mechanisms has studied how LLMs coordinate, but typically in simpler environments. MINDcraft goes beyond cooking-themed tasks (like Overcooked) to include crafting and construction, creating a more diverse testing ground for collaborative AI.

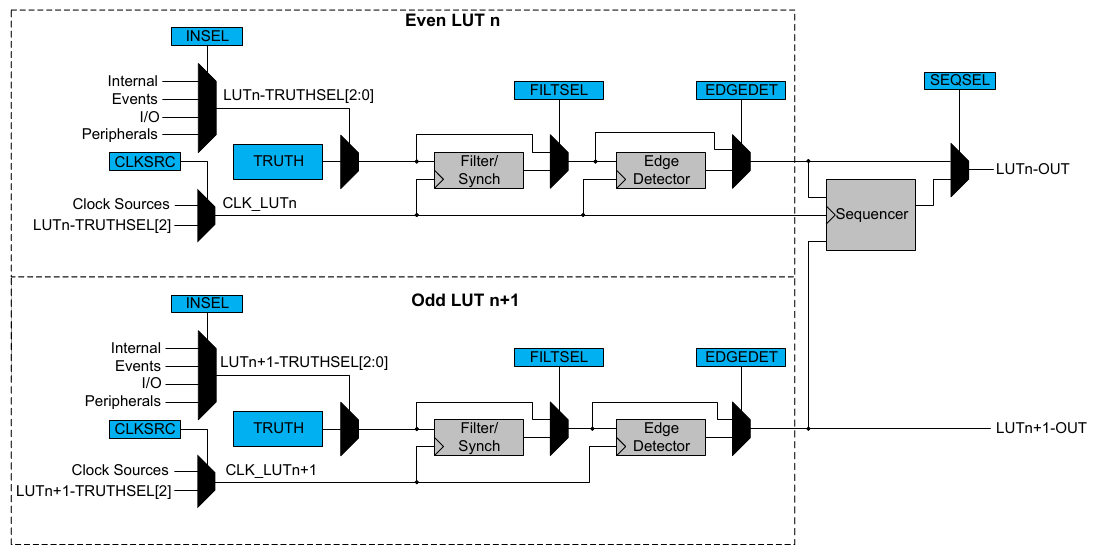

The MINDcraft Platform Architecture

MINDcraft provides a robust framework for running experiments in the grounded environment of Minecraft, designed for agentic instruction following, self-guided play, collaboration, and agent communication.

Overview of the MINDcraft workflow showing how user instructions are processed by agents and executed in the Minecraft environment.

State and Action Spaces

Agents in MINDcraft must actively query the environment to obtain observations. This follows a tool-calling approach in which commands such as !nearbyBlocks and !craftable return information about the environment on demand. Querying only what is needed reduces noisy information and keeps context lengths manageable.
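The query-on-demand idea can be illustrated with a small registry that maps each query command to a function rendering one slice of the world as text. This is a minimal sketch under stated assumptions: only the command names !nearbyBlocks and !craftable come from the text, while the `WorldSnapshot` class, the recipe table, and the output strings are hypothetical. MINDcraft itself answers these queries against a live Mineflayer bot.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical, simplified recipe table: item -> {ingredient: count}.
RECIPES: Dict[str, Dict[str, int]] = {
    "oak_planks": {"oak_log": 1},
    "stick": {"oak_planks": 2},
    "crafting_table": {"oak_planks": 4},
}


@dataclass
class WorldSnapshot:
    """Hypothetical stand-in for the game state the agent's bot can see."""
    nearby_blocks: List[str] = field(default_factory=list)
    inventory: Dict[str, int] = field(default_factory=dict)


def nearby_blocks(world: WorldSnapshot) -> str:
    # Deduplicate so the observation stays short even in a block-dense area.
    return "NEARBY_BLOCKS: " + ", ".join(sorted(set(world.nearby_blocks)))


def craftable(world: WorldSnapshot) -> str:
    # List items whose ingredients are all present in the inventory.
    items = [
        item for item, needs in sorted(RECIPES.items())
        if all(world.inventory.get(ing, 0) >= n for ing, n in needs.items())
    ]
    return "CRAFTABLE_ITEMS: " + (", ".join(items) if items else "none")


# Each query command maps to exactly one observation function.
QUERIES: Dict[str, Callable[[WorldSnapshot], str]] = {
    "!nearbyBlocks": nearby_blocks,
    "!craftable": craftable,
}


def run_query(command: str, world: WorldSnapshot) -> str:
    """Return only the observation the agent asked for."""
    handler = QUERIES.get(command)
    return handler(world) if handler else f"Unknown query: {command}"


world = WorldSnapshot(["dirt", "stone", "dirt"], {"oak_log": 1, "oak_planks": 2})
print(run_query("!craftable", world))
```

Because the agent pulls one observation at a time, the prompt never has to carry a full dump of the world, which is the context-length benefit the paragraph describes.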

While embodied LLM agents often use visual inputs, MINDcraft primarily uses textual observations. Initial tests indicate that vision inputs don't dramatically affect performance, aligning with prior work showing that thorough textual observations often outperform visual inputs for complex reasoning tasks.

The action space in MINDcraft bridges low-level actions (like "jump" or "look up") and the programmatic Mineflayer API by providing 47 parameterized tools directly invokable by LLMs. For example, instead of generating complex code to give items to another player, an agent can simply use !givePlayer("randy", "oak log", 4). This abstraction allows LLMs to focus on higher-level sequential reasoning.
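To make the parameterized-tool idea concrete, here is a minimal sketch of how a command string such as !givePlayer("randy", "oak log", 4) could be parsed and dispatched. The regular expression, the `parse_command`/`execute` helpers, and the `give_player` stub are all hypothetical illustrations, not MINDcraft's actual parser; a real tool would call into the Mineflayer bot instead of returning a string.

```python
import ast
import re
from typing import Callable, Dict, Tuple

# "!name" optionally followed by a parenthesised argument list at the end of the string.
COMMAND_RE = re.compile(r"!(\w+)(?:\((.*)\))?\s*$")


def parse_command(text: str) -> Tuple[str, tuple]:
    match = COMMAND_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a command: {text!r}")
    name, arg_text = match.group(1), match.group(2)
    if not arg_text or not arg_text.strip():
        return name, ()
    # literal_eval only accepts Python literals, so arbitrary code cannot run;
    # the trailing comma makes a single argument parse as a 1-tuple.
    return name, ast.literal_eval("(" + arg_text + ",)")


def give_player(player: str, item: str, count: int) -> str:
    # Hypothetical stub: a real tool would drive the Mineflayer bot here.
    return f"Gave {count} {item} to {player}."


# Hypothetical tool table: command name -> Python function.
TOOLS: Dict[str, Callable[..., str]] = {"givePlayer": give_player}


def execute(text: str) -> str:
    name, args = parse_command(text)
    tool = TOOLS.get(name)
    if tool is None:
        return f"Unknown command: !{name}"
    return tool(*args)


print(execute('!givePlayer("randy", "oak log", 4)'))
```

The LLM only has to emit one short, typed call; all the low-level movement and inventory handling lives behind the tool, which is what lets the model concentrate on sequencing.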

Agent Architecture

The MINDcraft architecture includes four main components:

- A server for launching and managing agents

- The main agent loop for handling messages from players and other agents

- A library of high-level action commands and observation queries

- A layer for prompting and calling arbitrary language models
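How these components fit together can be sketched as a minimal tool-calling loop: the model layer produces a reply, any command in it is executed, and the result is appended to the history for the next model call. This is a hypothetical illustration, not MINDcraft's real agent loop; the `llm` and `run_command` callables, the message format, and the `max_steps` cap are assumptions.

```python
import re
from typing import Callable, Dict, List

Message = Dict[str, str]
# A command is "!" + letters, optionally followed by "(...)" arguments.
COMMAND_RE = re.compile(r"![A-Za-z]+(?:\([^)]*\))?")


def agent_loop(user_message: str,
               llm: Callable[[List[Message]], str],
               run_command: Callable[[str], str],
               max_steps: int = 5) -> List[Message]:
    history: List[Message] = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = llm(history)  # the language-model layer
        history.append({"role": "assistant", "content": reply})
        match = COMMAND_RE.search(reply)
        if match is None:
            break  # plain chat reply: nothing to execute, wait for the next message
        # Feed the command result back so the model can plan its next step.
        history.append({"role": "system", "content": run_command(match.group(0))})
    return history


# Scripted stand-ins for a real model and a real command library.
replies = iter(["Let me check. !nearbyBlocks", "I see oak logs nearby, heading there."])
history = agent_loop("Get some wood", lambda h: next(replies), lambda cmd: f"result of {cmd}")
```

Keeping the model call and the command execution behind plain callables is what allows arbitrary language models to be swapped in without touching the action library.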

The platform supports agents with a well-designed action library and few-shot examples. It also implements an embodied Retrieval Augmented Generation (RAG) system that retrieves relevant examples by embedding similarity to the current conversation, improving LLM performance through in-context learning.
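The retrieval step amounts to ranking stored examples by similarity to the current conversation and prepending the top few to the prompt. The sketch below assumes a toy bag-of-words vector in place of a learned embedding model, and the `select_examples` helper and example strings are hypothetical; only the idea of cosine-similarity retrieval reflects the text.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a real system would call an embedding model instead.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_examples(conversation: str, examples: List[str], k: int = 2) -> List[str]:
    """Return the k stored examples most similar to the current conversation."""
    query = embed(conversation)
    return sorted(examples, key=lambda ex: cosine(query, embed(ex)), reverse=True)[:k]


examples = [
    "Example: collect oak logs and craft planks",
    "Example: give bread to a teammate",
    "Example: build a stone wall",
]
print(select_examples("Can you give me some bread?", examples, k=1))
```

Swapping `embed` for a real embedding model changes retrieval quality but not the structure of the lookup.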

This approach to efficient LLM grounding allows researchers to evaluate LLMs of varying quality while focusing on collaborative capabilities rather than low-level implementation details.

Multi-agent Collaboration

Multi-agent collaboration in MINDcraft is enabled by a conversation manager. Agents can initiate or end conversations using commands like !startConversation and !endConversation. When an agent receives a message, it can choose to respond immediately, ignore it, take another action, or speak to another agent.

The system supports pairwise communication, where only two agents can converse at once. However, this framework scales to three or more agents by allowing them to transition between active conversations. The conversation manager also helps pace agent responses, slowing or pausing conversations when agents are executing actions in the environment.
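The pairwise constraint can be modeled as a partner map in which each agent appears in at most one conversation. In this minimal sketch, a third agent joins by waiting until its target ends the current conversation and then starting a new one. The class and method names are hypothetical (they loosely mirror !startConversation and !endConversation), and the pacing behavior that slows chat during actions is not modeled.

```python
from typing import Dict, Optional


class ConversationManager:
    """Hypothetical sketch: each agent is in at most one two-party conversation."""

    def __init__(self) -> None:
        self.partner: Dict[str, str] = {}

    def start(self, agent: str, other: str) -> bool:
        # Refuse if either side is already talking to someone else.
        if agent == other or agent in self.partner or other in self.partner:
            return False
        self.partner[agent] = other
        self.partner[other] = agent
        return True

    def end(self, agent: str) -> None:
        # Ending a conversation frees both participants.
        other = self.partner.pop(agent, None)
        if other is not None:
            self.partner.pop(other, None)

    def current_partner(self, agent: str) -> Optional[str]:
        return self.partner.get(agent)


manager = ConversationManager()
manager.start("andy", "bob")
blocked = manager.start("carol", "andy")  # andy is busy with bob
manager.end("bob")
joined = manager.start("carol", "andy")   # andy has moved on to carol
```

With three or more agents, coordination therefore happens as a sequence of two-party exchanges rather than a group chat.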

This conversation architecture enables flexible communication patterns while ensuring that agents can coordinate effectively on complex tasks.

Additional Features

The researchers note that MINDcraft existed as popular open-source software before becoming the focus of this research. The platform offers several notable features beyond those used in the paper:

- Agents can use coding tools to build freeform structures, displaying model creativity

- Open-ended play capabilities for studying emergent behaviors

- Support for large groups of agents with varying motivations

- Vision tools (in development) for visual reasoning

These features provide exciting avenues for future research in open-endedness, social simulation, and multi-modal reasoning.

MineCollab: Testing Multi-Agent Collaboration in Minecraft

MineCollab is a benchmark built on MINDcraft that focuses on three practical domains: cooking, crafting, and construction. These domains reflect real-world scenarios and pose substantial challenges requiring long-horizon action sequences, effective environmental interaction, and coordination between agents under resource and time constraints.

| Task | Train Tasks | Test Tasks | Trials | Successful Trials | Transitions | Avg. Trajectory Length |
|---|---|---|---|---|---|---|
| Cooking | 280 | 90 | 635 | 103 | 3,975 | 29.7 |
| Crafting | 1,200 | 100 | 1,645 | 158 | 3,565 | 19.2 |
| Construction | 2,000 | 30 | 211 | 52 | 9,228 | 111.5 |

Summary of train and test task sets showing the number of trials, successful completions, and average trajectory lengths across different task types.

As shown in the table, construction tasks have the longest average trajectory length (111.5 steps), making them particularly challenging for current LLMs. The researchers collected between 200 and 2,000 trials with LLaMA-3.3-70B-Instruct for each task type and filtered them down to the successful runs to ensure high-quality training data.
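The success-filtering step can be sketched in a few lines. This is a minimal illustration with made-up trials; the `Trial` record and its fields are hypothetical and are not the authors' data format.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Trial:
    task: str
    success: bool
    steps: int  # length of the recorded trajectory


def filter_successful(trials: List[Trial]) -> Tuple[List[Trial], float]:
    """Keep only successful runs and report their average trajectory length."""
    kept = [t for t in trials if t.success]
    avg_len = sum(t.steps for t in kept) / len(kept) if kept else 0.0
    return kept, avg_len


# Made-up trials for illustration only; these are not the paper's numbers.
trials = [Trial("cooking", True, 30), Trial("cooking", False, 80), Trial("cooking", True, 20)]
kept, avg_len = filter_successful(trials)
```

Discarding failed runs trades dataset size for quality: only trajectories that actually completed the task are used as training targets.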

Cooking Tasks

In cooking tasks, agents start with a goal to prepare meals like cakes and bread. They must coordinate ingredient collection and combine them following multi-step plans. Agents are placed in a "cooking world" with all necessary resources—livestock, crops, cooking equipment, etc.

The researchers also implemented a "Hell's Kitchen" variant where each agent receives only a subset of required recipes, forcing them to communicate recipe instructions to teammates. This tests the agents' ability to effectively share procedural knowledge.

In collaborative problem-solving environments, this communication of partial information is critical for success. Agents are evaluated on whether they successfully complete all recipe requirements.
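A "Hell's Kitchen"-style setup and its all-or-nothing success check might look like the sketch below. The round-robin recipe split, the simplified recipe steps, and both helper functions are assumptions for illustration; the paper does not specify how recipes are distributed. The ingredient lists follow vanilla Minecraft (bread from 3 wheat; cake from 3 milk buckets, 2 sugar, 1 egg, and 3 wheat).

```python
from typing import Dict, List

Recipes = Dict[str, List[str]]

# Simplified, hand-written recipe steps for illustration.
EXAMPLE_RECIPES: Recipes = {
    "bread": ["collect 3 wheat", "craft bread"],
    "cake": ["collect 3 milk buckets, 2 sugar, 1 egg, 3 wheat", "craft cake"],
}


def split_recipes(recipes: Recipes, agents: List[str]) -> Dict[str, Recipes]:
    """Deal recipes round-robin so each agent knows only part of the plan."""
    known: Dict[str, Recipes] = {agent: {} for agent in agents}
    for i, (dish, steps) in enumerate(sorted(recipes.items())):
        known[agents[i % len(agents)]][dish] = steps
    return known


def task_complete(targets: Dict[str, int], delivered: Dict[str, int]) -> bool:
    """Success only if every required item is present in the required quantity."""
    return all(delivered.get(item, 0) >= count for item, count in targets.items())


known = split_recipes(EXAMPLE_RECIPES, ["andy", "bob"])
```

Here andy knows only how to make bread and bob only cake, so a target of one of each cannot be met unless the agents explain their recipes to each other or divide the work.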

Crafting Tasks

Crafting tasks involve creating items like clothing, furniture, and tools—covering the entire breadth of craftable items in Minecraft. At the beginning of each episode, agents start with different resources and may have limited access to crafting recipes.

To complete the task, agents must:

- Communicate what items are in their inventory

- Share crafting recipes if necessary

- Exchange resources to successfully craft the target item

More challenging variants involve longer crafting objectives that require multiple steps, such as crafting a compass which requires several intermediate components.
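Multi-step crafting objectives form a dependency tree, and working out what must be gathered or requested from teammates is a recursive expansion of that tree. The sketch below uses a short wooden-pickaxe chain instead of the compass for brevity, with vanilla recipes (1 log gives 4 planks, 2 planks give 4 sticks, 3 planks plus 2 sticks give a pickaxe). The recipe table and the `expand`/`plan` helpers are hypothetical illustrations, not MINDcraft's recipe data or API.

```python
import math
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical, hand-written table: item -> (units produced per craft, {ingredient: count per craft}).
RECIPES: Dict[str, Tuple[int, Dict[str, int]]] = {
    "oak_planks": (4, {"oak_log": 1}),
    "stick": (4, {"oak_planks": 2}),
    "wooden_pickaxe": (1, {"oak_planks": 3, "stick": 2}),
}


def expand(item: str, count: int, inventory: Dict[str, int],
           raw_needed: Dict[str, int], steps: List[Tuple[str, int]]) -> None:
    """Consume `count` of `item`, crafting recursively when the inventory falls short."""
    use = min(inventory[item], count)
    inventory[item] -= use
    missing = count - use
    if missing == 0:
        return
    if item not in RECIPES:
        raw_needed[item] += missing  # base resource: gather it or ask a teammate
        return
    per_craft, ingredients = RECIPES[item]
    crafts = math.ceil(missing / per_craft)
    for ingredient, qty in ingredients.items():
        expand(ingredient, qty * crafts, inventory, raw_needed, steps)
    steps.append((item, crafts))
    inventory[item] += crafts * per_craft - missing  # keep the surplus for later steps


def plan(target: str, count: int = 1,
         inventory: Optional[Dict[str, int]] = None) -> Tuple[Dict[str, int], List[Tuple[str, int]]]:
    inv: Dict[str, int] = defaultdict(int, inventory or {})
    raw: Dict[str, int] = defaultdict(int)
    steps: List[Tuple[str, int]] = []
    expand(target, count, inv, raw, steps)
    return dict(raw), steps


raw, steps = plan("wooden_pickaxe")
```

Tracking surplus matters: the first planks craft yields 4 but only 3 are used, so the leftover plank goes toward the sticks, and the plan needs 2 oak logs in total. An agent holding only some of these items would have to ask teammates for the rest.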

Construction Tasks

In construction tasks, agents must build structures from procedurally generated blueprints. These blueprints can vary in complexity based on the number of rooms or unique materials required, allowing fine-grained control over task difficulty.

Agents begin each episode with different materials and capabilities, necessitating resource sharing and coordination. For example, if a blueprint requires a stone base and wooden roof, one agent might have access to stone and the other to wood.

Evaluation uses an edit distance-based metric that measures how closely the constructed building matches the blueprint. This approach provides a continuous measure of performance rather than a binary success/failure assessment.
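One way such a metric could work is to count the single-block edits (place, remove, or swap) needed to turn the built structure into the blueprint, then normalize by the number of non-air blueprint blocks. This is a minimal sketch under stated assumptions: the coordinate-dictionary representation, the normalization, and the clipping to zero are hypothetical, and the paper's exact formula may differ.

```python
from typing import Dict, Tuple

Pos = Tuple[int, int, int]


def block_edit_distance(blueprint: Dict[Pos, str], built: Dict[Pos, str]) -> int:
    """Cells whose block differs; each needs exactly one place, remove, or swap."""
    cells = set(blueprint) | set(built)
    return sum(blueprint.get(p, "air") != built.get(p, "air") for p in cells)


def construction_score(blueprint: Dict[Pos, str], built: Dict[Pos, str]) -> float:
    """1.0 for a perfect build, falling toward 0.0 as edits accumulate."""
    distance = block_edit_distance(blueprint, built)
    target = sum(1 for block in blueprint.values() if block != "air")
    if target == 0:
        return 1.0 if distance == 0 else 0.0
    return max(0.0, 1.0 - distance / target)


blueprint = {(0, 0, 0): "stone", (1, 0, 0): "stone", (0, 1, 0): "oak_planks", (1, 1, 0): "oak_planks"}
built = {(0, 0, 0): "stone", (1, 0, 0): "stone", (0, 1, 0): "oak_planks", (2, 0, 0): "dirt"}
score = construction_score(blueprint, built)
```

In this example one roof block is missing and one stray block was placed, giving 2 edits against 4 target blocks and a score of 0.5. A partially correct build thus earns partial credit instead of a flat failure.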

Experimental Results: How Well Do Current LLMs Collaborate?

The researchers compared the performance of state-of-the-art open and closed-weight LLMs on MineCollab, systematically varying task complexity along two dimensions: embodied reasoning and collaborative communication.

Impact of Embodied Task Complexity

Current agents perform better at cooking tasks than at crafting or construction, even though cooking and crafting trajectories have comparable lengths. This suggests that pre-training data may better prepare LLMs for cooking-related tasks.

Performance across different numbers of unique materials required for construction tasks.

Performance across different numbers of rooms in construction tasks.

The construction task suite serves as a case study for varying embodied complexity. As shown in the figures above, increasing either the number of unique materials or the number of rooms in a blueprint decreases performance for most models. Claude 3.5 Sonnet maintains relatively consistent performance (though still below a 40% success rate), while other models show steeper declines.

Qualitative analysis revealed that agents often undo each other's work as task complexity increases, especially when they need to remember more information over longer horizons.

Communication Challenges in Multi-Agent Coordination

All tested tasks require at least two agents working together. Theoretically, more agents should achieve higher success rates with lower exploration costs per agent. However, the experiments show the opposite:

Performance drop when increasing the number of agents in cooking tasks.

Performance drop when increasing the number of agents in crafting tasks.

Performance drops dramatically, from up to 90% success with two agents to less than 30% with five agents. While each agent has fewer actions to perform as the team grows, the coordination load increases substantially. For example, four agents making different food items must coordinate to avoid redundant work, manage shared resources, and take turns using cooking equipment.
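A rough way to see why coordination load grows: under the pairwise conversation model described earlier, the number of distinct agent pairs that may need to exchange information grows quadratically with team size $n$. This is an illustrative count, not an analysis from the paper.

$$
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad \binom{2}{2} = 1, \quad \binom{4}{2} = 6, \quad \binom{5}{2} = 10
$$

Going from two to five agents therefore raises the number of possible two-party channels from 1 to 10, while each agent can still hold only one conversation at a time.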

The research also found that requiring agents to communicate detailed step-by-step plans further decreases performance:

Impact of requiring detailed plan communication in cooking tasks.

Impact of requiring detailed plan communication in crafting tasks.

This was tested by blocking access to complete crafting plans, forcing agents to communicate complex step-by-step instructions. A similar effect appears in the "Hell's Kitchen" cooking variant. Examples of failures included agents not asking for plans when needed or failing to properly execute plans that were communicated to them.

Conclusions and Future Directions

As LLM agent capabilities continue evolving, measuring their capacity for effective collaboration with both humans and other LLM systems becomes increasingly important. The MINDcraft platform and MineCollab benchmark represent significant progress toward developing agents that can communicate and coordinate actions in complex embodied environments.

The experimental results highlight that current state-of-the-art agents struggle with both embodied reasoning and communication in collaborative settings. These findings underscore the limitations of standard techniques like prompting and fine-tuning, pointing to the need for more advanced methods to create truly collaborative AI systems.

Future work in this area could focus on:

- Developing better communication protocols between agents

- Creating models specifically trained for collaborative tasks

- Improving agents' ability to maintain consistent goals during long-horizon tasks

- Enhancing coordination capabilities with larger numbers of agents

By addressing these challenges, researchers can move closer to creating AI systems that truly augment human capabilities through effective collaboration rather than simply attempting to replace human inputs.
