AI Song Gen: VersBand Creates Complete Songs With Style Control & Vocal Alignment

This is a Plain English Papers summary of a research paper called AI Song Gen: VersBand Creates Complete Songs With Style Control & Vocal Alignment.

Introduction: A Multi-Task Framework for Song Generation

Song generation is a complex challenge within AI music creation: producing complete musical pieces with both vocals and accompaniments that align properly. Current methods struggle with three main limitations: generating high-quality vocals with style control, creating controllable and aligned accompaniments, and supporting diverse generation tasks from various prompts.

The research introduces VersBand, a versatile multi-task song generation framework that addresses these challenges. This system can synthesize high-quality, aligned songs with extensive prompt-based control, allowing users to specify lyrics, melody, singing styles, and music genres through natural language.

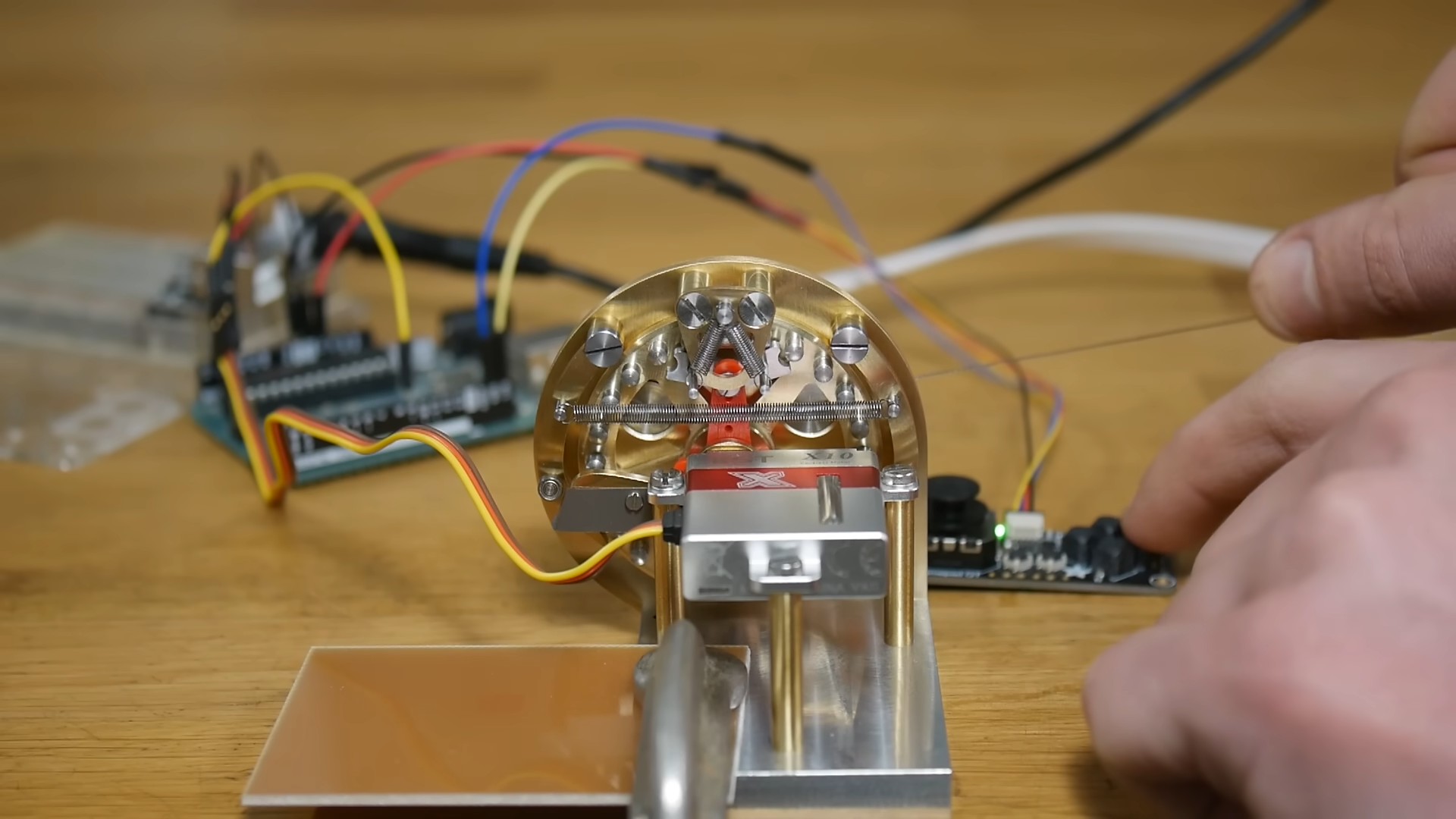

Overview of VersBand, which generates complete songs like a versatile band. The system accepts various optional inputs, allowing users to provide as little as "The task is to generate a song."

Unlike previous approaches like Text-to-Song, VersBand offers comprehensive control over all aspects of song creation through an innovative architecture that generates vocals and accompaniments separately, ensuring high quality and proper alignment between components.

Background: Understanding Song Generation

Singing Voice Synthesis

Recent advances in Singing Voice Synthesis (SVS) have improved vocal generation quality and control. Models like VISinger 2 enhance fidelity using digital signal processing, while StyleSinger enables style transfer through residual quantization methods. PromptSinger attempts to control speaker identity based on text descriptions.

Despite these advances, current SVS models struggle with two key limitations: they cannot generate aligned accompaniments, and they lack mechanisms for high-level style control through natural language or audio prompts. While Melodist can generate vocals and accompaniments sequentially, and models like TCSinger offer some technique control, they fall short in producing vocals with comprehensive style control.

Accompaniment Generation

Accompaniment generation research typically focuses on musical symbolic tokens. Approaches range from GAN-based systems like MuseGAN for symbolic music generation to transformer-based models like MusicLM that leverage joint textual-music representations.

Recent systems like SongCreator can create songs based on lyrics and audio prompts, while Sing Your Beat offers text-controllable accompaniment generation. MelodyLM employs transformer and diffusion models for decoupled song generation.

However, these methods still face significant challenges in generating high-quality music with effective prompt-based control. They also lack robust mechanisms for aligning accompaniments with vocals and supporting diverse song generation tasks.

Method: The VersBand Architecture

VersBand comprises four main components: VocalBand for vocals, AccompBand for accompaniments, LyricBand for lyrics, and MelodyBand for melodies. This architecture enables multi-task song generation with comprehensive control.

The overall architecture of VersBand showing how vocals and accompaniments are generated separately through dedicated components.

Multi-Task Song Generation

The process begins with a text encoder that generates text tokens from user prompts. When lyrics or music scores aren't provided, LyricBand and MelodyBand generate phonemes and notes automatically. VocalBand then decouples content, timbre, and style to generate high-quality vocals with granular control. AccompBand uses a flow-based transformer with specialized experts to create aligned, controllable accompaniments.

This modular design allows VersBand to handle various generation scenarios, from complete song creation to style transfer tasks, all while maintaining high quality and alignment between components.

VocalBand: High-Quality Vocal Generation with Style Control

VocalBand decomposes vocals into three distinct representations: content (phonemes and notes), style (singing methods, emotion, techniques), and timbre (singer identity). This decomposition enables fine-grained control over each aspect.

The architecture of Flow-based Style Predictor and Band-MOE, showing the key components that enable style control and expert selection.

The Flow-based Style Predictor uses content, timbre, prompt style, and text tokens to predict the target style. This approach models styles as smooth transformations, effectively balancing multiple control inputs for natural, consistent style generation. The style is then passed to the Flow-based Pitch Predictor and Mel Decoder to generate the final vocal.
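The idea of modeling style as a smooth transformation can be illustrated with a minimal flow-matching sketch. The summary does not say which flow-matching variant VocalBand uses, so this assumes the common straight-line path between a noise sample and the target; all function names are hypothetical, and the "vector field" here is an oracle standing in for a trained network conditioned on content, timbre, and text tokens.

```python
from typing import Callable, List

Vector = List[float]


def interpolate(x0: Vector, x1: Vector, t: float) -> Vector:
    """Point on the straight path from noise x0 (t=0) to target x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0: Vector, x1: Vector) -> Vector:
    """Velocity of the straight path; this is the regression target."""
    return [b - a for a, b in zip(x0, x1)]


def flow_matching_loss(predicted: Vector, x0: Vector, x1: Vector) -> float:
    """Mean squared error between a predicted velocity and the target velocity."""
    target = target_velocity(x0, x1)
    return sum((p - v) ** 2 for p, v in zip(predicted, target)) / len(target)


def euler_sample(vector_field: Callable[[Vector, float], Vector],
                 x0: Vector, steps: int = 10) -> Vector:
    """Integrate dx/dt = vector_field(x, t) from t=0 to t=1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        v = vector_field(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Toy usage: an oracle field that already knows the target style vector.
noise = [0.0, 1.0]
style = [2.0, -1.0]
oracle = lambda x, t: target_velocity(noise, style)
sampled = euler_sample(oracle, noise, steps=4)
```

In a real system the oracle would be replaced by a learned estimator trained to minimize `flow_matching_loss` at randomly drawn values of `t`.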

The architecture of two key VocalBand components: (a) the residual style encoder and (b) the vector field estimator of the Flow-based Mel Decoder.

AccompBand: Aligned and Controllable Accompaniments

AccompBand addresses the complex nature of accompaniment generation using a flow-based transformer model. The Band Transformer Blocks leverage self-attention mechanisms to learn vocal-matching style, rhythm, and melody, while adaptive layer normalization ensures style consistency.
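Adaptive layer normalization can be sketched in a few lines. This is an illustration only: it assumes the widely used form in which a conditioning signal supplies a per-feature scale and shift applied after normalization, which may differ from AccompBand's exact formulation. The `scale` and `shift` arguments are hypothetical stand-ins for values a network would regress from the style embedding.

```python
import math
from typing import List


def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """Normalize a feature vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def adaptive_layer_norm(x: List[float], scale: List[float],
                        shift: List[float]) -> List[float]:
    """Layer norm whose scale and shift come from a conditioning signal.

    With scale = 0 and shift = 0 this reduces to plain layer normalization.
    """
    normed = layer_norm(x)
    return [n * (1.0 + s) + b for n, s, b in zip(normed, scale, shift)]


# Toy usage: the same features modulated by two different (made-up) style signals.
features = [1.0, 2.0, 3.0]
neutral = adaptive_layer_norm(features, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
shifted = adaptive_layer_norm(features, [0.0, 0.0, 0.0], [1.0, 1.0, 1.0])
```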

A key innovation is the Band-MOE (Mixture of Experts), which contains three expert groups:

- Aligned MOE: Selects experts based on vocal features for alignment

- Controlled MOE: Uses text prompts for fine-grained style control

- Acoustic MOE: Selects experts for different acoustic frequency dimensions

This approach enables AccompBand to generate high-quality accompaniments that align properly with vocals while responding to style control from text prompts. The model also implements classifier-free guidance to further enhance style control during inference.
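As a rough illustration of the two mechanisms named above, the sketch below shows soft expert routing and the standard classifier-free guidance combination. It is not the paper's implementation: the summary does not say whether Band-MOE uses soft or top-k routing, and the function names and toy numbers are assumptions. In Band-MOE terms, the routing scores would be derived from vocal features (Aligned MOE), text prompts (Controlled MOE), or the frequency dimension (Acoustic MOE).

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over routing scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(routing_scores: List[float],
          expert_outputs: List[List[float]]) -> List[float]:
    """Mix expert outputs using softmax weights from the routing scores."""
    weights = softmax(routing_scores)
    dim = len(expert_outputs[0])
    return [sum(w * out[d] for w, out in zip(weights, expert_outputs))
            for d in range(dim)]


def classifier_free_guidance(cond: List[float], uncond: List[float],
                             scale: float) -> List[float]:
    """Push the conditional prediction away from the unconditional one.

    scale = 1 returns the conditional prediction unchanged; larger values
    strengthen the influence of the prompt.
    """
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


# Toy usage with two experts and equal routing scores.
mixed = route([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
guided = classifier_free_guidance([1.0, 0.5], [0.0, 0.5], scale=2.0)
```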

LyricBand and MelodyBand: Enhancing Generation Capabilities

LyricBand generates complete lyrics based on text prompts, allowing users to specify theme, emotion, genre, and other parameters. It leverages QLoRA for efficient fine-tuning of the Qwen-7B language model, enabling high-quality, customized lyric generation.
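To see why low-rank adapters make fine-tuning cheaper, the arithmetic below compares trainable parameter counts for a single weight matrix. The dimensions and rank are hypothetical and not taken from the paper. QLoRA additionally keeps the frozen base weights in 4-bit precision, which this sketch does not model.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in).
    """
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)
low_rank = lora_params(d_out, d_in, rank)
reduction = full // low_rank
```

With these illustrative values the adapter trains 65,536 parameters instead of 16,777,216 for that matrix, a 256-fold reduction.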

The architecture of MelodyBand showing how it generates musical notes from text descriptions and other inputs.

MelodyBand generates musical notes based on text prompts, lyrics, and optional vocal prompts. Using a non-autoregressive transformer model, it enables fast, high-quality melody generation with control over key, tempo, vocal range, and other musical attributes.

Training and Inference

The different components of VersBand are trained separately with specific loss functions. During inference, the system can handle various scenarios:

- Without input lyrics or music scores, LyricBand and MelodyBand generate these components

- For song generation, VocalBand and AccompBand create vocals and accompaniments based on content and style controls

- For style transfer tasks, the system can maintain content while transferring style from vocal or accompaniment prompts

This flexibility allows VersBand to support multiple song generation tasks with a single framework.
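The inference scenarios above amount to a small dispatch rule. The sketch below is a hypothetical planner, not code from the paper: the function name and arguments are assumptions, and it treats missing lyrics and missing scores independently, which the summary implies but does not state explicitly.

```python
from typing import List, Optional


def plan_generation(lyrics: Optional[str] = None,
                    score: Optional[str] = None) -> List[str]:
    """Return the sub-models to run, in order, for the given inputs.

    lyrics: user-provided lyrics, or None to have LyricBand write them.
    score:  user-provided music score, or None to have MelodyBand compose it.
    """
    steps: List[str] = []
    if lyrics is None:
        steps.append("LyricBand")
    if score is None:
        steps.append("MelodyBand")
    # Vocals are generated first so the accompaniment can be aligned to them.
    steps.append("VocalBand")
    steps.append("AccompBand")
    return steps


# Only a text prompt is given: all four components run.
minimal = plan_generation()
# Lyrics and score are both provided: only the two audio generators run.
full_inputs = plan_generation(lyrics="la la la", score="C4 D4 E4")
```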

Experimental Results

Evaluation Setup

VersBand was trained on a combination of bilingual web-crawled and open-source song datasets, totaling about 1,000 hours of song data and 1,150 hours of vocal data. For accompaniment generation, the system used a filtered subset of LP-MusicCaps-MSD, providing about 1,200 hours of accompaniment data.

| Dataset | Type | Languages | Annotation | Duration (hours) |
|---|---|---|---|---|
| GTSinger (Zhang et al., 2024c) | vocal | Chinese & English | lyrics, notes, styles | 29.6 |
| M4Singer (Zhang et al., 2022a) | vocal | Chinese | lyrics, notes | 29.8 |
| OpenSinger (Huang et al., 2021) | vocal | Chinese | lyrics | 83.5 |
| LP-MusicCaps-MSD (Doh et al., 2023) | accomp | / | text prompt | 213.6 |
| web-crawled | song | Chinese & English | / | 979.4 |

Table 9: Statistics of training datasets used in VersBand development.

The evaluation employed both objective metrics (like FAD, KLD, CLAP score) and subjective assessments (human ratings of quality, relevance, and alignment). The system was compared against several baseline models, including Qwen-7B for lyrics, SongMASS and MelodyLM for melodies, and VISinger2, StyleSinger, Melodist, and MelodyLM for vocals and songs.

Lyric and Melody Generation

LyricBand demonstrated superior performance in lyric generation compared to the original Qwen-7B model, as shown in the evaluation results:

| Methods | OVL ↑ | REL ↑ |
|---|---|---|
| GT | 92.31±1.29 | 84.07±1.63 |
| Qwen-7B | 74.35±1.37 | 80.66±0.92 |
| LyricBand | 79.68±1.05 | 82.01±1.13 |

Table 3: Results of lyric generation showing overall quality (OVL) and relevance to the prompt (REL).
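Subjective scores in these tables are reported as a mean with a ± margin. The summary does not say how the margin is computed; a common convention is a 95% confidence interval over listener ratings, which the sketch below assumes (using a normal approximation). The ratings are made up for illustration.

```python
import math
import statistics
from typing import List, Tuple


def mean_with_ci(ratings: List[float], z: float = 1.96) -> Tuple[float, float]:
    """Return (mean, half-width) of an approximate 95% confidence interval."""
    mean = statistics.mean(ratings)
    if len(ratings) < 2:
        # A single rating gives no estimate of spread.
        return mean, 0.0
    sd = statistics.stdev(ratings)  # sample standard deviation
    return mean, z * sd / math.sqrt(len(ratings))


# Made-up listener ratings on a 0-100 scale.
ratings = [80.0, 85.0, 78.0, 82.0, 75.0]
mean, half_width = mean_with_ci(ratings)
print(f"{mean:.2f}±{half_width:.2f}")
```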

For melody generation, MelodyBand outperformed baseline models on most metrics:

| Methods | KA(%) ↑ | APD ↓ | TD ↓ | PD(%) ↑ | DD(%) ↑ | MD ↓ |
|---|---|---|---|---|---|---|
| SongMASS | 58.9 | 3.78 | 2.93 | 55.4 | 68.1 | 3.41 |
| MelodyLM | 76.6 | 2.05 | 2.29 | 62.8 | 40.8 | 3.62 |
| MelodyBand | 72.7 | 1.74 | 1.65 | 65.8 | 70.5 | 3.12 |

Table 1: Results of melody generation across various metrics including key accuracy, pitch difference, and duration similarity.

Although MelodyLM achieved slightly higher key accuracy, MelodyBand performed better on other metrics and offers faster generation due to its non-autoregressive architecture.

Vocal Generation

VocalBand demonstrated superior performance in both zero-shot vocal generation and singing style transfer compared to baseline models:

| Methods | Vocal Generation: MOS-Q ↑ | Vocal Generation: MOS-C ↑ | Vocal Generation: FFE ↓ | Style Transfer: MOS-Q ↑ | Style Transfer: MOS-C ↑ | Style Transfer: MOS-S ↑ | Style Transfer: Cos ↑ |
|---|---|---|---|---|---|---|---|
| GT | 4.34±0.09 | - | - | 4.35±0.06 | - | - | - |
| Melodist | 3.83±0.09 | - | 0.12 | - | - | - | - |
| MelodyLM | 3.88±0.10 | - | 0.08 | 3.76±0.12 | - | 3.81±0.12 | - |
| VISinger2 | 3.62±0.07 | 3.63±0.09 | 0.16 | 3.55±0.11 | 3.57±0.05 | 3.70±0.08 | 0.82 |
| StyleSinger | 3.90±0.08 | 3.96±0.05 | 0.08 | 3.87±0.06 | 3.86±0.09 | 4.05±0.05 | 0.89 |
| VocalBand | 4.04±0.08 | 4.02±0.07 | 0.07 | 3.96±0.10 | 3.95±0.06 | 4.12±0.04 | 0.90 |

Table 2: Results of vocal generation and singing style transfer showing VocalBand's superior performance in quality, controllability, and style transfer capabilities.

VocalBand achieved the best results across all metrics, including quality (MOS-Q), controllability (MOS-C), F0 Frame Error (FFE, where lower is better), singer similarity (MOS-S), and cosine similarity (Cos). This demonstrates the effectiveness of the Flow-based Style Predictor for style control and transfer, as well as the high quality provided by the Flow-based Pitch Predictor and Mel Decoder.
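For readers unfamiliar with FFE, the sketch below follows the conventional definition: the fraction of frames with either a voicing mismatch or a pitch deviation above 20% of the reference. The summary does not give the paper's exact evaluation setup, so the threshold and the use of 0 to mark unvoiced frames are assumptions.

```python
from typing import Sequence


def f0_frame_error(ref_f0: Sequence[float], pred_f0: Sequence[float],
                   tolerance: float = 0.2) -> float:
    """Fraction of frames with a voicing error or a gross pitch error.

    A value of 0 marks an unvoiced frame. A voiced frame counts as a gross
    pitch error when the prediction deviates from the reference by more
    than `tolerance` (relative to the reference).
    """
    if len(ref_f0) != len(pred_f0):
        raise ValueError("f0 tracks must have the same number of frames")
    if not ref_f0:
        raise ValueError("f0 tracks must not be empty")
    errors = 0
    for ref, pred in zip(ref_f0, pred_f0):
        ref_voiced = ref > 0
        pred_voiced = pred > 0
        if ref_voiced != pred_voiced:
            errors += 1  # voicing decision error
        elif ref_voiced and abs(pred - ref) > tolerance * ref:
            errors += 1  # gross pitch error
    return errors / len(ref_f0)


# Toy tracks in Hz: frame 2 is a pitch error, frame 4 is a voicing error.
reference = [100.0, 100.0, 0.0, 0.0]
predicted = [100.0, 130.0, 0.0, 50.0]
ffe = f0_frame_error(reference, predicted)
```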

Complete Song Generation

For complete song generation, VersBand outperformed baseline models across all metrics:

| Methods | FAD ↓ | KLD ↓ | CLAP ↑ | OVL ↑ | REL ↑ | ALI ↑ |
|---|---|---|---|---|---|---|
| Melodist | 3.81 | 1.34 | 0.39 | 84.12±1.54 | 85.97±1.51 | 74.86±1.13 |
| MelodyLM | 3.42 | 1.35 | 0.35 | 85.23±1.62 | 86.44±0.90 | 75.41±1.34 |
| VersBand (w/o lyrics) | 3.37 | 1.30 | 0.50 | 86.65±0.91 | 85.98±1.08 | 77.02±1.33 |
| VersBand (w/o scores) | 3.38 | 1.31 | 0.48 | 85.12±0.77 | 85.15±1.22 | 75.91±1.62 |
| VersBand (w/o prompts) | 3.55 | 1.35 | - | 83.49±1.20 | - | 74.87±1.68 |
| VersBand (w/ full) | 3.01 | 1.27 | 0.58 | 87.92±1.73 | 88.03±0.59 | 80.51±1.66 |

Table 4: Results of song generation showing VersBand's performance with different input configurations compared to baseline models.

Even with limited inputs (without lyrics, scores, or prompts), VersBand maintained strong performance. With all inputs provided, it achieved the highest scores across all metrics, demonstrating superior perceptual quality (FAD, KLD, OVL), adherence to text prompts (CLAP, REL), and alignment between vocals and accompaniments (ALI).
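The CLAP score measures how well generated audio matches its text prompt. It is usually computed as the cosine similarity between a text embedding and an audio embedding from a CLAP model. The sketch below shows only that final similarity step on made-up vectors; obtaining real embeddings requires a pretrained CLAP model, which is outside this example.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional embeddings standing in for CLAP text and audio vectors.
text_embedding = [0.2, 0.7, 0.1]
audio_embedding = [0.1, 0.8, 0.3]
score = cosine_similarity(text_embedding, audio_embedding)
```

The same similarity function applies to the Cos metric in the vocal table if, as is typical, it compares singer embeddings of the generated and reference audio.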

Ablation Studies

Ablation studies on VocalBand components showed that all elements contribute significantly to performance:

| Methods | MOS-Q ↑ | MOS-C ↑ | FFE ↓ |
|---|---|---|---|
| VocalBand | 4.04±0.08 | 4.02±0.07 | 0.07 |
| w/o Styles | 3.87±0.04 | - | 0.09 |
| w/o Pitch Predictor | 3.79±0.06 | 3.99±0.09 | 0.09 |
| w/o Mel Decoder | 3.68±0.08 | 3.92±0.07 | 0.13 |

Table 6: Ablation results of VocalBand showing the contribution of each component to overall performance.

Similarly, the Band-MOE in AccompBand proved essential for quality, alignment, and control:

| Methods | FAD ↓ | KLD ↓ | CLAP ↑ | OVL ↑ | REL ↑ | ALI ↑ |
|---|---|---|---|---|---|---|
| VersBand | 3.01 | 1.27 | 0.58 | 87.92±1.73 | 88.03±0.59 | 80.51±1.66 |
| w/o Band-MOE | 3.29 | 1.34 | 0.42 | 86.11±1.30 | 87.49±0.84 | 77.59±1.50 |
| w/o Aligned MOE | 3.15 | 1.25 | 0.54 | 87.19±1.14 | 88.48±0.66 | 77.86±1.35 |
| w/o Controlled MOE | 3.10 | 1.23 | 0.44 | 88.48±1.74 | 87.90±1.50 | 79.33±1.63 |
| w/o Acoustic MOE | 3.26 | 1.32 | 0.40 | 86.50±1.55 | 87.72±1.04 | 79.08±1.24 |

Table 5: Results of ablation study on AccompBand showing the importance of Band-MOE and its components.

Removing the Band-MOE entirely degraded every metric: for example, FAD rose from 3.01 to 3.29, CLAP fell from 0.58 to 0.42, and ALI fell from 80.51 to 77.59. The individual expert groups each contributed to specific aspects: Aligned MOE improved alignment, Controlled MOE enhanced controllability, and Acoustic MOE boosted overall quality.

Conclusion

VersBand represents a significant advancement in the field of AI-generated music, offering a comprehensive framework for high-quality, controllable song generation. The system's key innovations include:

- VocalBand's decoupled model using flow-matching for generating singing styles, pitches, and mel-spectrograms

- AccompBand's flow-based transformer with Band-MOE for producing aligned, controllable accompaniments

- LyricBand and MelodyBand for enabling complete multi-task song generation

These components work together to address the major challenges in song generation: creating high-quality vocals with style control, generating aligned and controllable accompaniments, and supporting diverse tasks based on various prompts.

Experimental results demonstrate that VersBand outperforms baseline models across multiple song generation tasks in terms of both objective and subjective metrics. The system's versatility allows it to handle a wide range of inputs, from minimal text prompts to specific style controls and audio references.

Limitations and Future Work

Despite its achievements, VersBand has two main limitations:

First, the system uses four separate sub-models and multiple infrastructure components, such as flow-based transformers and a VAE. This results in cumbersome training and inference procedures. Future work could explore using a single model to achieve the same multi-task generation capabilities and controllability, potentially simplifying the system architecture.

Second, the training dataset only includes songs in Chinese and English, limiting linguistic diversity. Future research could focus on building a larger, more comprehensive dataset to enable a wider range of application scenarios and language support.

The researchers also note ethical considerations related to large-scale generative models for song creation. These include concerns about copyright infringement when dubbing entertainment videos with famous singers' voices, and potential impacts on professional musicians and singers due to the lowered barriers to high-quality music production.
