Kubernetes Without Docker: Why Container Runtimes Are Changing the Game in 2025

Docker isn’t dead, but Kubernetes definitely stopped texting back.

Introduction: The Runtime Plot Twist Nobody Told You About

So, imagine this:

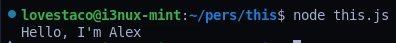

You’re vibing in your CLI, firing off kubectl apply -f something.yaml, thinking life is good. Docker’s humming in the background. Everything just... works.

Then one day, Kubernetes walks into the room like:

“Hey, Docker. It’s not you, it’s your shim. We’re done here.”

Boom.

The internet explodes. Twitter threads. Hacker News wars. Devs panicking. Memes flying.

And if you missed the memo (because you were too busy debugging a 500 error that turned out to be a missing colon), here’s the tea:

As of Kubernetes v1.24, dockershim is gone, which means Kubernetes no longer runs containers through Docker Engine out of the box.

But before you scream into the void or uninstall Docker out of pure spite, let’s get one thing clear:

Kubernetes still loves Docker images.

It just doesn’t run them using Docker anymore.

Yep. It’s like Kubernetes still listens to your mixtapes, but now it’s dating someone named containerd.

In this article, we’re going to break it all down: what happened, why it matters, and what to do next, without sounding like a corporate whitepaper written by a boardroom AI. This is for the devs. The sysadmins. The SREs who live in Grafana dashboards. The cloud engineers who haven’t seen sunlight since Helm v3 dropped.

Kubernetes didn’t break up with Docker. It just found a runtime that actually listens.

Section 2: The Breakup (Kubernetes & Docker, It’s Complicated)

Once upon a time in DevOps Land, Kubernetes and Docker were the power couple. The Batman and Robin of container orchestration. The Mario and Luigi of your deployment dreams.

But then Kubernetes looked Docker in the eyes and said:

“Hey… we need to talk. I’m switching to someone else to run my containers.”

And thus began the runtime soap opera that brought us here.

So… What Actually Happened?

Okay, let’s clear up the confusion that’s been haunting dev forums and Reddit threads like a ghost in your microservice.

- Kubernetes didn’t “drop Docker support” entirely.

- It dropped Docker as a container runtime, specifically the thing called dockershim.

- Your Docker-built images? Still work.

- Your Dockerfile? Still valid.

- Your sanity? Questionable, but intact.

The real issue was that Docker wasn’t built to be used inside Kubernetes. It’s like trying to use a full IDE just to run hello-world.py. Too much baggage, not enough chill.

Kubernetes wanted something leaner, meaner, and more compliant with its own CRI (Container Runtime Interface). Enter:

- containerd — Docker’s slimmed-down, no-BS cousin.

- CRI-O — Lightweight, purpose-built for Kubernetes, no emotional attachment to Docker.

What’s dockershim, and why is it cursed?

dockershim was like that weird adapter you bought off AliExpress that somehow made USB-C work with HDMI and Ethernet at the same time. It got the job done — but no one really trusted it.

Kubernetes was using dockershim to translate its CRI calls into something Docker understood. But maintaining that shim was a pain, and the Kubernetes maintainers finally said, “Yeah, we’re done babysitting this.”

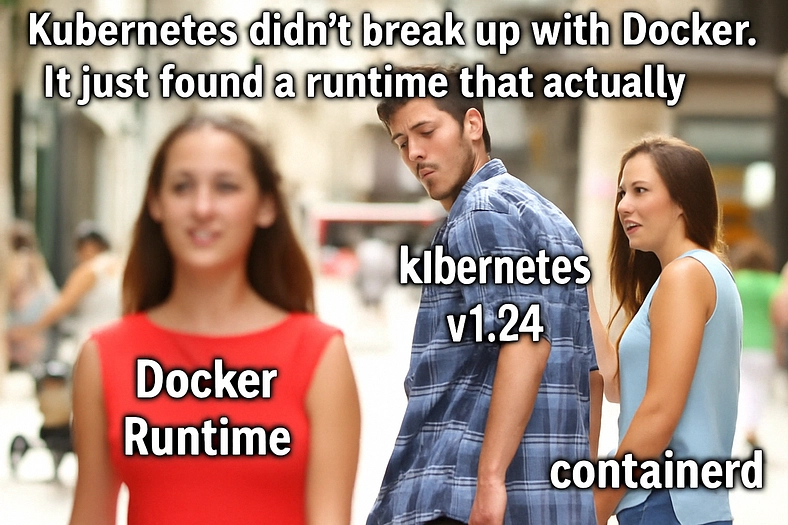

So in Kubernetes v1.24, they officially removed dockershim, which means:

- No more using Docker Engine as the runtime out of the box (the separately maintained cri-dockerd adapter exists if you truly can’t let go).

- Still fine to use Docker to build, tag, and push images.

- Just use containerd, CRI-O, or something else under the hood when actually running the containers in Kubernetes.
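Want to know whether a node is on the wrong side of this breakup? Here is a minimal sketch, not an official tool. It assumes you have already pulled two strings out of a node's status.nodeInfo (kubeletVersion and containerRuntimeVersion, for example from kubectl get node -o json). The function names and the three status labels are made up for illustration.

```python
import re


def parse_major_minor(kubelet_version: str) -> tuple[int, int]:
    """Extract (major, minor) from a version string such as 'v1.23.5'."""
    match = re.match(r"v?(\d+)\.(\d+)", kubelet_version)
    if match is None:
        raise ValueError(f"unrecognized version: {kubelet_version!r}")
    return int(match.group(1)), int(match.group(2))


def dockershim_status(kubelet_version: str, runtime_version: str) -> str:
    """Classify a node by how the dockershim removal affects it.

    runtime_version looks like 'containerd://1.6.4' or 'docker://20.10.7'.
    The returned labels are hypothetical names chosen for this sketch.
    """
    runtime = runtime_version.split("://", 1)[0]
    if runtime != "docker":
        # containerd, CRI-O, etc. speak CRI natively: nothing to do.
        return "ok"
    if parse_major_minor(kubelet_version) < (1, 24):
        # Still on the built-in dockershim; switch runtimes before upgrading.
        return "migrate-before-upgrade"
    # On 1.24+ a docker:// runtime implies an external adapter is in play.
    return "external-adapter"


if __name__ == "__main__":
    print(dockershim_status("v1.23.5", "docker://20.10.7"))
```

Feed it the values from every node before you plan an upgrade; anything that is not "ok" deserves a closer look.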

TL;DR for Busy Devs Who Scrolled Too Fast:

And no, this isn’t the end of Docker. You can still use it for local dev, CI, even running containers on its own. Just don’t expect Kubernetes to be calling it on weekends anymore.

Section 3: Container Runtimes 101 Or, What the Heck Is Actually Running Your Containers?

If containers were magic, then the container runtime is the grumpy wizard behind the curtain who actually casts the spell.

So here’s the tea:

When you kubectl run a pod, Kubernetes doesn’t run your container directly. The kubelet talks to a container runtime over the CRI, and that runtime is the middleman that handles the actual low-level container creation, process isolation, and all that glorious namespace/cgroups wizardry.

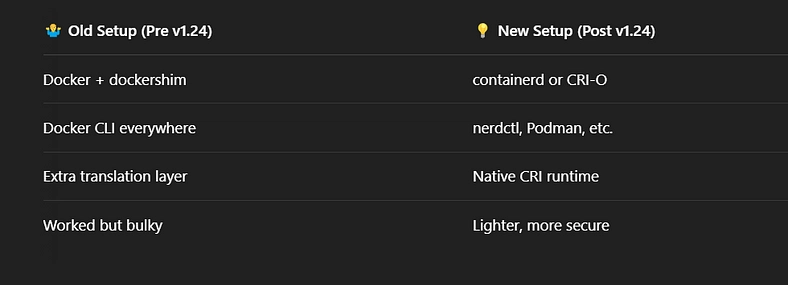

For a long time, that runtime was Docker (via dockershim), but now it’s mostly containerd, CRI-O, or if you’re feeling adventurous, stuff like gVisor or WasmEdge (yes, WebAssembly is coming for your YAML).

So What Exactly Is a Container Runtime?

In gamer terms:

- Docker is like a modded Minecraft launcher: lots of features, nice UX, but heavy.

- containerd is vanilla Minecraft on Linux: lean, stable, does exactly what it needs to.

- CRI-O is the minimalist pixel-art clone optimized for one job only: Kubernetes.

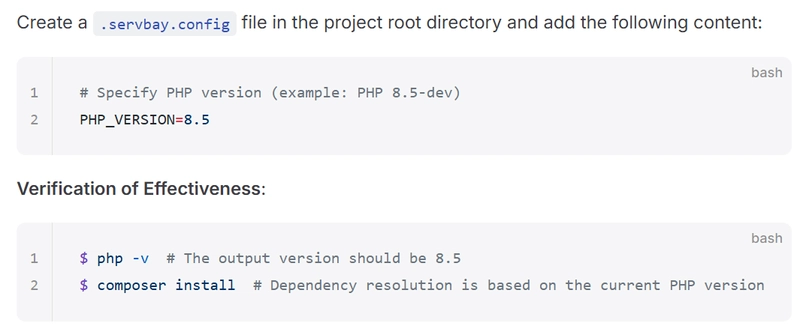

Let’s break it down like a stat sheet:

Why So Many Runtimes?

Because not all clusters are created equal. Some want speed. Some want security. Some just want to be different (you know the type).

Here’s how they stack up in gamer mode:

- containerd: The Elden Ring of runtimes. Deep, elegant, hardcore.

- CRI-O: The Hollow Knight. Sleek, no bloat, perfect for speedrunners.

- gVisor: The Sekiro. Punishes anything unsafe.

- Docker: The Skyrim modded edition. Great until it crashes.

- WasmEdge: The experimental alpha build you swear is the future.

So What Do You Need?

- Running Kubernetes on the cloud? You’re already using containerd, probably without knowing.

- Running on bare metal or your own VMs? Time to choose your fighter.

- Still using Docker Desktop for local dev? No shame, but maybe test with containerd + nerdctl so prod doesn’t slap you.
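Not sure which fighter your cluster already picked? Each node reports it in status.nodeInfo.containerRuntimeVersion. The sketch below tallies runtimes from the JSON that kubectl get nodes -o json prints. The SAMPLE payload and its node names are invented and trimmed to the one field used here; the function name is just an illustration.

```python
import json
from collections import Counter

# Hypothetical, heavily trimmed stand-in for `kubectl get nodes -o json` output.
SAMPLE = """
{
  "items": [
    {"metadata": {"name": "node-a"},
     "status": {"nodeInfo": {"containerRuntimeVersion": "containerd://1.7.2"}}},
    {"metadata": {"name": "node-b"},
     "status": {"nodeInfo": {"containerRuntimeVersion": "containerd://1.7.2"}}},
    {"metadata": {"name": "node-c"},
     "status": {"nodeInfo": {"containerRuntimeVersion": "cri-o://1.28.0"}}}
  ]
}
"""


def runtimes_in_use(nodes_json: str) -> Counter:
    """Count nodes per runtime name (the part before '://')."""
    nodes = json.loads(nodes_json)["items"]
    return Counter(
        node["status"]["nodeInfo"]["containerRuntimeVersion"].split("://", 1)[0]
        for node in nodes
    )


if __name__ == "__main__":
    for runtime, count in runtimes_in_use(SAMPLE).items():
        print(f"{runtime}: {count} node(s)")
```

For a quick look without any script, kubectl get nodes -o wide shows the same value in its CONTAINER-RUNTIME column.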

Section 4: Why Developers Should Actually Care (Yes, Even You, Frontend Folks)

Look, I get it. You’re thinking:

“I don’t care what runtime Kubernetes uses. My YAMLs are working, my pods are green, and my coffee is hot.”

But here’s the thing: that’s exactly what 2020-me said before a seemingly minor kubectl apply turned into a 2-hour debugging session involving logs that didn’t exist, containers that wouldn’t start, and documentation that was last updated when JavaScript didn’t suck (so… never?).

So What Changes for Developers?

Let’s break it down like you’re explaining it to your sleep-deprived teammate on a 3 AM PagerDuty call:

1. Your Docker CLI Isn’t the Boss Anymore

Docker CLI is still cool. You can still use docker build, docker push, and docker run; just don’t expect Kubernetes to care. It now talks directly to containerd or CRI-O, and those runtimes have their own tooling ecosystems (e.g., nerdctl, crictl, or podman).

Translation: If you’re debugging issues inside a pod, using docker ps is as useful as using git push in a directory with no .git.

2. Debugging Feels Slightly… Different

With Docker, you might’ve used docker logs, docker exec, or docker inspect like your holy trinity. Now?

- You’ll be using kubectl logs and kubectl exec more religiously.

- Or tools like crictl, which has the ergonomics of Vim but the personality of a toaster.
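If your fingers still type docker out of habit, a cheat sheet helps. This is a rough lookup table written for this article, not an exhaustive or official mapping. The subcommands listed do exist in kubectl and crictl, but `<pod>`, `<container-id>`, and `<cmd>` are placeholders, and the EQUIVALENTS and suggest names are made up.

```python
# Rough docker -> kubectl / crictl equivalents for day-to-day debugging.
# kubectl works from your laptop; crictl runs on the node itself.
EQUIVALENTS = {
    "ps": {"kubectl": "kubectl get pods", "crictl": "crictl ps"},
    "logs": {"kubectl": "kubectl logs <pod>", "crictl": "crictl logs <container-id>"},
    "exec": {
        "kubectl": "kubectl exec -it <pod> -- <cmd>",
        "crictl": "crictl exec -it <container-id> <cmd>",
    },
    "inspect": {
        "kubectl": "kubectl describe pod <pod>",
        "crictl": "crictl inspect <container-id>",
    },
    # No cluster-level kubectl counterpart for listing a node's cached images.
    "images": {"kubectl": None, "crictl": "crictl images"},
}


def suggest(docker_command: str) -> list[str]:
    """Return replacement commands for e.g. 'docker logs', or [] if unknown."""
    parts = docker_command.split()
    if len(parts) < 2 or parts[0] != "docker":
        raise ValueError("expected a command like 'docker ps'")
    entry = EQUIVALENTS.get(parts[1])
    if entry is None:
        return []
    return [cmd for cmd in (entry["kubectl"], entry["crictl"]) if cmd]


if __name__ == "__main__":
    for cmd in ("docker ps", "docker logs", "docker images"):
        print(cmd, "->", suggest(cmd))
```

Build-side commands like docker build come back empty on purpose: those never went through Kubernetes in the first place.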

Also, runtime-specific quirks now matter more. For example:

- Log shippers built to read Docker’s JSON log files might miss output, because containerd and CRI-O write container logs in a different, plain-text format.

- Some monitoring tools expect Docker. containerd might give them… existential dread.
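One concrete reason logs can go missing: as far as I know, CRI runtimes write each container log line as plain text in the shape "timestamp stream tag message", where the tag is F for a full line or P for a partial one, instead of Docker's JSON log file format. The parser below is a minimal sketch under that assumption; CriLogLine and parse_cri_line are names invented for this example, and the sample line is illustrative.

```python
from typing import NamedTuple


class CriLogLine(NamedTuple):
    timestamp: str  # RFC 3339 timestamp, kept as a string here
    stream: str     # "stdout" or "stderr"
    partial: bool   # True when the runtime split a long line (tag "P")
    message: str


def parse_cri_line(line: str) -> CriLogLine:
    """Parse one line of the assumed 'timestamp stream tag message' format."""
    # Split on the first three spaces only, so the message keeps its spaces.
    timestamp, stream, tag, message = line.rstrip("\n").split(" ", 3)
    if stream not in ("stdout", "stderr"):
        raise ValueError(f"unexpected stream: {stream!r}")
    # Tags may carry extra ':'-separated parts; only the first matters here.
    return CriLogLine(timestamp, stream, tag.split(":")[0] == "P", message)


if __name__ == "__main__":
    sample = "2016-10-06T00:17:09.669794202Z stdout F The content of the log entry 1"
    print(parse_cri_line(sample))
```

If a log agent in your stack still expects one JSON object per line, this mismatch is a likely suspect worth checking first.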

3. Your Local Dev Doesn’t Match Prod (Surprise!)

- You’re building and testing with Docker on your machine.

- But prod runs on containerd or CRI-O.

- And that tiny difference can hit like a zero-day bug when something goes wrong.

Ever had a pod work perfectly locally but crashloop in staging for “no reason”? Yeah, sometimes that’s runtime behavior, especially with image caching, volume mounts, or how networking is handled.

Docker lies. containerd is brutally honest.

4. Multi-Stage Builds, Distroless Images, Rootless Containers: Runtime Affects It All

Modern runtimes push best practices that Docker didn’t care about:

- Run as non-root? containerd says “yes please.”

- Use distroless images? You better understand how they behave without /bin/sh.

- Trying to copy files into a running container like it’s 2017? kubectl cp needs tar inside the image, so your distroless container says “no soup for you.”

You don’t have to change, but understanding this stuff makes you a better dev, and gets you bonus points in code review when you explain why your image is 70% smaller now.
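To make the non-root point concrete, here is a small sketch of a check you could run over a pod manifest once it is loaded into a Python dict (say, from YAML). It relies on the real securityContext.runAsNonRoot field, where a container-level value overrides the pod-level one. The function name and the sample pods are hypothetical, and it deliberately ignores init containers to stay short.

```python
def runs_as_non_root(pod: dict) -> bool:
    """True if every regular container effectively sets runAsNonRoot: true."""
    spec = pod.get("spec") or {}
    pod_level = (spec.get("securityContext") or {}).get("runAsNonRoot")
    containers = spec.get("containers") or []
    for container in containers:
        own = (container.get("securityContext") or {}).get("runAsNonRoot")
        # A container-level setting wins over the pod-level default.
        effective = own if own is not None else pod_level
        if effective is not True:
            return False
    # A pod with no containers proves nothing, so treat it as failing.
    return bool(containers)


if __name__ == "__main__":
    # Hypothetical manifests, reduced to the fields this check reads.
    good = {
        "spec": {
            "securityContext": {"runAsNonRoot": True},
            "containers": [{"name": "app", "image": "example/app:1.0"}],
        }
    }
    bad = {"spec": {"containers": [{"name": "app", "image": "example/app:1.0"}]}}
    print(runs_as_non_root(good), runs_as_non_root(bad))
```

Wiring something like this into CI is one cheap way to catch a root-by-default image before the cluster does.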

5. It’s Not Just About Ops Anymore

Runtime decisions now shape:

- Your image build strategy

- How you run integration tests

- How you secure your pipeline

- Even how you deploy functions (hello, Wasm!)

It’s no longer just an SRE or platform team concern. This stuff touches your code, and if you’re in a startup, you are the platform team.
