How to build an event assistant in under 1 hour using OpenAI and Vercel, Part II

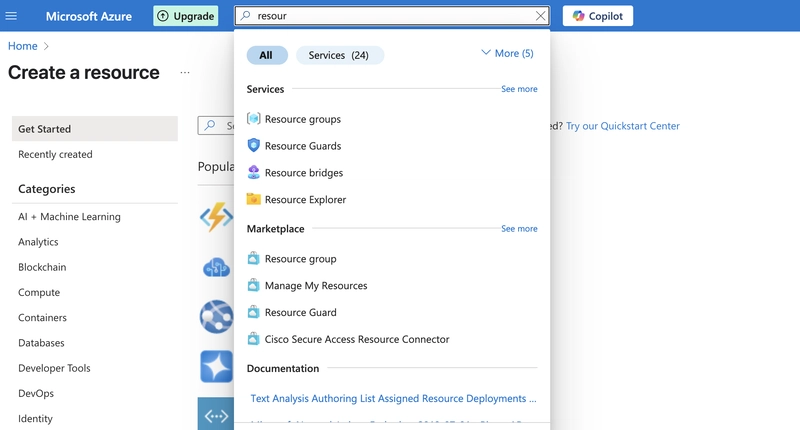


Deploying Your OpenAI Assistant Chat to Vercel

In the previous post, we built an AI Event Assistant using OpenAI's tools and prompts. Now, let's create a simple chat interface for it and make it publicly accessible using Vercel.

1. Start with the Quickstart:

Begin by cloning the official OpenAI Assistants Quickstart repository:

https://github.com/openai/openai-assistants-quickstart

2. Handle OpenAI Library Updates:

I hit an incompatibility because I used a newer version of the OpenAI Node.js library (v4.90.0) than the one the quickstart likely targets (around v4.46.0, judging by its package.json). Specifically, calls to openai.beta.vectorStores had to be changed to openai.vectorStores. Check which library version you have installed and adjust the code to match.
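If you want the same code to work on either side of that rename, one option is a small lookup helper. The sketch below is only an illustration: `getVectorStores`, `OpenAILike`, and `VectorStoresApi` are hypothetical names, and the interfaces describe just the shape the helper relies on rather than the SDK's real type definitions, so passing the real client may require a cast.

```typescript
// Minimal structural types (assumptions, not the SDK's own definitions).
interface VectorStoresApi {
  create(params: { name: string }): Promise<{ id: string }>;
}

interface OpenAILike {
  vectorStores?: VectorStoresApi; // layout I saw in v4.90.0
  beta?: { vectorStores?: VectorStoresApi }; // layout the quickstart code used
}

// Hypothetical helper: return whichever vector store namespace exists.
function getVectorStores(client: OpenAILike): VectorStoresApi {
  const api = client.vectorStores ?? client.beta?.vectorStores;
  if (!api) {
    throw new Error("No vectorStores namespace found on this OpenAI client");
  }
  return api;
}
```

Calling `getVectorStores(openai).create({ name: "events" })` would then replace a direct `openai.beta.vectorStores.create(...)` call. If you control the installed version, simply renaming the calls is the simpler fix.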

3. Improve User Experience During Waits:

The default UI is basic. Because Assistant API calls can take a while (often more than 10 seconds in my tests), I added a simple "Waiting..." indicator. It appears while the assistant processes a request, so the user knows the app hasn't stalled.
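The logic behind such an indicator can be sketched as a small state reducer, independent of any UI framework. This is not the code from my repository; `ChatState`, `chatReducer`, and `statusLabel` are hypothetical names chosen for illustration. The idea is to set a flag when the user sends a message and clear it when a reply or an error arrives.

```typescript
type ChatMessage = { role: "user" | "assistant"; text: string };

interface ChatState {
  messages: ChatMessage[];
  waiting: boolean; // true while a request is in flight
}

type ChatAction =
  | { type: "send"; text: string }
  | { type: "reply"; text: string }
  | { type: "error" };

const initialState: ChatState = { messages: [], waiting: false };

function chatReducer(state: ChatState, action: ChatAction): ChatState {
  switch (action.type) {
    case "send": // user submitted a message: start waiting
      return {
        messages: [...state.messages, { role: "user", text: action.text }],
        waiting: true,
      };
    case "reply": // assistant answered: stop waiting
      return {
        messages: [...state.messages, { role: "assistant", text: action.text }],
        waiting: false,
      };
    case "error": // request failed: stop waiting, keep the history
      return { ...state, waiting: false };
  }
}

// Text to render under the chat box; empty when idle.
function statusLabel(state: ChatState): string {
  return state.waiting ? "Waiting..." : "";
}
```

In a React component, a reducer like this could be passed to `useReducer`, with `statusLabel(state)` rendered next to the input field.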

4. Address Vercel Timeouts:

When I first deployed to Vercel, requests timed out. OpenAI Assistant API calls can exceed Vercel's default serverless function timeout (10 seconds for Hobby/Pro tiers in my case; the exact defaults and maximums depend on your plan). To resolve this, increase the maximum duration of the function that handles the Assistant API calls. Add the following line to the top of the relevant API route file (e.g., in Next.js):

export const maxDuration = 60; // Increase timeout to 60 seconds
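For context, here is a rough sketch of how that line might sit in a Next.js App Router route file. The file path, `runAssistant`, and `withTimeout` are hypothetical and only stand in for the real Assistant call; the timeout guard is an optional extra I am assuming for illustration, so the route can return a clean error shortly before the platform limit is reached.

```typescript
// app/api/assistant/route.ts (hypothetical path)
export const maxDuration = 60; // Increase timeout to 60 seconds

// Hypothetical stand-in for the real Assistant API call.
async function runAssistant(prompt: string): Promise<string> {
  return `echo: ${prompt}`;
}

// Reject if `work` has not settled within `ms` milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error("timed out")), ms);
  });
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}

export async function POST(request: Request): Promise<Response> {
  const { prompt } = (await request.json()) as { prompt: string };
  try {
    // Leave 5 seconds of headroom below maxDuration for the error response.
    const reply = await withTimeout(runAssistant(prompt), (maxDuration - 5) * 1000);
    return Response.json({ reply });
  } catch {
    // Reached when the call fails or the guard above fires.
    return Response.json({ error: "Assistant request failed or timed out" }, { status: 504 });
  }
}
```

The exported constant is the only part Vercel needs; the rest shows one way to fail gracefully instead of letting the platform cut the request off.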

See the Code:

You can view all my modifications compared to the original quickstart here:

https://github.com/vbrodsky/openai-kabbalah-app

With these changes, you should be ready to deploy your repository to a Vercel project and get a free public URL for your AI assistant. Good luck!
