Harnessing AI for a Healthier World: Ensuring AI Enhances, Not Undermines, Patient Care

For centuries, medicine has been shaped by new technologies. From the stethoscope to MRI machines, innovation has transformed the way we diagnose, treat, and care for patients. Yet, every leap forward has been met with questions: Will this technology truly serve patients? Can it be trusted? And what happens when efficiency is prioritized over empathy?

Artificial intelligence (AI) is the latest frontier in this ongoing evolution. It has the potential to improve diagnostics, optimize workflows, and expand access to care. But AI is not immune to the same fundamental questions that have accompanied every medical advancement before it.

The concern is not whether AI will change healthcare; it already is. What matters is whether it will enhance patient care or create new risks that undermine it, and the answer depends on the implementation choices we make today. As AI becomes more embedded in health ecosystems, responsible governance is imperative. Ensuring that AI enhances rather than undermines patient care requires a careful balance of innovation, regulation, and ethical oversight.

Addressing Ethical Dilemmas in AI-Driven Health Technologies

Governments and regulatory bodies are increasingly recognizing the importance of staying ahead of rapid AI developments. Discussions at the Prince Mahidol Award Conference (PMAC) in Bangkok emphasized the necessity of outcome-based, adaptable regulations that can evolve alongside emerging AI technologies. Without proactive governance, there is a risk that AI could exacerbate existing inequities or introduce new forms of bias in healthcare delivery. Ethical concerns around transparency, accountability, and equity must be addressed.

A major challenge is the limited explainability of many AI models, which often operate as “black boxes” that generate recommendations without clear explanations. If a clinician cannot understand how an AI system arrives at a diagnosis or treatment plan, should it be trusted? This opacity also raises a fundamental question of responsibility. If an AI-driven decision leads to harm, who is accountable: the physician, the hospital, or the technology developer? Without clear governance, deep trust in AI-powered healthcare cannot take root.

Bias and data privacy are further pressing issues. AI systems rely on vast datasets, but if that data is incomplete or unrepresentative, algorithms may reinforce existing disparities rather than reduce them. Moreover, because health data is deeply personal, safeguarding privacy is critical. Without adequate oversight, AI could unintentionally deepen inequities instead of creating fairer, more accessible systems.

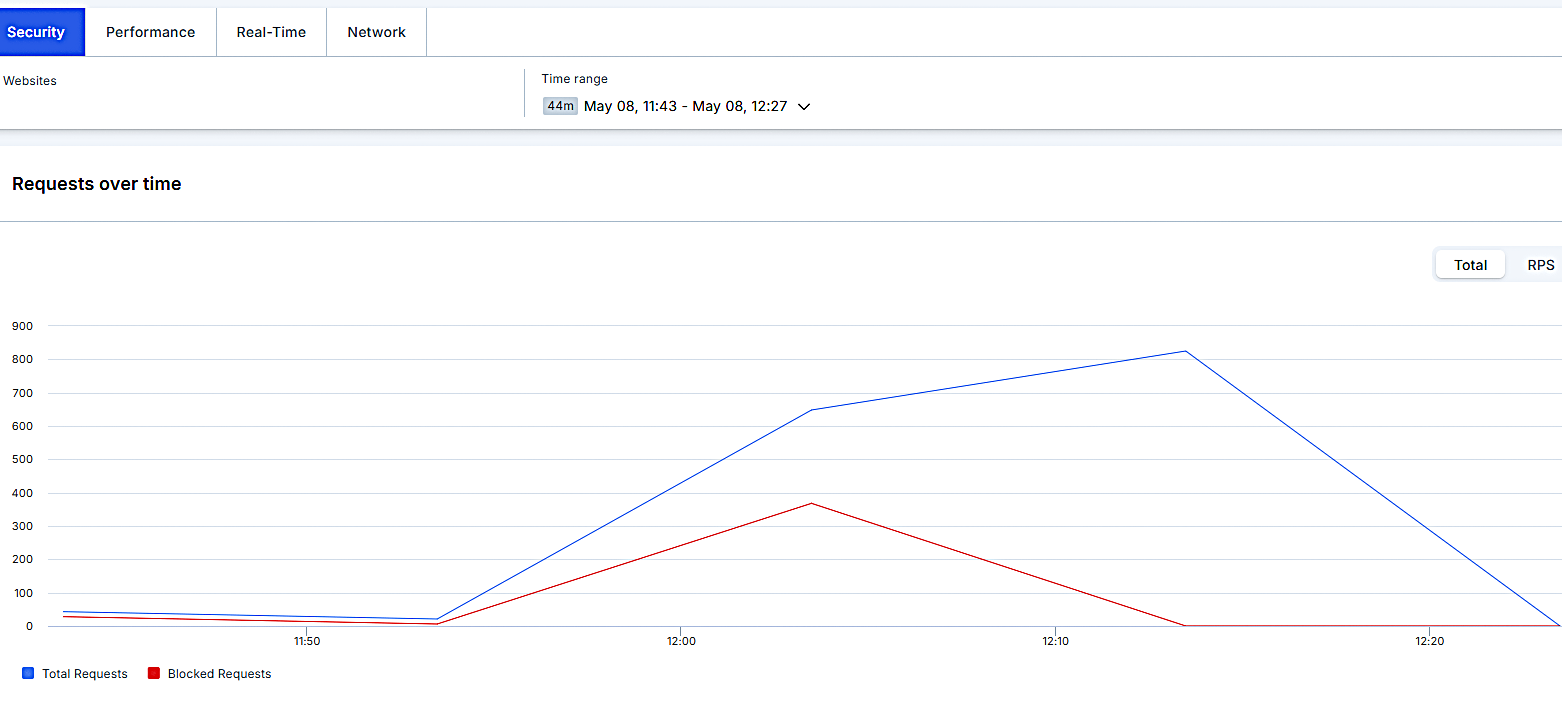

One promising way to address these ethical dilemmas is the regulatory sandbox, a controlled environment in which AI technologies can be tested before full deployment. Sandboxes help refine AI applications, mitigate risks, and build trust among stakeholders, keeping patient well-being the central priority. They also allow continuous monitoring and real-time adjustment, so regulators and developers can identify potential biases, unintended consequences, or vulnerabilities early in the process. In essence, sandboxes support a dynamic, iterative approach that enables innovation while strengthening accountability.

Preserving the Role of Human Intelligence and Empathy

Beyond diagnostics and treatments, human presence itself has therapeutic value. A reassuring word, a moment of genuine understanding, or a compassionate touch can ease anxiety and improve patient well-being in ways technology cannot replicate. Healthcare is more than a series of clinical decisions—it is built on trust, empathy, and personal connection.

Effective patient care involves conversations, not just calculations. If AI systems reduce patients to data points rather than treating them as individuals with unique needs, the technology is failing its most fundamental purpose. Concerns about AI-driven decision-making are growing, particularly in insurance coverage. In California, nearly a quarter of health insurance claims were denied last year, a trend seen nationwide. A new law now prohibits insurers from using AI alone to deny coverage, keeping human judgment central. The debate intensified with a lawsuit against UnitedHealthcare alleging that its AI tool, nH Predict, wrongly denied claims for elderly patients and had a 90% error rate. These cases underscore the need for AI to complement, not replace, human expertise in clinical decision-making, and the importance of robust oversight.
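To make the principle concrete, here is a minimal, hypothetical sketch of a claim-routing rule in which an AI model may fast-track approvals but can never issue a denial on its own: any proposed denial waits for a human reviewer, whose decision is final. The names (`Decision`, `ClaimAssessment`, `route_claim`) and the policy itself are illustrative assumptions, not the wording of the California law or any insurer's actual system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    DENY = "deny"
    PENDING_HUMAN_REVIEW = "pending_human_review"


@dataclass
class ClaimAssessment:
    claim_id: str
    # Recommendation from a hypothetical AI model; treated as advisory only.
    model_recommendation: Decision


def route_claim(
    assessment: ClaimAssessment,
    reviewer_decision: Optional[Decision] = None,
) -> Decision:
    """Return the final decision for a claim under a human-in-the-loop policy.

    Illustrative policy (an assumption, not statutory text):
    - the model may approve a claim on its own;
    - the model may never deny on its own: anything other than an approval
      stays pending until a human reviewer decides, and that decision is final.
    """
    if assessment.model_recommendation is Decision.APPROVE:
        return Decision.APPROVE
    if reviewer_decision is None:
        # No human has reviewed the claim yet, so no denial is issued.
        return Decision.PENDING_HUMAN_REVIEW
    if reviewer_decision is Decision.PENDING_HUMAN_REVIEW:
        raise ValueError("a reviewer must either approve or deny")
    return reviewer_decision


if __name__ == "__main__":
    claim = ClaimAssessment("claim-001", Decision.DENY)
    print(route_claim(claim))                    # Decision.PENDING_HUMAN_REVIEW
    print(route_claim(claim, Decision.APPROVE))  # Decision.APPROVE
```

Sending only proposed denials to people is one design choice among several; a stricter variant might also route low-confidence approvals for review.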

The goal should not be to replace clinicians with AI but to empower them. AI can enhance efficiency and provide valuable insights, but human judgment ensures these tools serve patients rather than dictate care. Medicine is rarely black and white: real-world constraints, patient values, and ethical considerations shape every decision. AI may inform those decisions, but it is human intelligence and compassion that make healthcare truly patient-centered.

Can AI make healthcare human again? While AI can handle administrative tasks, analyze complex data, and provide continuous support, the core of healthcare lies in human interaction: listening, empathizing, and understanding. Today's AI lacks the human qualities needed for holistic, patient-centered care, and healthcare decisions are full of nuance. Physicians must weigh medical evidence, patient values, ethical considerations, and real-world constraints to make the best judgments. What AI can do is relieve them of routine tasks, giving them more time to focus on what they do best.

How Autonomous Should AI Be in Health?

AI and human expertise each play vital roles across the health sector, and effective patient care depends on balancing their strengths. While AI can improve precision in diagnostics and risk assessment as well as operational efficiency, human oversight remains essential. After all, the goal is not to replace clinicians but to ensure AI serves as a tool that upholds ethical, transparent, and patient-centered healthcare.

Therefore, AI’s role in clinical decision-making must be carefully defined, and the degree of autonomy it is given in healthcare must be rigorously evaluated. Should AI ever make final treatment decisions, or should its role be strictly supportive? Defining these boundaries now is critical to preventing an over-reliance on AI that could erode clinical judgment and professional responsibility in the future.

Public perception also leans toward this cautious approach. A study in BMC Medical Ethics found that patients are more comfortable with AI assisting rather than replacing healthcare providers, particularly in clinical tasks. While many find AI acceptable for administrative functions and decision support, concerns persist over its impact on the doctor-patient relationship. Trust in AI also varies across demographics: younger, educated individuals, especially men, tend to be more accepting, while older adults and women are more skeptical. A common concern is the loss of the “human touch” in care delivery.

Discussions at the AI Action Summit in Paris reinforced the importance of governance structures that ensure AI remains a tool for clinicians rather than a substitute for human decision-making. Maintaining trust in healthcare requires deliberate attention, ensuring that AI enhances, rather than undermines, the essential human elements of medicine.

Establishing the Right Safeguards from the Start

To make AI a valuable asset in health, the right safeguards must be built from the ground up. At the core of this approach is explainability. Developers should be required to demonstrate how their AI models function—not just to meet regulatory standards but to ensure that clinicians and patients can trust and understand AI-driven recommendations. Rigorous testing and validation are essential to ensure that AI systems are safe, effective, and equitable. This includes real-world stress testing to identify potential biases and prevent unintended consequences before widespread adoption.
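One common form of such stress testing is a subgroup audit: compare how well a model performs for different patient groups and flag large gaps. The sketch below assumes a labeled validation set in which each record carries a demographic group, the true outcome, and the model's prediction. It uses sensitivity (the share of true cases the model catches) as the metric and a 5-percentage-point gap as the alert threshold; the record fields, metric, and threshold are all illustrative assumptions, not a regulatory standard.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class Record(NamedTuple):
    group: str            # demographic subgroup label (hypothetical field)
    has_condition: bool   # ground truth, e.g. from clinical follow-up
    flagged: bool         # the model's prediction


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """True-positive rate per subgroup: correctly flagged cases / actual cases.

    Groups with no actual positive cases are omitted, since their
    sensitivity is undefined.
    """
    true_pos: Dict[str, int] = defaultdict(int)
    actual_pos: Dict[str, int] = defaultdict(int)
    for r in records:
        if r.has_condition:
            actual_pos[r.group] += 1
            if r.flagged:
                true_pos[r.group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


def flag_gaps(rates: Dict[str, float], max_gap: float = 0.05) -> List[str]:
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    if not rates:
        return []
    best = max(rates.values())
    return sorted(g for g, rate in rates.items() if best - rate > max_gap)


if __name__ == "__main__":
    records = [
        Record("A", True, True), Record("A", True, True),
        Record("A", True, False), Record("A", True, True),   # A: 3 of 4 caught
        Record("B", True, True), Record("B", True, False),
        Record("B", True, False), Record("B", True, True),   # B: 2 of 4 caught
        Record("B", False, True),                            # false positive, not counted
    ]
    rates = sensitivity_by_group(records)
    print(rates)             # {'A': 0.75, 'B': 0.5}
    print(flag_gaps(rates))  # ['B']
```

Sensitivity is only one lens; a fuller audit would also look at other per-group metrics, and small subgroups need uncertainty estimates before a gap is treated as real.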

Technology designed without input from those it affects is unlikely to serve them well. To treat people as more than the sum of their medical records, AI must support compassionate, personalized, and holistic care. To ensure AI reflects practical needs and ethical considerations, a wide range of voices, including patients, healthcare professionals, and ethicists, must be included in its development. Clinicians also need training to evaluate AI recommendations critically, which benefits everyone involved.

Robust guardrails should prevent AI from prioritizing efficiency at the expense of care quality, and continuous audits are essential to confirm that AI systems uphold the highest standards of care and remain aligned with patient-first principles. By balancing innovation with oversight, we can ensure that AI strengthens healthcare systems and promotes global health equity.

Conclusion

As AI continues to evolve, the healthcare sector must strike a delicate balance between technological innovation and human connection. The future does not need to choose between AI and human compassion. Instead, the two must complement each other, creating a healthcare system that is both efficient and deeply patient-centered. By embracing both technological innovation and the core values of empathy and human connection, we can ensure that AI serves as a transformative force for good in global healthcare.

The path forward requires collaboration across sectors, among policymakers, developers, healthcare professionals, and patients. Transparent regulation, ethical deployment, and continuous human oversight are key to ensuring that AI serves as a tool that strengthens healthcare systems and promotes global health equity.

The post Harnessing AI for a Healthier World: Ensuring AI Enhances, Not Undermines, Patient Care appeared first on Unite.AI.
