DeepSeek - Recap

It was only a month ago that DeepSeek disrupted the AI world with its brilliant optimization of the NVIDIA GPUs the team had to work with. The results were, and still are, revolutionary, not just because of what DeepSeek accomplished, but also because the team released its work to the world in the true spirit of open source, so that everyone could benefit.

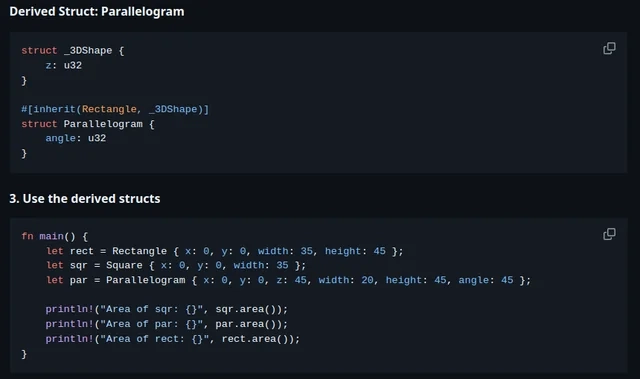

This is a cursory look at the technical aspects of what the team accomplished and how:

Artificial Intelligence has long been driven by raw computational power, with companies investing billions in larger, more powerful hardware to push the limits of AI capabilities. DeepSeek has disrupted this trend by taking a different approach, one that emphasizes optimization over brute force. Their techniques allowed them to train DeepSeek-V3, a 671-billion-parameter language model, using roughly a tenth of the GPU hours Meta reported for training its Llama 3.1 405B model, which signals a fundamental shift in how AI hardware can be used.
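The compute comparison can be checked with a few lines of Python. The sketch uses GPU-hour totals as published: about 2.788 million H800 GPU hours for DeepSeek-V3 in DeepSeek's technical report, and 30.84 million H100 GPU hours for Llama 3.1 405B in Meta's model card. The variable names are only illustrative.

```python
# Compare reported training compute, measured in GPU hours.
# Figures as published: DeepSeek-V3 technical report and Meta's Llama 3.1 model card.
DEEPSEEK_V3_GPU_HOURS = 2.788e6     # H800 GPU hours, full training run
LLAMA_31_405B_GPU_HOURS = 30.84e6   # H100-80GB GPU hours, as listed in the model card

ratio = LLAMA_31_405B_GPU_HOURS / DEEPSEEK_V3_GPU_HOURS
print(f"Llama 3.1 405B used about {ratio:.1f}x the GPU hours of DeepSeek-V3")
```

GPU hours are only a rough yardstick: the GPUs differ (H800 versus H100), and DeepSeek-V3 is a mixture-of-experts model that activates about 37 billion of its 671 billion parameters for each token, whereas Llama 3.1 405B is a dense model that uses all of its parameters.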

The Traditional Approach: CUDA and Standard GPU Processing

For years, AI models have been trained using NVIDIA’s CUDA (Compute Unified Device Architecture), a parallel computing platform that lets developers harness GPU power efficiently. CUDA provides a high-level programming interface to the underlying GPU hardware, making it easier to write AI training and inference code. Effective as it is, this abstraction has a cost: the compiler, not the engineer, decides exactly which low-level instructions the GPU executes, which limits how finely engineers can tune performance.
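CUDA's central abstraction is a grid of thread blocks: the programmer writes one function (a kernel), and the GPU runs it across many threads, each of which computes its own data index. The Python sketch below is a hypothetical CPU-side simulation of that indexing scheme for a vector addition. The names `vector_add_kernel` and `launch` are illustrative and not part of any NVIDIA API; real kernels are written in CUDA C++ and run on the GPU.

```python
# CPU-side simulation of how a CUDA kernel launch maps threads to data.
# Illustrative only: each "thread" here is just a loop iteration.

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body of one simulated CUDA thread, mirroring the usual
    i = blockIdx.x * blockDim.x + threadIdx.x idiom."""
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Run every (block, thread) pair in turn; a GPU would run them in parallel."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

n = 10
a = list(range(n))
b = [10 * x for x in a]
out = [0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceiling division: enough blocks to cover n
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

In real CUDA the loops in `launch` do not exist: the threads run concurrently, and `blockIdx.x`, `blockDim.x`, and `threadIdx.x` are built-in variables. How the kernel body becomes machine instructions is left to the toolchain (nvcc lowers CUDA C++ to PTX, which is then assembled into machine code for a specific GPU), and that compiler-controlled layer is where PTX sits.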

DeepSeek’s Revolutionary Strategy: The Shift to PTX

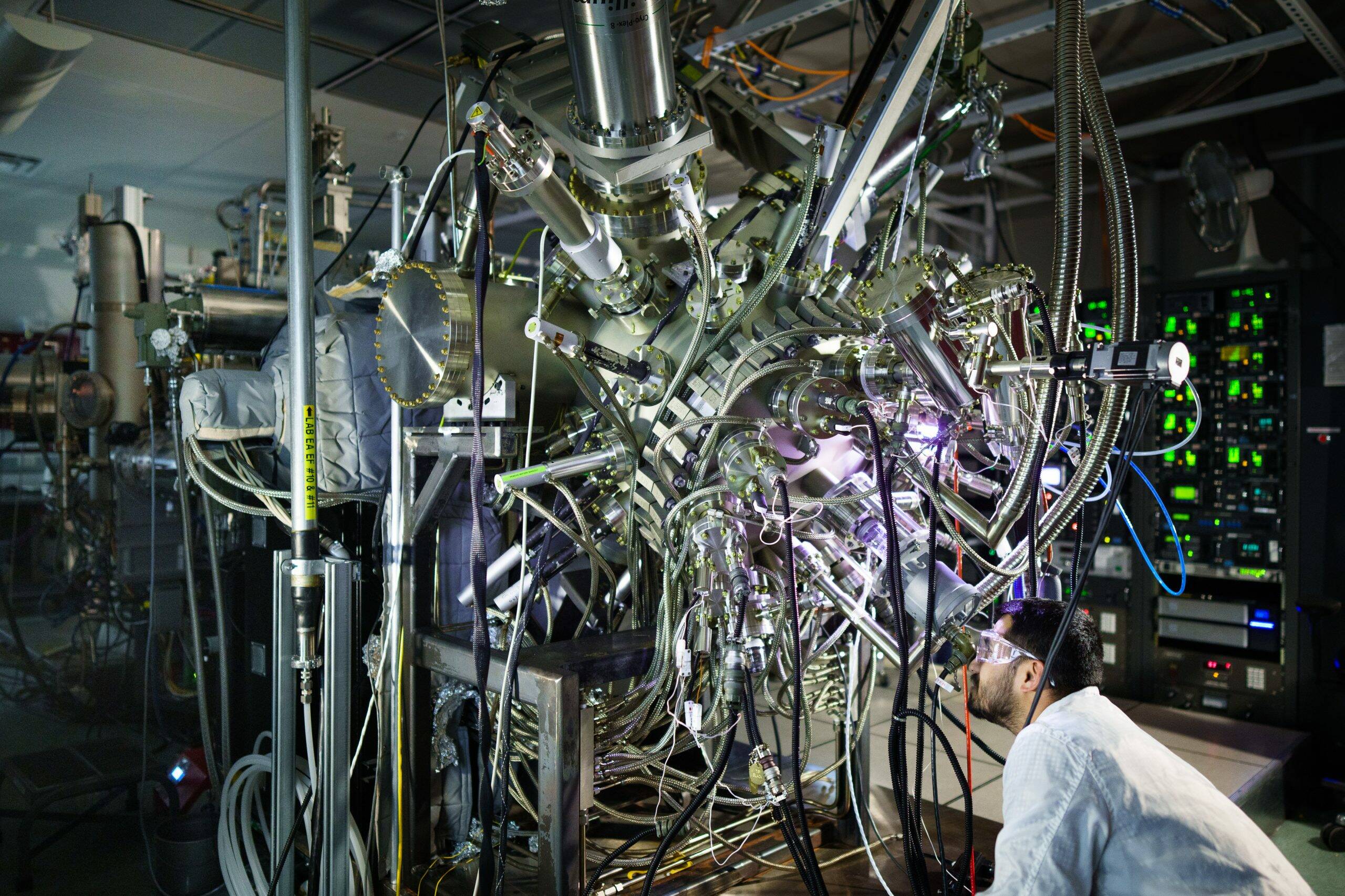

DeepSeek took a different path by dropping below CUDA’s usual C++ level and writing parts of its code in PTX (Parallel Thread Execution). PTX is NVIDIA’s low-level virtual instruction set: the assembly-like intermediate language that CUDA C++ compiles to before it is translated into a specific GPU’s machine code. Rather than abandoning CUDA entirely, DeepSeek used customized PTX instructions in performance-critical code, such as its communication kernels, gaining finer control over what the GPU executes and running AI workloads more efficiently. This move is akin to a master mechanic reconfiguring an engine at the component level rather than simply tuning its performance through traditional means.

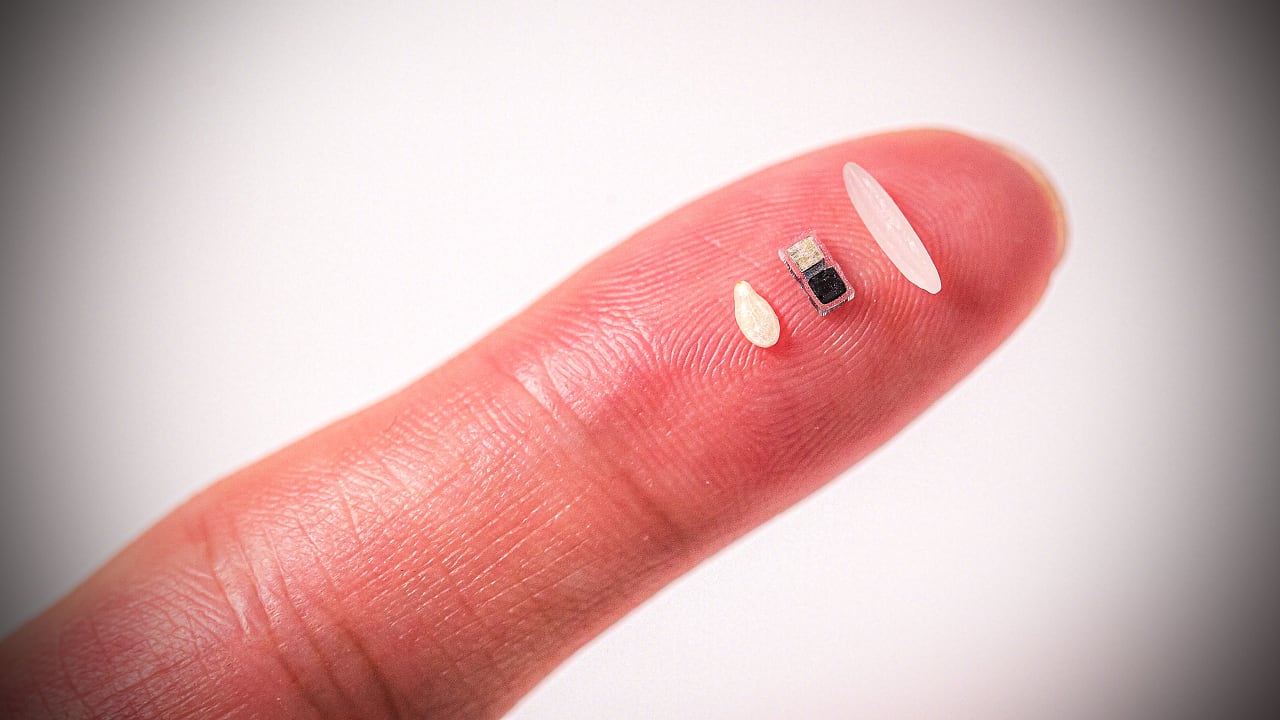

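Resource Reallocation: Unlocking New Potential

Beyond instruction-level optimizations, DeepSeek changed how each GPU’s resources were put to work. An NVIDIA H800 has 132 streaming multiprocessors (SMs), its core processing units, and DeepSeek dedicated 20 of them solely to communication between GPUs, both within and across servers. This was a software decision rather than a physical modification of the chips, and it mattered because the H800, the export version of the H100 sold in China, has reduced NVLink interconnect bandwidth. The reserved SMs effectively created a high-speed express lane for data, letting transfers between GPUs overlap with computation instead of stalling it. As a result, training made far better use of the hardware, cutting idle time without compromising model quality.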
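Why would giving up 20 of 132 SMs make training faster? A toy model helps: if data transfers and computation run at the same time, a training step takes as long as the slower of the two rather than their sum. The Python sketch below assumes, purely for illustration, that compute time scales inversely with the number of compute SMs and that communication speed is set by the network links rather than by how many SMs drive them. The 1.0 s and 0.8 s step times are made-up numbers, and this is not DeepSeek's scheduling code; their report describes a more elaborate pipeline called DualPipe.

```python
# Toy model of overlapping communication with computation on one GPU.
# SM counts come from the article; the step times are hypothetical.
TOTAL_SMS = 132  # streaming multiprocessors on an H800
COMM_SMS = 20    # SMs reserved for communication

def step_time_serial(compute_s, comm_s):
    """No overlap: all SMs compute, then the GPU waits for data transfers."""
    return compute_s + comm_s

def step_time_overlapped(compute_s, comm_s, total_sms=TOTAL_SMS, comm_sms=COMM_SMS):
    """Overlap: reserved SMs handle transfers while the rest keep computing.
    Assumes compute time scales with 1 / (number of compute SMs)."""
    compute_on_remaining_sms = compute_s * total_sms / (total_sms - comm_sms)
    return max(compute_on_remaining_sms, comm_s)

compute_s, comm_s = 1.0, 0.8  # hypothetical per-step times in seconds
serial = step_time_serial(compute_s, comm_s)
overlapped = step_time_overlapped(compute_s, comm_s)
print(f"SMs reserved: {COMM_SMS / TOTAL_SMS:.1%}")
print(f"serial: {serial:.2f} s, overlapped: {overlapped:.2f} s, "
      f"speedup: {serial / overlapped:.2f}x")
```

The gain shrinks if communication is small or cannot actually be overlapped; the point is only that reserving a slice of the chip for data movement can pay for itself when transfers would otherwise stall computation.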

The Cost-Saving Implications

One of the most striking aspects of DeepSeek’s innovation is the potential for cost reduction. Training massive AI models traditionally requires extensive computational resources and correspondingly large budgets. DeepSeek’s technical report, by contrast, puts the final training run of DeepSeek-V3 at about 2.788 million H800 GPU hours, or roughly $5.6 million at an assumed rental price of $2 per GPU hour. That figure excludes earlier research and experiments as well as the cost of the hardware itself, but it is still strikingly low for a model of this scale. This development could open the door for smaller AI startups and research institutions to compete with tech giants, leveling the playing field in AI innovation.
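DeepSeek's technical report frames the cost of DeepSeek-V3 as GPU hours multiplied by a rental price. The short Python calculation below reproduces that arithmetic from the report's per-phase GPU-hour figures and its assumed $2 per H800 GPU hour; the function name `training_cost_usd` is just illustrative.

```python
# Rental-equivalent training cost: GPU hours multiplied by an hourly price.
# GPU-hour figures and the $2/hour price are those stated in the DeepSeek-V3
# technical report; they cover the final training run only, not prior research,
# experiments, staff, or the purchase of the hardware.
GPU_HOURS_BY_PHASE = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
USD_PER_GPU_HOUR = 2.0  # assumed H800 rental price

def training_cost_usd(gpu_hours, usd_per_gpu_hour=USD_PER_GPU_HOUR):
    """Cost of renting the given number of GPU hours."""
    return gpu_hours * usd_per_gpu_hour

total_hours = sum(GPU_HOURS_BY_PHASE.values())
total_cost = training_cost_usd(total_hours)
for phase, hours in GPU_HOURS_BY_PHASE.items():
    print(f"{phase:>18}: {hours:>9,} GPU hours  ${training_cost_usd(hours) / 1e6:.3f}M")
print(f"{'total':>18}: {total_hours:>9,} GPU hours  ${total_cost / 1e6:.3f}M")
```

Because the price is an assumed rental rate, the result is best read as the compute cost of one training run, not the total cost of building the model.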

Industry Reactions and Market Disruptions

DeepSeek’s breakthrough did not go unnoticed. On January 27, 2025, shortly after DeepSeek’s R1 model drew worldwide attention, NVIDIA’s stock fell about 17% in a single day as investors speculated that companies might reduce their reliance on expensive, high-powered GPUs. However, rather than threatening hardware manufacturers, DeepSeek’s advancements could signal a broader industry shift toward efficiency-focused AI development, potentially driving demand for new GPU architectures that favor custom optimization over sheer processing power.

The Future of AI Optimization

DeepSeek’s work challenges conventional thinking in AI hardware. Instead of simply adding computational power, the team demonstrated that careful hardware and software optimization can yield large gains in efficiency. Their success raises important questions: What other untapped optimizations exist in AI hardware? How can smaller companies adopt similar efficiency-focused approaches? And will this paradigm shift eventually lead to an AI revolution driven by accessibility and affordability?

By redefining how AI training is approached, DeepSeek has not only introduced a faster, cheaper, and more efficient methodology but also set the stage for a future where AI innovation is dictated not by who has the most powerful hardware, but by who uses it most intelligently.

Ben Santora - February 2025
