Compare All LLMs in One Playground: Introducing Agenta's AI Model Hub

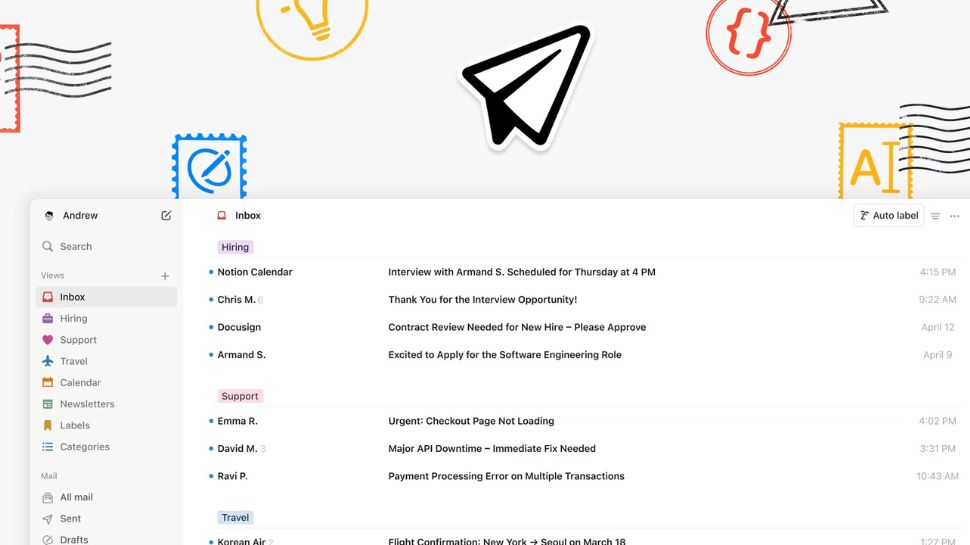

This article is part of Agenta Launch Week (April 14-18, 2025), where we're announcing new features daily.

What is Agenta?

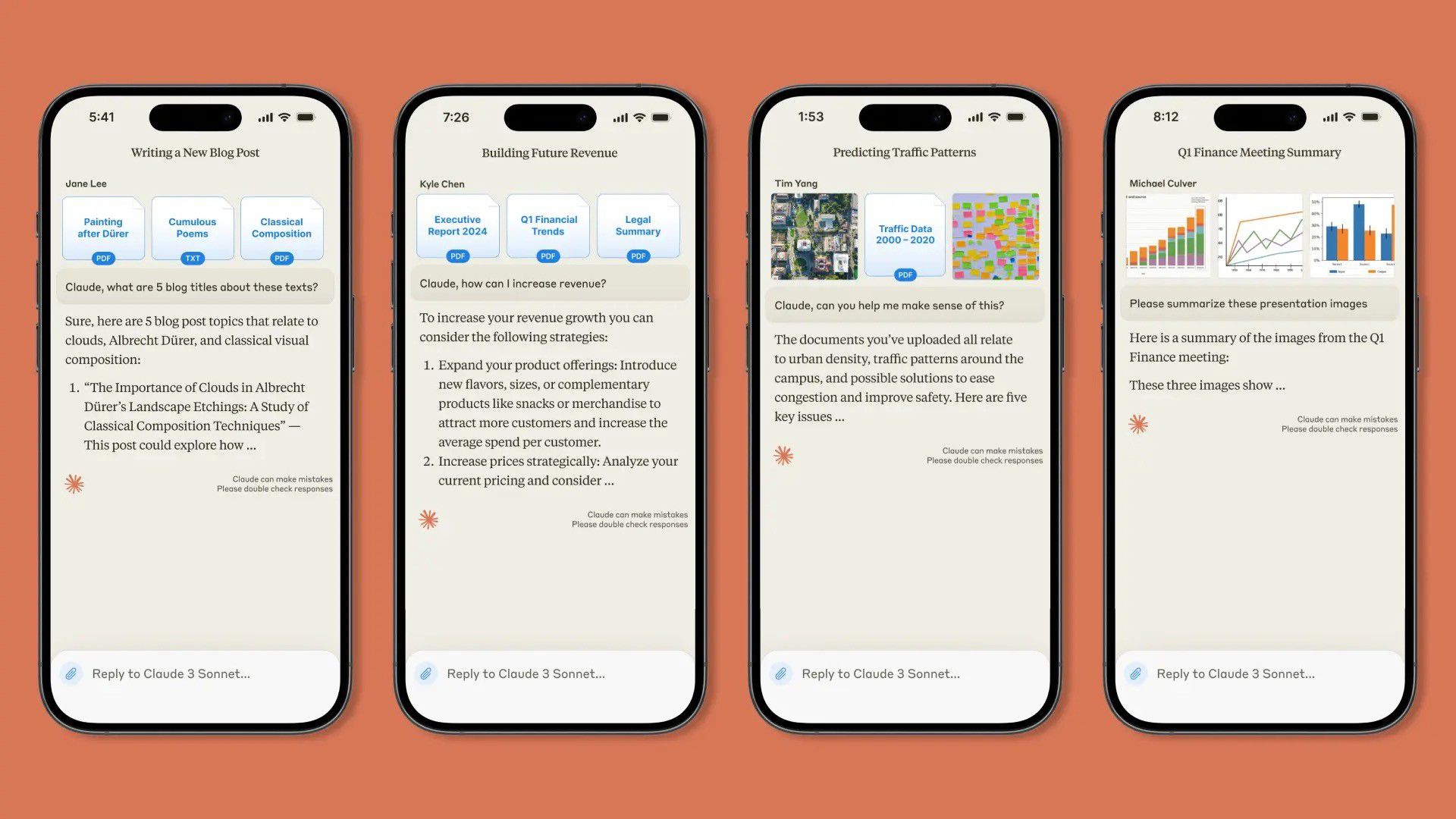

Agenta is an open-source platform built for AI engineers and teams working with large language models. Our playground, evaluation tools, and deployment solutions help streamline the entire LLM application development process. As an open-source tool, Agenta gives developers complete control over their AI infrastructure while providing enterprise-grade features for teams of all sizes.

Introducing AI Model Hub

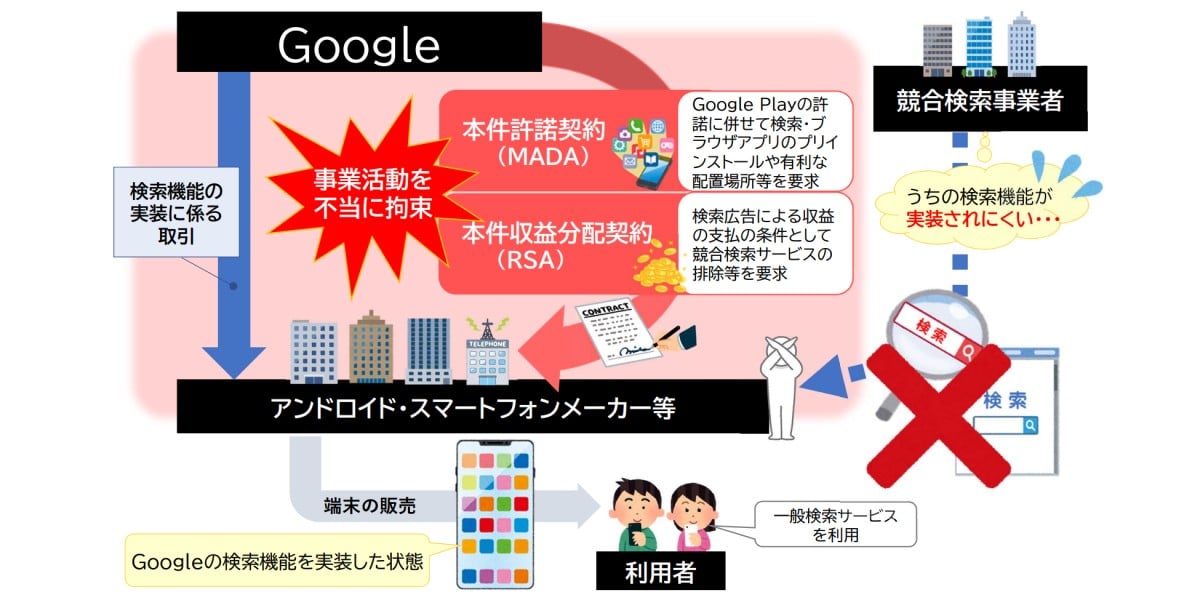

When working with LLMs, comparing different models is essential. Until now, our users faced several challenges:

You had fine-tuned models for your specific use cases but couldn't use them in Agenta's playground or evaluations.

Many of you run self-hosted models for privacy, security, or cost reasons – through Ollama or dedicated servers – but couldn't connect them to Agenta.

Enterprise users who rely on Azure OpenAI and AWS Bedrock had to switch between platforms when using Agenta.

Teams had no way to share model access without sharing raw API keys, which created security risks and complicated collaboration.

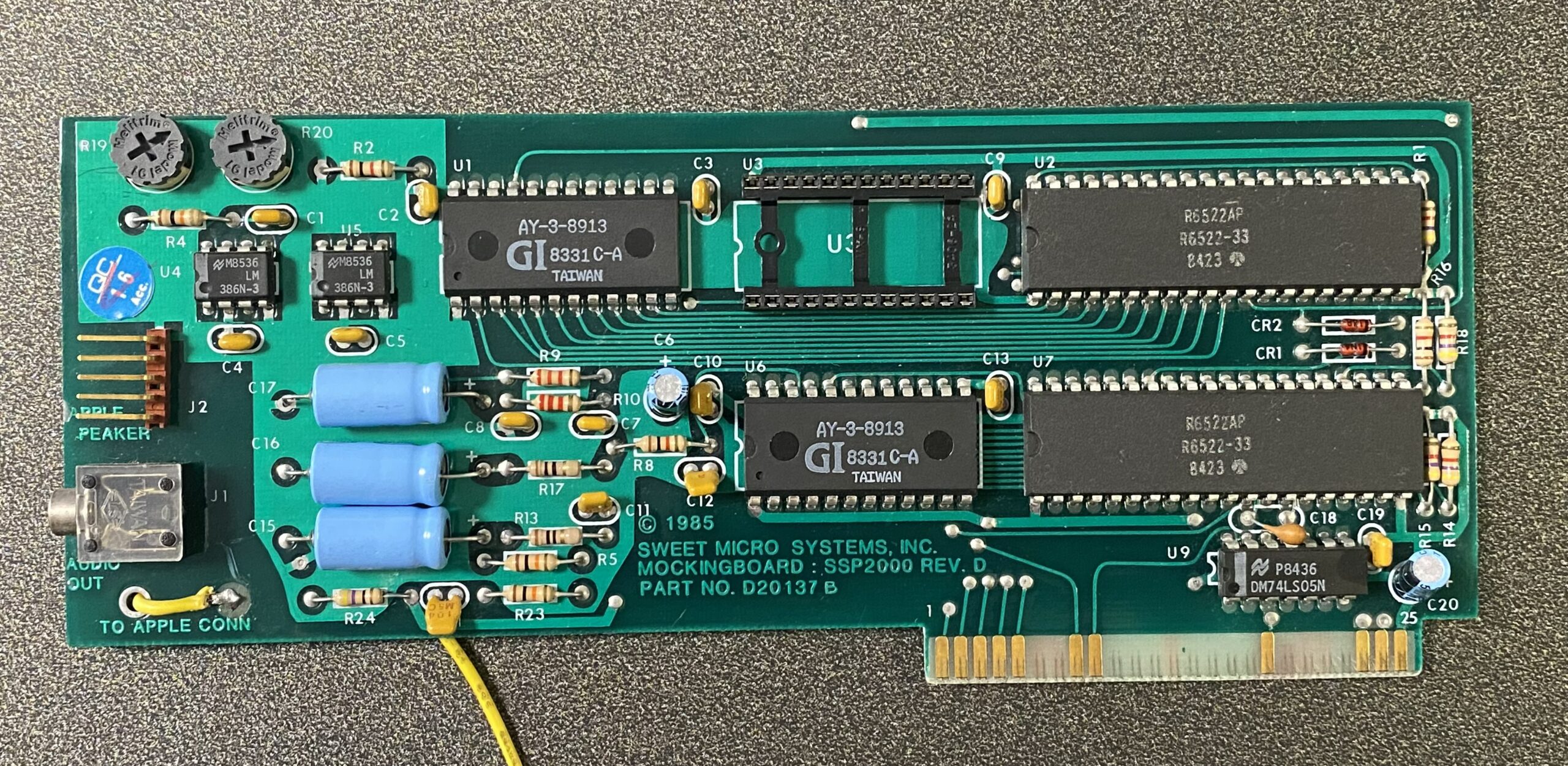

Introducing Model Hub: Your central connection point

Model Hub gives you a central place in Agenta to manage all your model connections:

Connect any model: Azure OpenAI, AWS Bedrock, self-hosted models, fine-tuned models, and any other model served through an OpenAI-compatible API.

Configure once, use everywhere: Set up your models once and use them in playground experiments, evaluations, and deployments.

Secure team sharing: Give your team access to models without sharing API keys. Control permissions per model.

Consistent experience: Use the same Agenta interface for all your models.
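An "OpenAI-compatible API" generally means a server that accepts the same `POST /chat/completions` request shape as OpenAI's own API: a bearer token in the `Authorization` header and a JSON body with `model` and `messages`. The sketch below builds (but does not send) such a request with only the Python standard library. It illustrates the protocol, not Agenta's client code; `build_chat_request` is a hypothetical helper, and while `http://localhost:11434/v1` is Ollama's default OpenAI-compatible base URL, the model name `llama3` and the placeholder key `ollama` are assumptions for the example.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build, but do not send, a request for an OpenAI-compatible chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # OpenAI-compatible servers expect a bearer token; a local Ollama server ignores its value.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Assumed example: a local Ollama server on its default port with a pulled "llama3" model.
req = build_chat_request("http://localhost:11434/v1", "ollama", "llama3", "Say hello.")
# Calling urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

Because only the base URL, key, and model name change between providers that speak this protocol, one request shape can cover hosted, self-hosted, and fine-tuned models alike.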

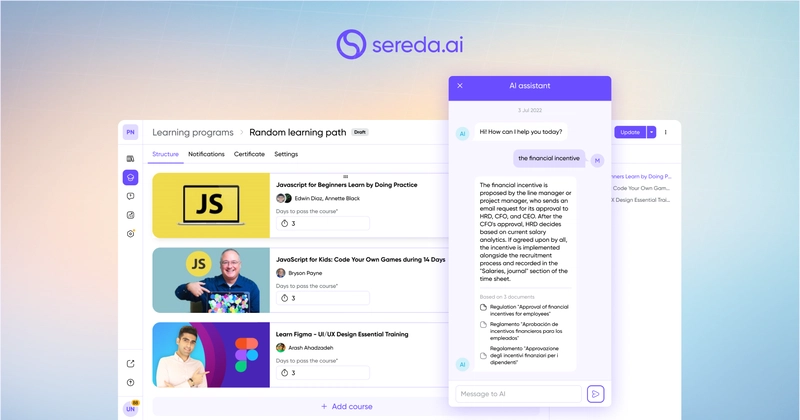

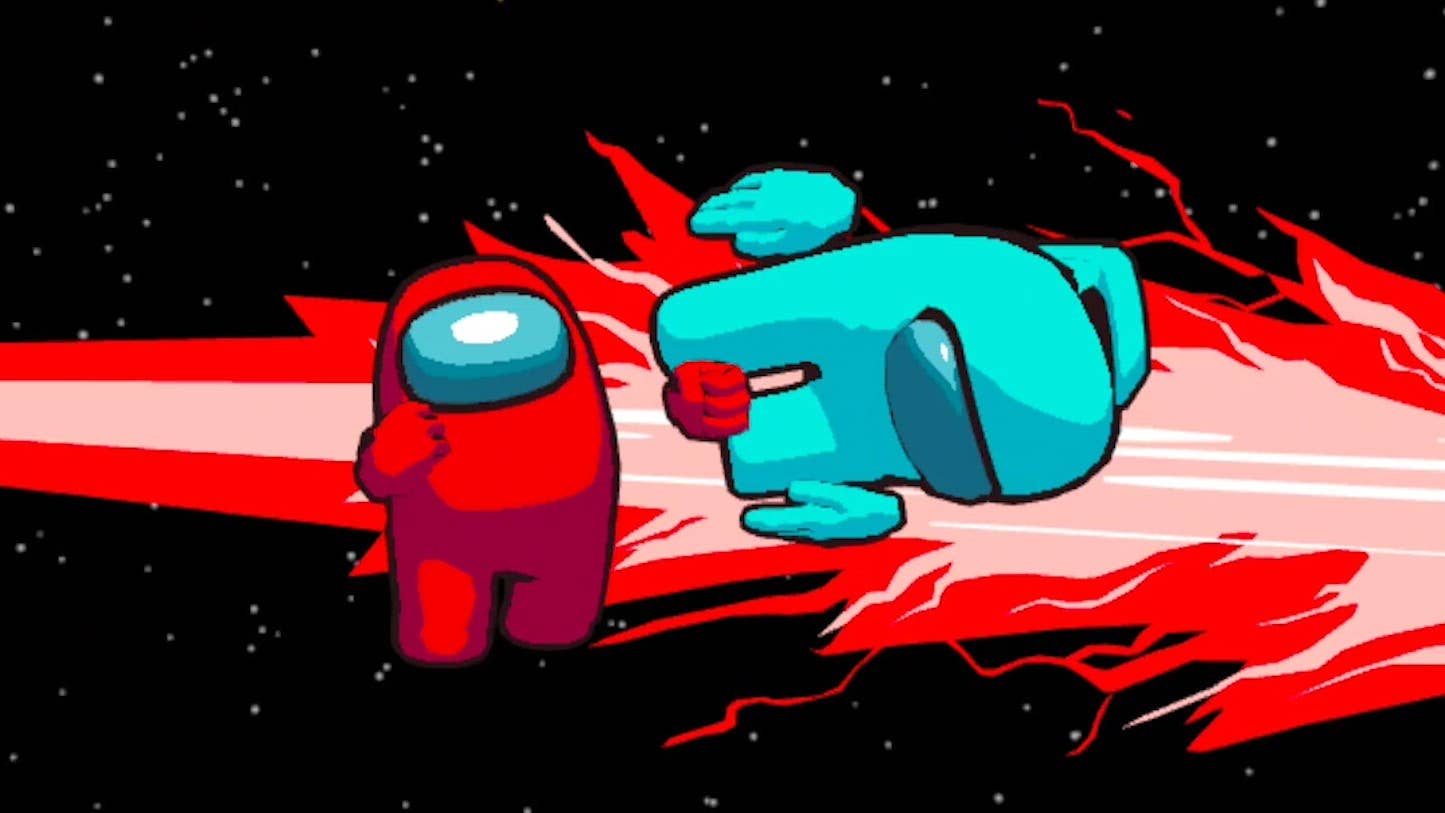

How it works

Model Hub lives under Configuration in the Agenta interface. From there, you can:

- Select your provider (OpenAI, Azure, AWS, Ollama, etc.)

- Enter your API keys

- Connect to your self-hosted models

- Share access with teammates

Once configured, your models appear throughout Agenta. Run comparisons in the playground, test performance in evaluations, and deploy with confidence – using models from any provider.
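The "configure once, compare anywhere" idea can be sketched as a list of model connections plus a function that sends one prompt to each of them. Everything here is hypothetical: `ModelConnection`, `compare`, and `fake_complete` are illustrative names, not part of Agenta's SDK, and the model names are examples. API keys are intentionally absent from the connection record, mirroring the point that teammates use configured models without handling raw keys.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ModelConnection:
    """Hypothetical record for one model configured in a central hub."""
    name: str      # label the team sees, e.g. "local-llama"
    provider: str  # e.g. "openai", "azure", "bedrock", "ollama"
    base_url: str
    model: str
    # No api_key field: in this sketch, keys stay server-side and are
    # resolved by whatever implements `complete`, never by the caller.


def compare(
    prompt: str,
    connections: List[ModelConnection],
    complete: Callable[[ModelConnection, str], str],
) -> Dict[str, str]:
    """Send the same prompt to every configured model; return outputs keyed by name."""
    return {conn.name: complete(conn, prompt) for conn in connections}


hub = [
    ModelConnection("gpt-4o", "openai", "https://api.openai.com/v1", "gpt-4o"),
    ModelConnection("local-llama", "ollama", "http://localhost:11434/v1", "llama3"),
]


def fake_complete(conn: ModelConnection, prompt: str) -> str:
    # Stand-in for a real API call so the sketch runs offline.
    return f"[{conn.provider}:{conn.model}] {prompt}"


results = compare("Summarize TLS in one sentence.", hub, fake_complete)
```

Injecting `complete` keeps the comparison logic independent of any one provider, which is the same separation that lets a configured model be reused in playground runs, evaluations, and deployments.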

Security is built-in

When building Model Hub, we prioritized the security of your API keys and credentials:

Your model keys and credentials are encrypted at rest in our database and protected in transit with TLS. We never log these sensitive details, and they are held in memory only as long as needed to process a request.
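Keeping credentials out of logs is often enforced mechanically rather than by convention alone. As a generic illustration (not Agenta's code), a Python `logging.Filter` can scrub anything that looks like a key before a record is emitted. The two regular expressions are assumptions about credential formats (OpenAI-style `sk-...` keys and `Bearer <token>` values) and would need extending for other providers; `redact` and `RedactSecretsFilter` are hypothetical names.

```python
import logging
import re

# Assumed credential shapes: OpenAI-style "sk-..." keys and "Bearer <token>" values.
_SK_KEY = re.compile(r"sk-[A-Za-z0-9_\-]{8,}")
_BEARER = re.compile(r"(?i)(bearer\s+)\S+")


def redact(text: str) -> str:
    """Replace credential-like substrings with a fixed marker."""
    text = _SK_KEY.sub("[REDACTED]", text)
    return _BEARER.sub(r"\1[REDACTED]", text)


class RedactSecretsFilter(logging.Filter):
    """Logging filter that scrubs credentials before any handler formats the record."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Merge msg % args first so secrets passed as arguments are caught too.
        record.msg = redact(record.getMessage())
        record.args = ()
        return True  # keep the record, just with secrets masked


logger = logging.getLogger("model_hub_sketch")
logger.addFilter(RedactSecretsFilter())
```

A filter attached to a logger only sees records logged directly on that logger; attaching it to a handler instead also covers records propagated from child loggers.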

Currently, access to models is managed at the project level, with all team members working on a project able to use the configured models. Every access to these credentials requires proper authentication with tokens tied to specific users and projects.

For Business and Enterprise customers, Role-Based Access Control (RBAC) provides additional security by letting you control exactly who on your team can view or modify model configurations.
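The access rules described in the two paragraphs above (project-scoped credentials, tokens tied to a user and a project, and RBAC layered on top for Business and Enterprise plans) can be modeled as a small authorization check. This is a minimal sketch under assumed names: `Role`, `AccessToken`, `PERMISSIONS`, and `authorize` are hypothetical, and the role set and policy are illustrative rather than Agenta's actual roles.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # Hypothetical role names for illustration only.
    VIEWER = "viewer"
    EDITOR = "editor"
    ADMIN = "admin"


# Hypothetical policy: every project member may use configured models,
# editors and admins may view configurations, and only admins may modify them.
PERMISSIONS = {
    "use_model": {Role.VIEWER, Role.EDITOR, Role.ADMIN},
    "view_config": {Role.EDITOR, Role.ADMIN},
    "modify_config": {Role.ADMIN},
}


@dataclass(frozen=True)
class AccessToken:
    """Hypothetical authenticated identity: one user acting within one project."""
    user_id: str
    project_id: str
    role: Role


def authorize(token: AccessToken, project_id: str, action: str) -> bool:
    """Allow an action only inside the token's own project and only for permitted roles."""
    if token.project_id != project_id:
        return False  # credentials are scoped per project
    return token.role in PERMISSIONS.get(action, set())  # unknown actions are denied
```

Without RBAC, the policy collapses to the first check alone: any authenticated member of the project can use its configured models.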

We've designed this system from the ground up with security best practices, ensuring your valuable API keys and model access credentials remain protected.

What's next?

The AI Model Hub is just the first feature in our Launch Week. Four more announcements are coming in the next few days to improve your LLM development workflow.

Ready to try it? Check out Agenta on GitHub or log in to your Agenta account to set up your Model Hub.

Stay tuned for tomorrow's announcement!

⭐ Star Agenta

Consider giving us a star! It helps us grow our community and gets Agenta in front of more developers.
