Build an AI Agent That Understands SQL and PDFs Using MindsDB

In this tutorial, you'll learn how to build a production-ready AI agent that can answer questions using both structured data from a PostgreSQL database and unstructured content from PDF files. We'll use MindsDB, an open-source platform designed to integrate LLM-powered agents with databases and external knowledge sources like documents.

Whether you're developing internal tools, research assistants, or customer support bots, this step-by-step guide will show you how to combine retrieval-augmented generation (RAG), SQL, and language models efficiently.

Tools Used

- MindsDB – agent orchestration and SQL-based pipeline

- PostgreSQL – structured data source

- PDF documents – unstructured data

- OpenAI GPT / Hugging Face Transformers – response generation

Objective

We’ll build an AI agent that can:

- Answer natural language questions by converting them into SQL queries

- Retrieve and summarize content from uploaded PDFs

- Integrate with platforms such as Slack or web interfaces

Step 1: Install MindsDB

You can run MindsDB locally using Docker or use the cloud-hosted version.

Run with Docker:

    docker run -p 47334:47334 mindsdb/mindsdb

This starts MindsDB and exposes port 47334, where you can reach the built-in SQL Editor and the REST API and configure external connectors.
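The REST API can also be called from a script. The sketch below assumes a local instance on port 47334 and the `/api/sql/query` endpoint, which takes a JSON body with a single `query` field. The helper names `build_sql_request` and `run_sql` are illustrative and not part of MindsDB.

```python
import json
import urllib.request

# Assumption: MindsDB was started locally with the Docker command above.
MINDSDB_URL = "http://127.0.0.1:47334"


def build_sql_request(query: str, base_url: str = MINDSDB_URL) -> urllib.request.Request:
    """Prepare (but do not send) a POST request carrying one SQL statement."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/sql/query",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_sql(query: str, base_url: str = MINDSDB_URL) -> dict:
    """Send the statement and return the decoded JSON response."""
    request = build_sql_request(query, base_url)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `run_sql("SHOW DATABASES;")` should return a JSON document describing the result. Any statement in the rest of this tutorial can be sent the same way instead of being typed into the SQL Editor.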

Step 2: Create a Conversational Model

We start by creating a language model capable of natural dialogue, powered by OpenAI or Hugging Face via LangChain.

    CREATE MODEL conversational_model
    PREDICT answer
    USING
        engine = 'langchain',
        openai_api_key = 'YOUR_OPENAI_API_KEY',
        model_name = 'gpt-4',
        mode = 'conversational',
        user_column = 'question',
        assistant_column = 'answer',
        prompt_template = 'Answer the user input in a helpful way',
        max_tokens = 100,
        temperature = 0,
        verbose = true;

To verify the model is active:

    DESCRIBE conversational_model;
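Model creation may take a moment, so a script usually has to poll before using the model. The sketch below is one way to do that under two assumptions: the `DESCRIBE` result comes back as a table-shaped dictionary with `column_names` and `data` keys, and it includes a status column whose final values are `complete` or `error`. The `run_query` argument is any hypothetical callable that sends a SQL string to MindsDB and returns the decoded response.

```python
import time
from typing import Callable, Optional


def extract_status(response: dict) -> Optional[str]:
    """Return the value of the status column from a table-shaped response.

    Returns None when there is no status column or no rows.
    """
    columns = [str(c).lower() for c in response.get("column_names", [])]
    rows = response.get("data", [])
    if "status" not in columns or not rows:
        return None
    return rows[0][columns.index("status")]


def wait_until_complete(
    run_query: Callable[[str], dict],
    model_name: str,
    attempts: int = 30,
    delay_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll DESCRIBE until the model reports 'complete' or 'error'."""
    status = None
    for _ in range(attempts):
        status = extract_status(run_query(f"DESCRIBE {model_name};"))
        if status in ("complete", "error"):
            return status
        sleep(delay_seconds)
    raise TimeoutError(f"{model_name} still reports status {status!r}")
```

Passing the query function and the sleep function as arguments keeps the loop easy to test without a running server.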

Step 3: Add Skills to Your AI Agent

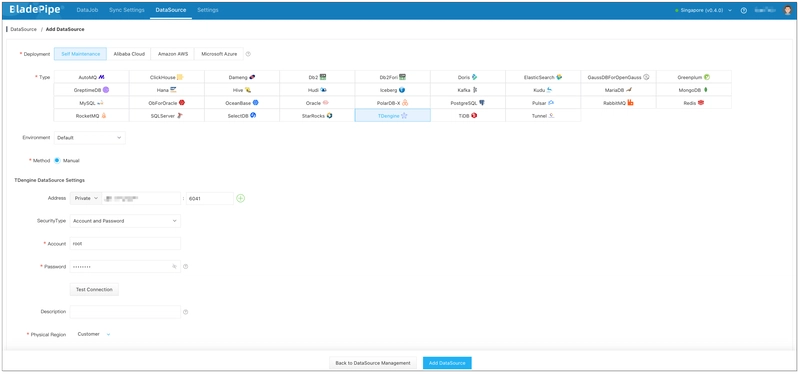

3.1: Text-to-SQL Skill for Structured Data

Connect MindsDB to your PostgreSQL database:

    CREATE DATABASE datasource
    WITH ENGINE = "postgres",
    PARAMETERS = {
        "user": "demo_user",
        "password": "demo_password",
        "host": "samples.mindsdb.com",
        "port": "5432",
        "database": "demo",
        "schema": "demo_data"
    };

Define a skill for querying this data:

    CREATE SKILL text2sql_skill
    USING
        type = 'text2sql',
        database = 'datasource',
        tables = ['house_sales'],
        description = 'Contains US house sales data from 2007 to 2015';
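To make the text-to-SQL step concrete, the sketch below runs the kind of query such a skill might produce for a question about 2015 sales. Everything specific in it is invented for illustration: the `sale_date` and `price` columns, the three rows, and the generated SQL. The real `house_sales` schema and the SQL the agent writes may differ. SQLite stands in for PostgreSQL so the example is self-contained.

```python
import sqlite3

# Hypothetical schema and rows, used only to illustrate the idea.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE house_sales (id INTEGER PRIMARY KEY, sale_date TEXT, price REAL)"
)
conn.executemany(
    "INSERT INTO house_sales (sale_date, price) VALUES (?, ?)",
    [("2014-11-02", 310000.0), ("2015-03-15", 275000.0), ("2015-09-30", 420000.0)],
)

question = "How many houses were sold in 2015?"

# The kind of statement a text-to-SQL step could derive from the question.
generated_sql = (
    "SELECT COUNT(*) FROM house_sales "
    "WHERE sale_date >= '2015-01-01' AND sale_date < '2016-01-01'"
)

sold_in_2015 = conn.execute(generated_sql).fetchone()[0]
print(question, "->", sold_in_2015)
```

The skill's `tables` and `description` settings are what give the language model enough context to choose the right table for a question like this one.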

3.2: Knowledge Base Skill for PDFs

Step 1: Create an embedding model:

    CREATE MODEL embedding_model_hf
    PREDICT embedding
    USING
        engine = 'langchain_embedding',
        class = 'HuggingFaceEmbeddings',
        input_columns = ["content"];

Step 2: Create a knowledge base:

    CREATE KNOWLEDGE BASE my_knowledge_base
    USING
        model = embedding_model_hf;

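Step 3: Insert PDF content:

Upload the PDF to MindsDB first. Uploaded files are exposed as tables in the files database, so my_file_name below is a placeholder for the name you gave the upload.

    INSERT INTO my_knowledge_base
    SELECT * FROM files.my_file_name;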

Step 4: Create a skill linked to the knowledge base:

    CREATE SKILL kb_skill
    USING
        type = 'knowledge_base',
        source = 'my_knowledge_base',
        description = 'PDF report with analysis on house pricing trends';
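The retrieval idea behind a knowledge base can be sketched in a few lines: split the document into chunks, turn each chunk into a vector, and return the chunks closest to the question. The code below is a toy illustration only. Word counts stand in for a learned embedding model, and none of this reflects how MindsDB or HuggingFaceEmbeddings work internally; all function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 12) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]
```

In a real pipeline the retrieved chunks are passed to the language model as context, which is the "retrieval-augmented" part of RAG.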

Step 4: Create the AI Agent

Now, connect the conversational model with the two skills.

    CREATE AGENT ai_agent
    USING
        model = 'conversational_model',
        skills = ['text2sql_skill', 'kb_skill'];

Test the agent:

    SELECT question, answer
    FROM ai_agent
    WHERE question = 'How many houses were sold in 2015?';
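When the question comes from a user rather than being typed by hand, it has to be quoted safely before it is placed inside the statement. The sketch below assumes the standard SQL convention of doubling single quotes inside a string literal, which is worth verifying against your MindsDB version. Both helper names are hypothetical.

```python
def sql_string_literal(value: str) -> str:
    """Quote a Python string as a SQL string literal by doubling single quotes."""
    return "'" + value.replace("'", "''") + "'"


def agent_query(agent_name: str, question: str) -> str:
    """Build the SELECT statement shown above for an arbitrary question.

    agent_name is inserted as-is, so it should come from trusted code,
    not from user input.
    """
    return (
        f"SELECT question, answer FROM {agent_name} "
        f"WHERE question = {sql_string_literal(question)};"
    )
```

For example, `agent_query("ai_agent", "What's the average price?")` keeps the apostrophe from ending the string early.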

Optional: Deploy the Agent to Slack

Connect MindsDB to Slack with a bot token and an app token, then attach the agent to a chatbot:

    CREATE DATABASE mindsdb_slack
    WITH ENGINE = 'slack',
    PARAMETERS = {
        "token": "xoxb-xxx",
        "app_token": "xapp-xxx"
    };

    CREATE CHATBOT ai_chatbot
    USING
        database = 'mindsdb_slack',
        agent = 'ai_agent';

The agent is now live on Slack, and your team can message it directly.

Automating Knowledge Base Updates

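To refresh your knowledge base regularly, schedule a job. Here data_source is a placeholder for the table that receives new content, and the LAST keyword limits each run to rows added since the previous run:

    CREATE JOB update_kb_hourly AS (
        INSERT INTO my_knowledge_base (
            SELECT * FROM data_source WHERE id > LAST
        )
    ) EVERY hour;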
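The incremental filter in this job follows a common high-water-mark pattern: remember the largest id processed so far and pick up only rows above it on the next run. The sketch below shows that general pattern in plain Python. It is not MindsDB's internal implementation, and the class name and starting value are assumptions made for the example.

```python
from typing import Iterable


class IncrementalReader:
    """Return only rows whose id is greater than the highest id seen so far."""

    def __init__(self, start_after: int = 0) -> None:
        # Assumed starting point; rows with id <= start_after are skipped.
        self.last_id = start_after

    def fetch_new(self, rows: Iterable[dict]) -> list[dict]:
        """Select unseen rows in id order and advance the high-water mark."""
        new_rows = sorted(
            (row for row in rows if row["id"] > self.last_id),
            key=lambda row: row["id"],
        )
        if new_rows:
            self.last_id = new_rows[-1]["id"]
        return new_rows
```

Because each run advances the mark, rows are inserted into the knowledge base once rather than on every hourly run. This only works when ids increase monotonically as rows are added.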

Example Questions You Can Ask

- "How many houses were sold in California in 2012?"

- "Summarize the key insights from the PDF report."

- "What is the average price of houses sold per state in 2015?"

Use Cases

- Internal AI assistants for querying data systems

- Customer support bots with product manuals in PDF format

- AI dashboards for real-time analytics and summaries

- Slack bots that act as knowledge agents for your team

Conclusion

By combining MindsDB with OpenAI or Hugging Face models, you can rapidly develop intelligent agents that understand both structured and unstructured data sources. With just a few SQL-like commands, we’ve created an end-to-end AI workflow that can be deployed across business-critical applications.

For enterprise use cases, the same pattern extends to document understanding, sales analytics, research summarization, and beyond.
