AI-Assisted Testing with Browseruse


Why High-Level AI-Powered Testing?

Automated UI testing is a critical part of modern QA. However, writing stable UI tests—especially for legacy applications or hard-to-automate interfaces—can be:

- Time-consuming

- Fragile due to frequent DOM changes or lack of test IDs

- Manual and resource-intensive to maintain

AI-powered high-level automation offers a compelling alternative:

- Reduces QA effort for sanity and smoke tests

- Avoids the need for writing detailed selectors or test data

- Enables automation of legacy or dynamically rendered applications

This blog demonstrates how existing open-source tools can be combined to build flexible and AI-enhanced automation workflows—without relying on expensive commercial platforms.

Tools Used

This approach integrates:

- Browseruse: An LLM-driven browser controller that uses Playwright under the hood

- Playwright: A robust framework for browser automation

- LLMs (Gemini): Interpret the natural-language QA tasks

In this example, we use Gemini 2.0 Flash via LangChain because it is available on a free tier. However, you can swap in alternatives such as GPT-4, Claude 3, or local models served through Ollama.
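Swapping providers can be reduced to a configuration choice. The sketch below assumes a Browser Use version that accepts LangChain chat models, as the script in this post does (more recent releases may expect their own model wrappers instead, so check your installed version). The `make_llm` helper and the `LLM_PROVIDER` / `LLM_MODEL` environment variables are hypothetical; the module and class names are the standard LangChain integration packages, each installed separately.

```python
import importlib
import os

# Provider name -> (LangChain integration module, chat model class).
# Each package (e.g. langchain-openai) must be installed before it is used.
LLM_PROVIDERS = {
    "gemini": ("langchain_google_genai", "ChatGoogleGenerativeAI"),
    "openai": ("langchain_openai", "ChatOpenAI"),
    "anthropic": ("langchain_anthropic", "ChatAnthropic"),
    "ollama": ("langchain_ollama", "ChatOllama"),
}


def make_llm(provider: str, model: str):
    """Build a LangChain chat model for the given provider.

    The import is done lazily, so only the selected provider's package
    needs to be installed.
    """
    try:
        module_name, class_name = LLM_PROVIDERS[provider]
    except KeyError:
        raise ValueError(f"Unknown LLM provider: {provider!r}") from None
    module = importlib.import_module(module_name)
    return getattr(module, class_name)(model=model)


def llm_from_env():
    """Hypothetical env-driven selection, defaulting to this post's Gemini setup."""
    return make_llm(
        os.getenv("LLM_PROVIDER", "gemini"),
        os.getenv("LLM_MODEL", "gemini-2.0-flash"),
    )
```

With this in place, `llm = make_llm("ollama", "llama3.1")` would replace the hard-coded Gemini client without touching the task prompts.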

Available Alternatives in the Market

Several commercial tools offer similar AI-powered testing capabilities, but what differentiates Browseruse + LLM is:

- Fully open source agent

- Compatible with any LLM provider

- Easy to extend with custom component logic

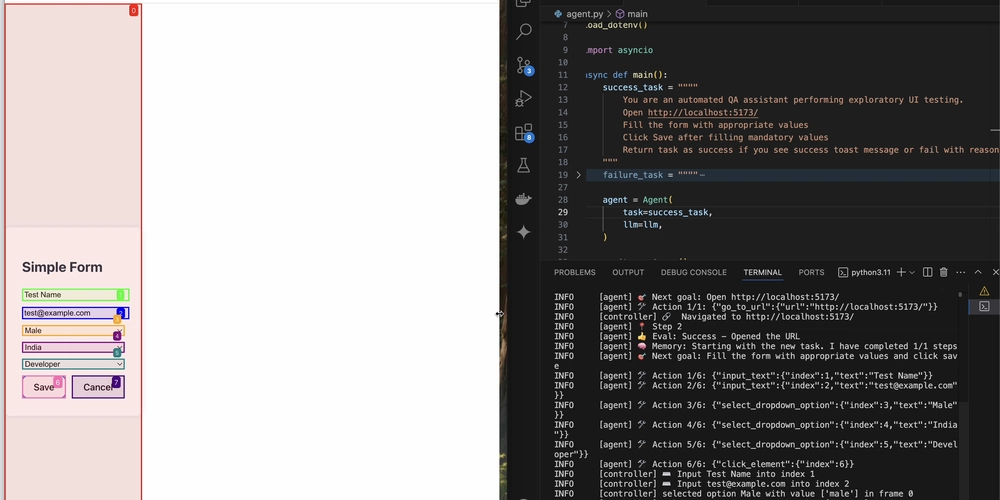

Real Example: One Script, No Test Data, No Selectors

Consider a test application running locally at http://localhost:5173/, which includes a form submission page.

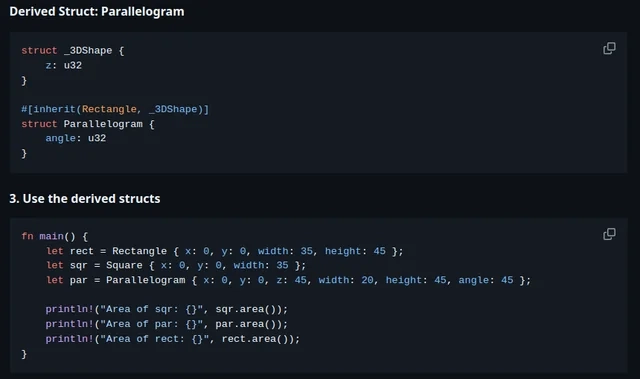

Below is a complete script to test it using Browseruse and Gemini:

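```python
import asyncio

from browser_use import Agent
from dotenv import load_dotenv
from langchain_google_genai import ChatGoogleGenerativeAI

# Load GOOGLE_API_KEY from .env first: the Gemini client reads the key
# when it is constructed, so load_dotenv() must run before it.
load_dotenv()

llm = ChatGoogleGenerativeAI(model="gemini-2.0-flash")


async def main():
    # The whole test is a natural-language task: no selectors, no test data.
    success_task = """
    You are an automated QA assistant performing exploratory UI testing.
    Open http://localhost:5173/
    Fill the form with appropriate values
    Click Save after filling mandatory values
    Return the task as success if you see a success toast message; otherwise fail and give the reasons.
    """

    # The agent drives the browser through Playwright, asking the LLM for each next action.
    agent = Agent(
        task=success_task,
        llm=llm,
    )
    await agent.run()


if __name__ == "__main__":
    asyncio.run(main())
```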

This script requires:

- No test-specific data

- No HTML selectors or test IDs

- No manual handling of dropdowns or wait conditions

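Instead, the LLM reads the current page at each step and decides the next action (filling a field, opening a dropdown, clicking Save), and Browseruse executes that action through Playwright until the task succeeds or fails.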
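To use such a run in CI, the agent's free-text answer has to become a pass/fail signal. A minimal sketch, assuming a hypothetical convention (not part of Browser Use) where the prompt asks the agent to end with a `RESULT: PASS` or `RESULT: FAIL` line; `VERDICT_INSTRUCTION`, `parse_verdict` and `exit_code` are illustrative names.

```python
import re
from typing import Optional

# Hypothetical convention: append this sentence to the task prompt so the
# agent ends its final answer with a machine-readable verdict.
VERDICT_INSTRUCTION = (
    "End your final answer with a single line reading "
    "'RESULT: PASS' or 'RESULT: FAIL'."
)

# Case-insensitive, so "result: pass" is accepted too.
_VERDICT_RE = re.compile(r"RESULT:\s*(PASS|FAIL)", re.IGNORECASE)


def parse_verdict(final_text: Optional[str]) -> bool:
    """Return True only if the last RESULT line in the answer says PASS.

    A missing or unparseable answer counts as a failure, so a run that
    never finished cannot be reported as green.
    """
    if not final_text:
        return False
    matches = _VERDICT_RE.findall(final_text)
    return bool(matches) and matches[-1].upper() == "PASS"


def exit_code(final_text: Optional[str]) -> int:
    """Map the verdict to a process exit code (0 = pass, 1 = fail)."""
    return 0 if parse_verdict(final_text) else 1
```

In `main()`, you could append `VERDICT_INSTRUCTION` to `success_task`, keep the result of `history = await agent.run()`, and return `exit_code(history.final_result())`, assuming your Browser Use version exposes `final_result()` on the returned history (verify against your installed version). Treating "no verdict" as a failure is deliberate: an agent that gave up or ran out of steps should not pass.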

You can also write a negative test case to validate failed submissions:

```python
failure_task = """
You are an automated QA assistant performing exploratory UI testing.
Open http://localhost:5173/
Validate whether the form enforces its mandatory values
Return the task as success if you see an error toast message when validation fails.
"""
```
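Both prompts share the same shape: a role, a URL, a few steps, and a success condition. A small template keeps new checks consistent; the sketch below is a hypothetical helper (`build_qa_task` is not part of Browser Use) that produces strings equivalent to the two tasks above.

```python
# Shared preamble taken from the prompts in this post.
ROLE = "You are an automated QA assistant performing exploratory UI testing."


def build_qa_task(url: str, steps: list[str], success_condition: str) -> str:
    """Compose a natural-language QA task: role, start URL, steps, oracle."""
    lines = [
        ROLE,
        f"Open {url}",
        *steps,
        f"Return the task as success if {success_condition}.",
    ]
    return "\n".join(lines)


BASE_URL = "http://localhost:5173/"

success_task = build_qa_task(
    BASE_URL,
    ["Fill the form with appropriate values", "Click Save after filling mandatory values"],
    "you see a success toast message; otherwise fail and give the reasons",
)

failure_task = build_qa_task(
    BASE_URL,
    ["Validate whether the form enforces its mandatory values"],
    "you see an error toast message when validation fails",
)
```

Adding a new smoke check then means writing one more `build_qa_task(...)` call rather than a new script.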

Challenges and Considerations

Prompt Design

- Complex UI components like Ant Design’s rc-select or dynamic dropdowns require detailed prompt crafting or custom handlers.
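One lightweight form of "detailed prompt crafting" is a catalogue of plain-language hints appended only to tasks that touch difficult widgets. This is a sketch under assumptions: the `COMPONENT_HINTS` keys, the hint wording, and `with_component_hints` are hypothetical and would need tuning per application.

```python
# Hypothetical hint catalogue: plain-language instructions for widgets the
# agent tends to struggle with. The wording is an assumption to tune per app.
COMPONENT_HINTS = {
    "antd-select": (
        "Dropdowns built with Ant Design Select (rc-select) are not native "
        "<select> elements. Click the field to open it, then click the option "
        "in the list that appears as a separate overlay. If the field accepts "
        "typing, type part of the option text and press Enter."
    ),
    "dynamic-dropdown": (
        "For dropdowns whose options load after typing or scrolling, wait "
        "until the option list appears before choosing, and reopen it if it closes."
    ),
}


def with_component_hints(task: str, components: list[str]) -> str:
    """Append the hints for the listed components to a task prompt."""
    hints = [COMPONENT_HINTS[name] for name in components]
    if not hints:
        return task
    return task.rstrip() + "\n\nUI hints:\n" + "\n".join(f"- {h}" for h in hints)
```

Keeping hints out of the base prompt means simple forms stay short and cheap, while only forms with such widgets pay for the extra instructions.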

Execution Time

- LLM-based test runs tend to be slower than hand-coded automation scripts, particularly for long forms or multi-step flows.
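Two common mitigations are a wall-clock budget per task and running independent tasks concurrently so that LLM latency overlaps. A minimal sketch using only `asyncio`; `run_timed`, `run_suite` and `TimedRun` are hypothetical names, and each entry is a zero-argument function returning an awaitable, such as `lambda: Agent(task=success_task, llm=llm).run()`.

```python
import asyncio
import time
from dataclasses import dataclass
from typing import Awaitable, Callable, Optional


@dataclass
class TimedRun:
    name: str
    seconds: float
    timed_out: bool
    result: Optional[object] = None


async def run_timed(
    name: str, make_run: Callable[[], Awaitable[object]], timeout_s: float
) -> TimedRun:
    """Run one task with a wall-clock budget and record how long it took."""
    start = time.perf_counter()
    try:
        result = await asyncio.wait_for(make_run(), timeout=timeout_s)
        return TimedRun(name, time.perf_counter() - start, False, result)
    except asyncio.TimeoutError:
        # wait_for cancels the task; the run is reported as timed out.
        return TimedRun(name, time.perf_counter() - start, True)


async def run_suite(
    tasks: dict[str, Callable[[], Awaitable[object]]], timeout_s: float
) -> list[TimedRun]:
    """Run independent tasks concurrently; results keep the input order."""
    return list(
        await asyncio.gather(*(run_timed(n, f, timeout_s) for n, f in tasks.items()))
    )
```

Concurrency is a trade-off: each agent typically drives its own browser, and parallel LLM calls can hit provider rate limits (free tiers in particular), so a small degree of parallelism is usually the safer default.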

Cost

- While Gemini Flash is available on a free tier, models like GPT-4 or Claude 3 incur usage-based API costs.
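Because an agent calls the model once per step, cost scales with steps times tokens per step. The back-of-the-envelope sketch below uses placeholder token counts and prices, not any provider's real rates, and assumes a constant token count per step, which is a simplification (prompts usually grow as history accumulates).

```python
def estimate_run_cost(
    steps: int,
    input_tokens_per_step: int,
    output_tokens_per_step: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Rough cost of one agent run: total tokens times the per-million-token price."""
    input_tokens = steps * input_tokens_per_step
    output_tokens = steps * output_tokens_per_step
    return (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000


# Placeholder numbers only: 20 steps, ~8,000 prompt tokens per step
# (page state plus history), ~300 output tokens per step,
# $3 / $15 per million input / output tokens.
cost = estimate_run_cost(20, 8_000, 300, 3.0, 15.0)
print(f"~${cost:.2f} per run")  # prints "~$0.57 per run"
```

Multiplying by the number of tasks and runs per day gives a rough budget; substitute the current prices from your provider's pricing page.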

Benefits

Using this approach, you can:

- Test new UIs without creating test scripts

- Add smoke checks for legacy flows without deep DOM knowledge

- Detect form-level bugs early in the development cycle

All with the robustness of Playwright and the intelligence of LLMs.

What’s Next

- Implement screenshot comparisons for visual regressions

- Generate test reports linked to user stories

- Auto-record successful runs as replayable test cases

- Fine-tune prompts for app-specific language and behavior
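As a starting point for reports linked to user stories, each task's outcome can be tagged with a story key and grouped. A minimal sketch: `StoryResult`, `build_report`, and story keys such as `STORY-1` are hypothetical, standing in for whatever tracker and result format you use.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class StoryResult:
    story_id: str  # hypothetical user-story key from your tracker
    task_name: str
    passed: bool
    notes: str = ""


def build_report(results: list[StoryResult]) -> str:
    """Group task results by user story and summarise pass/fail counts as JSON."""
    stories: dict[str, dict] = {}
    for r in results:
        entry = stories.setdefault(r.story_id, {"passed": 0, "failed": 0, "tasks": []})
        entry["passed" if r.passed else "failed"] += 1
        entry["tasks"].append(asdict(r))
    return json.dumps(stories, indent=2, sort_keys=True)
```

For example, `build_report([StoryResult("STORY-1", "form_success", True), StoryResult("STORY-1", "form_validation", False, "no error toast")])` yields one entry for `STORY-1` with one pass and one failure.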
