Understanding the CAP Theorem in Distributed Systems

Introduction to Distributed Systems

A distributed system is a network of independent computers that work together to appear as a single coherent system. These systems are widely used to enhance scalability, availability, and fault tolerance. Examples include cloud computing, microservices architectures, and database clusters.

Why Use Distributed Systems?

The primary reasons for using distributed systems include:

- Scalability: Handling increasing loads by distributing tasks across multiple nodes.

- Fault Tolerance: Ensuring that a failure in one part of the system does not bring the entire system down.

- Availability: Providing uninterrupted services despite hardware or software failures.

- Performance: Reducing latency by processing data closer to the user or workload.

However, designing distributed systems comes with challenges, particularly maintaining consistency, availability, and partition tolerance simultaneously. This is where the CAP theorem comes into play.

The CAP Theorem

The CAP theorem, proposed as a conjecture by Eric Brewer in 2000 and formally proved by Seth Gilbert and Nancy Lynch in 2002, states that a distributed system can guarantee at most two of the following three properties at the same time:

- Consistency (C) – Every read receives the most recent write or an error.

- Availability (A) – Every request receives a non-error response, though the response may not reflect the most recent write.

- Partition Tolerance (P) – The system continues to function despite network partitions.

Why is the CAP Theorem Important?

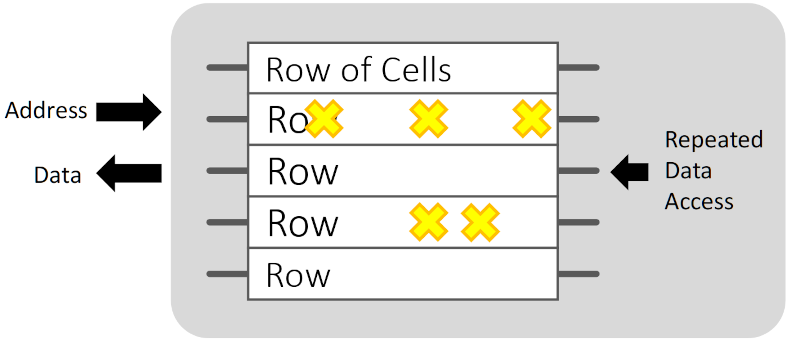

In a distributed system, network failures (partitions) are inevitable due to various reasons such as hardware failures, data center outages, or network congestion. When a partition occurs, the system must choose between consistency and availability.
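The choice can be made concrete with a toy model. The sketch below is illustrative only: the `Replica` class, the `"CP"`/`"AP"` mode labels, and the helpers `make_pair` and `set_partition` are hypothetical names invented for this example, and a two-node pair is far simpler than any real database. It shows only the behavior described above: during a partition, a replica either refuses requests (favoring consistency) or answers with possibly stale data (favoring availability).

```python
class Unavailable(Exception):
    """Raised when a replica refuses to answer rather than risk inconsistency."""


class Replica:
    def __init__(self, name, mode):
        self.name = name
        self.mode = mode            # "CP" or "AP" (illustrative labels)
        self.value = None
        self.peer = None
        self.partitioned = False    # True while the link to the peer is down

    def write(self, value):
        if self.partitioned and self.mode == "CP":
            # CP: reject the write because the peer cannot be updated.
            raise Unavailable(f"{self.name}: write rejected during partition")
        self.value = value
        if not self.partitioned:
            self.peer.value = value  # replicate synchronously when connected

    def read(self):
        if self.partitioned and self.mode == "CP":
            # CP: refuse to answer because the local copy may be out of date.
            raise Unavailable(f"{self.name}: read rejected during partition")
        return self.value            # AP: may be stale during a partition


def make_pair(mode):
    """Build two replicas that replicate to each other."""
    a, b = Replica("a", mode), Replica("b", mode)
    a.peer, b.peer = b, a
    return a, b


def set_partition(a, b, down):
    """Cut (down=True) or heal (down=False) the link between the replicas."""
    a.partitioned = b.partitioned = down


# AP pair: both replicas keep answering, but they diverge.
a, b = make_pair("AP")
a.write("v1")
set_partition(a, b, True)
a.write("v2")
print(a.read(), b.read())   # v2 v1
```

With `make_pair("CP")`, the same `a.write("v2")` call raises `Unavailable` instead, so no client ever observes two different values.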

Trade-offs in the CAP Theorem

Since a system cannot guarantee all three properties simultaneously, architects must make trade-offs based on business needs.

1. CP (Consistency + Partition Tolerance)

- Prioritizes data consistency over availability.

- During a network partition, the system may become unavailable to ensure that all nodes have consistent data.

- Example: Google Spanner, a globally distributed SQL database that favors consistency over availability when a partition occurs.
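One common way to get CP behavior is to require a majority quorum before accepting a write. The following is a minimal sketch under stated assumptions, not how any particular database implements it: replicas are plain dictionaries, `reachable` is a set of replica indices the coordinator can currently contact, and `quorum_write` and `QuorumUnavailable` are hypothetical names.

```python
class QuorumUnavailable(Exception):
    """Raised when too few replicas are reachable to accept a write safely."""


def quorum_write(replicas, reachable, key, value):
    """Apply the write only if a strict majority of replicas is reachable.

    replicas:  list of dicts, one per replica (assumed in-memory stores)
    reachable: set of indices into `replicas` on this side of the partition
    Returns the number of replicas that acknowledged the write.
    """
    majority = len(replicas) // 2 + 1
    if len(reachable) < majority:
        raise QuorumUnavailable(
            f"{len(reachable)} of {len(replicas)} replicas reachable, "
            f"need {majority}"
        )
    for i in reachable:
        replicas[i][key] = value
    return len(reachable)


replicas = [{}, {}, {}]

# Majority side of a partition (replicas 0 and 1): the write succeeds.
acks = quorum_write(replicas, {0, 1}, "balance", 100)
print(acks)  # 2

# Minority side (replica 2 alone): the write is refused.
try:
    quorum_write(replicas, {2}, "balance", 250)
except QuorumUnavailable as err:
    print("rejected:", err)
```

Because two disjoint groups cannot both contain a majority, at most one side of a partition can accept writes. The minority side becomes unavailable, which is the price paid for consistency.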

2. AP (Availability + Partition Tolerance)

- Prioritizes availability over strict consistency.

- Data may be stale for a brief period, but the system remains operational.

- Example: NoSQL databases such as Cassandra and DynamoDB, which offer eventual consistency by default and can be configured for stronger consistency on individual requests.
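Eventual consistency means that replicas may disagree for a while but converge once they can exchange updates again. The sketch below uses last-write-wins reconciliation, which is one possible strategy among several. The `put` and `sync` helpers and the caller-supplied integer timestamps are assumptions made for illustration; production systems must also deal with clock skew and concurrent writes.

```python
def put(store, key, value, ts):
    """Accept a write locally, keeping it only if it is newer than what we hold.

    store: dict mapping key -> (timestamp, value)
    ts:    caller-supplied integer timestamp (an assumption of this sketch)
    """
    current = store.get(key)
    if current is None or ts > current[0]:
        store[key] = (ts, value)


def sync(a, b):
    """Exchange entries so both stores end up holding the newest version."""
    for key in set(a) | set(b):
        candidates = [s[key] for s in (a, b) if key in s]
        newest = max(candidates, key=lambda entry: entry[0])
        a[key] = newest
        b[key] = newest


a, b = {}, {}

put(a, "cart", "book", ts=1)
sync(a, b)                        # replicas agree before the partition

# Partition: only replica `a` sees the next write.
put(a, "cart", "book+pen", ts=2)
print(b["cart"])                  # (1, 'book'), stale but still served

# Partition heals: one sync pass brings the replicas back together.
sync(a, b)
print(a["cart"] == b["cart"])     # True
```

Both replicas stay available throughout. The cost is the window in which `b` returns the older value.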

3. CA (Consistency + Availability) - Theoretical Only

- A system that is both 100% consistent and always available is only possible if there is no network partition.

- Since real-world networks can fail, achieving this in a distributed system is practically impossible.

How to Choose a Strategy Based on Use Case?

| Requirement | Recommended CAP Strategy | Example Systems |
|---|---|---|
| Financial Transactions | CP (Consistency + Partition Tolerance) | Google Spanner, RDBMS |
| Real-Time Data Processing | AP (Availability + Partition Tolerance) | Cassandra, DynamoDB |
| Distributed Caching | AP (Availability + Partition Tolerance) | Memcached, Redis |

Suggested Diagrams

- CAP Theorem Triangle - A triangular diagram showing trade-offs among Consistency, Availability, and Partition Tolerance.

- Partition Tolerance Example - Illustrating a network failure scenario causing a system to choose between consistency and availability.

- Comparison of CP vs. AP Systems - Visual representation of how different systems behave under network partitions.

Conclusion

The CAP theorem provides a fundamental understanding of how distributed systems operate under failure conditions. No system can achieve perfect consistency, availability, and partition tolerance simultaneously, so system architects must make trade-offs based on business and technical requirements. Understanding these trade-offs helps in designing robust and scalable distributed architectures.

By carefully choosing between CP and AP approaches based on the application's needs, businesses can create resilient systems tailored to their use cases.
