Troubleshooting High Load Averages: How Remote SSH Execution Saved My Build Server

Unexpected issues can hit a server at any moment and leave you scrambling for a fix.

I recently faced one when my build server became unresponsive and every attempt to SSH into it simply hung.

Rebooting was an option, but it risked destroying the evidence of what had gone wrong.

Instead, I used SSH itself to diagnose and resolve the issue remotely.

What followed was a journey through high load averages and runaway cron jobs, and the satisfaction of bringing a server back from the brink, all without a single reboot.

Here is how remote SSH execution became my lifeline.

The easy way out was to reboot, but I needed to understand the cause so I could keep it from happening again.

So I took a more investigative approach: instead of opening an interactive login session, I passed individual commands to ssh, which runs each one in a non-interactive, non-login shell and then disconnects.

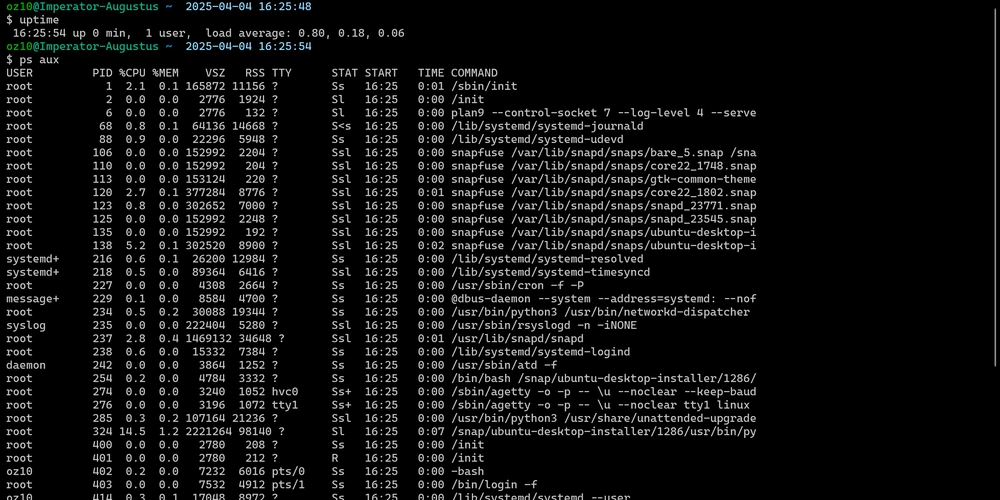

I first ran the uptime command to check the system's load average. To my surprise, it exceeded 12,000, meaning thousands of processes were running or waiting at once. That level of stress explained the unresponsiveness.

ssh user@server "uptime"
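As a sketch of how this first check could be made a little more robust, the snippet below wraps the ssh call with a connection timeout and pulls the 1-minute figure out of the uptime output. The helper names `remote` and `load_1min` are hypothetical, `user@server` is the same placeholder used above, and the 10-second timeout is an arbitrary choice; `-T`, `ConnectTimeout`, and `BatchMode` are standard OpenSSH options.

```shell
#!/bin/sh
# Hypothetical helper: run one command on the remote host.
# user@server is a placeholder, as in the article.
remote() {
  # -T            do not allocate a pseudo-terminal
  # ConnectTimeout give up if connecting takes longer than 10 seconds
  # BatchMode      fail instead of prompting for a password
  ssh -T -o ConnectTimeout=10 -o BatchMode=yes user@server "$@"
}

# Hypothetical helper: read a line of uptime output on stdin and
# print only the 1-minute load average.
load_1min() {
  sed -E 's/.*load averages?: *([0-9.]+),? .*/\1/'
}

# Usage (not executed here):
#   remote uptime | load_1min
```

Parsing happens on the local machine, so the struggling server only has to run uptime itself.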

With the load average sky-high, I needed to dig deeper.

I used the ps command to list the running processes and quickly discovered the root of the problem: over 9,000 instances of a cron job were running simultaneously. It appeared that the job wasn't completing before the next one started, causing them to stack up.

ssh user@server "ps aux"
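With thousands of processes, raw ps output is hard to read by eye. One possible way to spot the offender, assuming the remote ps accepts the standard `-e` and `-o comm=` options, is to ask for command names only and count them locally. The function name `top_commands` is made up for this sketch.

```shell
#!/bin/sh
# Hypothetical helper: read one command name per line on stdin and print
# the most frequent names with their counts, highest first.
# Optional first argument: how many names to show (default 5).
top_commands() {
  sort | uniq -c | sort -rn | head -n "${1:-5}"
}

# Usage (not executed here); user@server is a placeholder:
#   ssh user@server "ps -e -o comm=" | top_commands
```

The sorting and counting run on the local machine, which keeps the extra work on the overloaded server to a single ps call.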

Armed with this information, I knew I had to stop the runaway processes.

I used the killall command to terminate all instances of the problematic cron job.

This was a crucial step in bringing the server back to a manageable state.

ssh user@server "killall cronjobname"
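Because killall matches by name, it can be worth previewing what a name would match before sending any signals. The sketch below is one way to do that from ps output; `pids_for` is a hypothetical helper, and `cronjobname` is the same placeholder as in the command above.

```shell
#!/bin/sh
# Hypothetical helper: read "PID COMMAND" lines on stdin and print the PIDs
# whose command name is exactly the given name (no partial matches).
pids_for() {
  awk -v name="$1" '$2 == name { print $1 }'
}

# Usage (not executed here); user@server is a placeholder:
#   ssh user@server "ps -e -o pid= -o comm=" | pids_for cronjobname | wc -l
# If the count looks right, the kill itself is the command from the article:
#   ssh user@server "killall cronjobname"
```

The exact comparison means a process with a longer but similar name is not counted, which gives a quick sanity check on how many processes the kill should affect.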

After a few minutes, the load average began to drop, and I was finally able to log in to the server. With access restored, I disabled the cron job to prevent it from causing further issues.
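Disabling a job usually means commenting out its line in the crontab. The following is a minimal sketch of that step, assuming the job lives in a user crontab and can be identified by its name; `disable_job` is a hypothetical helper, and the schedule shown in the usage comment is invented for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read a crontab on stdin and comment out every line
# that mentions the given job name. Lines that are already comments and
# lines for other jobs pass through unchanged.
disable_job() {
  awk -v job="$1" '
    index($0, job) && $0 !~ /^[[:space:]]*#/ { print "#" $0; next }
    { print }
  '
}

# Usage (not executed here), on the server once login works again:
#   crontab -l | disable_job cronjobname | crontab -
# An entry such as
#   */1 * * * * /usr/local/bin/cronjobname
# would become
#   #*/1 * * * * /usr/local/bin/cronjobname
```

If the job is re-enabled later, wrapping it in a lock (for example util-linux `flock -n`) is one common way to keep a slow run from overlapping with the next one.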

This experience reinforced the importance of understanding and utilizing SSH commands for remote troubleshooting.

Key Takeaways

- Don't reboot without investigating: When a server becomes unresponsive, it's tempting to simply reboot it. However, this can make it difficult to diagnose the root cause of the problem.

- Use non-login SSH commands for troubleshooting: By passing a single command to ssh, you can gather information about a server's state without starting an interactive login session, which may hang on an overloaded machine.

- Check load average and processes: The uptime and ps commands can provide valuable insights into a server's load average and running processes.

- Identify and terminate problematic processes: If you find a process that's causing issues, use commands like killall to terminate it and prevent further problems.

These takeaways can be applied to various scenarios in DevOps, system administration, and even personal computing.

This experience was not just about troubleshooting a high load average; it was a practical exercise in incident response, a critical component of modern DevOps practices.

Used together, these steps let you diagnose and resolve an incident quickly, which reduces downtime and improves overall system reliability.
