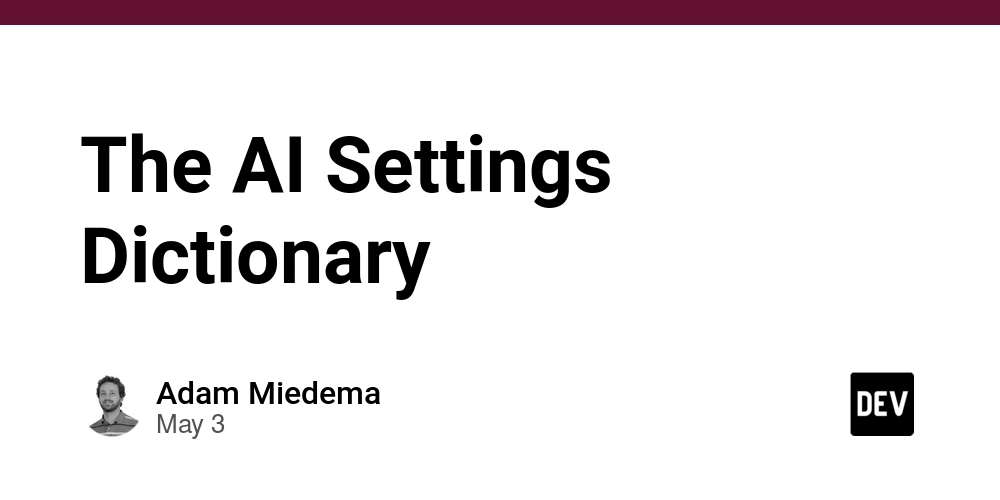

The AI Settings Dictionary

You may have noticed that some LLMs let you control certain settings, typically paired with a brief explanation that still leaves you wondering exactly what they do.

Fear not! This is the living dictionary of AI settings. We will keep this up to date as we learn more about how these settings work and what they do.

Context Message Limit

What it is

How many past messages in a conversation the AI can "remember."

Why it matters

- Imagine texting someone who only remembers the last few messages. If the limit is 10 messages, the AI forgets anything before that.

- Important in long chats, like customer support or storytelling, where the AI needs to follow along.

When to change it

- Increase it for better conversation memory.

- Decrease it if you're seeing errors, or to save memory.
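To make the idea concrete, here is a minimal sketch of how a chat app might enforce a message limit before sending the conversation to the model. The helper name `apply_message_limit` is made up for illustration; real apps may also keep a system prompt or summarize older messages instead of dropping them.

```python
def apply_message_limit(messages, limit):
    """Keep only the most recent `limit` messages (hypothetical helper)."""
    if limit <= 0:
        return []
    return messages[-limit:]


# A 12-message conversation with a limit of 10:
history = [f"message {i}" for i in range(1, 13)]
visible = apply_message_limit(history, 10)

print(len(visible))  # 10
print(visible[0])    # message 3 (messages 1 and 2 are "forgotten")
```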

Context Window Size

What it is

The total number of tokens (pieces of words) the AI can consider at once. This covers everything you send it, including earlier parts of the conversation, plus its reply.

Why it matters

- Think of it like a whiteboard. A bigger whiteboard = more info the AI can “see” at once.

- If your input is too long (e.g., an entire book), it might cut off earlier parts if they don’t fit.

When to change it

- Use large windows for long documents, coding projects, or full email threads.

- Not something you usually adjust, but good to know when picking a model (e.g., GPT-4-Turbo can handle 128k tokens).
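The "whiteboard" idea can be sketched in a few lines. This example assumes a rough rule of thumb of about 4 characters per token for English text and drops the oldest chunks first when space runs out; both are assumptions for illustration, and real tools use a proper tokenizer and may truncate differently.

```python
def estimate_tokens(text):
    # Rough assumption: about 4 characters per token for English text.
    return max(1, len(text) // 4)


def fit_to_window(chunks, window_size, reserved_for_reply):
    """Keep the newest chunks that fit, leaving room for the reply."""
    budget = window_size - reserved_for_reply
    kept = []
    used = 0
    for chunk in reversed(chunks):  # walk from newest to oldest
        cost = estimate_tokens(chunk)
        if used + cost > budget:
            break  # everything older than this is cut off
        kept.append(chunk)
        used += cost
    kept.reverse()  # restore original order
    return kept, used


# Three chunks of about 100 tokens each, a 300-token window,
# and 100 tokens reserved for the reply: only the last two fit.
chunks = ["a" * 400, "b" * 400, "c" * 400]
kept, used = fit_to_window(chunks, window_size=300, reserved_for_reply=100)
print(len(kept), used)  # 2 200
```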

Frequency Penalty

What it is

Tells the AI: "Don’t keep using the same words over and over."

Why it matters

- Without it, the AI might write: “This is very very very good.”

- Makes writing sound more natural and less robotic.

Range

0 to 2 in typical use. The OpenAI API accepts -2 to 2, where negative values encourage repetition.

- Low (0) - repetition allowed.

- High (1–2) - more variety, less echo.

Typical value

0.5

When to change it

- Raise it if the AI is looping or repeating itself.

- Lower it for tasks like summarizing where repetition might be okay.
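Under the hood, one common formulation (the one described in OpenAI's API documentation) lowers a word's score by the penalty multiplied by how many times that word has already appeared. Here is a minimal sketch of that idea; the scores and the function name are invented for illustration.

```python
from collections import Counter


def apply_frequency_penalty(logits, generated_tokens, frequency_penalty):
    """Lower each token's score in proportion to how often it was already used."""
    counts = Counter(generated_tokens)  # missing tokens count as 0
    return {
        token: score - frequency_penalty * counts[token]
        for token, score in logits.items()
    }


logits = {"very": 2.0, "good": 1.5, "useful": 1.0}  # made-up scores
already_written = ["very", "very", "very"]

adjusted = apply_frequency_penalty(logits, already_written, 0.5)
print(adjusted)  # {'very': 0.5, 'good': 1.5, 'useful': 1.0}
```

After three uses, "very" drops from the top choice to the bottom, which is exactly the "very very very" fix described above.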

GPU Layers

What it is

A performance setting for people running AI locally. It sets how many of the model's layers run on the GPU (fast); the rest run on the CPU (slow).

Why it matters

- More GPU layers = faster responses (if your graphics card can handle it).

When to change it

- Only in local installations (like using models on your own computer).

- You’ll need some technical know-how and a decent GPU.
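Tools built on llama.cpp expose this as a layer count (for example, the `n_gpu_layers` option in llama-cpp-python). A back-of-the-envelope way to pick a starting value is sketched below. Every number here (layer size, free VRAM, overhead) is a hypothetical placeholder, and the helper `layers_that_fit` is not part of any library; check your own model and card.

```python
def layers_that_fit(total_layers, layer_size_gb, free_vram_gb, overhead_gb=1.0):
    """Estimate how many layers fit in VRAM (rough, hypothetical helper)."""
    usable = free_vram_gb - overhead_gb  # leave headroom for cache and buffers
    if usable <= 0:
        return 0
    return min(total_layers, int(usable // layer_size_gb))


# Hypothetical model: 32 layers of about 0.25 GB each.
print(layers_that_fit(32, 0.25, free_vram_gb=6))   # 20 (partial offload)
print(layers_that_fit(32, 0.25, free_vram_gb=24))  # 32 (whole model on GPU)
```

If responses crash or slow to a crawl, lower the number; if there is VRAM to spare, raise it.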

Max Output Tokens

What it is

This sets how long the AI’s reply can be — in tokens, which are like chunks of words.

Why it matters

- Prevents the AI from writing an essay when you just want a sentence.

- Saves on cost or time if you're paying per token.

Range

1 to thousands (depending on the model)

Typical value

256–512 tokens (roughly 190–380 English words, at about 0.75 words per token)

When to change it

- Increase it for long answers (e.g., stories, detailed code).

- Decrease it for quick replies or summaries.
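Conceptually, the model writes one token at a time and stops when it either finishes naturally or hits the cap. The sketch below uses a toy "model" that replays a fixed sentence; `generate`, `toy_model`, and the `<end>` marker are all stand-ins for illustration, not a real API.

```python
def generate(next_token, max_output_tokens, stop_token="<end>"):
    """Collect tokens until the model stops or the cap is reached."""
    output = []
    while len(output) < max_output_tokens:
        token = next_token(output)
        if token == stop_token:
            break  # the model finished on its own
        output.append(token)
    return output


# Toy stand-in for a model: replays a fixed sentence, one word per call.
SENTENCE = "the quick brown fox jumps over the lazy dog <end>".split()


def toy_model(tokens_so_far):
    return SENTENCE[len(tokens_so_far)]


print(generate(toy_model, max_output_tokens=4))   # ['the', 'quick', 'brown', 'fox']
print(len(generate(toy_model, max_output_tokens=50)))  # 9 (stopped naturally)
```

Note that a low cap cuts the reply off mid-thought rather than making the AI write more concisely.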

Presence Penalty

What it is

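Encourages the AI to introduce new topics instead of sticking to what it already said. Unlike Frequency Penalty, it penalizes a word once for having appeared at all, no matter how many times.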

Why it matters

- Great for brainstorming, exploring ideas, or avoiding repetition.

Range

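0 to 2 in typical use. The OpenAI API accepts -2 to 2, where negative values encourage staying on the same words.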

- Low (0) - stick to what's already been said.

- High (1–2) - add new ideas, mix things up.

Typical value

0.5–1.0

When to change it

- Raise it when you want variety (like idea generation).

- Lower it if you want the AI to stay focused on a specific topic.
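Here is a minimal sketch of one common formulation (the one described in OpenAI's API documentation): any word that has appeared at least once gets a flat deduction, regardless of how often it was used. The scores and the function name are invented for illustration.

```python
def apply_presence_penalty(logits, generated_tokens, presence_penalty):
    """Apply a flat, one-time deduction to every token that has already appeared."""
    seen = set(generated_tokens)
    return {
        token: score - (presence_penalty if token in seen else 0.0)
        for token, score in logits.items()
    }


logits = {"cats": 2.0, "dogs": 1.5, "birds": 1.0}  # made-up scores
already_written = ["cats", "cats", "dogs"]

adjusted = apply_presence_penalty(logits, already_written, 0.5)
print(adjusted)  # {'cats': 1.5, 'dogs': 1.0, 'birds': 1.0}
```

"cats" appeared twice and "dogs" once, yet both lose the same 0.5. The untouched "birds" becomes relatively more attractive, which is how new topics get a chance.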

Temperature

What it is

Controls how creative or random the AI is when generating responses.

Why it matters

- Think of it like a dial between "robot mode" and "creative mode."

Range

0 to 1 in many apps. Some APIs, such as OpenAI's, accept values up to 2.

- Low (0–0.3) - very focused, factual, safe.

  - Example: Good for math, summaries, or coding.

- High (0.7–1) - more playful, unpredictable, and surprising.

  - Example: Useful for stories, jokes, or coming up with weird ideas.

Typical value

0.7

When to change it

- Lower for accurate info or professional tone.

- Raise for fun, brainstorming, or “think outside the box” tasks.
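For the curious, temperature is usually applied by dividing each word's raw score by the temperature before converting scores to probabilities (a softmax). The sketch below uses made-up scores for three candidate words and treats a temperature of 0 as "always pick the top word," which is an assumption about how many tools behave rather than a universal rule.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Assumption: treat 0 as greedy decoding (all weight on the top score).
        best = logits.index(max(logits))
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate words
for t in (0.2, 0.7, 1.0):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top word taking nearly all the probability at 0.2 and sharing much more of it at 1.0.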

Top K

What it is

The AI predicts many possible next words — Top K limits its choices to just the K most likely ones.

Why it matters

- A low K is like giving the AI a strict script: “Pick from just the top 5 words.”

- A high K gives it more freedom: “Pick from the top 50 or even 100!”

Range

1 to 100+

- Low (1–10) - safe, predictable — might repeat phrases.

- High (50–100) - more creative, but risks weird or off-topic replies.

Typical value

40–50

When to change it

- Raise it to make the AI more surprising or fun.

- Lower it if you want tighter, more professional responses.
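A minimal sketch of the filtering step: sort the candidate words by probability, keep the top K, and rescale what is left so it adds up to 1 again. The word probabilities are invented for illustration.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most likely tokens and renormalize their probabilities."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {token: p / total for token, p in top}


def sample(probs):
    """Pick one token at random, weighted by probability."""
    tokens = list(probs)
    return random.choices(tokens, weights=[probs[t] for t in tokens])[0]


probs = {"the": 0.5, "a": 0.25, "an": 0.125, "zebra": 0.125}  # made-up values

filtered = top_k_filter(probs, k=2)
print(sorted(filtered))  # ['a', 'the']
print(sample(filtered))  # either 'the' or 'a', never 'zebra'
```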

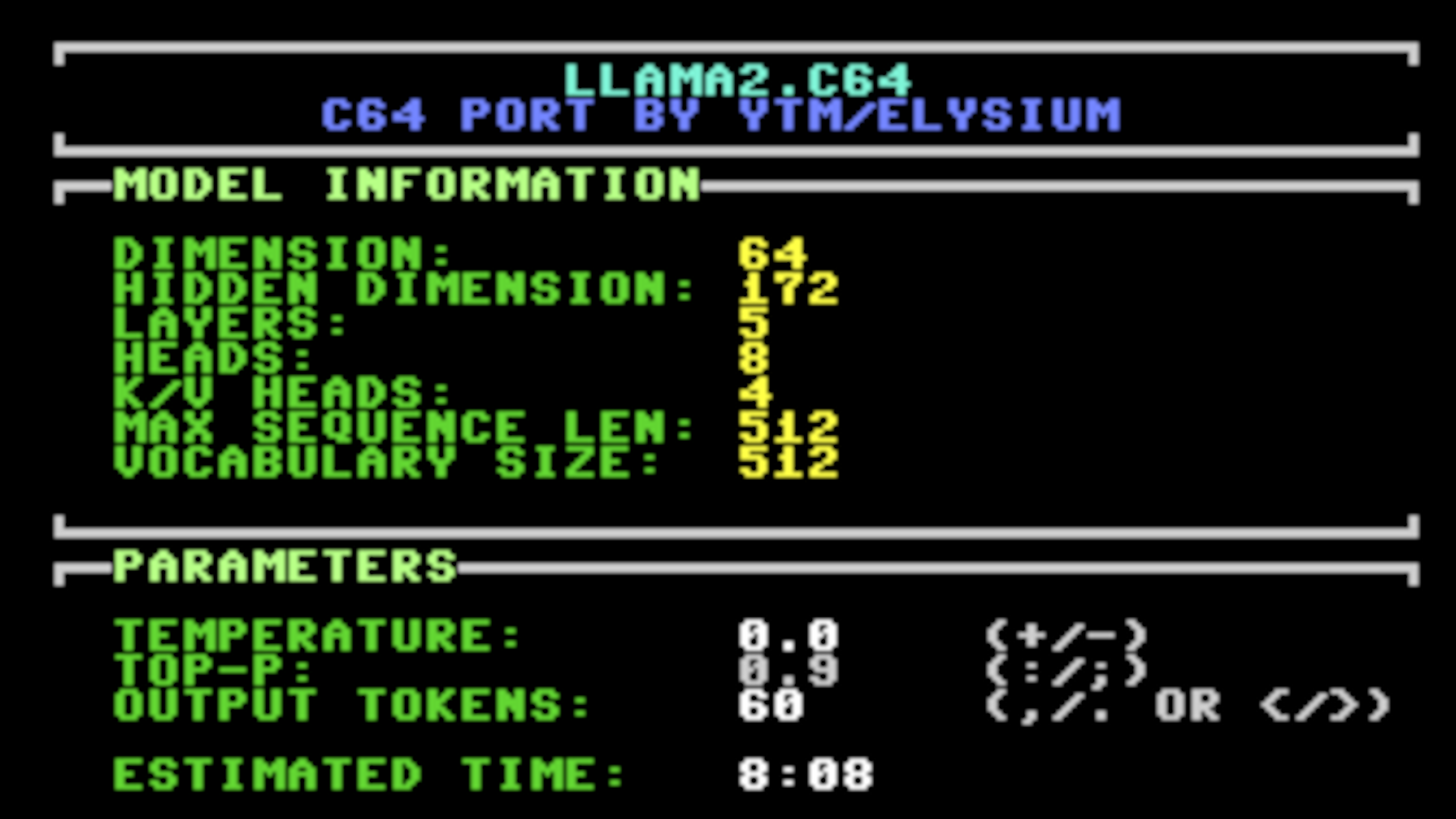

Top P (Nucleus Sampling)

What it is

Instead of limiting the number of word options (like Top K), it limits the AI to the smallest set of likely words whose combined probability reaches P.

Why it matters

- Imagine a pie chart of likely next words. Top P tells the AI, “Only pick from the smallest slice that makes up, say, 90% of the pie.”

- This adapts better than Top K: when the AI is confident, only a few words make the cut; when it is unsure, many do.

Range

0 to 1

- Low (0.1–0.3) - very focused and repetitive.

- High (0.9–1.0) - much more varied and playful.

Typical value

0.9

When to change it

- Lower for more technical, rule-following output.

- Raise for more imagination, idea generation, or fun conversations.
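The pie-chart picture translates into a short loop: add words from most to least likely until their combined probability reaches P, then rescale. This is a minimal sketch with invented probabilities; libraries differ in small details such as how ties and the boundary word are handled.

```python
def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose total probability reaches p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break  # the slice is big enough
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


probs = {"the": 0.5, "a": 0.25, "an": 0.125, "zebra": 0.125}  # made-up values

print(sorted(top_p_filter(probs, 0.75)))  # ['a', 'the']
print(sorted(top_p_filter(probs, 0.8)))   # ['a', 'an', 'the']
```

Notice that the number of surviving words changes with P and with the shape of the probabilities, whereas Top K always keeps exactly K.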
