Setting Up Llama 3.2 Locally with Ollama and Open WebUI: A Complete Guide

We'll use Ollama to set up the Llama 3.2 model on our local machine.

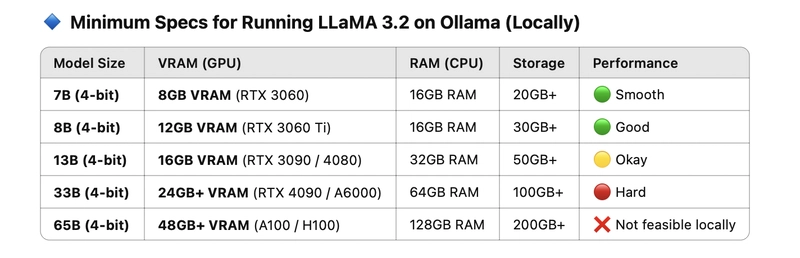

Requirements for Llama3.2

1 Install Ollama

Download Ollama from https://ollama.com/ and install it locally. Installation is straightforward and usually takes a single click.

The installer should add the ollama CLI to your PATH automatically; if it does not, refer to the Ollama documentation.
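To confirm that the CLI really is reachable from your shell, a quick check like the one below may help. This is a minimal sketch: `check_ollama` is a hypothetical helper name introduced here for illustration, and it relies only on the `ollama --version` command.

```shell
# Hypothetical helper: report whether the Ollama CLI is reachable from this shell.
check_ollama() {
  if command -v ollama >/dev/null 2>&1; then
    # The CLI was found, so print the installed version.
    ollama --version
  else
    # The CLI is missing from PATH; report on stderr and signal failure.
    echo "ollama not found on PATH" >&2
    return 1
  fi
}
```

Run `check_ollama` in a new terminal after installing. If it reports that the CLI is missing, restarting the terminal or re-running the installer is usually the first thing to try.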

You can browse the models that Ollama supports here:

https://ollama.com/search

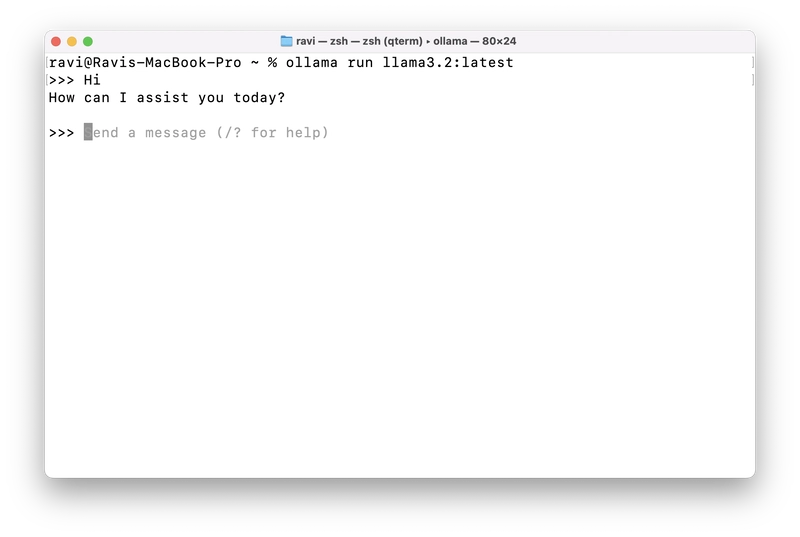

2 Set up the model, in our case Llama 3.2

ollama run llama3.2:latest

This should download the model and start it in your terminal, where you can chat with it directly.
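Besides the interactive chat (which you can leave by typing /bye), `ollama run` also accepts a prompt as an extra argument and exits after printing the reply. The sketch below wraps that in a small function; `ask_once` is a hypothetical name used only for illustration, and it assumes the llama3.2:latest model from the command above has already been downloaded.

```shell
# Hypothetical helper: ask the model a single question without entering the interactive chat.
ask_once() {
  # "ollama run MODEL PROMPT" prints the model's reply and then exits.
  ollama run llama3.2:latest "$1"
}
```

For example, `ask_once "Explain what a Docker volume is in one sentence."` should print a single answer and return you to the shell prompt.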

Once that works, it's time to set up a UI (like ChatGPT).

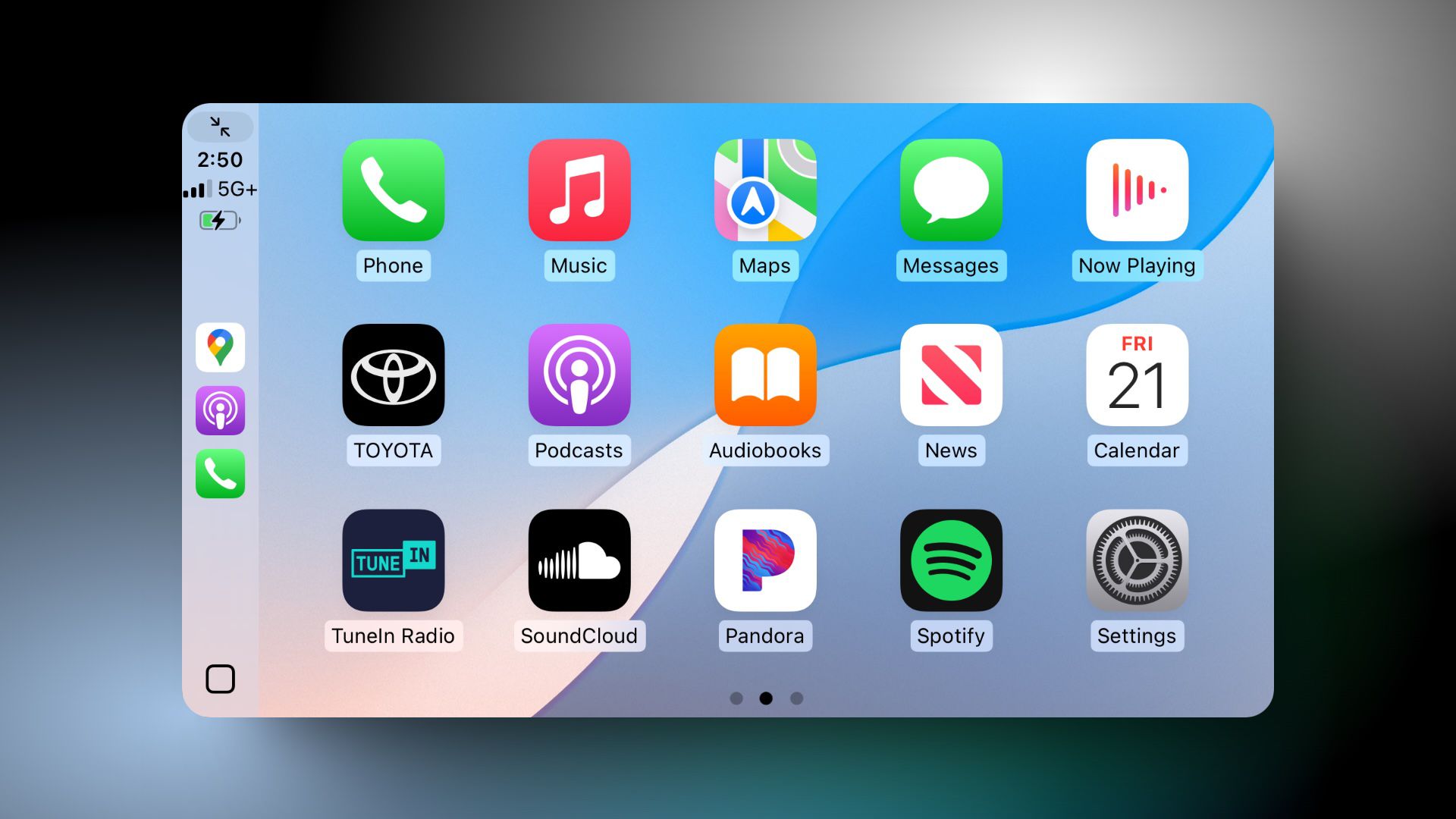

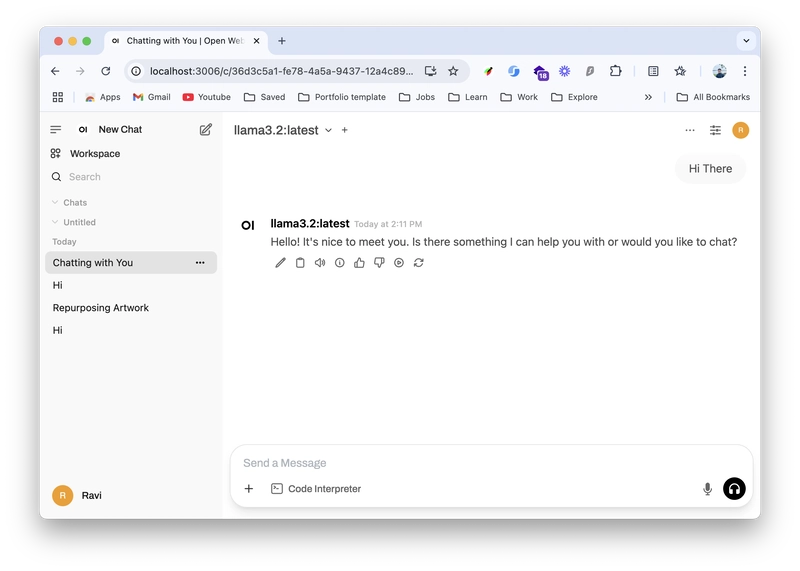

3 Set up Open WebUI

Open WebUI is an extensible, self-hosted AI interface that adapts to your workflow, all while operating entirely offline.

Check out their docs here: https://docs.openwebui.com/

We'll use Docker to set up the interface locally. Run this command:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Once the container is up, open http://localhost:3000 in your browser and you're good to go.
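The container can take a little while to start on first launch, so the page may not load immediately. The sketch below polls the mapped port until it answers. `wait_for_webui` is a hypothetical helper, and the default URL assumes the `-p 3000:8080` mapping from the command above; the retry count and delay are arbitrary choices.

```shell
# Hypothetical helper: poll the mapped port until Open WebUI answers, or give up.
# Arguments: URL (default http://localhost:3000) and number of attempts (default 30).
wait_for_webui() {
  url=${1:-http://localhost:3000}
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -f: fail on HTTP errors, -s: no progress output, -o /dev/null: discard the body.
    if curl -fs -o /dev/null "$url"; then
      echo "Open WebUI is reachable at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "Open WebUI did not respond at $url; try: docker logs open-webui" >&2
  return 1
}
```

If the check keeps failing, `docker ps` shows whether the open-webui container is running, and `docker logs open-webui` prints its startup output.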

You can run other models and switch between them directly from the Open WebUI interface.
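Additional models can also be downloaded ahead of time from the terminal with `ollama pull`. The sketch below is only illustrative: `pull_models` is a hypothetical helper, and it assumes that Open WebUI is connected to the same local Ollama instance, in which case the downloaded models should appear in its model selector.

```shell
# Hypothetical helper: download each named model, then list what is installed locally.
pull_models() {
  for model in "$@"; do
    # Stop at the first model that fails to download.
    ollama pull "$model" || return 1
  done
  # Show every model Ollama now has available.
  ollama list
}
```

For example, `pull_models mistral` would fetch the mistral model from the Ollama library and then print the full list of local models.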

Enjoy!


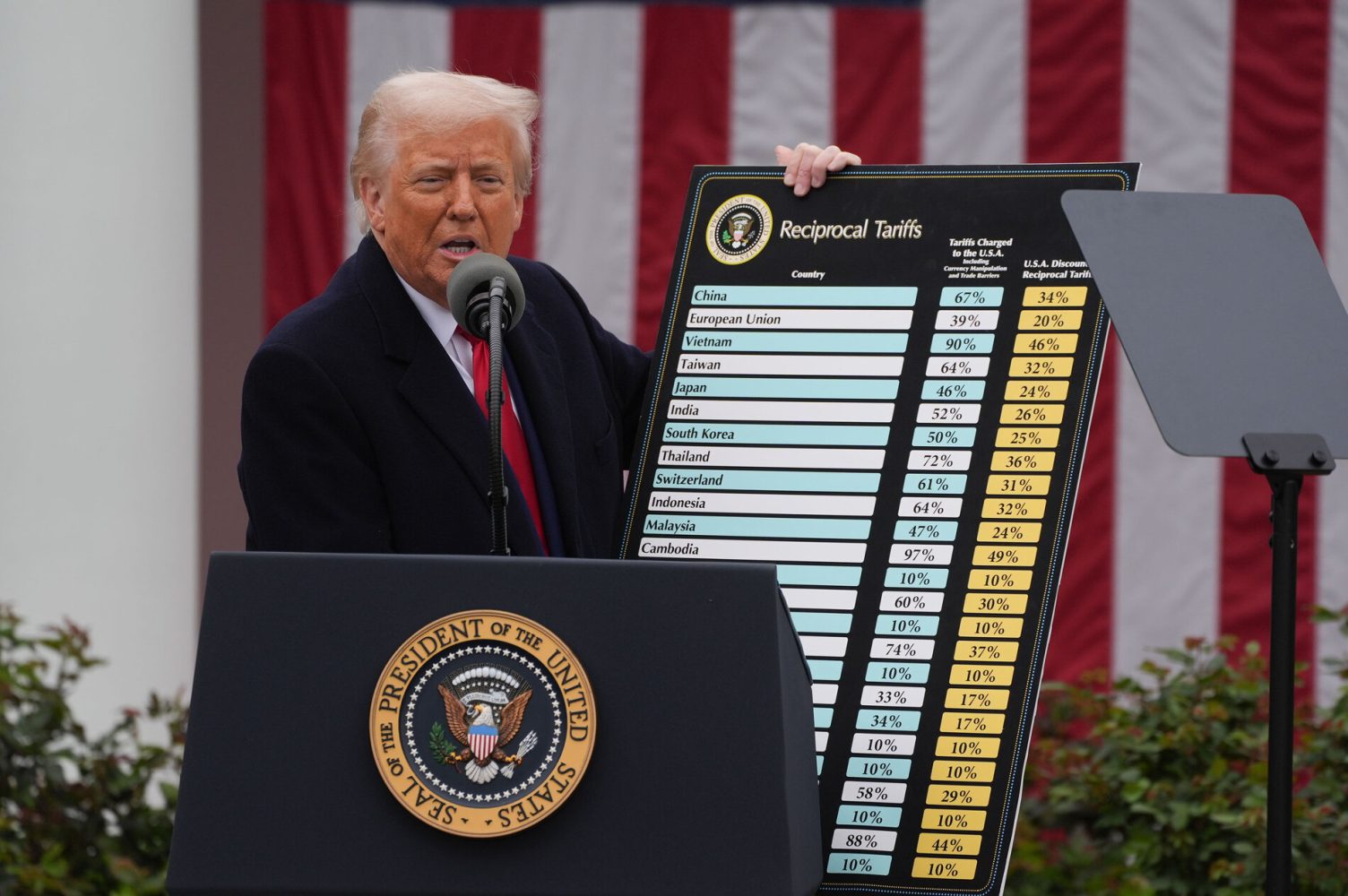
