Securing AI Document Systems: Implementing the Four-Perimeter Framework with Permit.io


This is a submission for the Permit.io Authorization Challenge: AI Access Control

What I Built

I've built an AI Document Assistant with enterprise-grade security and authorization controls using the Four-Perimeter Framework from Permit.io. This project demonstrates how to implement robust security controls for AI applications that handle potentially sensitive documents.

The AI Document Assistant allows users to upload documents and perform various AI operations on them, such as summarization, information extraction, analysis, translation, and question answering. What sets this application apart is its security architecture that goes beyond basic authentication.

Most AI document systems today focus solely on features and capabilities, leaving security as an afterthought. This creates significant risks for organizations dealing with sensitive information. My solution addresses this gap by implementing comprehensive authorization controls at every level of the AI interaction chain.

The system enforces different permission levels based on user roles:

- Admin users can upload documents and perform most AI operations

- Premium users can access documents but with restrictions on certain advanced operations

- Basic users have minimal permissions, only able to view their own documents and perform simple queries

What makes this implementation special is that it demonstrates real authorization controls using the Four-Perimeter Framework:

- Prompt Filtering: Controls what users can ask the AI system based on their roles

- RAG Data Protection: Enforces access controls on the document data that feeds the AI

- External Access Control: Implements separation of duties for sensitive operations that require external API access

- Response Enforcement: Ensures AI responses comply with security policies and user permissions

This project is particularly relevant for organizations in regulated industries (healthcare, finance, legal) where controlling AI access to sensitive documents is critical for compliance and data protection.

By implementing the Four-Perimeter Framework, I've created a blueprint for building AI systems that are both powerful and secure.

Admin Users:

- Can upload documents

- Can read any document

- Can perform basic AI operations (summarize, extract, answer)

- Cannot perform operations that require external access (analyze, translate)

Premium Users:

- Cannot upload documents

- Can read any document

- Can use basic AI operations (summarize, extract, answer)

- Cannot use advanced operations (analyze, translate)

Basic Users:

- Cannot upload documents

- Can only read their own documents

- Can only use the most basic AI operation (answer)

- Cannot use any advanced features
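The role lists above can be written down as a small lookup table. The sketch below is only an illustration of that matrix, not the project's code: the `PERMISSIONS` table, the `can_perform` helper, and the action names (`upload`, `read_any`, `read_own`) are assumptions chosen to mirror the lists. In the project itself these decisions are delegated to Permit.io rather than hard-coded.

```python
# Hypothetical role matrix mirroring the lists above; all names are illustrative.
BASIC_OPS = {"summarize", "extract", "answer"}

PERMISSIONS = {
    "admin": {"upload", "read_any"} | BASIC_OPS,
    "premium": {"read_any"} | BASIC_OPS,
    "basic": {"read_own", "answer"},
}


def can_perform(role: str, action: str) -> bool:
    """Return True only if the role explicitly lists the action (deny by default)."""
    return action in PERMISSIONS.get(role, set())


print(can_perform("admin", "upload"))     # True
print(can_perform("admin", "translate"))  # False: external operations are not granted to any role
print(can_perform("basic", "summarize"))  # False
```

Because `analyze` and `translate` appear in no role's set, they are denied to everyone, which matches the behavior described for admins above.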

Demo

Sample Output for "python test_endpoints.py admin":

Sample output for "python test_endpoints.py basic":

Project Repo

Repo link: github

My Journey

Implementing the AI Document Assistant with robust security controls was both challenging and rewarding.

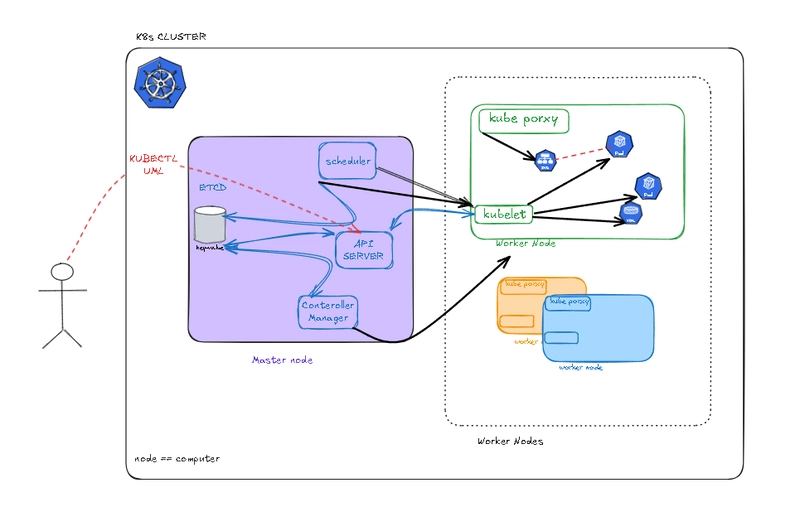

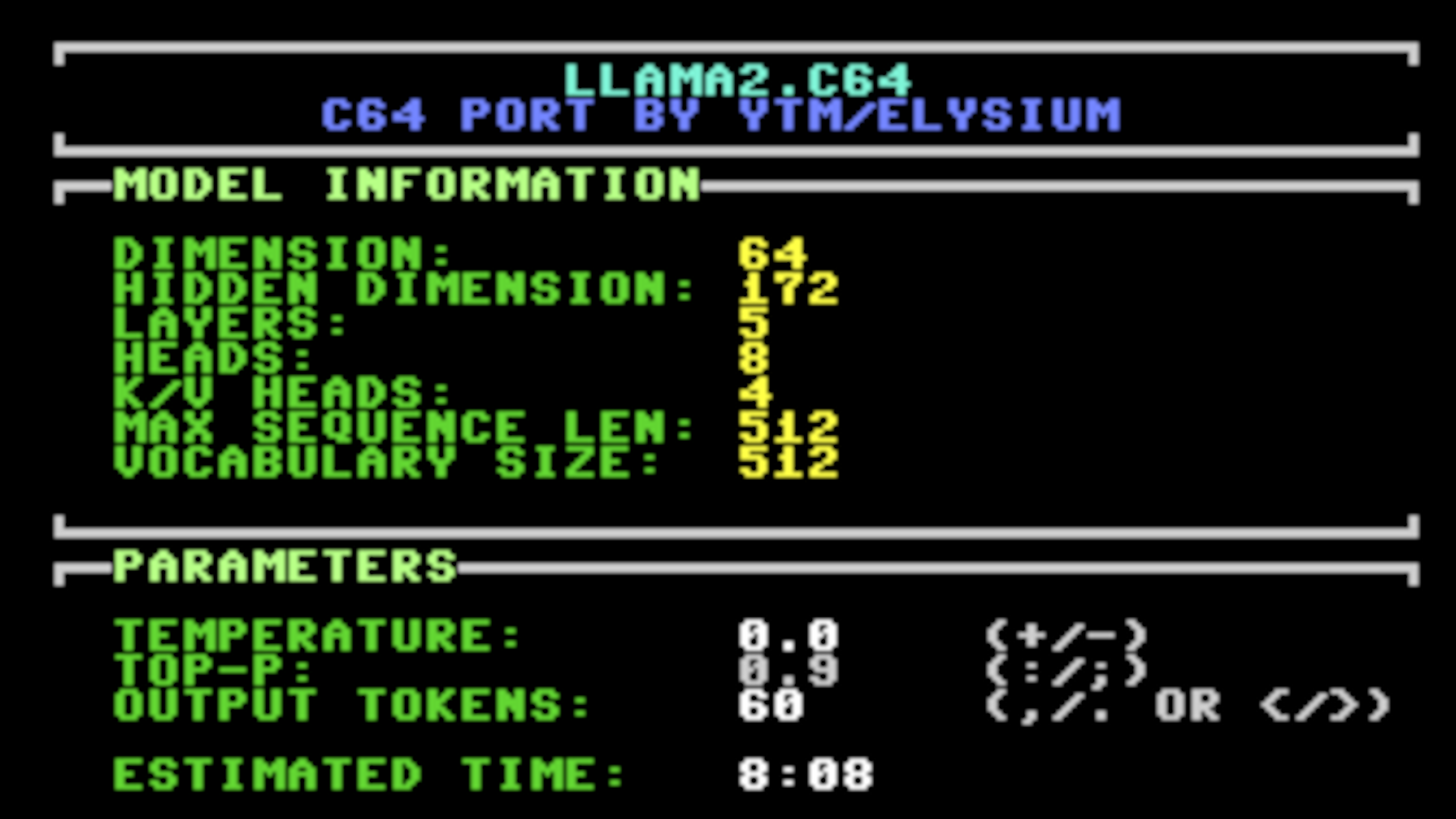

Getting Started: Understanding the Four-Perimeter Framework

I began by diving deep into Permit.io's Four-Perimeter Framework to understand how these security layers could be implemented in a practical application. This required shifting my thinking from "can the user authenticate?" to "what specific actions should this user be allowed to perform on this specific resource?"

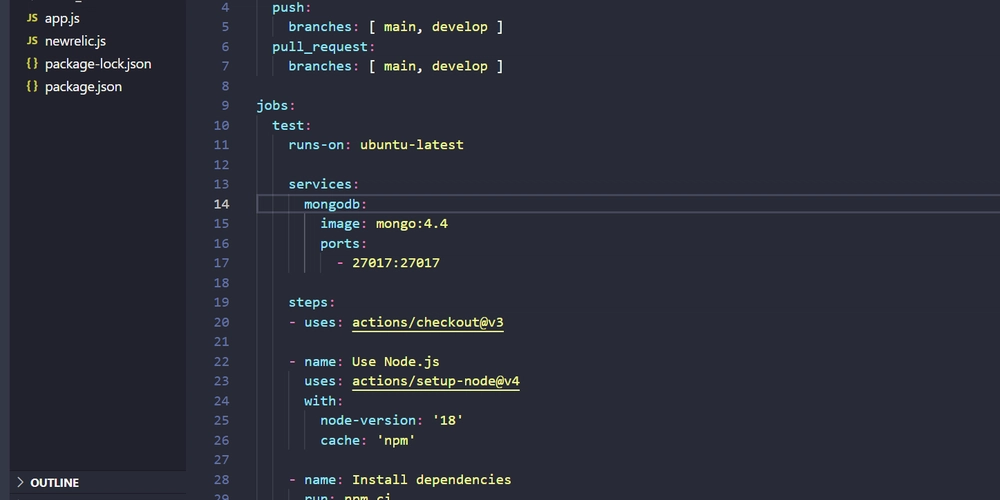

Architectural Decisions:

I chose FastAPI for the backend due to its performance and built-in OpenAPI documentation. For AI capabilities, LangChain provided the flexibility to integrate with various LLMs while creating abstractions for document processing. The Permit.io SDK offered the authorization layer that connected everything together.

The most critical architectural decision was designing the security perimeters as separate, modular components that could intercept and authorize requests at different stages of the AI pipeline.
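One way to picture "separate, modular components" is a chain of small functions that each either pass a request along or reject it. The sketch below assumes that shape; `AIRequest`, the four perimeter functions, and `authorize` are hypothetical names, and the rules inside them are placeholders rather than the project's real policies.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class AIRequest:
    role: str
    operation: str
    prompt: str
    passed: List[str] = field(default_factory=list)  # perimeters cleared so far


Perimeter = Callable[[AIRequest], AIRequest]


def prompt_filtering(req: AIRequest) -> AIRequest:
    # Placeholder rule: basic users may only ask questions ("answer").
    if req.role == "basic" and req.operation != "answer":
        raise PermissionError("prompt filtering: operation not allowed for this role")
    req.passed.append("prompt_filtering")
    return req


def rag_data_protection(req: AIRequest) -> AIRequest:
    # A real check would filter document IDs here; this stub only records the step.
    req.passed.append("rag_data_protection")
    return req


def external_access_control(req: AIRequest) -> AIRequest:
    if req.operation in {"analyze", "translate"}:
        raise PermissionError("external access: operation requires approval")
    req.passed.append("external_access_control")
    return req


def response_enforcement(req: AIRequest) -> AIRequest:
    req.passed.append("response_enforcement")
    return req


PERIMETERS: Sequence[Perimeter] = (
    prompt_filtering,
    rag_data_protection,
    external_access_control,
    response_enforcement,
)


def authorize(req: AIRequest, perimeters: Sequence[Perimeter] = PERIMETERS) -> AIRequest:
    """Run the request through each perimeter in order; any one of them may reject it."""
    for perimeter in perimeters:
        req = perimeter(req)
    return req
```

Keeping each perimeter behind the same signature means a stage can be swapped for a Permit.io-backed check, or tested on its own, without touching the others.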

Challenges and Solutions

Challenge 1: Integrating Authorization with RAG Architecture

Implementing authorization in a RAG (Retrieval-Augmented Generation) system was complex because I needed to control not just what documents a user could see, but what content the AI model could access on their behalf.

Solution: I split the RAG protection into pre-query and post-query filtering. This allowed me to filter document IDs before retrieval and then sanitize the content before passing it to the LLM.
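A minimal sketch of that two-phase split, under stated assumptions: the in-memory `DOCUMENTS` store, the ownership rule, and the choice to mask e-mail addresses as the "sanitize" step are all invented for illustration. The post does not say what the project actually sanitizes.

```python
import re
from typing import List

# Hypothetical in-memory store: document ID -> owner and text.
DOCUMENTS = {
    "doc-1": {"owner": "alice", "text": "Quarterly report. Contact: alice@example.com"},
    "doc-2": {"owner": "bob", "text": "Bob's private notes."},
}

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pre_query_filter(user: str, role: str, doc_ids: List[str]) -> List[str]:
    """Phase 1: keep only document IDs the user may read, before any retrieval."""
    if role in {"admin", "premium"}:
        return [d for d in doc_ids if d in DOCUMENTS]
    return [d for d in doc_ids if DOCUMENTS.get(d, {}).get("owner") == user]


def post_query_filter(text: str) -> str:
    """Phase 2: sanitize retrieved text (masking e-mails is an assumed example rule)."""
    return EMAIL.sub("[REDACTED]", text)


def build_context(user: str, role: str, doc_ids: List[str]) -> List[str]:
    """Return the sanitized passages that may be handed to the LLM."""
    allowed = pre_query_filter(user, role, doc_ids)
    return [post_query_filter(DOCUMENTS[d]["text"]) for d in allowed]


print(build_context("alice", "basic", ["doc-1", "doc-2"]))
# ['Quarterly report. Contact: [REDACTED]']
```

The point of filtering IDs first is that text the user may not read never reaches the retrieval step at all, so the second phase only has to deal with content inside documents the user is already entitled to.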

Challenge 2: Stubbing External Systems for Testing

Operations like "analyze" and "translate" would normally call external APIs, but implementing these connections would have been overkill for a proof of concept.

Solution: I created a simulated external access control system that would deny these operations by default, demonstrating the separation of duties principle without implementing actual external integrations.
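A deny-by-default gate of this kind can be very small. In the sketch below, `ExternalAccessControl` and its `approve` method are hypothetical; in particular, the approval step is an assumption about how separation of duties might be modeled, since the post only states that these operations are denied by default.

```python
from typing import Set, Tuple

EXTERNAL_OPERATIONS = {"analyze", "translate"}


class ExternalAccessControl:
    """Simulated gate: external operations are denied unless explicitly approved."""

    def __init__(self) -> None:
        self._approvals: Set[Tuple[str, str]] = set()

    def approve(self, approver: str, user: str, operation: str) -> None:
        # Separation of duties: nobody can approve their own request.
        if approver == user:
            raise PermissionError("users cannot approve their own requests")
        self._approvals.add((user, operation))

    def is_allowed(self, user: str, operation: str) -> bool:
        if operation not in EXTERNAL_OPERATIONS:
            return True  # not an external operation; other perimeters decide
        return (user, operation) in self._approvals


gate = ExternalAccessControl()
print(gate.is_allowed("admin_user", "translate"))  # False: denied by default
gate.approve("security_officer", "admin_user", "translate")
print(gate.is_allowed("admin_user", "translate"))  # True after a second person approves
```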

Challenge 3: Handling Authentication Errors

I struggled with 401 and 403 errors during testing. It wasn't always clear whether the issue was with authentication or authorization.

Solution: I improved error handling and debugging in the auth endpoints, which helped identify that the OAuth token URL path was incorrect. This seemingly small detail was causing the whole authentication flow to fail.
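The distinction that was hard to see during testing can be stated in a few lines: 401 means the caller's identity could not be established, 403 means the identity is known but the action is not permitted. The helper below is a hypothetical illustration using only the standard library; `auth_status` and the `allowed_actions` key are assumed names, not part of the project or of FastAPI.

```python
from http import HTTPStatus
from typing import Optional


def auth_status(user: Optional[dict], action: str) -> HTTPStatus:
    """Map an access attempt to the status code a client should see."""
    if user is None:
        # Authentication failed: missing, expired, or invalid token.
        return HTTPStatus.UNAUTHORIZED
    if action not in user.get("allowed_actions", ()):
        # Authentication succeeded, but this user may not perform the action.
        return HTTPStatus.FORBIDDEN
    return HTTPStatus.OK


print(int(auth_status(None, "upload")))                              # 401
print(int(auth_status({"allowed_actions": ["answer"]}, "upload")))   # 403
print(int(auth_status({"allowed_actions": ["upload"]}, "upload")))   # 200
```

Logging which branch fired makes it much quicker to tell a broken token flow apart from a policy denial.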

Challenge 4: Developing Without External APIs

Working with OpenAI and Permit.io in a development environment proved challenging when API keys weren't available or rate limits were hit.

Solution: I implemented fallback mechanisms that used mock responses when external services were unavailable, making development smooth even without constant API access.
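A fallback of this kind might look like the sketch below. The names `summarize`, `mock_summary`, and the `llm_call` parameter are assumptions made for the example; the real project's fallback may be wired differently.

```python
from typing import Callable, Optional


def mock_summary(text: str) -> str:
    """Deterministic stand-in used when no real model is reachable."""
    return "[mock summary] " + text[:40]


def summarize(text: str, llm_call: Optional[Callable[[str], str]] = None) -> str:
    """Use the real model when one is configured; otherwise return a mock response."""
    if llm_call is None:
        return mock_summary(text)
    try:
        return llm_call(text)
    except Exception:
        # Rate limits, network errors, or missing keys: fall back rather than fail.
        return mock_summary(text)


def rate_limited(_: str) -> str:
    raise RuntimeError("rate limit exceeded")


print(summarize("Authorization must happen at several layers."))
print(summarize("Authorization must happen at several layers.", llm_call=rate_limited))
```

This pattern suits the LLM side of the system. For the authorization service, a mock that silently allows requests is best kept to development, since a production fallback should probably fail closed.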

What I Learned

This project taught me several valuable lessons:

Security is a Feature, Not an Afterthought: Integrating security controls from the beginning led to a cleaner, more robust architecture.

Separation of Duties is Powerful: Having operations that even admin users can't perform without approval creates significantly stronger security guarantees.

Role-Based vs. Attribute-Based Access Control: I gained a deeper understanding of why RBAC on its own is often insufficient for AI systems, and how ABAC (Attribute-Based Access Control) provides the finer granularity they need.

Error Handling is Critical for Security UX: Clear, specific error messages make security issues easier to debug without revealing sensitive implementation details.

The most important insight was realizing that authorization for AI systems needs to happen at multiple layers. Simply checking whether a user can access a document isn't enough: you need controls at the prompt level, the data access level, the external API level, and the response level to create truly secure AI systems.

This approach to AI security feels like the right direction for building enterprise AI applications that can handle sensitive data while respecting organizational boundaries and compliance requirements.

Authorization for AI Applications with Permit.io

I implemented Permit.io's Four-Perimeter Framework to create defense-in-depth for AI systems.

Prompt Filtering:

I built a prompt filtering layer that validates user prompts before they reach the AI models. This ensures users can only submit prompts for operations they're authorized to perform, based on their role and document sensitivity.

RAG Data Protection:

I created two-phase protection for document access:

- Pre-query filtering to control which documents can be retrieved

- Post-query filtering to sanitize retrieved content

This ensures the AI model only accesses document content the user is authorized to see.

External Access Control:

For operations requiring external APIs (analyze, translate), I implemented controls that enforce separation of duties. Even admin users need explicit approval for these sensitive operations, demonstrating the principle that privileged access doesn't mean unlimited access.

Response Enforcement:

The final security layer filters AI-generated responses before returning them to users, ensuring content is appropriate for the user's permission level.
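As a rough illustration of this last layer, the sketch below redacts patterns from a model response according to the caller's role. The policy table, the choice of a US Social Security number pattern, and the `enforce_response` name are all assumptions for the example; the post does not specify what the project filters.

```python
import re

# Assumed policy: patterns each role must not see in a response.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

REDACTIONS = {
    "admin": [],
    "premium": [SSN],
    "basic": [SSN],
}


def enforce_response(role: str, response: str) -> str:
    """Filter a model response before it is returned to the user."""
    patterns = REDACTIONS.get(role)
    if patterns is None:
        return "[response withheld]"  # unknown roles get nothing
    for pattern in patterns:
        response = pattern.sub("[REDACTED]", response)
    return response


print(enforce_response("basic", "The applicant's SSN is 123-45-6789."))
# The applicant's SSN is [REDACTED].
```

Running this check on the output, and not only on the retrieved input, covers cases where the model reconstructs or repeats sensitive values from elsewhere in its context.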

Role-Based Permission Design

I implemented three distinct user roles with different permission levels:

- Admin users: Can upload documents and perform most operations except those requiring external access

- Premium users: Can read documents and perform basic AI operations but can't upload

- Basic users: Can only read their own documents and use the simplest AI operation (question answering)

Implementing authorization for AI applications taught me that:

- AI systems need much more fine-grained controls than traditional apps.

- Context (document sensitivity, operation type) is crucial for authorization decisions.

- Even admin users should face restrictions for high-risk operations.

- Well-designed permission denials help users understand the security model.

Using Permit.io's attribute-based access control, I was able to implement these nuanced authorization controls that go far beyond simple role-based access, providing the security needed for enterprise AI applications handling sensitive data.
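To make the contrast with plain role checks concrete, here is a minimal attribute-based decision function. It is a sketch under assumptions, not Permit.io's API: the `clearance` and `sensitivity` attributes, their integer scale, and the `may_run` function are hypothetical, and in the project these rules are expressed as Permit.io policies instead of Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    role: str
    clearance: int  # hypothetical attribute: higher means more trusted


@dataclass(frozen=True)
class Document:
    owner: str
    sensitivity: int  # hypothetical attribute: higher means more sensitive


def may_run(user: User, doc: Document, operation: str) -> bool:
    """Decide from role, operation type, ownership, and document sensitivity together."""
    if operation in {"analyze", "translate"}:
        return False  # external operations stay denied for every role
    if user.role == "basic" and (doc.owner != user.name or operation != "answer"):
        return False
    return user.clearance >= doc.sensitivity


contract = Document(owner="alice", sensitivity=3)
print(may_run(User("dana", "premium", clearance=2), contract, "summarize"))  # False
print(may_run(User("erin", "premium", clearance=3), contract, "summarize"))  # True
```

Both premium users hold the same role, yet only one may summarize the contract. A role-only check cannot express that difference.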
