REST vs gRPC in Python: A Practical Benchmark!

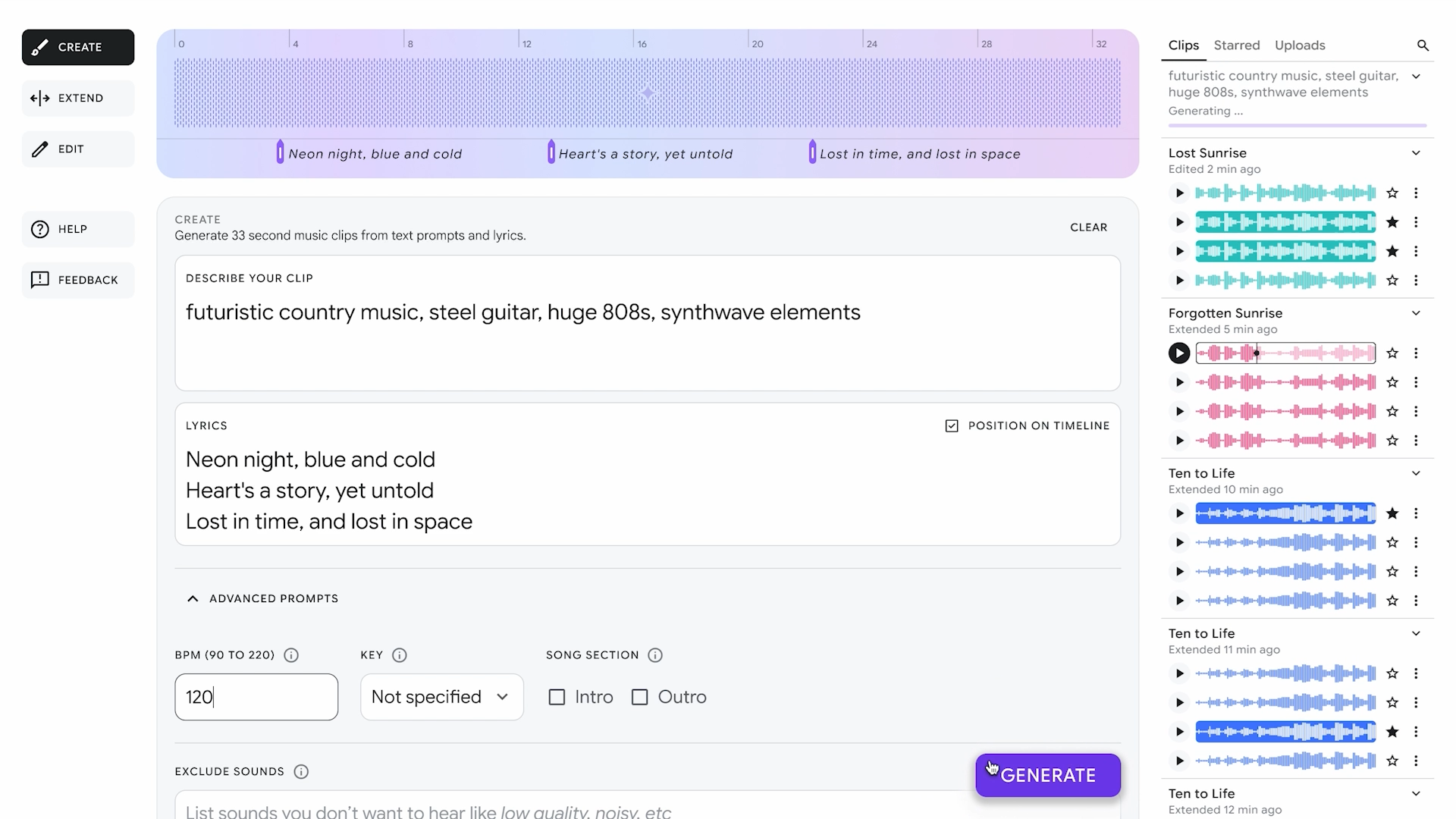

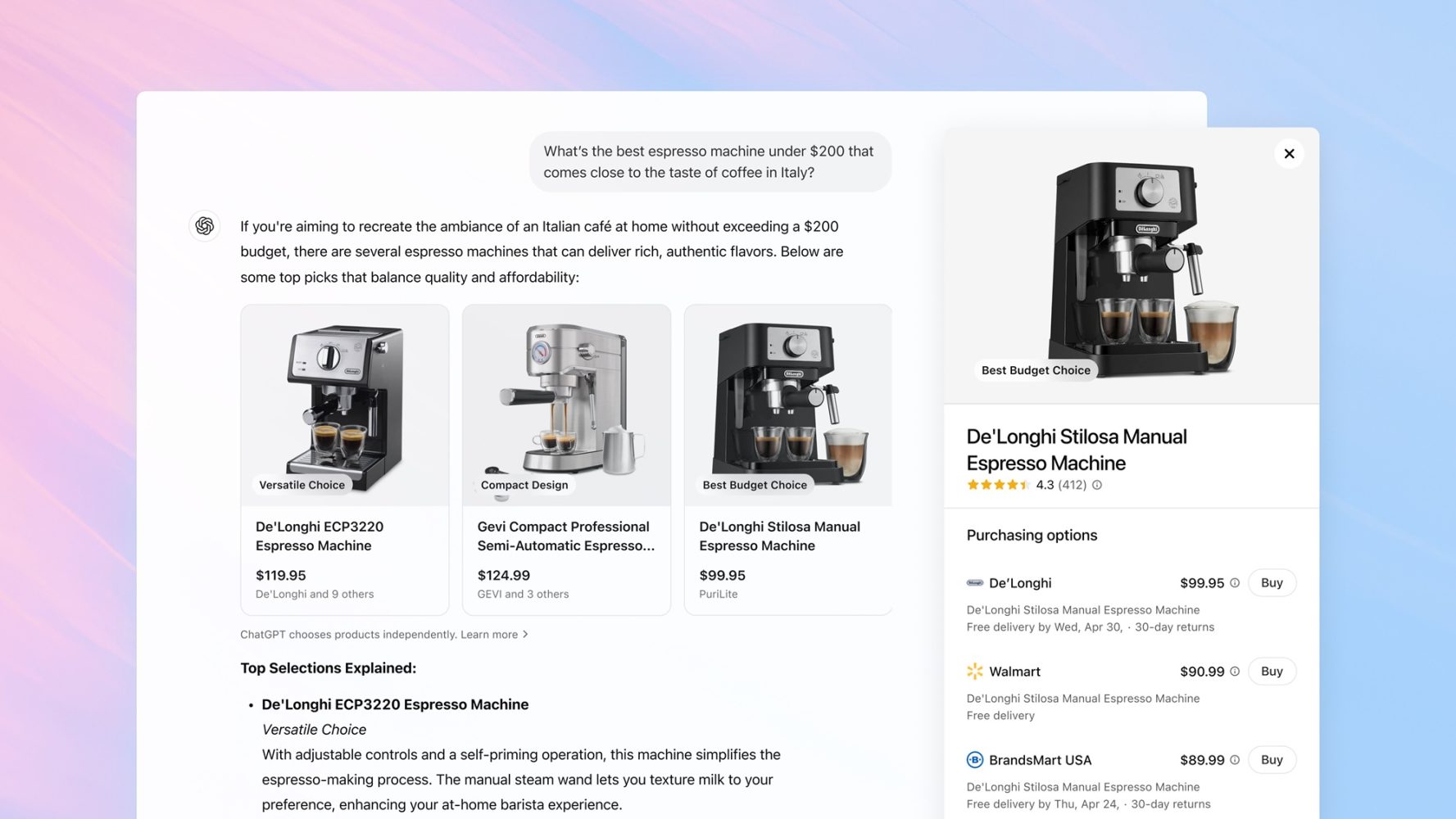

Ever wondered: "Is gRPC really faster than REST?" Let's not just believe the hype. Let's measure it ourselves!

In this post, we'll build small REST and gRPC services in Python, benchmark them on a local machine, and compare the results.

REST vs gRPC: Quick Intro

| Feature | REST (Flask) | gRPC (Protobuf) |
|---|---|---|
| Protocol | HTTP/1.1 | HTTP/2 |
| Data Format | JSON (text) | Protobuf (binary) |
| Human-readable? | Yes | No |
| Speed | Slower | Faster |
| Streaming support | Hard | Native |

Setup: Build Two Simple Services

1. REST API Server (Flask)

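    # rest_server.py
    from flask import Flask, request, jsonify

    app = Flask(__name__)


    @app.route('/hello', methods=['POST'])
    def say_hello():
        # silent=True returns None instead of raising on a missing or invalid JSON body
        data = request.get_json(silent=True) or {}
        name = data.get('name', 'World')
        return jsonify({'message': f'Hello, {name}!'}), 200


    if __name__ == '__main__':
        # Flask's built-in development server; fine for a local test
        app.run(port=5000)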

2. gRPC Server (Python)

First, define your service using Protocol Buffers.

hello.proto:

    syntax = "proto3";

    service HelloService {
      rpc SayHello (HelloRequest) returns (HelloResponse);
    }

    message HelloRequest {
      string name = 1;
    }

    message HelloResponse {
      string message = 1;
    }
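
To get a feel for why the Protobuf payload is smaller, here is a minimal sketch that hand-encodes the `HelloRequest` message in the Protobuf wire format (field 1, length-delimited) and compares it with the equivalent JSON body. The helper names `encode_varint` and `encode_hello_request` are made up for illustration; in practice the generated `hello_pb2` module does this encoding for you.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_hello_request(name: str) -> bytes:
    """Hand-encode HelloRequest{name} (hypothetical helper, illustration only)."""
    payload = name.encode("utf-8")
    tag = (1 << 3) | 2  # field number 1, wire type 2 (length-delimited)
    return bytes([tag]) + encode_varint(len(payload)) + payload


json_body = json.dumps({"name": "Ninad"}).encode("utf-8")
proto_body = encode_hello_request("Ninad")

print(len(json_body), json_body)    # 17 bytes of JSON text
print(len(proto_body), proto_body)  # 7 bytes: tag, length, then the name
```

This counts message bodies only. HTTP headers on the REST side and gRPC's 5-byte message prefix plus HTTP/2 framing add overhead on top, so the on-the-wire difference will not match these numbers exactly.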

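Generate the Python files (this requires the `grpcio-tools` package). The command produces `hello_pb2.py` and `hello_pb2_grpc.py`:

    python -m grpc_tools.protoc -I. --python_out=. --grpc_python_out=. hello.proto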

gRPC server:

    # grpc_server.py
    from concurrent import futures

    import grpc

    import hello_pb2
    import hello_pb2_grpc


    class HelloService(hello_pb2_grpc.HelloServiceServicer):
        def SayHello(self, request, context):
            return hello_pb2.HelloResponse(message=f"Hello, {request.name}!")


    def serve():
        # The thread pool handles up to 10 RPCs concurrently
        server = grpc.server(futures.ThreadPoolExecutor(max_workers=10))
        hello_pb2_grpc.add_HelloServiceServicer_to_server(HelloService(), server)
        server.add_insecure_port('[::]:50051')  # plaintext, no TLS
        server.start()
        server.wait_for_termination()


    if __name__ == '__main__':
        serve()

Benchmark: How to Measure?

We'll send 1000 requests to each server, one after another from a single client, and measure the total time taken.

REST Benchmark Client

    import time

    import requests


    def benchmark_rest():
        url = "http://localhost:5000/hello"
        data = {"name": "Ninad"}

        # perf_counter() is a monotonic, high-resolution clock meant for timing
        start = time.perf_counter()
        for _ in range(1000):
            # requests.post() without a Session opens a new connection per call
            response = requests.post(url, json=data)
            _ = response.json()
        end = time.perf_counter()

        print(f"REST Total Time: {end - start:.2f} seconds")


    if __name__ == "__main__":
        benchmark_rest()

gRPC Benchmark Client

    import time

    import grpc

    import hello_pb2
    import hello_pb2_grpc


    def benchmark_grpc():
        # One channel (and its underlying connection) is reused for every call
        channel = grpc.insecure_channel('localhost:50051')
        stub = hello_pb2_grpc.HelloServiceStub(channel)

        # perf_counter() is a monotonic, high-resolution clock meant for timing
        start = time.perf_counter()
        for _ in range(1000):
            _ = stub.SayHello(hello_pb2.HelloRequest(name="Ninad"))
        end = time.perf_counter()

        print(f"gRPC Total Time: {end - start:.2f} seconds")


    if __name__ == "__main__":
        benchmark_grpc()
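
Both clients above report only the total time. If you also want per-request numbers, a small helper along these lines could wrap either call. This is a sketch, not part of the original benchmark: `measure_latencies` and `summarize` are hypothetical names, the warm-up count is an arbitrary choice, and the p95 uses a simple nearest-rank rule.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latencies(call: Callable[[], object], n: int = 1000, warmup: int = 10) -> List[float]:
    """Run `call` n times and return per-call latencies in milliseconds."""
    for _ in range(warmup):
        call()  # warm-up calls are executed but not timed
    latencies = []
    for _ in range(n):
        start = time.perf_counter()
        call()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies: List[float]) -> Dict[str, float]:
    """Summarize latencies (ms) as total, mean, median and nearest-rank p95."""
    ordered = sorted(latencies)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "total_s": sum(ordered) / 1000.0,
        "mean_ms": statistics.mean(ordered),
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[p95_index],
    }


if __name__ == "__main__":
    # Stand-in workload so the sketch runs on its own; swap in e.g.
    # lambda: requests.post(url, json=data) or lambda: stub.SayHello(req).
    print(summarize(measure_latencies(lambda: sum(range(1000)), n=200)))
```

Looking at the median and p95 next to the mean makes it easier to spot a few slow outliers that a single total would hide.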

Results: What I Observed

| Metric | REST | gRPC |
|---|---|---|
| Total Time (1000 req) | ~15-20 seconds | ~3-5 seconds |
| Avg Latency/request | ~15-20 ms | ~3-5 ms |
| Payload Size | Larger (text) | Smaller (binary) |

gRPC was about 4-5x faster than REST in this small test!
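
As a quick sanity check on that figure, the arithmetic behind the table can be spelled out. The sketch below only reuses the observed ranges from the table; the variable names are illustrative.

```python
requests_sent = 1000
rest_total_s = (15.0, 20.0)  # observed REST range from the table
grpc_total_s = (3.0, 5.0)    # observed gRPC range from the table

# Average latency per request in milliseconds: total seconds * 1000 / requests
rest_ms = tuple(t * 1000 / requests_sent for t in rest_total_s)
grpc_ms = tuple(t * 1000 / requests_sent for t in grpc_total_s)

# Speedup bounds implied by the ranges
lowest_speedup = rest_total_s[0] / grpc_total_s[1]   # 15 / 5 = 3.0
highest_speedup = rest_total_s[1] / grpc_total_s[0]  # 20 / 3, about 6.7
midpoint_speedup = (sum(rest_total_s) / 2) / (sum(grpc_total_s) / 2)  # 17.5 / 4 = 4.375

print(f"REST: {rest_ms[0]:.0f}-{rest_ms[1]:.0f} ms, gRPC: {grpc_ms[0]:.0f}-{grpc_ms[1]:.0f} ms")
print(f"speedup: {lowest_speedup:.1f}x to {highest_speedup:.1f}x, midpoint {midpoint_speedup:.1f}x")
```

So the ranges allow anything from 3x to roughly 6.7x, and the midpoints land at about 4.4x, which is where the "4-5x" summary comes from.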

Why is gRPC Faster?

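- HTTP/2: many requests are multiplexed as streams over one connection (this helps most under concurrent load, less in a sequential loop like this one).

- Binary Protobufs: smaller, faster to serialize/deserialize.

- Persistent connection: the gRPC channel reuses one connection, so there is no 3-way TCP handshake on every call. The REST client above calls `requests.post` without a `Session`, so it opens a new connection for each request.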
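
The connection-reuse point can be checked in isolation, without Flask or gRPC. The sketch below uses only the standard library: it starts a throwaway local HTTP/1.1 server and times the same number of requests with a fresh connection per request and with one reused connection. Everything here (`EchoHandler`, `run_demo`, the request count) is an assumption made for illustration, not the setup used for the results above.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"   # allow keep-alive connections
    disable_nagle_algorithm = True  # avoid small-write delays on reused sockets

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging


def time_new_connection_per_request(port: int, n: int) -> float:
    start = time.perf_counter()
    for _ in range(n):
        conn = http.client.HTTPConnection("127.0.0.1", port)
        conn.request("GET", "/")
        conn.getresponse().read()
        conn.close()  # next iteration pays for a new TCP handshake
    return time.perf_counter() - start


def time_reused_connection(port: int, n: int) -> float:
    conn = http.client.HTTPConnection("127.0.0.1", port)
    start = time.perf_counter()
    for _ in range(n):
        conn.request("GET", "/")
        conn.getresponse().read()  # drain the body so the socket can be reused
    elapsed = time.perf_counter() - start
    conn.close()
    return elapsed


def run_demo(n: int = 200) -> tuple:
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    try:
        return time_new_connection_per_request(port, n), time_reused_connection(port, n)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    fresh, reused = run_demo()
    print(f"new connection per request: {fresh:.3f}s, reused connection: {reused:.3f}s")
```

On most machines the reused connection should come out ahead, though the gap on localhost is small compared with a real network, and the exact numbers will vary from run to run.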

Conclusion

- If you're building frontend APIs (browsers/mobile apps) -> REST is still great.

- If you're building internal microservices at scale -> gRPC shines!

Bonus: Advanced Benchmarking Tools

- For REST: wrk

- For gRPC: ghz

Final Thought:

Real engineers don't guess performance; they measure it!

Happy benchmarking!
