NumExpr: The “Faster than Numpy” Library Most Data Scientists Have Never Used

A comparative performance test with NumPy

The post NumExpr: The “Faster than Numpy” Library Most Data Scientists Have Never Used appeared first on Towards Data Science.

I was immediately interested because of some claims made about the library. In particular, it stated that for some complex numerical calculations, it was up to 15 times faster than NumPy.

I was intrigued because, up until now, NumPy has remained unchallenged in its dominance in the numerical computation space in Python. In particular with Data Science, NumPy is a cornerstone for machine learning, exploratory data analysis and model training. Anything we can use to squeeze out every last bit of performance in our systems will be welcomed. So, I decided to put the claims to the test myself.

You can find a link to the NumExpr repository at the end of this article.

What is NumExpr?

According to its GitHub page, NumExpr is a fast numerical expression evaluator for NumPy. With it, expressions that operate on arrays run faster and use less memory than the same calculations performed in Python with other numerical libraries, such as NumPy.
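The memory saving comes from how the expression is evaluated. NumPy computes 2*a, then 3*b, then their sum, allocating a full-size temporary array at each step, whereas NumExpr works through the operands in small blocks so the intermediate results stay small. The following is only a rough NumPy sketch of that idea, not NumExpr's actual implementation; the function name chunked_eval and the chunk size are assumptions made for illustration.

```python
import numpy as np

def chunked_eval(a, b, chunk=4096):
    """Evaluate 2*a + 3*b block by block (hypothetical helper, for illustration only)."""
    out = np.empty_like(a)
    for start in range(0, a.size, chunk):
        stop = start + chunk
        # Temporaries created here are at most `chunk` elements long,
        # instead of being as large as the full input arrays.
        out[start:stop] = 2 * a[start:stop] + 3 * b[start:stop]
    return out

rng = np.random.default_rng(0)
a = rng.random(100_000)
b = rng.random(100_000)

result = chunked_eval(a, b)
print(np.allclose(result, 2 * a + 3 * b))
```

A pure-Python loop like this will not be fast; the point is only to show why block-wise evaluation needs less working memory.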

In addition, as it is multithreaded, NumExpr can use all your CPU cores, which generally results in substantial performance scaling compared to NumPy.
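If you want to see what the multithreading contributes on your own machine, NumExpr lets you change the thread count at run time. The sketch below assumes numexpr is installed and uses its detect_number_of_cores and set_num_threads functions; the array size and expression are arbitrary choices for illustration.

```python
import numpy as np
import numexpr as ne

n_cores = ne.detect_number_of_cores()
print(f"Cores detected by NumExpr: {n_cores}")

a = np.arange(1_000_000, dtype=np.float64)

# set_num_threads returns the previous setting, so it can be restored later
previous = ne.set_num_threads(1)
single = ne.evaluate("2*a + 1")

ne.set_num_threads(previous)
multi = ne.evaluate("2*a + 1")

# The thread count affects speed only, not the result
print(np.array_equal(single, multi))
```

Wrapping each evaluate call in timeit would show how much of the speed-up on your hardware comes from threading rather than from block-wise evaluation.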

Setting up a development environment

Before we start coding, let’s set up our development environment. The best practice is to create a separate Python environment where you can install any necessary software and experiment with coding, knowing that anything you do in this environment won’t affect the rest of your system. I use conda for this, but you can use whatever method you know best that suits you.

If you want to go down the Miniconda route and don’t already have it, you must install Miniconda first. Get it using this link:

https://www.anaconda.com/docs/main

1/ Create our new dev environment and install the required libraries

(base) $ conda create -n numexpr_test python=3.12 -y

(base) $ conda activate numexpr_test

(numexpr_test) $ pip install numexpr

(numexpr_test) $ pip install jupyter

(numexpr_test) $ pip install scipy pillow matplotlib

2/ Start Jupyter

Now type jupyter notebook at your command prompt. A Jupyter notebook should open in your browser. If that doesn’t happen automatically, you’ll likely see a screenful of information after the jupyter notebook command. Near the bottom, you will find a URL that you should copy and paste into your browser to launch the Jupyter Notebook.

Your URL will be different to mine, but it should look something like this:

http://127.0.0.1:8888/tree?token=3b9f7bd07b6966b41b68e2350721b2d0b6f388d248cc69

Comparing NumExpr and NumPy performance

To compare the performance, we’ll run a series of numerical computations using NumPy and NumExpr, and time both systems.
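A single timeit measurement can be noisy, especially on a busy machine. One common refinement, which was not used for the figures reported in this article, is to repeat the measurement several times and keep the fastest run. A minimal sketch, where best_time is a hypothetical helper name:

```python
import timeit

def best_time(func, number=100, repeat=5):
    """Return the fastest total time in seconds over `repeat` runs of `number` calls each."""
    return min(timeit.repeat(func, number=number, repeat=repeat))

# Stand-in workload; swap in the NumPy or NumExpr expression you want to measure
elapsed = best_time(lambda: sum(range(1000)), number=100, repeat=3)
print(f"Best of 3 runs: {elapsed:.6f} seconds for 100 calls")
```

Taking the minimum rather than the mean reduces the influence of other processes that happen to be running during the benchmark.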

Example 1 — A simple array addition calculation

In this example, we time a vectorised weighted sum of two large arrays (2*a + 3*b, with one million elements each), repeated 5000 times.

import numpy as np

import numexpr as ne

import timeit

a = np.random.rand(1000000)

b = np.random.rand(1000000)

# Using timeit with lambda functions

time_np_expr = timeit.timeit(lambda: 2*a + 3*b, number=5000)

time_ne_expr = timeit.timeit(lambda: ne.evaluate("2*a + 3*b"), number=5000)

print(f"Execution time (NumPy): {time_np_expr} seconds")

print(f"Execution time (NumExpr): {time_ne_expr} seconds")

>>>>>>>>>>>

Execution time (NumPy): 12.03680682599952 seconds

Execution time (NumExpr): 1.8075962659931974 seconds

I have to say, that’s a pretty impressive start from the NumExpr library already. I make that roughly a 6.7 times improvement over the NumPy runtime.

Let’s double-check that both operations return the same result set.

# Arrays to store the results

result_np = 2*a + 3*b

result_ne = ne.evaluate("2*a + 3*b")

# Ensure the two new arrays are equal

arrays_equal = np.array_equal(result_np, result_ne)

print(f"Arrays equal: {arrays_equal}")

>>>>>>>>>>>>

Arrays equal: True

Example 2 — Calculate Pi using a Monte Carlo simulation

Our second example will examine a more complicated use case with more real-world applications.

Monte Carlo simulations involve running many iterations of a random process to estimate a system’s properties, which can be computationally intensive.

In this case, we’ll use Monte Carlo to calculate the value of Pi. This is a well-known example where we take a square with a side length of one unit and inscribe a quarter circle inside it with a radius of one unit. The ratio of the quarter circle’s area to the square’s area is (π/4)/1, and we can multiply this expression by four to get π on its own.

So, if we consider numerous random (x,y) points that all lie within or on the bounds of the square, then as the total number of these points tends to infinity, the ratio of points that lie on or inside the quarter circle to the total number of points tends towards π/4. Multiplying that ratio by four gives our estimate of Pi.
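To make the convergence concrete, here is a small sketch that repeats the estimate with increasing sample counts. The seed and the sample sizes are arbitrary choices for illustration, and the exact figures you see will depend on them.

```python
import numpy as np

rng = np.random.default_rng(seed=42)  # seed chosen arbitrarily for repeatability

errors = {}
for n in (1_000, 100_000, 1_000_000):
    x = rng.random(n)
    y = rng.random(n)
    # Fraction of points on or inside the quarter circle; tends towards pi/4
    fraction_inside = np.mean(x**2 + y**2 <= 1.0)
    estimate = 4 * fraction_inside
    errors[n] = abs(estimate - np.pi)
    print(f"n={n:>9,}: estimate={estimate:.5f}, abs error={errors[n]:.5f}")
```

The error shrinks slowly, roughly in proportion to one over the square root of the number of samples, which is why the benchmarks use a million points per estimate.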

First, the NumPy implementation.

import numpy as np

import timeit

def monte_carlo_pi_numpy(num_samples):

    x = np.random.rand(num_samples)

    y = np.random.rand(num_samples)

    inside_circle = (x**2 + y**2) <= 1.0

    pi_estimate = (np.sum(inside_circle) / num_samples) * 4

    return pi_estimate

# Benchmark the NumPy version

num_samples = 1000000

time_np_expr = timeit.timeit(lambda: monte_carlo_pi_numpy(num_samples), number=1000)

pi_estimate = monte_carlo_pi_numpy(num_samples)

print(f"Estimated Pi (NumPy): {pi_estimate}")

print(f"Execution Time (NumPy): {time_np_expr} seconds")

>>>>>>>>

Estimated Pi (NumPy): 3.144832

Execution Time (NumPy): 10.642843848007033 seconds

Now, using NumExpr.

import numpy as np

import numexpr as ne

import timeit

def monte_carlo_pi_numexpr(num_samples):

    x = np.random.rand(num_samples)

    y = np.random.rand(num_samples)

    inside_circle = ne.evaluate("(x**2 + y**2) <= 1.0")

    pi_estimate = (np.sum(inside_circle) / num_samples) * 4 # Use NumPy for summation

    return pi_estimate

# Benchmark the NumExpr version

num_samples = 1000000

time_ne_expr = timeit.timeit(lambda: monte_carlo_pi_numexpr(num_samples), number=1000)

pi_estimate = monte_carlo_pi_numexpr(num_samples)

print(f"Estimated Pi (NumExpr): {pi_estimate}")

print(f"Execution Time (NumExpr): {time_ne_expr} seconds")

>>>>>>>>>>>>>>>

Estimated Pi (NumExpr): 3.141684

Execution Time (NumExpr): 8.077501275009126 seconds

OK, so the speed-up was not as impressive that time, but a run time roughly 24% lower isn’t terrible either. Part of the reason is that only the circle test runs through NumExpr here: the random number generation and the final summation are still done by NumPy in both versions.
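Another factor worth checking is how much of each call is spent drawing the random numbers, which both versions do with NumPy. The sketch below times the sampling and the circle test separately; the function names draw_samples and circle_test and the repeat count are assumptions made for illustration, and the balance between the two will vary by machine.

```python
import timeit
import numpy as np

num_samples = 1_000_000

def draw_samples():
    """Generate the random points (identical work in both benchmarked versions)."""
    return np.random.rand(num_samples), np.random.rand(num_samples)

x, y = draw_samples()

def circle_test():
    """Only the part of the calculation that an expression evaluator could speed up."""
    return (np.sum((x**2 + y**2) <= 1.0) / num_samples) * 4

time_sampling = timeit.timeit(draw_samples, number=20)
time_test = timeit.timeit(circle_test, number=20)

print(f"Sampling:    {time_sampling:.4f} seconds")
print(f"Circle test: {time_test:.4f} seconds")
```

If sampling accounts for a large share of the total, that share puts a ceiling on the speed-up any change to the circle test can deliver.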

Example 3 — Implementing a Sobel image filter

In this example, we’ll implement a Sobel filter for images. The Sobel filter is commonly used in image processing for edge detection. It calculates the image intensity gradient at each pixel, highlighting edges and intensity transitions. Our input image is of the Taj Mahal in India.
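To see what the filter responds to, it helps to try it on a tiny synthetic image before moving to a photograph. The sketch below uses only NumPy and a hypothetical helper, filter3x3, that slides a 3x3 kernel over the image without flipping it (so the sign of each gradient may differ from a true convolution, but the magnitude is unaffected). The 6x6 test image with a single vertical edge is an assumption made for illustration.

```python
import numpy as np

sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
sobel_y = sobel_x.T  # same values as the vertical-gradient kernel used in this article

def filter3x3(img, kernel):
    """Slide a 3x3 kernel over img, keeping only fully covered positions."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + h - 2, j:j + w - 2]
    return out

# Left half black, right half white: one vertical edge down the middle
img = np.zeros((6, 6))
img[:, 3:] = 255.0

gx = filter3x3(img, sobel_x)
gy = filter3x3(img, sobel_y)
magnitude = np.sqrt(gx**2 + gy**2)
print(magnitude)
```

The output is non-zero only in the columns that straddle the edge, and the vertical gradient is zero everywhere because every row of the image is identical.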

Let’s see the NumPy code running first and time it.

import numpy as np

from scipy.ndimage import convolve

from PIL import Image

import timeit

# Sobel kernels

sobel_x = np.array([[-1, 0, 1],

[-2, 0, 2],

[-1, 0, 1]])

sobel_y = np.array([[-1, -2, -1],

[ 0, 0, 0],

[ 1, 2, 1]])

def sobel_filter_numpy(image):

    """Apply Sobel filter using NumPy."""

    # Convert to grayscale as floats, so negative gradients and squares don't overflow uint8
    img_array = np.array(image.convert('L'), dtype=np.float64)

    gradient_x = convolve(img_array, sobel_x)

    gradient_y = convolve(img_array, sobel_y)

    gradient_magnitude = np.sqrt(gradient_x**2 + gradient_y**2)

    gradient_magnitude *= 255.0 / gradient_magnitude.max() # Normalize to 0-255

    return Image.fromarray(gradient_magnitude.astype(np.uint8))

# Load an example image

image = Image.open("/mnt/d/test/taj_mahal.png")

# Benchmark the NumPy version

time_np_sobel = timeit.timeit(lambda: sobel_filter_numpy(image), number=100)

sobel_image_np = sobel_filter_numpy(image)

sobel_image_np.save("/mnt/d/test/sobel_taj_mahal_numpy.png")

print(f"Execution Time (NumPy): {time_np_sobel} seconds")

>>>>>>>>>

Execution Time (NumPy): 8.093792188999942 seconds

And now the NumExpr code.

import numpy as np

import numexpr as ne

from scipy.ndimage import convolve

from PIL import Image

import timeit

# Sobel kernels

sobel_x = np.array([[-1, 0, 1],

[-2, 0, 2],

[-1, 0, 1]])

sobel_y = np.array([[-1, -2, -1],

[ 0, 0, 0],

[ 1, 2, 1]])

def sobel_filter_numexpr(image):

    """Apply Sobel filter using NumExpr for gradient magnitude computation."""

    # Convert to grayscale as floats, so negative gradients and squares don't overflow uint8
    img_array = np.array(image.convert('L'), dtype=np.float64)

    gradient_x = convolve(img_array, sobel_x)

    gradient_y = convolve(img_array, sobel_y)

    gradient_magnitude = ne.evaluate("sqrt(gradient_x**2 + gradient_y**2)")

    gradient_magnitude *= 255.0 / gradient_magnitude.max() # Normalize to 0-255

    return Image.fromarray(gradient_magnitude.astype(np.uint8))

# Load an example image

image = Image.open("/mnt/d/test/taj_mahal.png")

# Benchmark the NumExpr version

time_ne_sobel = timeit.timeit(lambda: sobel_filter_numexpr(image), number=100)

sobel_image_ne = sobel_filter_numexpr(image)

sobel_image_ne.save("/mnt/d/test/sobel_taj_mahal_numexpr.png")

print(f"Execution Time (NumExpr): {time_ne_sobel} seconds")

>>>>>>>>>>>>>

Execution Time (NumExpr): 4.938702256011311 seconds

On this occasion, using NumExpr led to a great result, running roughly 1.6 times faster than the NumPy version.

Here is what the edge-detected image looks like.

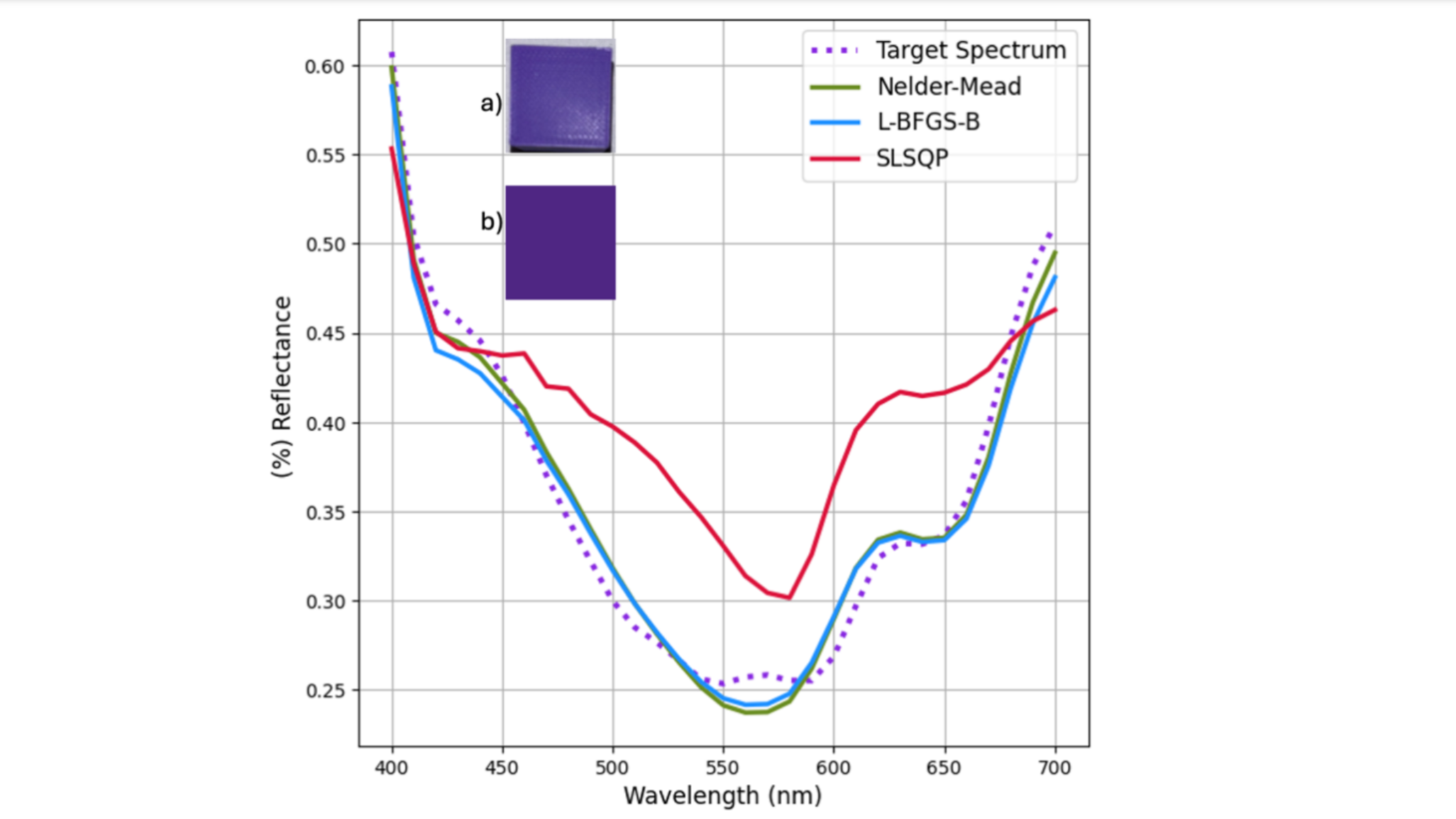

Example 4 — Fourier series approximation

It’s well known that complex periodic functions can be simulated by applying a series of sine waves superimposed on each other. At the extreme, even a square wave can be easily modelled in this way. The method is called the Fourier series approximation. Although an approximation, we can get as close to the target wave shape as memory and computational capacity allow.

The maths behind all this isn’t the primary focus. Just be aware that when we increase the number of iterations, the run-time of the solution rises markedly.
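For reference, the series summed in the code is the standard one for a square wave of frequency f: (4/π) times the sum over odd n of sin(2π·n·f·t)/n. The short sketch below, using a much smaller grid than the benchmark, shows the average error falling as more terms are added. The helper name square_wave_partial_sum, the grid size and the two term counts are assumptions made for illustration.

```python
import numpy as np

def square_wave_partial_sum(t, n_max, freq=5):
    """Sum the odd harmonics up to n_max of a square wave with the given frequency."""
    approx = np.zeros_like(t)
    for n in range(1, n_max + 1, 2):
        approx += (4 / (np.pi * n)) * np.sin(2 * np.pi * n * freq * t)
    return approx

t = np.linspace(0, 1, 10_000, endpoint=False)
target = np.sign(np.sin(2 * np.pi * 5 * t))

# Mean absolute difference from the ideal square wave
err_few = np.mean(np.abs(square_wave_partial_sum(t, 9) - target))
err_many = np.mean(np.abs(square_wave_partial_sum(t, 399) - target))

print(f"Harmonics up to n=9:   mean abs error = {err_few:.4f}")
print(f"Harmonics up to n=399: mean abs error = {err_many:.4f}")
```

Each extra term costs one full pass over the time vector, which is why the run-time grows with the number of terms.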

import numpy as np

import numexpr as ne

import time

import matplotlib.pyplot as plt

# Define the constant pi explicitly

pi = np.pi

# Generate a time vector and a square wave signal

t = np.linspace(0, 1, 1000000) # One million sample points

signal = np.sign(np.sin(2 * np.pi * 5 * t))

# Number of terms in the Fourier series

n_terms = 10000

# Fourier series approximation using NumPy

start_time = time.time()

approx_np = np.zeros_like(t)

for n in range(1, n_terms + 1, 2):

    approx_np += (4 / (np.pi * n)) * np.sin(2 * np.pi * n * 5 * t)

numpy_time = time.time() - start_time

# Fourier series approximation using NumExpr

start_time = time.time()

approx_ne = np.zeros_like(t)

for n in range(1, n_terms + 1, 2):

    approx_ne = ne.evaluate("approx_ne + (4 / (pi * n)) * sin(2 * pi * n * 5 * t)", local_dict={"pi": pi, "n": n, "approx_ne": approx_ne, "t": t})

numexpr_time = time.time() - start_time

print(f"NumPy Fourier series time: {numpy_time:.6f} seconds")

print(f"NumExpr Fourier series time: {numexpr_time:.6f} seconds")

# Plotting the results

plt.figure(figsize=(10, 6))

plt.plot(t, signal, label='Original Signal (Square Wave)', color='black', linestyle='--')

plt.plot(t, approx_np, label='Fourier Approximation (NumPy)', color='blue')

plt.plot(t, approx_ne, label='Fourier Approximation (NumExpr)', color='red', linestyle='dotted')

plt.title('Fourier Series Approximation of a Square Wave')

plt.xlabel('Time')

plt.ylabel('Amplitude')

plt.legend()

plt.grid(True)

plt.show()

And the output?

That is another pretty good result. NumExpr shows a 5 times improvement over NumPy on this occasion.

Summary

NumPy and NumExpr are both powerful libraries for numerical computation in Python. They each have unique strengths and use cases, making them suitable for different types of tasks. Here, we compared their performance on specific computational tasks, ranging from simple array arithmetic to more complex applications, such as a Sobel filter for image edge detection.

While I didn’t quite see the claimed 15x speed increase over NumPy in my tests, there’s no doubt that NumExpr can be significantly faster than NumPy in many cases.
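The speed-up factors for the first three examples can be worked out directly from the timings printed earlier. Example 4 is left out because its raw timings are not reproduced in the text; the dictionary labels are my own shorthand.

```python
# (NumPy seconds, NumExpr seconds) as reported in the examples above
timings = {
    "Example 1 (array arithmetic)": (12.03680682599952, 1.8075962659931974),
    "Example 2 (Monte Carlo Pi)": (10.642843848007033, 8.077501275009126),
    "Example 3 (Sobel filter)": (8.093792188999942, 4.938702256011311),
}

speedups = {name: np_time / ne_time for name, (np_time, ne_time) in timings.items()}

for name, speedup in speedups.items():
    print(f"{name}: {speedup:.2f}x faster with NumExpr")
```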

If you’re a heavy user of NumPy and need to extract every bit of performance from your code, I recommend trying the NumExpr library. Besides the fact that not all NumPy code can be replicated using NumExpr, there’s practically no downside, and the upside might surprise you.

For more details on the NumExpr library, check out its GitHub page: https://github.com/pydata/numexpr
