Neuromorphic Chips in 2025: A Developer's Guide to Brain-Inspired AI Hardware


Why Neuromorphic Chips Are the Future of AI

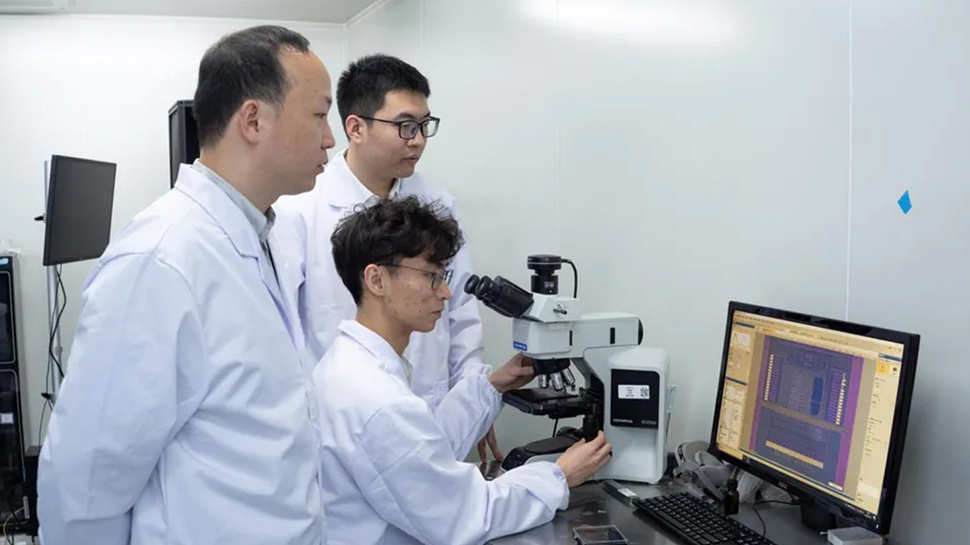

The AI hardware landscape is shifting. GPUs have dominated AI workloads so far this decade, but neuromorphic chips - processors that mimic the brain's neural architecture - are positioned to reshape edge AI in 2025. Here's what developers need to know:

# Traditional AI Inference (GPU)
output = model.predict(input_data)  # Power-hungry batch processing

# Neuromorphic Approach (Intel Loihi)
# SpikingEncoder is an illustrative placeholder, not a class from a specific SDK
spike_encoder = SpikingEncoder()  # Event-driven, ultra-low-power

Key Advantage: Neuromorphic chips like Intel's Loihi 3 reportedly consume as little as 0.1% of the power of GPUs for real-time tasks.
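The `SpikingEncoder` in the snippet above is only a placeholder name. As a rough sketch of what such an encoder might do, the pure-Python function below turns a normalized value into a spike train whose firing rate tracks the input. The function name `rate_encode` and the integrate-and-emit scheme are assumptions chosen for illustration, not part of any Loihi SDK.

```python
def rate_encode(value: float, steps: int) -> list[int]:
    """Encode a value in [0, 1] as a spike train with `steps` time slots.

    Hypothetical helper for illustration; not part of any Loihi SDK.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in the range [0, 1]")
    spikes = []
    accumulator = 0.0
    for _ in range(steps):
        accumulator += value        # integrate the input
        if accumulator >= 1.0:      # enough charge collected: emit a spike
            spikes.append(1)
            accumulator -= 1.0
        else:
            spikes.append(0)        # silent slot, nothing for downstream to do
    return spikes


if __name__ == "__main__":
    print(rate_encode(0.5, 8))  # [0, 1, 0, 1, 0, 1, 0, 1]
    print(rate_encode(0.0, 8))  # all zeros: a silent input produces no events
```

The second call hints at where the power savings come from: an input that carries no information generates no spikes, so nothing downstream has to run.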

How Neuromorphic Computing Works

Core Principles

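Spiking Neural Networks (SNNs): Neurons fire only when their internal potential crosses a threshold (like biological brains)

In-Memory Computing: Keeps synaptic weights next to the compute units, avoiding the von Neumann bottleneck of moving data between memory and processor

Event-Driven Processing: Computation happens only when spikes arrive, so no cycles are wasted on idle neurons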
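To make the threshold and event-driven ideas concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron in plain Python. It is updated only when an input event arrives, and the leak for the skipped time steps is applied in one go. The class name, the default parameters, and the discrete-time leak model are all assumptions for illustration; real chips implement their own neuron models.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    threshold: float = 1.0   # potential at which the neuron fires
    leak: float = 0.5        # fraction of potential kept per time step
    potential: float = 0.0
    last_time: int = 0

    def receive(self, time: int, weight: float) -> bool:
        """Process one input event; return True if the neuron spikes."""
        elapsed = time - self.last_time
        self.potential *= self.leak ** elapsed  # catch up on the leak lazily
        self.last_time = time
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0                # reset after firing
            return True
        return False


def spike_times(neuron: LIFNeuron, events: list[tuple[int, float]]) -> list[int]:
    """Feed (time, weight) events in time order and collect the spike times."""
    fired = []
    for time, weight in events:
        if neuron.receive(time, weight):
            fired.append(time)
    return fired


if __name__ == "__main__":
    events = [(0, 0.6), (1, 0.6), (2, 0.6), (10, 0.6)]
    print(spike_times(LIFNeuron(), events))  # [2]
```

Three closely spaced inputs push the neuron over its threshold at time 2, while the isolated input at time 10 does not. The neuron does four updates for an eleven-step window; a clock-driven loop would have touched it on every step.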

Getting Started with Neuromorphic Development

1. Hardware Options

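# Install Intel's Loihi SDK (NxSDK access is granted through Intel's Neuromorphic Research Community, so this command may fail against the public package index)
pip install nxsdk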

Intel Loihi 3 (1M neurons)

BrainChip Akida (event-based vision)

SynSense Speck (low-power IoT)

2. Programming SNNs

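import nxsdk

# Illustrative pseudocode: `neurons.SRM0`, `spike_out`, and `synapse` are placeholders, not confirmed NxSDK API
# Create a spiking neuron that fires when its potential reaches 0.5
neuron = nxsdk.neurons.SRM0(v_thresh=0.5)
neuron.spike_out.connect(synapse)  # Event-driven communication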

Pro Tip: Use Nengo for high-level SNN development.
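Without access to neuromorphic hardware, the "connect a neuron's spike output to a synapse" idea can still be tried in plain Python. The sketch below is a hypothetical event-queue simulator, not the NxSDK or Nengo API: spikes travel along weighted synapses with a delay, and neurons are touched only when an event reaches them. For brevity it uses non-leaky integrate-and-fire neurons and assumes positive delays.

```python
import heapq
from collections import defaultdict


def simulate(synapses, thresholds, input_events):
    """Propagate spikes through a small network, one event at a time.

    synapses:     {source: [(target, weight, delay), ...]}
    thresholds:   {neuron: firing threshold}
    input_events: [(time, neuron, weight), ...] external stimulation
    Returns a list of (time, neuron) spikes in the order they occurred.
    """
    potentials = defaultdict(float)
    queue = list(input_events)
    heapq.heapify(queue)                       # earliest event first
    spikes = []
    while queue:
        time, neuron, weight = heapq.heappop(queue)
        potentials[neuron] += weight           # integrate the incoming event
        if potentials[neuron] >= thresholds[neuron]:
            potentials[neuron] = 0.0           # reset after firing
            spikes.append((time, neuron))
            # Schedule a delayed event on every outgoing synapse
            for target, syn_weight, delay in synapses.get(neuron, []):
                heapq.heappush(queue, (time + delay, target, syn_weight))
    return spikes


if __name__ == "__main__":
    synapses = {"a": [("b", 0.6, 1)]}          # a -> b, weight 0.6, delay 1
    thresholds = {"a": 0.5, "b": 1.0}
    stimulation = [(0, "a", 0.5), (3, "a", 0.5)]
    print(simulate(synapses, thresholds, stimulation))
    # [(0, 'a'), (3, 'a'), (4, 'b')]
```

Neuron `b` needs two spikes from `a` before it fires, so it stays silent until time 4. A priority queue keeps the work proportional to the number of spikes rather than to the number of neurons times the number of time steps.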

Real-World Use Cases

1. Edge AI

// Smart camera with neuromorphic processing
camera.on('motion', (spikes) => {
  chip.process(spikes); // Runs on-device, no cloud dependency
});
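The motion callback above assumes the camera already delivers spikes. As a simplified sketch of where those events could come from, the Python function below compares two grayscale frames and emits an event only for pixels whose brightness changed by more than a threshold. The function name, the `(row, col, polarity)` event format, and the threshold value are assumptions; real event cameras work per pixel in hardware and typically respond to changes in log intensity.

```python
def frame_to_events(previous, current, threshold=10):
    """Return (row, col, polarity) events for pixels that changed noticeably.

    previous, current: 2D lists of grayscale values with the same shape.
    polarity is +1 for a brighter pixel and -1 for a darker one.
    """
    events = []
    for r, (prev_row, cur_row) in enumerate(zip(previous, current)):
        for c, (old, new) in enumerate(zip(prev_row, cur_row)):
            diff = new - old
            if abs(diff) > threshold:          # unchanged pixels emit nothing
                events.append((r, c, 1 if diff > 0 else -1))
    return events


if __name__ == "__main__":
    before = [[10, 10], [10, 10]]
    after = [[10, 50], [10, 10]]
    print(frame_to_events(before, after))   # [(0, 1, 1)]
    print(frame_to_events(before, before))  # [] : a static scene is silent
```

A static scene produces an empty event list, which is the property that lets an always-on camera idle at very low power.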

2. Robotics

Boston Dynamics' next-gen robots use neuromorphic chips for 10x longer battery life

3. Healthcare

Real-time seizure prediction with 95% accuracy (Mayo Clinic trials)

(More applications in our edge AI trends report)

Challenges to Consider

- New Programming Paradigms:

# Different from traditional deep learning

model = SNN(layers=[SpikingDense(128)])

- Limited Tooling (vs. PyTorch/TensorFlow)

- Hybrid Architectures are often needed
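To show why the programming model feels different, here is a sketch of what a layer like the `SpikingDense` in the snippet above might look like in plain Python. This class is a hypothetical stand-in, not the API of any framework. Unlike a conventional dense layer, it keeps state between calls, consumes and produces binary spikes, and is stepped once per time slot.

```python
class SpikingDense:
    """Hypothetical fully connected layer of leaky integrate-and-fire neurons."""

    def __init__(self, weights, threshold=1.0, leak=0.9):
        self.weights = weights                  # weights[i][j]: input i -> output j
        self.threshold = threshold
        self.leak = leak                        # fraction of potential kept per step
        self.potentials = [0.0] * len(weights[0])

    def step(self, input_spikes):
        """Advance one time step; return a list of 0/1 output spikes."""
        self.potentials = [v * self.leak for v in self.potentials]
        for i, spiked in enumerate(input_spikes):
            if spiked:                          # skip silent inputs entirely
                for j, w in enumerate(self.weights[i]):
                    self.potentials[j] += w
        output = []
        for j, v in enumerate(self.potentials):
            if v >= self.threshold:
                output.append(1)
                self.potentials[j] = 0.0        # reset neurons that fired
            else:
                output.append(0)
        return output


if __name__ == "__main__":
    layer = SpikingDense([[0.5, 0.0], [0.5, 1.0]], threshold=1.0, leak=1.0)
    print(layer.step([1, 0]))  # [0, 0]
    print(layer.step([1, 0]))  # [1, 0]
    print(layer.step([0, 1]))  # [0, 1]
```

The same input `[1, 0]` gives different outputs on the first and second call because the layer remembers accumulated potential. That time dependence is the main adjustment for developers used to stateless feed-forward layers.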

The Road Ahead

With a projected $1.3B market by 2030, neuromorphic computing is expected to power:

✅ Always-on IoT devices

✅ Autonomous vehicle decision-making

✅ Privacy-preserving AI

Want to go deeper? Explore our full neuromorphic chip breakdown with performance benchmarks and buying guides.

Discussion:

What's your experience with neuromorphic hardware? Share your setup in the comments!
