My Understanding of DevOps Engineering

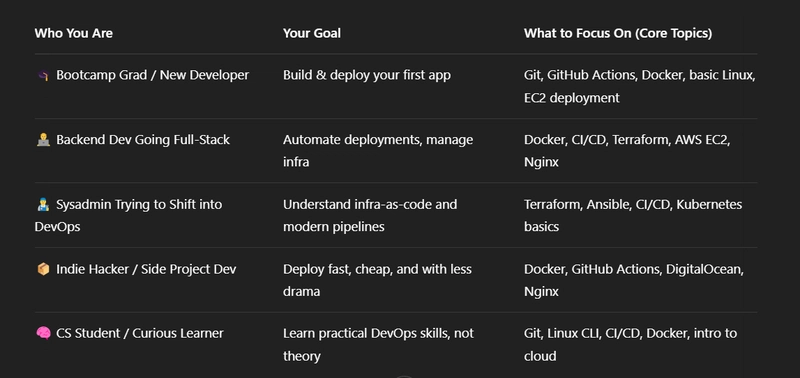

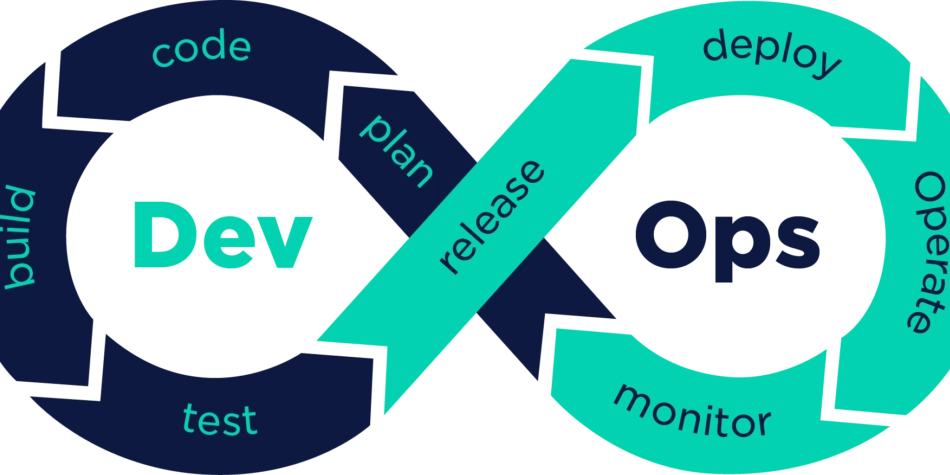

What is DevOps?

DevOps, as the name suggests, combines Development and Operations into a unified approach. A DevOps Engineer orchestrates the entire journey of an application: planning, coding, building, testing, releasing, deploying, and monitoring. This comprehensive process ensures applications work seamlessly for end-users and integrate properly with other services.

I see DevOps Engineers as master planners who understand the complete Software Development Life Cycle (SDLC). Success in this role requires both technical expertise and strong communication skills, and communication is especially crucial when coordinating across teams, from developers to stakeholders.

The DevOps Lifecycle

Planning

Planning begins with careful thinking about the development and production environments in which the code will be built and run. For example, imagine a client named Alex who needs a web-based analytical platform for Business Intelligence. The DevOps Engineer would need to carefully consider all technical components.

Drawing on development knowledge, the engineer would select appropriate libraries and services for Alex's project-specific needs. This means evaluating both proprietary and open-source packages, and choosing among established cloud platforms like AWS, Azure, GCP, DigitalOcean, Linode, and Oracle Cloud.

After this critical thinking phase, development can move forward confidently. For Alex's hypothetical project, React.js might be ideal for the frontend (a robust and popular library) and FastAPI for the backend (a flexible, unopinionated Python framework), along with essential services including storage, databases, compute resources, networking, and Content Delivery Network (CDN) capabilities. This thorough planning establishes the foundation for the entire SDLC.

Coding

During the coding stage, development teams work collaboratively to create the components that form the business logic of the application. This typically involves frontend developers, backend developers, and database administrators working in concert. Additional team members often include project managers, data analysts, and data scientists who provide specialized expertise.

The DevOps Engineer's role during this phase involves ensuring these diverse teams can work efficiently within well-structured environments.

Building, Testing, Releasing, and Deploying

These phases represent the core of the SDLC, where source code is built, validated, and packaged into a production-ready application that serves end-users. This process, known as the CI/CD Pipeline (Continuous Integration/Continuous Delivery or Deployment), is where most of the automation happens.

By automating the software development workflow, DevOps makes development less stressful and reduces complexity for the entire team. Automated tests run as part of this process and catch potential issues before they reach production, improving code quality.
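To make the idea of an automated pipeline concrete, here is a minimal Python sketch of the "fail fast" behaviour that CI/CD tools generally share: stages run in order, and the first failure stops everything after it. The `run_pipeline` helper and the stage names are hypothetical; real CI/CD tools define stages in their own configuration formats and run real build and test commands.

```python
from typing import Callable

# A stage is a (name, function) pair; the function returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns a log with one "<stage>: ok" or "<stage>: failed" entry per stage run.
    """
    log: list[str] = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            # A failed stage blocks every later stage, so a broken build
            # never reaches release or deployment.
            break
    return log


if __name__ == "__main__":
    # Hypothetical stages; a real pipeline would call a compiler, a test runner, etc.
    stages: list[Stage] = [
        ("build", lambda: True),
        ("test", lambda: False),  # simulate a failing test suite
        ("release", lambda: True),
        ("deploy", lambda: True),
    ]
    for entry in run_pipeline(stages):
        print(entry)
```

Running the script prints `build: ok` and then `test: failed`; the release and deploy stages never run, which is exactly the protection a pipeline gives against shipping a broken build.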

Various CI/CD tools can be used depending on project requirements, including GitHub Actions, GitLab CI/CD, Bitbucket Pipelines, Jenkins, CircleCI, and Argo CD (a GitOps tool for continuous delivery to Kubernetes). Major cloud platforms also offer their own solutions, such as AWS CodePipeline and Azure Pipelines. Some platforms, such as GitLab and Bitbucket, provide built-in CI/CD capabilities alongside code hosting, which reduces integration complexity.

The Mechanics of CI/CD

Continuous Integration is the practice of frequently merging every developer's changes into a shared repository (hosted on a platform like GitHub or GitLab). Each time a developer commits or merges code, automated tests verify its quality. This Continuous Testing approach includes four critical testing types:

Unit Testing – Verifies that individual units of code, such as functions and methods, behave as expected. For frontend code, tools like Jest or Mocha test specific components in isolation.

Integration Testing – Confirms that modules, services, and components work together correctly. This testing ensures different parts of the application communicate and function cohesively.

Regression Testing – Re-runs the existing tests after every change to confirm that features that worked in previous builds still work. Tests written for bugs fixed in earlier builds stay in the suite, which prevents those old bugs from reappearing.

Code Quality Testing – Evaluates code against established standards. For example, if a junior developer uses 'var' instead of 'const' for a variable that is never reassigned, static analysis tools like SonarQube or Qodana can flag the issue automatically, so senior developers do not have to review every line of code by hand. Check out The CTO Club for other code quality tools.
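The testing types above can be tied together in one small example. The Python sketch below implements a toy version of the 'var' rule just described and checks it with pytest-style tests: two unit tests and one regression test. The function `find_var_declarations` and the "earlier bug" it guards against are hypothetical, and the regular expression is only an approximation; real code quality tools parse the code rather than pattern-matching it.

```python
import re

# A `var` declaration: the standalone word "var" followed by whitespace.
# Identifiers such as `variable` or `myvar` do not match. This is a rough
# approximation: unlike a real linter, it would also match "var " inside
# strings or comments.
VAR_DECLARATION = re.compile(r"\bvar\s")


def find_var_declarations(js_source: str) -> list[int]:
    """Return the 1-based line numbers of lines that declare a variable with `var`."""
    return [
        line_number
        for line_number, line in enumerate(js_source.splitlines(), start=1)
        if VAR_DECLARATION.search(line)
    ]


# Unit tests: exercise the function in isolation on small, known inputs.
def test_flags_var_declarations():
    source = "var count = 0;\nconst limit = 10;\n  var total = 5;"
    assert find_var_declarations(source) == [1, 3]


def test_ignores_const_and_let():
    assert find_var_declarations("const a = 1;\nlet b = 2;") == []


# Regression test: pins down a (hypothetical) earlier bug in which identifiers
# that merely start with "var" were flagged, so that bug cannot quietly return.
def test_ignores_identifiers_starting_with_var():
    assert find_var_declarations("const variable = 1;") == []
```

Running `pytest` against this file executes all three tests; in a CI pipeline, any failure among them would stop the build before the code is released.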

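The final stage of the CI/CD Pipeline is Continuous Delivery or Continuous Deployment (CD). At this point, successful builds are published to an artifact registry (such as Docker Hub, Nexus, or Amazon Elastic Container Registry (ECR)) and then deployed to a testing or production environment; the production deployment is the actual application release that users will experience. With Continuous Delivery, every successful build is ready to release, but promotion to production requires a manual approval. With Continuous Deployment, it is released to production automatically.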
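The "Delivery or Deployment" distinction comes down to a single gate in front of production. The Python sketch below shows where a build would be promoted under each model. The `Build` class, the target names, and the approval flag are illustrative assumptions, not the API of any CI/CD tool.

```python
from dataclasses import dataclass


@dataclass
class Build:
    version: str
    tests_passed: bool


def environments_to_deploy(build: Build, continuous_deployment: bool,
                           approved_for_production: bool = False) -> list[str]:
    """Return the places a build is promoted to under a simplified policy.

    Continuous Deployment: a green build goes all the way to production.
    Continuous Delivery: it stops before production until someone approves it.
    """
    if not build.tests_passed:
        return []  # failed builds are never published
    targets = ["artifact-registry", "testing"]
    if continuous_deployment or approved_for_production:
        targets.append("production")
    return targets


if __name__ == "__main__":
    build = Build(version="1.4.2", tests_passed=True)
    print(environments_to_deploy(build, continuous_deployment=True))
    # ['artifact-registry', 'testing', 'production']
    print(environments_to_deploy(build, continuous_deployment=False))
    # ['artifact-registry', 'testing']
```

In a real setup, the approval flag would typically correspond to a manual approval step in the CI/CD tool rather than a function argument.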

During this phase, Infrastructure Provisioning is implemented using tools like Terraform or Pulumi. This creates and configures the precise environment and services needed to run the application efficiently. This infrastructure-as-code (IaC) approach allows for upgrading resources or removing unused services as required.
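Tools like Terraform and Pulumi work declaratively: you describe the infrastructure you want, and the tool works out what has to change. The toy Python sketch below illustrates only that diffing idea. It is not Terraform's or Pulumi's API, and resources are reduced to hypothetical name-to-settings dictionaries.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Work out which resources to create, update, or delete.

    Both arguments map a resource name to its settings. `desired` is what the
    configuration declares; `current` is what actually exists.
    """
    return {
        "create": sorted(name for name in desired if name not in current),
        "update": sorted(
            name for name in desired
            if name in current and desired[name] != current[name]
        ),
        "delete": sorted(name for name in current if name not in desired),
    }


if __name__ == "__main__":
    # Hypothetical resources for Alex's platform.
    desired = {
        "web-server": {"size": "large"},
        "database": {"engine": "postgres"},
    }
    current = {
        "web-server": {"size": "small"},
        "old-cache": {"engine": "redis"},
    }
    print(plan(desired, current))
    # {'create': ['database'], 'update': ['web-server'], 'delete': ['old-cache']}
```

Here the web server's size changed, so it is updated; the database is new, so it is created; and `old-cache` is no longer declared, so it is removed. That covers both upgrading resources and cleaning up unused services.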

Monitoring

Continuous monitoring of both the production environment and application is essential to DevOps practice. This vigilance helps detect anomalies and analyze traffic patterns before they impact users.

The consequences of inadequate monitoring can be severe: service downtime, customer loss, and unexpectedly high cloud infrastructure costs. Specialized tools, such as Amazon CloudWatch on AWS, simplify monitoring and help prevent these issues.
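A common way to turn raw metrics into alerts is to fire only when a threshold is breached in enough of the most recent evaluation periods, so that a single spike does not page anyone. The Python function below is a simplified sketch of that idea, loosely inspired by the "datapoints to alarm out of evaluation periods" setting on CloudWatch alarms. It is not the CloudWatch API, and the threshold and sample values are arbitrary.

```python
def should_alarm(samples: list[float], threshold: float,
                 periods: int, datapoints_to_alarm: int) -> bool:
    """Return True if at least `datapoints_to_alarm` of the last `periods`
    samples are above `threshold`."""
    if not 0 < datapoints_to_alarm <= periods:
        raise ValueError("need 0 < datapoints_to_alarm <= periods")
    recent = samples[-periods:]  # only the most recent evaluation window counts
    breaches = sum(1 for value in recent if value > threshold)
    return breaches >= datapoints_to_alarm


if __name__ == "__main__":
    sustained_load = [35.0, 40.0, 92.0, 95.0, 97.0]  # CPU %, one sample per minute
    brief_spike = [35.0, 92.0, 40.0, 38.0, 36.0]
    print(should_alarm(sustained_load, threshold=80.0, periods=3, datapoints_to_alarm=3))  # True
    print(should_alarm(brief_spike, threshold=80.0, periods=3, datapoints_to_alarm=3))     # False
```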

Prometheus collects critical metrics like CPU and memory usage, while Grafana transforms this data into intuitive visualizations. Combining these tools creates a powerful system for tracking application resource usage. For more detailed analysis, especially of logs, the ELK stack (Elasticsearch, Logstash, Kibana) provides advanced search capabilities: Logstash ingests and processes the data, Elasticsearch stores and indexes it, and Kibana serves as the visualization layer.
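Prometheus works by scraping metrics that applications expose over HTTP in a plain-text exposition format. To show what Prometheus actually reads, the Python sketch below renders a single gauge in that format by hand. The metric name and the `instance` label values are hypothetical, and in practice you would use an official client library such as `prometheus_client` instead.

```python
def _escape_label_value(value: str) -> str:
    # Label values must escape backslash, double quote, and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name: str, help_text: str, samples: dict[str, float]) -> str:
    """Render one gauge metric, with one sample per `instance` label value."""
    # HELP text must escape backslash and newline.
    help_escaped = help_text.replace("\\", "\\\\").replace("\n", "\\n")
    lines = [f"# HELP {name} {help_escaped}", f"# TYPE {name} gauge"]
    for instance, value in samples.items():
        lines.append(f'{name}{{instance="{_escape_label_value(instance)}"}} {value}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_gauge("app_cpu_usage_percent", "CPU usage of the app in percent.",
                       {"web-1": 42.5, "web-2": 61.0}), end="")
    # # HELP app_cpu_usage_percent CPU usage of the app in percent.
    # # TYPE app_cpu_usage_percent gauge
    # app_cpu_usage_percent{instance="web-1"} 42.5
    # app_cpu_usage_percent{instance="web-2"} 61.0
```

Grafana then queries Prometheus for these stored samples to draw its dashboards.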

The DevOps Impact

Through my understanding of these DevOps practices, I can see how proper planning, automation, and monitoring can transform application development and deployment. This systematic approach not only improves technical operations but also delivers tangible business benefits through faster, more reliable software delivery.

What aspects of DevOps do you find most valuable in your work? I would love to connect with fellow professionals interested in this field!