Multiplayer VR Development using Unity XR Interaction Toolkit and Photon Fusion 2


This blog post is taken from the book Unity for Multiplayer VR Development. Check out my other books on my Amazon Author Page.

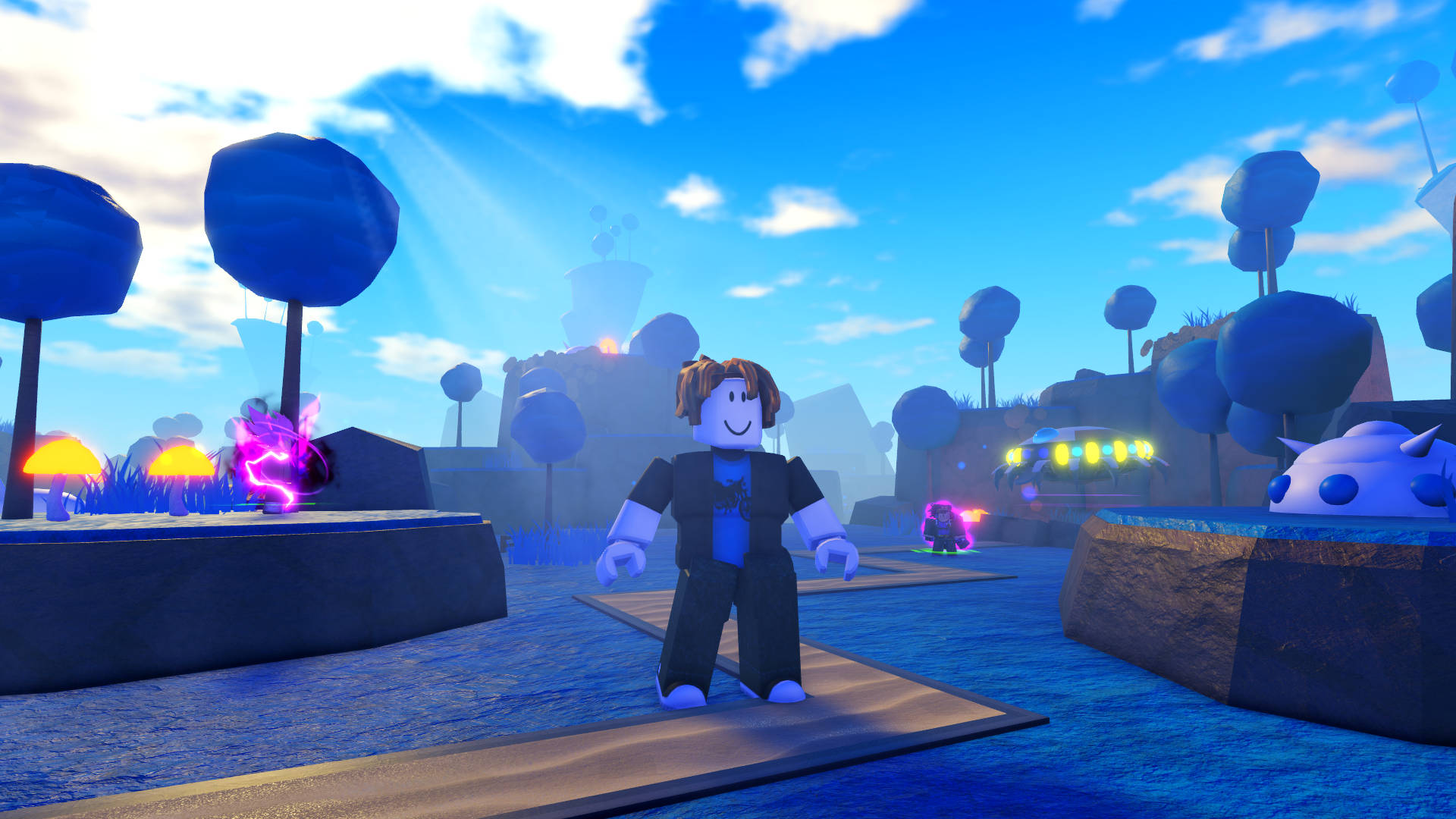

With VR technology becoming more affordable and realistic, we’re witnessing a rise in Metaverse and Social VR applications across various industries. Users from around the world can now connect in shared virtual spaces, talk via voice chat, and interact with common elements in a collaborative environment. Whether you're a developer exploring new technology, creating a demo for a client, or working on your next business idea, building a Social VR experience is more achievable than ever.

This post will help you take the first steps and plan your journey in the right direction.

The Evolving Use Cases of VR

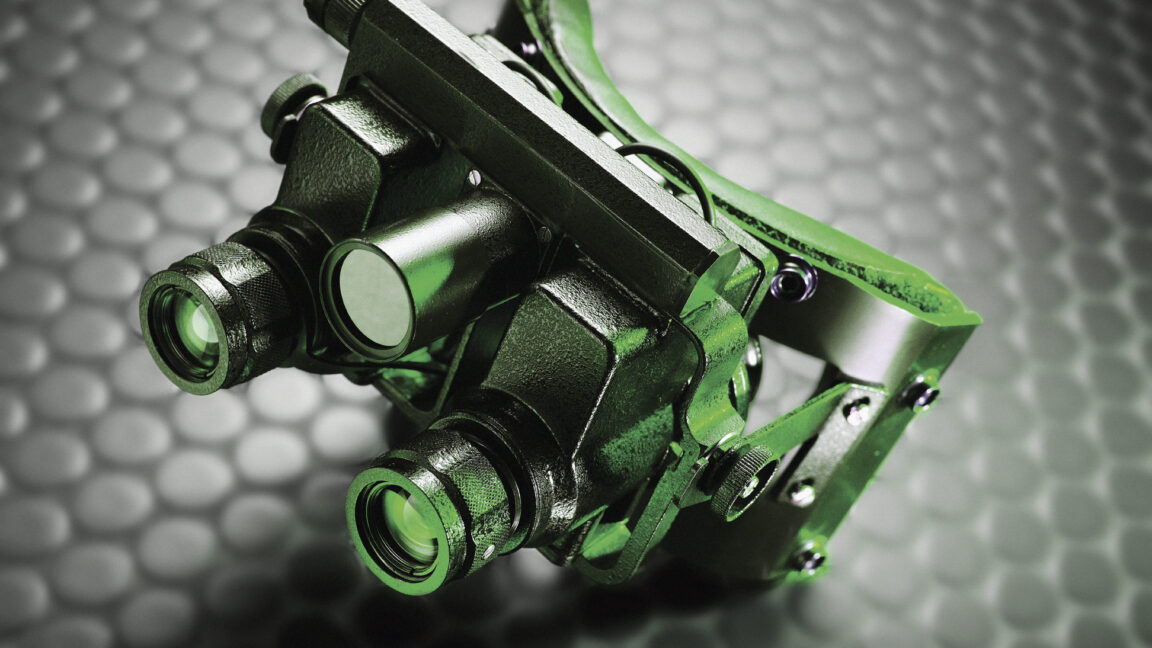

Like every emerging technology, VR initially went through a hype phase. While that hype is still alive, VR is now steadily gaining traction in real-world applications. From defense training, medical simulation, and safety drills to manufacturing training and virtual tourism, the list of use cases continues to grow. Major brands like Meta, Apple, HTC, and PICO are releasing new devices, and significant investment is pouring into the space.

Roadmap to Building a Social VR Experience

Let’s outline a basic roadmap to develop VR Metaverse applications. These are core features that are practically essential for most social VR platforms:

✅ Feature 1: VR Avatars Can Join a Multiplayer Session

Create networked sessions where multiple users can join the same virtual environment.

✅ Feature 2: VR Avatars Can See Other Joined Avatars

Enable full-body or head-hand avatars so that users can see each other’s presence in real time.

✅ Feature 3: VR Avatars Can Communicate with Voice Chat

Integrate voice chat so users can talk naturally, as they would in the real world.

✅ Feature 4: VR Avatars Can Interact with Interactable Items

Add interactive elements (like doors, levers, tools, or whiteboards) that users can use collaboratively.

Tech Stack We'll Use

To implement these features, we’ll use the following tools:

- Game Engine: Unity

- VR Interaction SDK: XR Interaction Toolkit with OpenXR (for cross-platform compatibility)

- Multiplayer SDK: Photon Fusion 2 (for performant and flexible networking)

Let's look at each feature in detail and at how it can be implemented with this tech stack.

Feature 1: VR Avatars Can Join a Multiplayer Session

This feature enables users to enter a shared virtual environment where they can interact with other players in real time. It forms the backbone of the multiplayer experience and requires a robust networking layer to manage player connections and session handling.

Technical Requirements:

- Use Photon Fusion 2.0 as the multiplayer framework. Photon Fusion will handle the creation and joining of sessions, player synchronization, and real-time communication between clients.

- Develop a lobby or matchmaking system using Photon Fusion’s capabilities, allowing players to either create new sessions or join existing ones with minimal latency.
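As a rough sketch of the session requirement above, the script below starts or joins a named Fusion session. The class name `SessionStarter`, the `runnerPrefab` field, and the use of Shared mode are illustrative assumptions, not something this post prescribes; Host/Client topologies are selected through the same `GameMode` field.

```csharp
using Fusion;
using UnityEngine;

// Hypothetical helper (name is an assumption): call JoinOrCreate from a menu button.
public class SessionStarter : MonoBehaviour
{
    // Assumption: a prefab holding a NetworkRunner and no scene manager of its own.
    [SerializeField] private NetworkRunner runnerPrefab;

    private NetworkRunner runner;

    public async void JoinOrCreate(string sessionName)
    {
        runner = Instantiate(runnerPrefab);

        // In Shared mode, a session with this name is joined if it exists
        // and created otherwise.
        StartGameResult result = await runner.StartGame(new StartGameArgs
        {
            GameMode = GameMode.Shared,
            SessionName = sessionName,
            SceneManager = runner.gameObject.AddComponent<NetworkSceneManagerDefault>()
        });

        if (!result.Ok)
        {
            Debug.LogError($"Could not start session '{sessionName}': {result.ShutdownReason}");
        }
    }
}
```

A lobby screen would typically collect the session name from the user and pass it to `JoinOrCreate`.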

Feature 2: VR Avatars Can See Other Joined Avatars

Visual representation of other players is crucial for immersion in a multiplayer VR environment. This feature allows users to see and interact with avatars representing other players, enhancing the sense of presence.

Technical Requirements:

- Use Photon Fusion 2.0 to synchronize avatar state across the network, so that all players see each other’s movements and actions in real time.

- Implement an avatar system that accurately reflects head, hand, and body movements. Photon Fusion will manage the spawning and synchronization of avatars, ensuring they are consistently displayed across all connected clients.
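The spawning part of these requirements could look roughly like the sketch below, which follows the pattern used in Photon's Shared mode tutorials. It assumes Shared mode, assumes the component sits on the same GameObject as the `NetworkRunner` so that Fusion invokes `PlayerJoined`, and uses a hypothetical `avatarPrefab` that carries a `NetworkObject`.

```csharp
using Fusion;
using UnityEngine;

// Sketch only: each client spawns its own avatar when it joins the session.
public class AvatarSpawner : SimulationBehaviour, IPlayerJoined
{
    // Assumption: a networked avatar prefab registered with Fusion.
    public GameObject avatarPrefab;

    public void PlayerJoined(PlayerRef player)
    {
        // In Shared mode every client receives this callback for every player,
        // so only react to our own join.
        if (player == Runner.LocalPlayer)
        {
            Runner.Spawn(avatarPrefab, new Vector3(0f, 0f, 0f), Quaternion.identity);
        }
    }
}
```

Because the object is spawned through the runner, Fusion creates a matching instance on every other connected client.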

Feature 3: VR Avatars Can Communicate with Voice Chat

Voice communication is vital for social interaction and collaboration in multiplayer VR. This feature allows players to speak to each other in real time, adding a layer of realism and enhancing the overall experience.

Technical Requirements:

- Integrate Photon Voice, a separate Photon SDK that works alongside Photon Fusion, to enable real-time voice chat. Photon Voice will handle audio transmission between players, aiming for low latency and clear communication.

- Implement features such as proximity-based voice chat, where players’ voices become louder or softer depending on their virtual distance, adding a sense of realism to the communication.
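One simple way to approximate proximity-based voice is to let Unity's 3D audio do the attenuation. The sketch below assumes the remote player's voice is played through an `AudioSource` on that player's avatar (as is typical when a Photon Voice speaker is attached to the avatar prefab); the class name and the distance values are placeholders.

```csharp
using UnityEngine;

// Sketch only: makes the avatar's voice fully positional with a linear falloff.
[RequireComponent(typeof(AudioSource))]
public class ProximityVoice : MonoBehaviour
{
    // Placeholder distances in meters; tune them for your scene scale.
    [SerializeField] private float fullVolumeDistance = 1f;
    [SerializeField] private float silentDistance = 15f;

    private void Awake()
    {
        AudioSource source = GetComponent<AudioSource>();

        source.spatialBlend = 1f;                      // 1 = fully 3D, 0 = flat 2D
        source.rolloffMode = AudioRolloffMode.Linear;  // volume falls off linearly
        source.minDistance = fullVolumeDistance;       // full volume inside this radius
        source.maxDistance = silentDistance;           // attenuation ends at this radius
    }
}
```

Linear rolloff is chosen here because it is easy to reason about; a logarithmic curve sounds more natural in large spaces.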

Feature 4: VR Avatars Can Interact with Interactable Items

Interaction with virtual objects is a key component of immersive VR experiences. This feature allows avatars to manipulate and interact with various items within the environment, making the virtual world more dynamic and engaging.

Technical Requirements:

- Leverage the XR Interaction Toolkit to define and create interactable items within the VR environment. Implement interaction logic such as grabbing, moving, or activating items.

- Use Photon Fusion 2.0 to synchronize these interactions across all players in the session. When one player interacts with an item, the change should be reflected in real time for all connected players, ensuring a consistent experience.
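A minimal sketch of how the two toolkits can meet: when a player grabs an item, that player asks Fusion for state authority over it so their movement of the item is the one that gets replicated. This assumes Shared mode, an item that is already spawned and has a `NetworkObject` plus a `NetworkTransform` to replicate its pose, and XR Interaction Toolkit 2.x namespaces (in 3.x, `XRGrabInteractable` lives in `UnityEngine.XR.Interaction.Toolkit.Interactables`). The class name is hypothetical.

```csharp
using Fusion;
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;

// Sketch only: hands network ownership of an item to whoever grabs it.
[RequireComponent(typeof(XRGrabInteractable))]
[RequireComponent(typeof(NetworkObject))]
public class NetworkedGrabbable : MonoBehaviour
{
    private XRGrabInteractable grab;
    private NetworkObject networkObject;

    private void Awake()
    {
        grab = GetComponent<XRGrabInteractable>();
        networkObject = GetComponent<NetworkObject>();
    }

    private void OnEnable()
    {
        grab.selectEntered.AddListener(OnGrabbed);
    }

    private void OnDisable()
    {
        grab.selectEntered.RemoveListener(OnGrabbed);
    }

    private void OnGrabbed(SelectEnterEventArgs args)
    {
        // Assumes the object has already been spawned by the runner.
        if (!networkObject.HasStateAuthority)
        {
            networkObject.RequestStateAuthority();
        }
    }
}
```

The authority transfer is not instantaneous, so a production version would also need to decide what happens if two players grab the same item at nearly the same time.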

Roadmap for Development

The development roadmap will focus on identifying key entities central to the project and breaking them down into individual features. Each feature will be connected to the tools and components required for implementation. As outlined in the project scope, we will focus on two major entities: VR Player Entity and Interactable Item Entity. These will serve as the core around which supporting classes and scripts, such as UI screens and manager classes, will be developed.

1. VR Player Entity

The VR Player Entity represents the user in the multiplayer VR environment. It will handle avatar representation, movement, interactions, and communication.

Here are the tasks required for the development of the VR Player Entity, with each task focused on implementing a specific feature using the designated tool:

XR Rig Setup:

Feature: Set up an XR Rig using the XR Interaction Toolkit SDK, allowing players to navigate the environment via teleportation using VR controllers.

Tools: XR Interaction Toolkit.

Networked Player Movement:

Feature: Implement real-time networked movement using Photon Fusion 2.0, ensuring the player's movements are synchronized across all connected users.

Tools: Photon Fusion 2.0.

Synchronized Movement of Player's Head and Hands:

Feature: Ensure the VR player's head and hand movements are tracked and synchronized across the network so other players can see them in real time.

Tools: Photon Fusion 2.0, Unity’s XR Interaction Toolkit for head and hand tracking.
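The head and hand synchronization task above can be sketched with networked properties. This is a simplified illustration under stated assumptions: Shared mode (each player has state authority over their own avatar), a hypothetical `BindLocalRig` method that the local player calls with the XR rig's camera and controller transforms, and no interpolation between ticks.

```csharp
using Fusion;
using UnityEngine;

// Sketch only: copies the local XR rig pose into networked state,
// then applies that state to the avatar visuals on every client.
public class NetworkedRigPose : NetworkBehaviour
{
    // Visual transforms on the avatar prefab.
    [SerializeField] private Transform headVisual;
    [SerializeField] private Transform leftHandVisual;
    [SerializeField] private Transform rightHandVisual;

    // Tracked transforms of the local XR rig; set only for the local player.
    private Transform localHead;
    private Transform localLeftHand;
    private Transform localRightHand;

    [Networked] public Vector3 HeadPosition { get; set; }
    [Networked] public Quaternion HeadRotation { get; set; }
    [Networked] public Vector3 LeftHandPosition { get; set; }
    [Networked] public Quaternion LeftHandRotation { get; set; }
    [Networked] public Vector3 RightHandPosition { get; set; }
    [Networked] public Quaternion RightHandRotation { get; set; }

    // Hypothetical hook: call once on the local player's avatar after spawning.
    public void BindLocalRig(Transform head, Transform leftHand, Transform rightHand)
    {
        localHead = head;
        localLeftHand = leftHand;
        localRightHand = rightHand;
    }

    public override void FixedUpdateNetwork()
    {
        // Only the owner writes, and only once the rig has been bound.
        if (!Object.HasStateAuthority || localHead == null) return;

        HeadPosition = localHead.position;
        HeadRotation = localHead.rotation;
        LeftHandPosition = localLeftHand.position;
        LeftHandRotation = localLeftHand.rotation;
        RightHandPosition = localRightHand.position;
        RightHandRotation = localRightHand.rotation;
    }

    public override void Render()
    {
        // Runs every frame on all clients; applies the latest replicated pose.
        headVisual.SetPositionAndRotation(HeadPosition, HeadRotation);
        leftHandVisual.SetPositionAndRotation(LeftHandPosition, LeftHandRotation);
        rightHandVisual.SetPositionAndRotation(RightHandPosition, RightHandRotation);
    }
}
```

Remote avatars may look slightly stepped with this approach, since poses are applied at the tick rate; smoothing them is a natural next refinement.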

Interaction with Objects:

Feature: Allow players to pick up and manipulate interactable objects within the environment using the XR Grab Interactable component from the XR Interaction Toolkit SDK.

Tools: XR Interaction Toolkit, Photon Fusion 2.0 (for network synchronization).

Voice Communication:

Feature: Enable real-time voice communication between players using Photon Voice to create a seamless social experience.

Tools: Photon Voice SDK, Unity Audio system.

2. Interactable Item Entity

The Interactable Item Entity defines objects that players can interact with, for example by picking them up and moving them. These objects will react to the player's actions, providing feedback in the virtual world through sound and colour.

Here are the tasks required for the development of the Interactable Item Entity, with each task focused on implementing a specific feature using the designated tool:

Interactable Objects Configuration:

Feature: Configure interactable items using the XR Interaction Toolkit's XR Grab Interactable component, allowing items to be picked up, thrown, or used by the player.

Tools: XR Interaction Toolkit, Photon Fusion 2.0 (for multiplayer synchronization).

Attachment Points:

Feature: Create predefined attachment points on interactable objects. These points will define the exact position and orientation of objects when grabbed by the player, ensuring consistent handling and placement.

Tools: XR Interaction Toolkit.
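Attachment points are usually assigned in the Inspector, but the idea can also be shown in code. The sketch below creates a child transform and assigns it to the grab interactable's `attachTransform`; the class name and offset values are placeholders, and the `using` line assumes XR Interaction Toolkit 2.x (in 3.x, `XRGrabInteractable` lives in `UnityEngine.XR.Interaction.Toolkit.Interactables`).

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;

// Sketch only: defines where and how the object sits in the hand when grabbed.
[RequireComponent(typeof(XRGrabInteractable))]
public class GripPoint : MonoBehaviour
{
    // Placeholder offsets in the object's local space.
    [SerializeField] private Vector3 localGripPosition = new Vector3(0f, -0.05f, 0f);
    [SerializeField] private Vector3 localGripEuler = Vector3.zero;

    private void Awake()
    {
        XRGrabInteractable grab = GetComponent<XRGrabInteractable>();

        // Create the attach point as a child so it moves with the object.
        Transform attach = new GameObject("AttachPoint").transform;
        attach.SetParent(transform, false);
        attach.localPosition = localGripPosition;
        attach.localRotation = Quaternion.Euler(localGripEuler);

        grab.attachTransform = attach;
    }
}
```

For a tool such as a hammer, the attach point would sit on the handle so the head of the tool always points away from the hand.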

3. Supporting Components

While the VR Player Entity and Interactable Item Entity are central to the development, the project will also include:

- UI Screens for interaction and player settings (using Unity’s UI Toolkit).

- Manager Classes to handle game sessions, player management, and voice communication setup.

The complete implementation of this roadmap is covered in the book Unity for Multiplayer VR Development, which is available at Amazon and Amazon India. Get your copy for a clear explanation of each component required to build a multiplayer VR experience.
