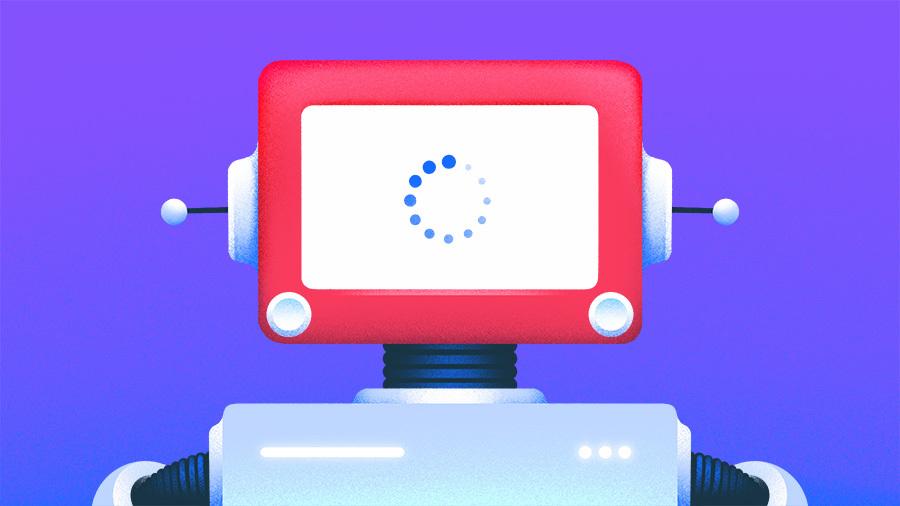

Models can make or mar your agents


Building and using AI products has become mainstream: coding, writing, reading, shopping, practically every sphere of our daily lives. By the minute, developers are taking more interest in artificial intelligence and going further into AI agents. AI agents are autonomous: they work with tools, models, and prompts to achieve a given task with minimal intervention from the human in the loop.

Given this autonomy, I am a firm believer in training an AI on your own data, making it specialized for your business and/or use case. I am also a firm believer that AI agents work better in a vertical than as horizontal workers, because you can put in the needed guardrails and prompts with little to no deviation.

The current models do well in their respective fields, have their benchmarks, and are good for prototyping and building proofs of concept. The issue comes when the prompt becomes complex and the model has to call tools and functions; this is where you will see the limitations of AI.

I will give an example that happened recently. I created a framework for building AI agents named Karo. Since it's still in its infancy, I have been creating examples that reflect real-world use cases. When I first built it 2 weeks ago, GPT-4o and GPT-4o-mini were working perfectly when it came to prompts, tool calls, and getting the task done. Earlier this week, I worked on a more complex example that had database sessions embedded in it, and boy was the agent a mess! GPT-4o and GPT-4o-mini were absolutely nerfed. They weren't following instructions and deviated a lot from what they were supposed to do. I kept steering them back to the task, and it was awful. I switched to Anthropic, and it followed the first 5 steps and then deviated. I switched to Gemini: GEMINI_JSON worked a little and deviated, and GEMINI_TOOLS worked a little and also deviated.

I was on the verge of giving up when I decided to ask ChatGPT which models did well with complex prompts. I had already asked my network; they recommended GPT-4o and 4o-mini and were surprised to hear they were nerfed. To those who recommended Gemini, I had to explain that it worked only halfway and died. I'm a Claude user and was disappointed when the model didn't work well. I went with ChatGPT's recommendation, which was Turbo, and it worked as it should: prompts, tool calls, staying on task.
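The manual model switching described above can be sketched in a few lines. This is only an illustration, not Karo's API: `first_model_on_task`, the stub models, and the `looks_like_tool_call` check are all hypothetical names, and a real setup would wrap actual provider calls and use a stricter check of whether the agent stayed on task.

```python
import json
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical type: a "model" is any callable that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def first_model_on_task(
    prompt: str,
    models: Sequence[Tuple[str, ModelFn]],
    is_on_task: Callable[[str], bool],
) -> Optional[Tuple[str, str]]:
    """Try each model in order; return (name, output) for the first one
    whose output passes the check, or None if every model deviates."""
    for name, call in models:
        try:
            output = call(prompt)
        except Exception:
            # Treat a provider error like a deviation and try the next model.
            continue
        if is_on_task(output):
            return name, output
    return None


# Stub models standing in for real provider calls (assumptions, not real APIs).
def deviating_model(prompt: str) -> str:
    return "Sure! Here is a poem about databases."


def failing_model(prompt: str) -> str:
    raise RuntimeError("provider unavailable")


def compliant_model(prompt: str) -> str:
    return '{"tool": "create_session", "args": {"user_id": 1}}'


def looks_like_tool_call(output: str) -> bool:
    # Naive check: this step is expected to produce a JSON object naming a tool.
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and "tool" in parsed


result = first_model_on_task(
    "Open a database session for user 1",
    [
        ("model-a", deviating_model),
        ("model-b", failing_model),
        ("model-c", compliant_model),
    ],
    looks_like_tool_call,
)
```

A chain like this only softens the problem: it still depends on external models behaving, which is the concern raised in the rest of this post.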

I found out later on Twitter that GPT-4o was having some issues and was pulled, which brings me back to my case for agents working with specialized models. I was only building an example when I hit this issue; what if it had been an app in production? I would have lost thousands in both income and users by relying on external models under the hood. There may be better models that handle complex prompts well, and I didn't try them all, but that doesn't negate the point: agents in a niche, vertical, or task need specialized models to work well.

Which raises the question: how can this be achieved without the fluff, while taking these businesses' concerns into consideration?
