Model Context Protocol (MCP) 101: A Hands-On Beginner's Guide!

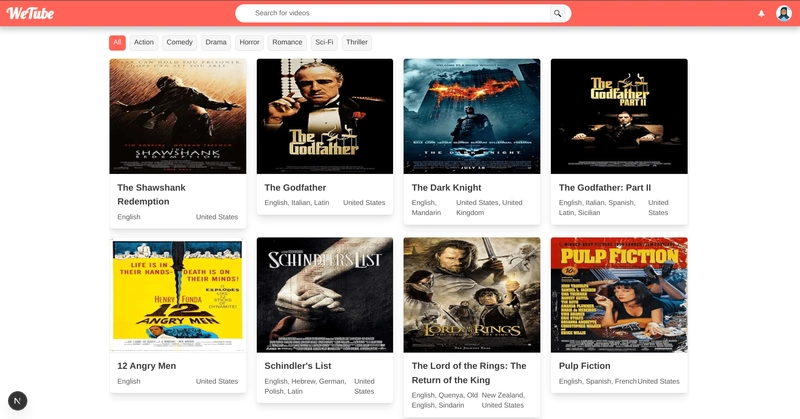

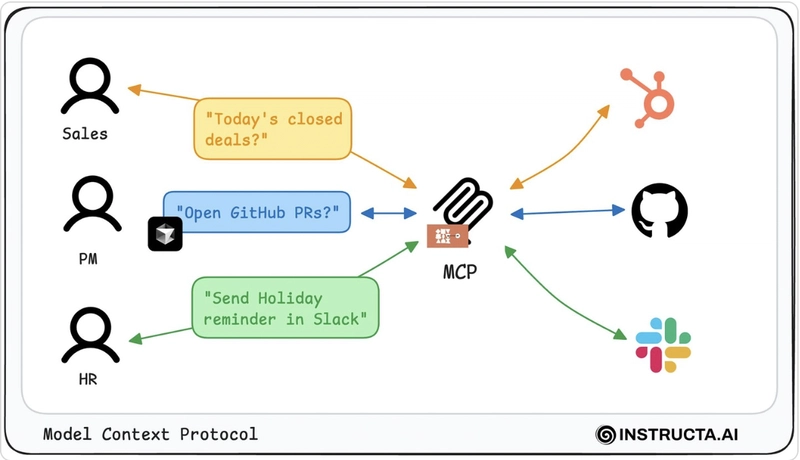

The Model Context Protocol (MCP) is creating a buzz in the AI community, and for good reason. This open-source protocol, introduced by Anthropic, defines a standard way for AI applications to connect models to external data sources and tools. By simplifying that integration work, MCP could change how developers build AI applications, making it easier to give them access to real-time data and external functionality.

What is Model Context Protocol (MCP)?

MCP stands for Model Context Protocol, and it’s essentially a universal connector for AI applications. Think of it as the USB-C of the AI world, allowing different AI tools and models to interact seamlessly with various data sources. With MCP, developers can focus on building innovative applications instead of spending time on complex integrations.

Why Do We Need MCP?

Large Language Models (LLMs) like Claude, ChatGPT, and others have transformed our interactions with technology. However, they still have limitations, particularly when it comes to accessing real-world data and connecting with tools.

Here are some challenges that MCP addresses:

- Knowledge Limitations: LLMs rely on training data that can quickly become outdated, which makes it difficult for them to provide accurate, real-time information.

- Domain Knowledge Gaps: LLMs often lack deep knowledge of specialized domains, making it hard for them to generate contextually relevant responses.

- Non-Standardized Integration: Current methods for connecting LLMs to external data sources often require custom, one-off solutions, leading to high costs and inefficiencies.

MCP provides a unified solution to these issues, allowing LLMs to easily access external data and tools, thereby enhancing their capabilities.

How MCP Works

MCP was originally built to improve Claude’s ability to interact with external systems. Anthropic open-sourced it in November 2024 to encourage industry-wide adoption.

Retrieval-Augmented Generation (RAG) supplies an LLM with the custom data it needs to generate contextually relevant responses to user queries. MCP goes beyond that: it gives models direct access to tools as well as custom data, through a unified API.

In short, MCP standardizes communication between AI models and external data and tools, so AI systems can interact with diverse sources in a consistent way.
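Under the hood, MCP messages are JSON-RPC 2.0 objects. The minimal sketch below builds a `tools/call` request and a matching result by hand, then frames each as a single line of JSON, which is how the stdio transport separates messages. The tool name `get_forecast`, its arguments, and the result text are illustrative assumptions, not taken from any particular server.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


def make_result(request_id: int, result: dict) -> dict:
    """Build a JSON-RPC 2.0 success response answering request_id."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def frame(message: dict) -> str:
    """Serialize one message as a single newline-terminated line.

    json.dumps escapes newlines inside strings, so the output has no
    embedded line breaks and the trailing newline acts as the delimiter.
    """
    return json.dumps(message, separators=(",", ":")) + "\n"


# The client asks the server to run a tool (hypothetical name and arguments).
request = make_request(1, "tools/call", {
    "name": "get_forecast",
    "arguments": {"latitude": 38.58, "longitude": -121.49},
})

# The server replies with the same id and a list of content items.
response = make_result(1, {
    "content": [{"type": "text", "text": "placeholder forecast text"}],
    "isError": False,
})

# What would travel over stdin/stdout: one message per line.
wire = frame(request) + frame(response)
for line in wire.splitlines():
    msg = json.loads(line)
    print(msg["id"], msg.get("method", "result"))
```

Matching responses to requests by `id` is what lets a client keep several requests in flight over one connection.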

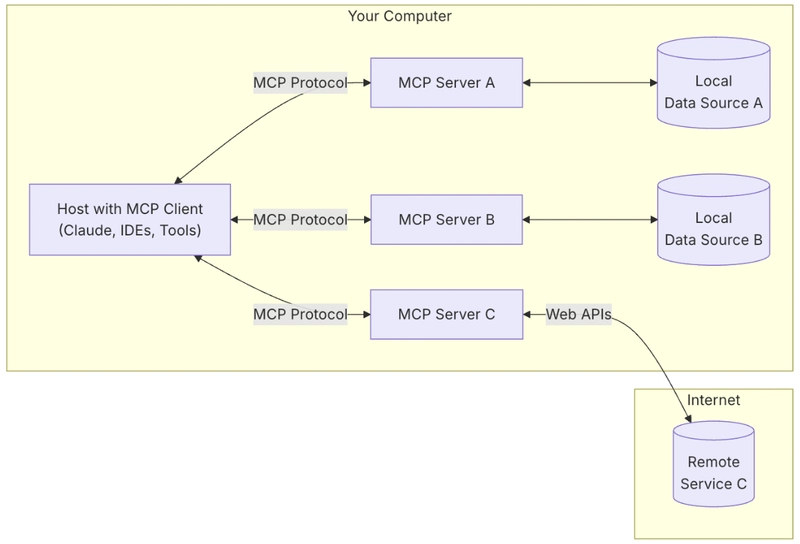

MCP operates on a client-server architecture, comprising several key components:

- MCP Hosts: Applications that need contextual AI capabilities, such as chatbots or IDEs.

- MCP Clients: These maintain a one-to-one connection with MCP servers and handle protocol specifics.

- MCP Servers: Lightweight programs that expose specific capabilities through the MCP interface, connecting to local or remote data sources.

- Local Data Sources: Files and databases that MCP servers can securely access.

- Remote Services: External services available over the Internet that MCP servers can connect to.
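To make the client-server split concrete, here is a toy, in-process sketch using only the standard library: a server object that registers tools and answers `initialize`, `tools/list`, and `tools/call`, and a client that holds a one-to-one connection to that single server. Everything here is hypothetical (`ToyServer`, `ToyClient`, and the `add` tool are invented for illustration); a real server would use an MCP SDK and a real transport such as stdio.

```python
from __future__ import annotations

from typing import Callable


class ToyServer:
    """Exposes tools and answers a small subset of MCP-style JSON-RPC methods."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, tuple[dict, Callable[..., str]]] = {}

    def tool(self, name: str, description: str, input_schema: dict):
        """Decorator that registers a function as a callable tool."""
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            spec = {"name": name, "description": description, "inputSchema": input_schema}
            self.tools[name] = (spec, fn)
            return fn
        return register

    def handle(self, message: dict) -> dict:
        """Dispatch one JSON-RPC request and return the response object."""
        method, params = message["method"], message.get("params", {})
        if method == "initialize":
            result = {"protocolVersion": "2024-11-05",
                      "capabilities": {"tools": {}},
                      "serverInfo": {"name": self.name, "version": "0.1"}}
        elif method == "tools/list":
            result = {"tools": [spec for spec, _ in self.tools.values()]}
        elif method == "tools/call":
            _, fn = self.tools[params["name"]]  # unknown names raise KeyError in this toy
            text = fn(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        else:
            return {"jsonrpc": "2.0", "id": message["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": message["id"], "result": result}


class ToyClient:
    """Holds a 1:1 connection to a single server and numbers its requests."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server
        self.next_id = 0

    def request(self, method: str, params: dict | None = None) -> dict:
        self.next_id += 1
        msg = {"jsonrpc": "2.0", "id": self.next_id, "method": method, "params": params or {}}
        return self.server.handle(msg)


server = ToyServer("toy-math")


@server.tool("add", "Add two integers", {
    "type": "object",
    "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
    "required": ["a", "b"],
})
def add(a: int, b: int) -> str:
    return str(a + b)


# A host would create one client per server it connects to.
client = ToyClient(server)
# Handshake first; a real client would then send a notifications/initialized notification.
client.request("initialize", {"protocolVersion": "2024-11-05", "capabilities": {},
                              "clientInfo": {"name": "toy-host", "version": "0.1"}})
print([t["name"] for t in client.request("tools/list")["result"]["tools"]])
print(client.request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}))
```

Keeping one client per server means each connection tracks its own request ids and negotiated capabilities independently.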

Here's an analogy to help understand MCP. Imagine it as a restaurant, where:

- The Host = The restaurant building (the environment where the agent runs)

- The Server = The kitchen (where tools live)

- The Client = The waiter (who carries tool requests to the kitchen)

- The Agent = The customer (who decides which tool to use)

- The Tools = The recipes (the code that gets executed)

Benefits of Implementing MCP

The advantages of adopting MCP are numerous:

- Standardization: MCP provides a common interface for integrating various tools and data sources, reducing development time and complexity.

- Enhanced Performance: Direct access to up-to-date data sources can make AI responses more accurate and relevant than answers based on training data alone.

- Flexibility: Developers can easily switch between different LLMs without having to rewrite code for each integration.

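- Security: MCP servers control which data and actions they expose, and the specification includes an OAuth-based authorization framework for remote servers. How secure an exchange is still depends on how each server implements authentication and access control.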

Getting Started with MCP

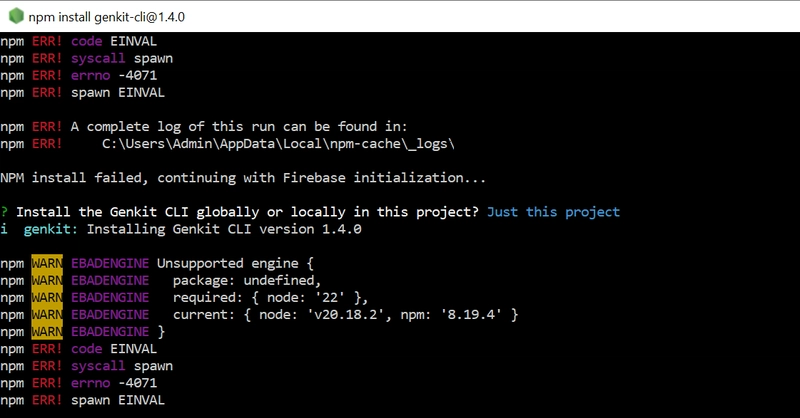

If you’re interested in implementing MCP, here’s a quick step-by-step guide to get you started. We’ll walk through a simple tutorial in which we create an MCP server that fetches weather data, using Claude Desktop as the host.

Prerequisites

Make sure Claude Desktop is installed on your system. Download the version for your operating system (macOS or Windows).
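Claude Desktop acts as the MCP host in this tutorial. It finds local servers through its `claude_desktop_config.json` file, which lists each server under an `mcpServers` key along with the command that launches it. The minimal sketch below builds such an entry with Python's `json` module; the server name `weather`, the `python` command, and the script path are placeholders you would replace with your own, and the file's location depends on your OS (see the MCP documentation).

```python
from __future__ import annotations

import json


def server_entry(command: str, args: list[str], env: dict[str, str] | None = None) -> dict:
    """One entry under "mcpServers": how Claude Desktop should launch a server."""
    entry: dict = {"command": command, "args": args}
    if env:
        entry["env"] = env  # optional environment variables passed to the server process
    return entry


config = {
    "mcpServers": {
        # Hypothetical name and path: point args at your own server script.
        "weather": server_entry("python", ["/ABSOLUTE/PATH/TO/weather.py"]),
    }
}

print(json.dumps(config, indent=2))
```

After saving the printed JSON into the config file, restart Claude Desktop so it picks up the new server.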

Building the MCP Server

- Navigate to the MCP documentation (modelcontextprotocol.io) for guidance.

- Set up your server to expose two weather tools: “Get Alerts” and “Get Forecast”.
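To show what “exposing two tools” means at the protocol level, the sketch below defines the two weather tools in the shape a server returns from `tools/list` (a name, a description, and a JSON Schema `inputSchema`), checks incoming arguments against those schemas, and runs placeholder handlers. The identifiers `get_alerts` and `get_forecast`, their parameters, and the validation helper are assumptions for illustration; an MCP SDK can generate these schemas from type hints, and a real server would call a weather API where the placeholder sits. Reporting bad arguments as a result with `isError: true`, so the model can see the problem and retry, is also a design choice of this sketch.

```python
from __future__ import annotations

# Tool definitions in the shape a server returns from "tools/list"
# (the response would be {"tools": list(WEATHER_TOOLS.values())}).
# Names, descriptions, and parameters are illustrative assumptions.
WEATHER_TOOLS = {
    "get_alerts": {
        "name": "get_alerts",
        "description": "Get active weather alerts for a US state",
        "inputSchema": {
            "type": "object",
            "properties": {"state": {"type": "string"}},
            "required": ["state"],
        },
    },
    "get_forecast": {
        "name": "get_forecast",
        "description": "Get the weather forecast for a location",
        "inputSchema": {
            "type": "object",
            "properties": {"latitude": {"type": "number"}, "longitude": {"type": "number"}},
            "required": ["latitude", "longitude"],
        },
    },
}

_JSON_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def _matches(value: object, json_type: str) -> bool:
    """Minimal JSON Schema type check for primitive types."""
    if isinstance(value, bool):  # bool is a subclass of int in Python
        return json_type == "boolean"
    return isinstance(value, _JSON_TYPES[json_type])


def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the problems found; checks required keys and primitive types only."""
    schema = tool["inputSchema"]
    problems = [f"missing '{k}'" for k in schema.get("required", []) if k not in arguments]
    for key, value in arguments.items():
        prop = schema["properties"].get(key)
        if prop is None:
            problems.append(f"unexpected '{key}'")
        elif not _matches(value, prop["type"]):
            problems.append(f"'{key}' should be a {prop['type']}")
    return problems


def _text_result(text: str, is_error: bool = False) -> dict:
    """Wrap text in the content list that a tools/call result carries."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def call_tool(name: str, arguments: dict) -> dict:
    """Validate a tools/call request, then run a placeholder handler."""
    tool = WEATHER_TOOLS[name]  # unknown names raise KeyError; a real server replies with a JSON-RPC error
    problems = check_arguments(tool, arguments)
    if problems:
        return _text_result("; ".join(problems), is_error=True)
    # Placeholder: a real server would query a weather API here.
    return _text_result(f"{name} called with {arguments}")


print(call_tool("get_forecast", {"latitude": 38.58, "longitude": -121.49}))
print(call_tool("get_alerts", {}))
```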

Below is my complete video walkthrough, which you can follow along with.

MCP is poised to become the standard for AI integration, addressing the challenges of knowledge limitations, domain knowledge gaps, and non-standardized integrations. By adopting MCP, developers can create more efficient, scalable, and secure AI applications.

The future looks bright for AI, and with MCP laying the groundwork for standardized connections, we are taking significant steps toward a more connected and capable ecosystem. We're already seeing exciting developments built on these principles.

Automate Your Database Operations through MCP

And now, you can easily manage and automate most of your database actions from one place. At SingleStore, we just published our MCP server.

SingleStore, a real-time data platform, helps build AI applications and systems by providing a unified database for both transactional and analytical workloads, including fast vector and full-text search, and supporting SQL and Python within its notebooks. You can get started with SingleStore by creating a free account.

Here is the GitHub repo you can follow.

singlestore-labs/mcp-server-singlestore

MCP server for interacting with SingleStore Management API and services

SingleStore MCP Server

Model Context Protocol (MCP) is a standardized protocol designed to manage context between large language models (LLMs) and external systems. This repository provides an installer and an MCP Server for SingleStore, enabling seamless integration.

With MCP, you can use Claude Desktop or any compatible MCP client to interact with SingleStore using natural language, making it easier to perform complex operations effortlessly.

Quickstart

Installing via Smithery

To install mcp-server-singlestore for Claude Desktop automatically via Smithery:

```shell
npx -y @smithery/cli install @singlestore-labs/mcp-server-singlestore --client claude
```

Clone the Repository

To clone the repository and set up the server locally:

```shell
git clone https://github.com/singlestore-labs/mcp-server-singlestore.git
cd mcp-server-singlestore

# Install dependencies
pip install -e .
```

Install via pip

Alternatively, you can install the package using pip:

```shell
pip install singlestore-mcp-server
```

Use the `singlestore-mcp-client` command to run the server with MCP clients or the MCP Inspector.

Local Installation Configuration

When running the MCP server locally with Claude Desktop…
