LimeLight: An Autonomous Assistant for Enterprise Community Platforms Using RAG, LangChain, and LLaMA 3

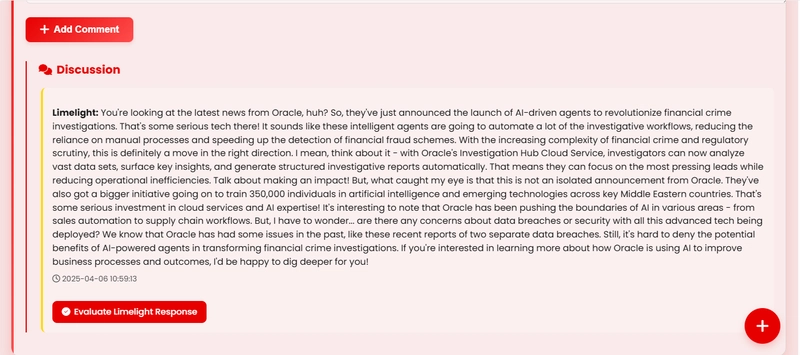


In today's online communities, users frequently seek clarity on enterprise technologies, frameworks, and tools. However, many relevant posts go unanswered or receive inconsistent feedback. LimeLight was developed to address this gap. It is an intelligent assistant designed to autonomously detect relevant discussions, retrieve contextual data, and generate high-quality, sentiment-aware responses in niche communities on platforms like Reddit and Slack.

This project demonstrates how Retrieval-Augmented Generation (RAG), modern language models, and sentiment analysis can be combined to enrich community interactions in a scalable and meaningful way.

Project Overview

LimeLight is a modular, AI-driven system integrated into a community platform. It automatically identifies posts related to enterprise technologies, retrieves relevant information from a vector database, and responds with well-formed, context-sensitive comments. It also considers the sentiment of user posts to adjust tone and engagement accordingly.

Key Features

1. Intelligent Content Detection

LimeLight identifies relevant content using a hybrid method of:

- Keyword filtering (e.g., references to enterprise platforms, services, or tools)

- Semantic similarity via sentence embeddings

This ensures the assistant only engages with content aligned to its knowledge domain.
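The hybrid check described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the keyword list, the reference text, the threshold, and the rule that either signal is enough are all assumptions, and the bag-of-words `embed()` is only a stand-in for a real sentence-embedding model.

```python
import math
import re
from collections import Counter

# Hypothetical values; the article does not list the real keywords or threshold.
KEYWORDS = {"kubernetes", "salesforce", "sap", "fastapi"}
REFERENCE_TEXT = "enterprise platform deployment api integration service"
SIMILARITY_THRESHOLD = 0.3


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy bag-of-words vector; a stand-in for a sentence-embedding model."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def is_relevant(post):
    """Return True if the post passes the keyword filter or the similarity check."""
    # Keyword filter: a cheap first pass over the tokens.
    keyword_hit = any(tok in KEYWORDS for tok in tokenize(post))
    # Semantic pass: compare the post to a short description of the domain.
    similar = cosine(embed(post), embed(REFERENCE_TEXT)) >= SIMILARITY_THRESHOLD
    # Assumption: either signal is sufficient to engage.
    return keyword_hit or similar
```

Swapping `embed()` for a real embedding model would leave `is_relevant()` unchanged, since it only depends on the cosine score.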

2. RAG-Based Contextual Responses

When a relevant post is detected:

- Associated data is retrieved from a ChromaDB vector store

- A LangChain RAG pipeline feeds this context into LLaMA 3, running locally via Ollama

- A response is generated and inserted into the conversation thread
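The retrieve-then-generate flow above can be sketched without committing to any particular library API. In this sketch the retriever and the model are passed in as plain callables, so a ChromaDB query and an Ollama-served LLaMA 3 call could be plugged in later. The prompt wording, the `k` parameter, and the signature of `generate_response()` are assumptions for illustration; only the function name comes from the project.

```python
def build_prompt(question, passages):
    """Join the retrieved passages and the user's post into a single prompt."""
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the community post using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Post:\n{question}\n\n"
        "Answer:"
    )


def generate_response(question, retrieve, llm, k=3):
    """Retrieve up to k passages, build the prompt, and ask the model.

    retrieve(question, k) -> list[str] and llm(prompt) -> str are injected,
    so this function makes no assumption about the vector store or model client.
    """
    passages = retrieve(question, k)
    return llm(build_prompt(question, passages))
```

Keeping the retriever and the model behind simple callables also makes the pipeline easy to test with stubs before a vector store or a local model is available.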

3. Sentiment-Aware Interaction

LimeLight evaluates the sentiment of the original post (positive, neutral, or negative) and adjusts its tone accordingly. For example:

- A negative sentiment triggers more empathetic or supportive language

- Neutral or factual posts receive concise and informative replies
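One simple way to picture the tone adjustment is a mapping from sentiment label to an instruction that is added to the model prompt. The sketch below is illustrative only: the word lists are tiny placeholders for a real sentiment classifier, and the instruction for positive posts is an assumption, since the text above only describes the negative and neutral cases.

```python
import re

# Tiny illustrative lexicons; a real sentiment model would replace these.
NEGATIVE = {"broken", "error", "fails", "frustrated", "stuck", "worse"}
POSITIVE = {"great", "love", "thanks", "works", "awesome"}

TONE_INSTRUCTIONS = {
    "negative": "Acknowledge the difficulty, then give supportive, step-by-step help.",
    "neutral": "Reply concisely and stick to the facts.",
    # Assumption: the article does not say how positive posts are handled.
    "positive": "Keep an upbeat tone and build on what already works.",
}


def classify_sentiment(text):
    """Label text as positive, neutral, or negative by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score < 0:
        return "negative"
    if score > 0:
        return "positive"
    return "neutral"


def tone_instruction(text):
    """Pick the prompt instruction that matches the post's sentiment."""
    return TONE_INSTRUCTIONS[classify_sentiment(text)]
```

The returned instruction would typically be prepended to the generation prompt, so the same retrieved context can yield a differently worded reply.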

4. Automated Post Engagement

On post creation, the system performs the following:

- Checks if the content is within scope

- If relevant, triggers `generate_response()` in `bot.py`

- Inserts a comment into the database, visible in the discussion thread

- Tags the response as authored by "LimeLight" with a timestamp
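The steps above can be sketched as a single handler. This is a hedged outline rather than the project's code: the `handle_new_post` name, the `_id` and `content` field names, and the comment document layout are hypothetical, and a plain list stands in for the MongoDB collection that the real system would write to.

```python
from datetime import datetime, timezone


def handle_new_post(post, is_relevant, generate_response, comments):
    """Run the on-creation steps and return the stored comment, or None.

    post: dict with hypothetical "_id" and "content" keys.
    is_relevant, generate_response: callables taking the post text.
    comments: any list-like store; a stand-in for the database collection.
    """
    if not is_relevant(post["content"]):
        return None  # Out of scope: the assistant stays silent.
    comment = {
        "post_id": post["_id"],
        "author": "LimeLight",  # Tag the reply as authored by the assistant.
        "content": generate_response(post["content"]),
        "created_at": datetime.now(timezone.utc),  # Timezone-aware timestamp.
    }
    comments.append(comment)
    return comment
```

In an async FastAPI route, the `append` call would be replaced by an awaited insert into the comments collection.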

Technology Stack

| Component | Technology |
|---|---|
| Backend | FastAPI |
| Frontend | HTML, CSS, Jinja2 |
| Database | MongoDB (accessed via Motor) |
| LLM | LLaMA 3 (served via Ollama) |
| RAG Pipeline | LangChain + HuggingFace |
| Vector Store | ChromaDB |
| Embeddings | Sentence Transformers |
| ML Extension | Sentiment Analysis (Planned) |

Installation & Usage

Clone the repository:

    git clone https://github.com/YourUsername/limelight-assistant.git
    cd limelight-assistant

Set up the virtual environment:

    python -m venv venv

Activate it on macOS/Linux:

    source venv/bin/activate

Or on Windows:

    venv\Scripts\activate

Install dependencies:

    pip install -r requirements.txt

Start the application:

    uvicorn main:app --reload

Ensure LLaMA 3 is active using Ollama:

    ollama run llama3

Visit the platform at http://localhost:8000

System Architecture

    User Post
    │
    ├──> Relevance Detection (keywords + embeddings)
    │
    ├──> ChromaDB Vector Retrieval
    │
    ├──> LangChain RAG Pipeline
    │
    ├──> LLaMA 3 Generates Response
    │
    ├──> Sentiment Analysis
    │
    └──> Auto-Comment as "LimeLight"

The architecture is modular, with separation of concerns across:

- `main.py`: routing and server logic

- `response.py`: relevance and sentiment processing

- `bot.py`: RAG pipeline and language generation

Future Enhancements

- Replace keyword detection with an LLM-based classifier for improved topic relevance

- Integrate advanced sentiment classification using transformer-based models

- Expand to support other domains beyond enterprise tech

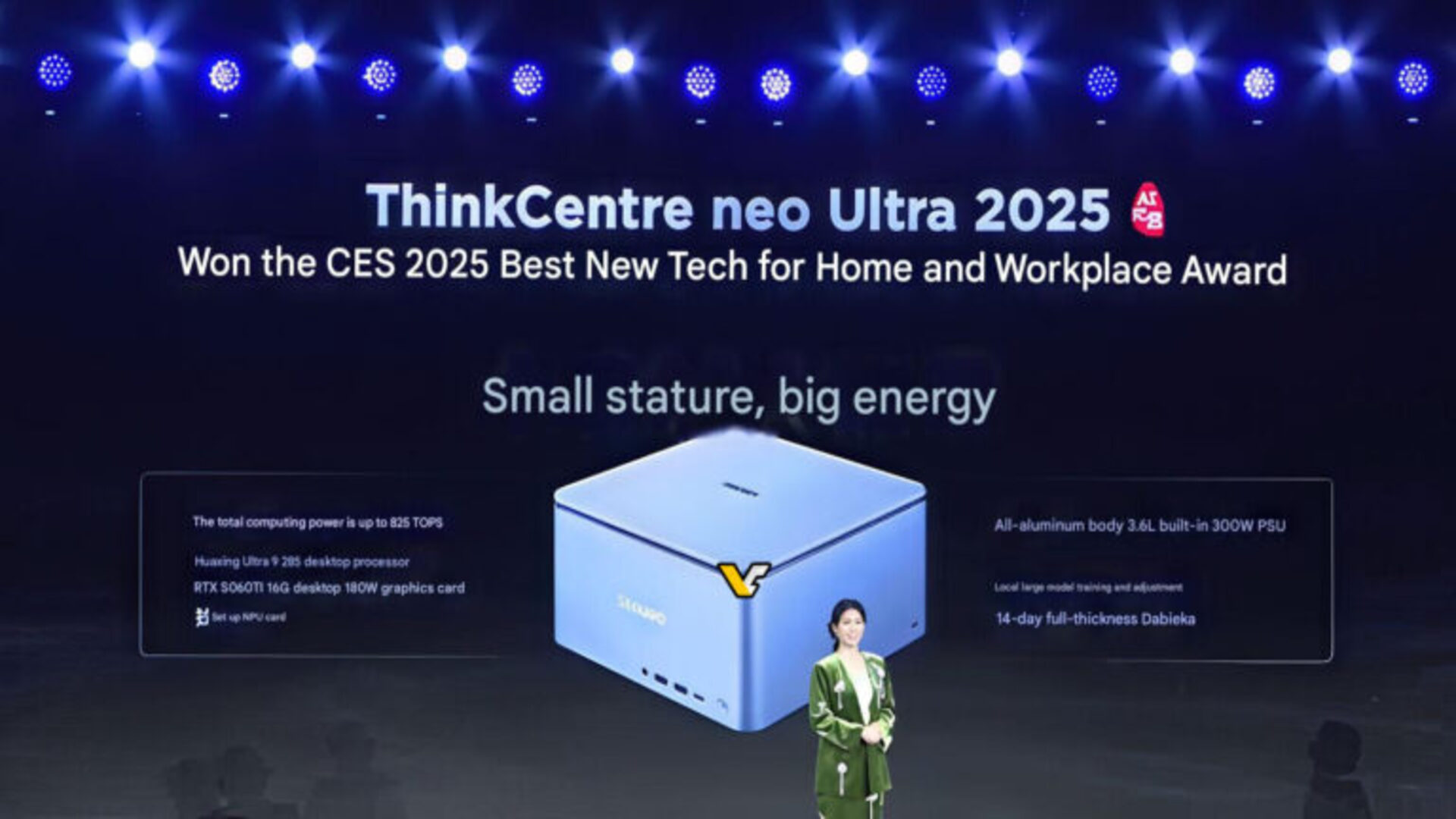

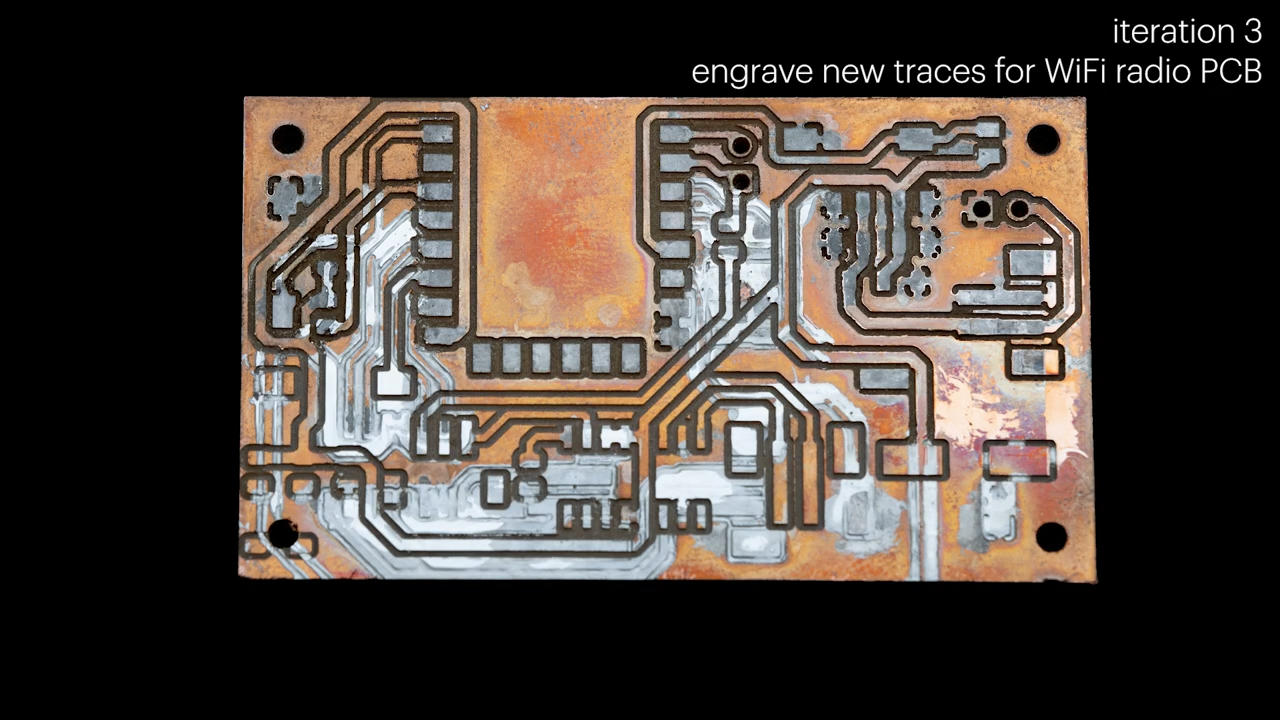

Sneakpeak

Contact

For queries, feedback, or collaboration opportunities, contact:

Email: v.ukrishnan8@gmail.com
