Govern AI APIs with Azure API Management GenAI policies


Language models, large and small, are typically accessed through an API. As LLM adoption grows, so does the number of LLM APIs, which creates a need to manage and govern them.

A centralized AI governance team in an enterprise may face several challenges, such as:

- Accurately tracking tokens, the main currency of AI communication

- Securing LLM API keys

- Improving response times for chat completion requests

Azure API Management provides a set of AI policies that can be used to build an AI gateway and govern AI APIs.

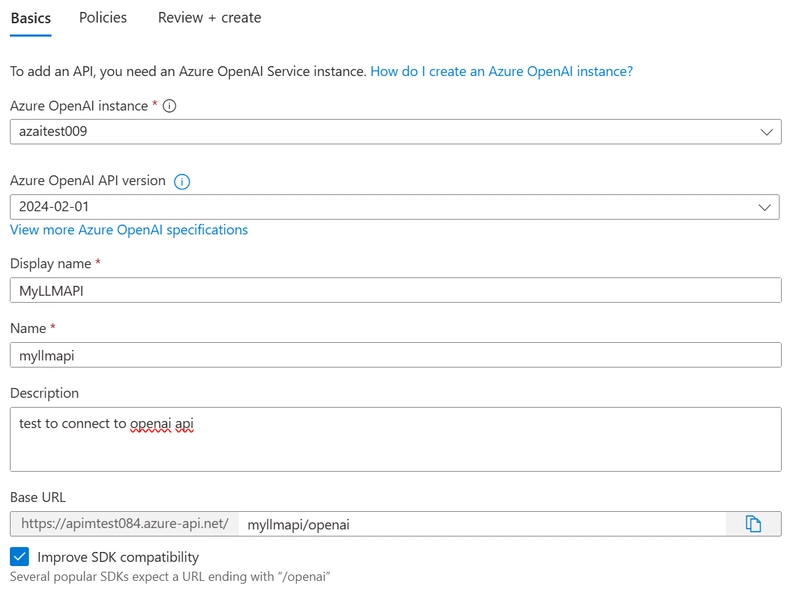

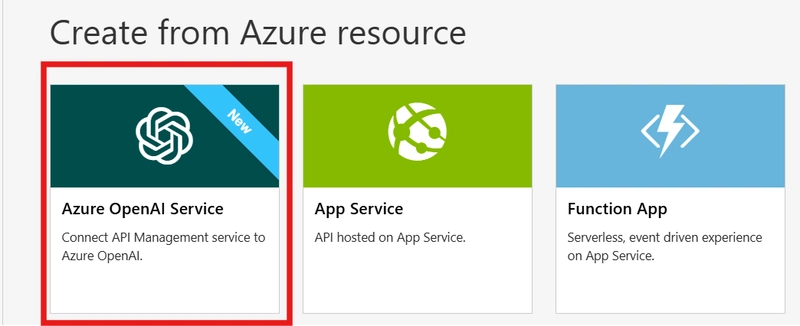

You can natively connect an Azure OpenAI instance to an Azure API Management instance in the same Azure subscription.

AI Gateway Policies

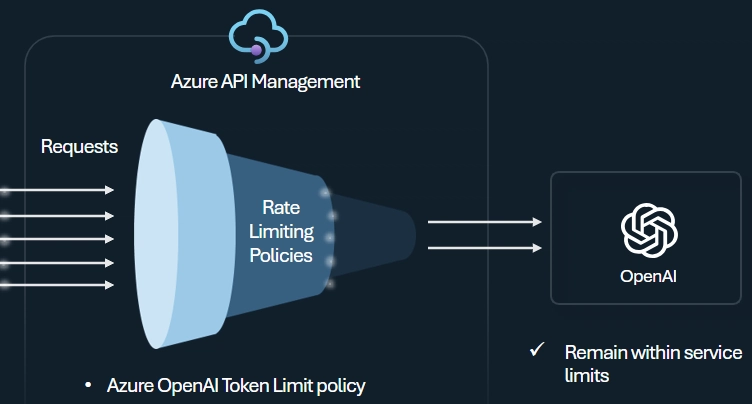

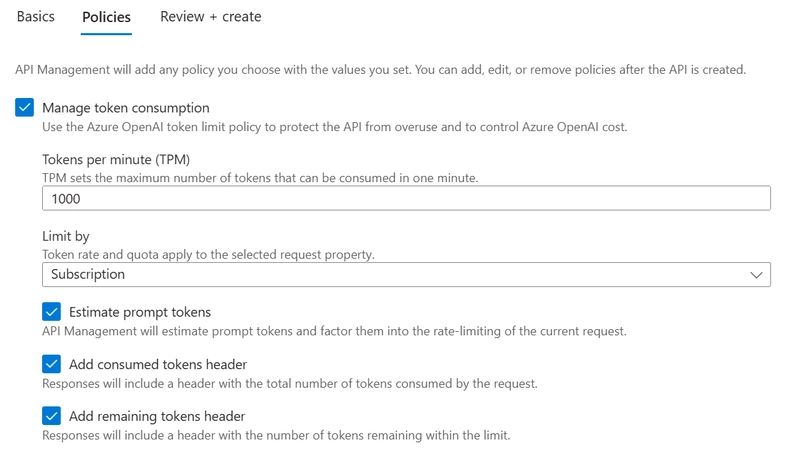

Token Limit Policy

With the token limit policy (azure-openai-token-limit), you can cap token usage per minute. You can also set a token quota over a longer period: hourly, daily, weekly, monthly, or yearly.
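As a rough sketch of how such a limit might be configured, the inbound fragment below applies both a per-minute limit and a daily quota. The counter key (one counter per API Management subscription) and all numeric values are illustrative assumptions, not recommendations from this article.

```xml
<inbound>
    <base />
    <!-- Assumption: usage is counted per APIM subscription; the numbers are placeholders -->
    <azure-openai-token-limit
        counter-key="@(context.Subscription.Id)"
        tokens-per-minute="5000"
        token-quota="100000"
        token-quota-period="Daily"
        estimate-prompt-tokens="false"
        remaining-tokens-variable-name="remainingTokens" />
</inbound>
```

Keying the counter on a different expression, such as the caller's IP address, changes who shares the limit.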

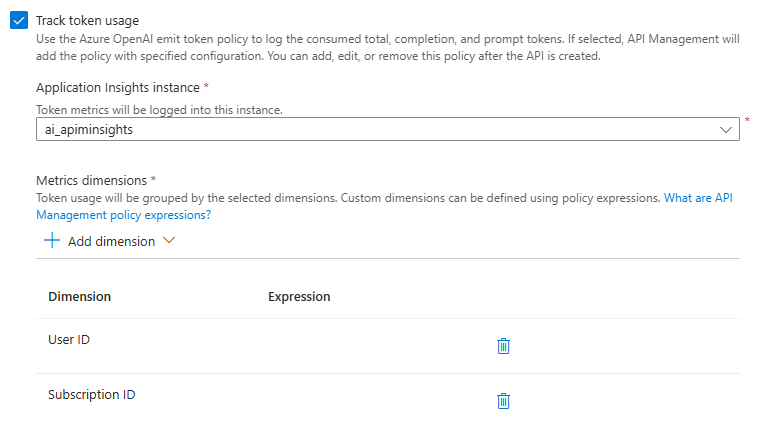

Azure OpenAI Token Metric policy

Using the emit token metric policy, you can log token usage to track the total, prompt, and completion tokens consumed. Token usage can be grouped by dimensions such as User ID, Subscription ID, Location, Product ID, Client IP address, and more.
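A minimal sketch of such a configuration follows. The namespace value and the choice of dimensions are assumptions made for illustration; the dimensions without a value attribute rely on the policy's built-in defaults.

```xml
<inbound>
    <base />
    <!-- Assumption: "AzureOpenAI" is an arbitrary metric namespace chosen for this example -->
    <azure-openai-emit-token-metric namespace="AzureOpenAI">
        <dimension name="User ID" />
        <dimension name="Subscription ID" />
        <!-- A custom dimension computed from a policy expression -->
        <dimension name="Client IP" value="@(context.Request.IpAddress)" />
    </azure-openai-emit-token-metric>
</inbound>
```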

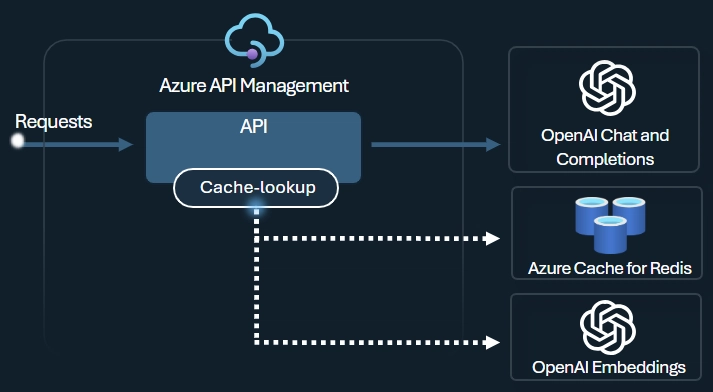

Semantic Caching policy

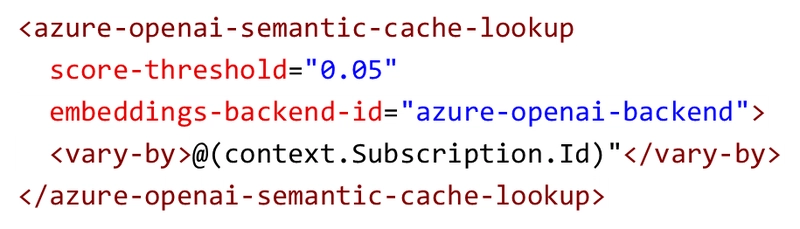

You can improve the performance and latency of LLM requests by caching completions and returning a cached response when a new prompt is similar enough to an earlier one, based on a similarity score you set. Semantic caching can be enabled with the RediSearch module in Azure Managed Redis, or with another compatible external cache connected to API Management.

Use the azure-openai-semantic-cache-lookup policy to retrieve responses from the cache for Azure OpenAI chat completion requests. By adjusting the similarity score threshold, you can control how closely prompts need to match for cached completion responses to be reused. The policy's vary-by element takes an expression used to partition the cache (shown in the policy template as "expression to partition caching").

This approach uses an Azure OpenAI embeddings model to generate vectors for comparing prompts, so that similar prompts can reuse cached completion responses. The result is lower token consumption and faster responses.
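The sketch below shows one way the lookup policy can be paired with the corresponding store policy. The backend ID `embeddings-backend`, the threshold of 0.05, the per-subscription partitioning, and the 60-second cache duration are all hypothetical values chosen for illustration.

```xml
<policies>
    <inbound>
        <base />
        <!-- Assumption: "embeddings-backend" is an APIM backend pointing at an embeddings deployment -->
        <azure-openai-semantic-cache-lookup
            score-threshold="0.05"
            embeddings-backend-id="embeddings-backend"
            embeddings-backend-auth="system-assigned">
            <!-- Partition the cache so subscriptions do not share cached completions -->
            <vary-by>@(context.Subscription.Id)</vary-by>
        </azure-openai-semantic-cache-lookup>
    </inbound>
    <outbound>
        <!-- Store the completion for 60 seconds so later similar prompts can reuse it -->
        <azure-openai-semantic-cache-store duration="60" />
        <base />
    </outbound>
</policies>
```

A lower score threshold requires prompts to be closer matches before a cached response is returned, which trades cache hit rate for answer relevance.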

Authentication and Authorization

Handling authentication and authorization for Azure OpenAI API endpoints in API Management helps enterprises when multiple AI apps and agents access multiple OpenAI endpoints. With API Management, API keys are not shared directly with clients. Instead, the gateway uses keyless authentication via managed identities, or keys stored as named values.
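For the key-based option, a minimal sketch might look like the fragment below, where the gateway injects the key so that clients never see it. The named value `azure-openai-key` is a hypothetical name and would need to exist in the API Management instance, ideally stored as a secret.

```xml
<inbound>
    <base />
    <!-- Assumption: a named value called "azure-openai-key" holds the Azure OpenAI key -->
    <set-header name="api-key" exists-action="override">
        <value>{{azure-openai-key}}</value>
    </set-header>
</inbound>
```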

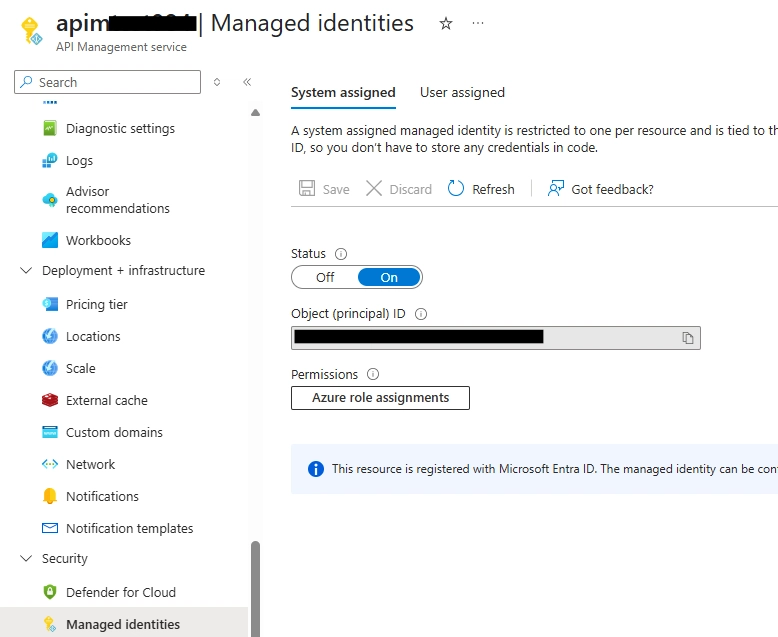

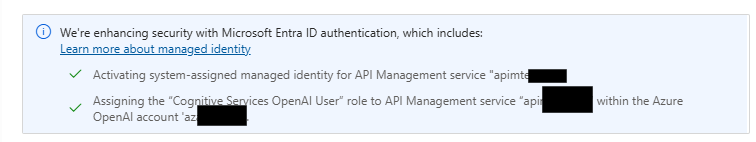

The recommended approach is to use managed identities to authenticate to Azure OpenAI API endpoints:

- Enable a managed identity on the API Management instance.

- Assign the Cognitive Services OpenAI User role on the Azure OpenAI resource to the API Management managed identity.

If the Azure OpenAI API is imported directly into API Management, authentication using the managed identity is configured automatically.
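When the policy is configured by hand instead, the steps above can be sketched roughly as follows. The variable name `managed-id-access-token` is an arbitrary choice for this example, and the fragment assumes a system-assigned identity that already holds the role assignment.

```xml
<inbound>
    <base />
    <!-- Obtain a token for Azure AI services using the gateway's managed identity -->
    <authentication-managed-identity
        resource="https://cognitiveservices.azure.com"
        output-token-variable-name="managed-id-access-token"
        ignore-error="false" />
    <!-- Forward the token to Azure OpenAI as a bearer token -->
    <set-header name="Authorization" exists-action="override">
        <value>@("Bearer " + (string)context.Variables["managed-id-access-token"])</value>
    </set-header>
</inbound>
```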

Note: These policies are also supported for a wider range of large language models through the Azure AI Model Inference API.

Production-ready accelerator

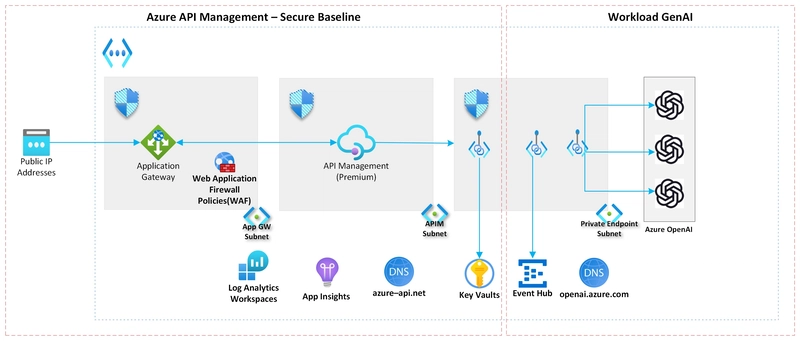

The API Management landing zone accelerator provides packaged guidance, with a reference architecture and implementation, for deploying an AI gateway with API Management and multiple Azure OpenAI instances provisioned in a secure baseline. The accelerator implements policies for tracking token usage and managing traffic across multiple OpenAI instances.
