Docker Compose and Devcontainers for Microservices Development


Introduction

Imagine this scenario: you are working as a developer in a fast-growing company. There are many teams on board, each developing separate services that are intertwined with one another. Let's say you have:

- App 1 - developed mostly by Team A, with occasional changes by Teams B and C. It communicates with App 2 and App 3 via REST.

- App 2 - developed primarily by Teams B and A. It serves as an API for both App 1 and App 3, while also communicating with other microservices via gRPC or Kafka.

- App 3 - developed mostly by Team C, with support from Team A, and occasional changes by Team B. This one interacts with microservice Y.

- …plus 5 more microservices, each with its own dependencies, databases, or message queues, developed by various combinations of teams.

The point is that developers frequently need to work on multiple services, often simultaneously. They need to see how their changes affect the service they are working on, and all dependent services, without the friction and delay of deploying to a shared staging environment. Onboarding new team members needs to be swift, not a week-long setup marathon wrestling with dependencies.

So how should we approach this problem?

Traditional local development

This is the approach we all know: we set up every service we need locally on our machine, manually installing all dependencies and following the steps in each README. This is good enough for a single repository, but it brings problems at scale:

- Port management - every service needs its own set of ports, and these addresses have to be configured in the other services. It is not easy to tell which service `localhost:3000`, `localhost:3001`, or `localhost:3010` is responsible for.

- Dependency management - every project needs its own version of Node.js, Go, Ruby, etc.

- "Works on my machine" - differences in OS, installed packages, and configuration lead to inconsistencies.

- CORS, Web Storage API, etc. - different ports mean different origins. This causes many issues for frontend apps, like blocked requests or missing cookies.

- Onboarding difficulties - every new team member has to go through many README files, each of which differs from the others.
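To make the origin problem concrete: a browser treats scheme, host and port together as the origin. Web Storage such as `localStorage` is scoped per origin, and requests to a different origin go through CORS checks. The short TypeScript sketch below uses the standard `URL` API (the URLs are illustrative) to compare the per-port setup with a single entry point that routes by path.

```typescript
// Illustrative check: two URLs are same-origin only if scheme, host and port all match.
function sameOrigin(a: string, b: string): boolean {
  return new URL(a).origin === new URL(b).origin;
}

// Traditional setup: each frontend runs on its own port.
const separatePorts = sameOrigin("http://localhost:3000/", "http://localhost:3001/");

// Single entry point with path-based routing: all frontends share one origin.
const sharedEntryPoint = sameOrigin("http://localhost:3000/svc1", "http://localhost:3000/svc2");

console.log({ separatePorts, sharedEntryPoint }); // { separatePorts: false, sharedEntryPoint: true }
```

Only the path differs in the second comparison, which is why putting every frontend behind one host and port removes this class of problems.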

Devcontainers with Docker Compose and Traefik

This tutorial assumes familiarity with Docker, Docker Compose, and Devcontainers, and that each of your services already has a Dockerfile.

Concept Overview

The solution consists of:

- A central repository containing:

  - A Makefile with utilities (e.g., `init` to pull service repositories)

  - A Docker Compose configuration for orchestrating services

- Service repositories with:

  - Dockerfiles for containerization

  - Devcontainer configurations for development

-

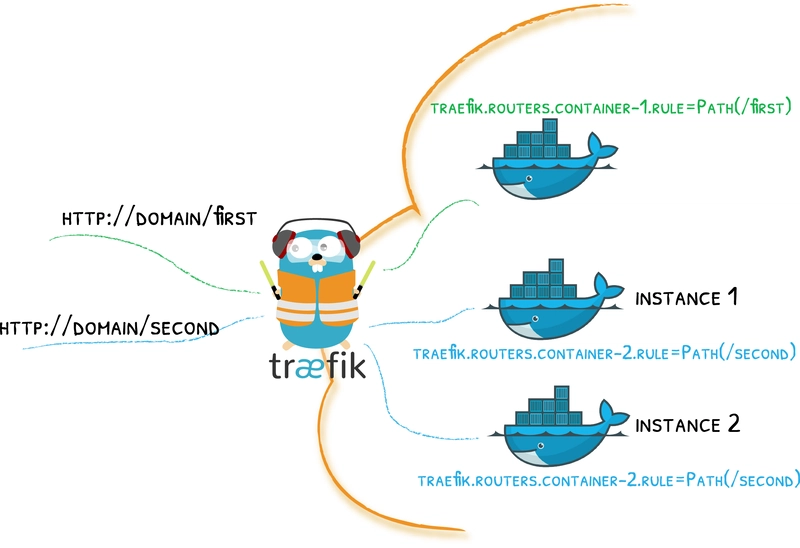

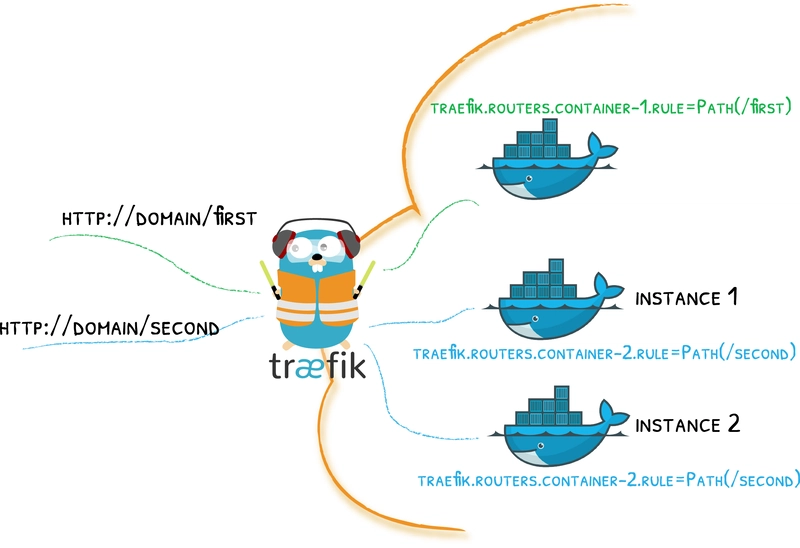

Traefik integration:

- Routes traffic to services. For example, you can have multiple frontends accessible on the same domain, like

localhost:3000/svc1,localhost:3000/svc2. - Provides a dashboard (

localhost:5555by default) to inspect configurations of routings.

- Routes traffic to services. For example, you can have multiple frontends accessible on the same domain, like

Source: Traefik Docker Provider Documentation
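As a rough mental model of path-based routing, here is a simplified TypeScript sketch of how a router rule that combines `Host` and `PathPrefix` selects a backend. It is not Traefik's implementation: `matchRoute`, the `Route` shape and the `backend` addresses are hypothetical, and only the Host/PathPrefix combination used in this article is modelled.

```typescript
// Simplified model of Traefik's Host + PathPrefix matching, for illustration only.
interface Route {
  name: string;       // router name, e.g. "svc1"
  hosts: string[];    // accepted Host(...) values, lowercase
  pathPrefix: string; // PathPrefix(...) value
  backend: string;    // where the request would be forwarded (illustrative)
}

function matchRoute(routes: Route[], hostHeader: string, path: string): Route | undefined {
  // Host matching ignores the port (":3000") and letter case.
  const host = hostHeader.replace(/:\d+$/, "").toLowerCase();
  const candidates = routes.filter(
    (r) => r.hosts.includes(host) && path.startsWith(r.pathPrefix),
  );
  // Traefik orders routers by rule length by default; with identical Host()
  // parts that means the longer path prefix is tried first.
  candidates.sort((a, b) => b.pathPrefix.length - a.pathPrefix.length);
  return candidates[0]; // undefined => no router matches (Traefik answers 404)
}

const hosts = ["localhost", "host.docker.internal"];
const routes: Route[] = [
  { name: "svc1", hosts, pathPrefix: "/svc1", backend: "svc1:5173" },
  { name: "svc2", hosts, pathPrefix: "/svc2", backend: "svc2:8080" },
];

console.log(matchRoute(routes, "localhost:3000", "/svc1/index.html")?.backend); // svc1:5173
console.log(matchRoute(routes, "host.docker.internal:3000", "/svc2/api/data")?.backend); // svc2:8080
console.log(matchRoute(routes, "localhost:3000", "/unknown")); // undefined
```

In this model `PathPrefix` is a plain string prefix, so `/svc1` would also match `/svc10`. Check the Traefik documentation for the exact matching rules of your version.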

Key Benefits

- Flexible service management:

  - Run selected services using `COMPOSE_PROFILES`

  - Stop, rebuild, and restart services with ease

- Seamless development experience:

  - Switch between running containerized services and developing in devcontainers

  - Maintain identical networking configurations in both environments

  - No more conflicting ports

Set up the base Compose repository

The first step is to create an empty repository and, inside it, a Makefile with the essential targets:

```makefile
# Repositories to clone (placeholders, replace with your own)
REPOS = \
    git@github.com:some/repository1.git \
    git@github.com:some/repository2.git \
    git@github.com:some/repository3.git \
    ...

# Directory names derived from the URLs, e.g. repository1, repository2, ...
REPO_DIRS = $(basename $(notdir $(REPOS)))

.PHONY: init dev down

# Clones a single repository into a directory named after it
$(REPO_DIRS):
	git clone --filter=blob:none $(filter %/$@.git,$(REPOS))

# Responsible for pulling repositories
init: $(REPO_DIRS)

# Will be used as a shortcut to start all services
dev:
	docker compose up -d

# Stops and removes all service containers
down:
	COMPOSE_PROFILES=all docker compose down
```
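The `init` target relies on each repository being cloned into a directory named after it (for example `repository1`), and on finding the URL again from that name. This TypeScript sketch mirrors that mapping outside of make, using the placeholder URLs from the Makefile; `repoDir` and `repoUrl` are hypothetical helpers for illustration. Matching on the `/<dir>.git` suffix keeps `repository1` from accidentally matching a name like `myrepository1.git`.

```typescript
// Placeholder URLs, same as in the Makefile's REPOS list.
const REPOS: string[] = [
  "git@github.com:some/repository1.git",
  "git@github.com:some/repository2.git",
  "git@github.com:some/repository3.git",
];

// Directory name for a repository: last path segment without ".git"
// (what make's $(basename $(notdir url)) produces).
function repoDir(url: string): string {
  const lastSegment = url.slice(url.lastIndexOf("/") + 1);
  return lastSegment.replace(/\.git$/, "");
}

// Find the URL for a directory by its "/<dir>.git" suffix.
function repoUrl(dir: string, repos: string[]): string | undefined {
  return repos.find((url) => url.endsWith(`/${dir}.git`));
}

console.log(REPOS.map(repoDir)); // [ 'repository1', 'repository2', 'repository3' ]
console.log(repoUrl("repository2", REPOS)); // git@github.com:some/repository2.git
```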

For Traefik to work correctly, we first need to add a traefik.yml configuration file:

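```yaml
log:
  level: INFO

api:
  dashboard: true
  # Serves the dashboard without authentication on port 8080;
  # acceptable for local development only
  insecure: true

providers:
  docker:
    endpoint: unix:///var/run/docker.sock
    exposedByDefault: false
    # This must match the network name used by your services
    network: shared-network

ping: {}
```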

Next, let's create `compose.yml`. In this file we will:

- Create a Traefik service to handle routing between our microservices

- Define all our microservices with appropriate configurations

- Create an attachable network, allowing devcontainers to connect to the same network

- Assign profiles to each service for selective launching:

  - Each service gets its own profile (same as the service name)

  - All services get the `all` profile, so they can all be stopped at once

  - You can group related services by assigning them the same profile name. For example, if a database is useless without its API service, give both the same profile name so they always start together

- Configure the build process for each service, including environment variable handling
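To illustrate how profiles decide what starts, here is a simplified TypeScript model of Compose's rule: a service without `profiles` (Traefik in this setup) is always enabled, and a service with profiles is enabled when at least one of them appears in `COMPOSE_PROFILES`. `activeServices` is a hypothetical helper; it leaves out the extra case where you name a service explicitly on the command line.

```typescript
// Simplified model of Compose profile selection (illustration only).
type Services = Record<string, { profiles?: string[] }>;

function activeServices(services: Services, composeProfiles: string): string[] {
  // COMPOSE_PROFILES is a comma-separated list of profile names.
  const active = new Set(
    composeProfiles.split(",").map((p) => p.trim()).filter((p) => p !== ""),
  );
  return Object.entries(services)
    .filter(([, svc]) => !svc.profiles || svc.profiles.some((p) => active.has(p)))
    .map(([name]) => name);
}

// Same service names and profiles as in this article's compose.yml
const services: Services = {
  traefik: {},
  "postgres-db": { profiles: ["postgres-db", "all"] },
  svc1: { profiles: ["svc1", "all"] },
  svc2: { profiles: ["svc2", "all"] },
};

console.log(activeServices(services, "postgres-db,svc1")); // [ 'traefik', 'postgres-db', 'svc1' ]
console.log(activeServices(services, "all")); // [ 'traefik', 'postgres-db', 'svc1', 'svc2' ]
```

This is also why the `down` target sets `COMPOSE_PROFILES=all`: every service carries the `all` profile, so none of them is skipped.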

Here's the complete compose.yml configuration:

```yaml
services:
  traefik:
    image: traefik:v2.11.0
    ports:
      - "3000:80" # Services you expose will be visible on localhost:3000/**
      - "5555:8080" # Traefik dashboard
    volumes:
      - ./traefik.yml:/etc/traefik/traefik.yml:ro
      - /var/run/docker.sock:/var/run/docker.sock
    command: --configFile=/etc/traefik/traefik.yml
    networks:
      - default

  postgres-db:
    profiles:
      - postgres-db
      - all
    image: postgres:16.2
    networks:
      - default
    # ...

  # Let's assume svc1 is one of your repositories
  svc1:
    hostname: svc1
    profiles:
      - svc1
      - all
    image: svc1:latest
    env_file:
      # Env files loaded into the container when the service starts.
      # Important: they are NOT used during the image build; build args
      # are handled separately below.
      - svc1/.env.example
      # If it exists, .env.local overrides variables from .env.example
      # (the long path/required syntax needs Docker Compose 2.24.0 or later)
      - path: svc1/.env.local
        required: false
    networks:
      - default
    build:
      context: ./svc1
      dockerfile: Dockerfile
      args:
        # These values are passed to the Dockerfile's ARG instructions.
        # Env files can't be used here directly, so map each argument to a
        # variable and give it a default value:
        - API_KEY=${API_KEY:-your_default_api_key}
        - LOG_LEVEL=${LOG_LEVEL:-info}
    labels:
      # To expose this service on localhost:3000/svc1, add these labels.
      # Traefik routes any traffic matching the rule to svc1 on port 5173.
      - traefik.enable=true
      - traefik.http.services.svc1.loadbalancer.server.port=5173
      - traefik.http.routers.svc1.rule=(Host(`localhost`) || Host(`host.docker.internal`)) && PathPrefix(`/svc1`)
    # ...

  # Placeholder for another service (e.g., svc2)
  svc2:
    hostname: svc2
    profiles:
      - svc2
      - all
    image: svc2:latest
    env_file:
      - svc2/.env.example
    networks:
      - default
    build:
      context: ./svc2
      dockerfile: Dockerfile
    labels:
      # Expose svc2 on localhost:3000/svc2, routing to its internal port (e.g., 8080)
      - traefik.enable=true
      - traefik.http.services.svc2.loadbalancer.server.port=8080 # Adjust port as needed
      - traefik.http.routers.svc2.rule=(Host(`localhost`) || Host(`host.docker.internal`)) && PathPrefix(`/svc2`)

networks:
  default:
    # This is crucial for devcontainers to be able to connect to this network
    attachable: true
    name: shared-network

volumes:
  # ...
```

Now let's create `.env` and `.env.example` files. Docker Compose loads the `.env` file from the project directory automatically when you run `docker compose`:

Example `.env` file:

```
COMPOSE_PROFILES=postgres-db,svc1,...
```

Now you can run `make dev`, which starts Traefik (it has no profile, so it always runs) and every service whose profile is listed in `COMPOSE_PROFILES`. When an image is not available (as in svc1's case), Compose builds it based on the `build` instructions.

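The build process can be tricky. Build args are interpolated from your shell environment and the project's `.env` file, not from a service's `env_file` entries, so there is no easy way to feed a service's env files into the first build. That's why every argument has a default value.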
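The `${VAR:-default}` form used for the build args falls back to the default when the variable is unset or empty; the related `${VAR-default}` form falls back only when it is unset. A minimal TypeScript sketch of that substitution rule, using a hypothetical `interpolate` helper that handles only these two forms, not Compose's full interpolation syntax:

```typescript
// Simplified model of Compose's ${VAR:-default} and ${VAR-default} substitution.
function interpolate(template: string, env: Record<string, string | undefined>): string {
  return template.replace(
    /\$\{([A-Za-z_][A-Za-z0-9_]*)(:?)-([^}]*)\}/g,
    (_match, name: string, colon: string, fallback: string) => {
      const value = env[name];
      if (value === undefined) return fallback;            // unset: both forms use the default
      if (colon === ":" && value === "") return fallback;  // empty: only ":-" uses the default
      return value;
    },
  );
}

const arg = "API_KEY=${API_KEY:-your_default_api_key}";
console.log(interpolate(arg, {}));                    // API_KEY=your_default_api_key
console.log(interpolate(arg, { API_KEY: "" }));       // API_KEY=your_default_api_key
console.log(interpolate(arg, { API_KEY: "secret" })); // API_KEY=secret
console.log(interpolate("LOG_LEVEL=${LOG_LEVEL-info}", { LOG_LEVEL: "" })); // LOG_LEVEL=
```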

Enhance the rebuilding flow

Our setup revolves around keeping all environment files for services in their respective directories. Now let's implement a better process for rebuilding images.

We'll introduce a new `build` target in our Makefile, which first loads the env files related to a service and then rebuilds its image. To do this, add the following to your Makefile:

```makefile
# [... existing code ...]

# List of all env files, in order of least relevant to most relevant.
# The build target sources every file that exists, in this order.
# Customize for your use case.
ENV_FILES = .env.example .env.development .env .env.local .env.development.local

.PHONY: build

# `make build` rebuilds all services defined in compose;
# `make build svc1 svc2` rebuilds only the listed services, with their env
# files exported so that compose can use them for build args.
# `.` is used instead of `source` because make runs recipes with /bin/sh,
# and the subshell keeps one service's variables out of the next build.
build:
	@services="$(filter-out $@,$(MAKECMDGOALS))"; \
	if [ -z "$$services" ]; then \
		docker compose build; \
		exit $$?; \
	fi; \
	for service in $$services; do \
		echo "Building service: $$service"; \
		( \
			set -a; \
			for env_file in $(ENV_FILES); do \
				if [ -f "$$service/$$env_file" ]; then \
					. "./$$service/$$env_file"; \
				fi; \
			done; \
			set +a; \
			docker compose build "$$service" \
		) || exit 1; \
	done

# Catch-all rule: lets service names be passed as extra goals to `make build`
%:
	@:
```

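Now when you run `make build svc1`, your service will be built with the build-arg values defined in your repository's env files (or the default ones if no env files are found). Since the env files are sourced by the shell, they need to contain shell-compatible `KEY=value` lines; quote values that contain spaces.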
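The order of `ENV_FILES` matters: the files are sourced one after another, so a variable set in a later, more specific file wins. This simplified TypeScript model (hypothetical helpers; plain `KEY=VALUE` lines only, without the quoting and expansion the shell performs) shows the resulting precedence with made-up contents for svc1:

```typescript
// Same order as ENV_FILES in the Makefile: least to most relevant.
const ENV_FILES = [".env.example", ".env.development", ".env", ".env.local", ".env.development.local"];

// Parses plain KEY=VALUE lines; blank lines and # comments are skipped.
function parseEnv(contents: string): Record<string, string> {
  const vars: Record<string, string> = {};
  for (const raw of contents.split("\n")) {
    const line = raw.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    vars[line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
  }
  return vars;
}

// `files` maps file names to contents; missing files are simply absent.
function resolveBuildEnv(files: Record<string, string | undefined>): Record<string, string> {
  let env: Record<string, string> = {};
  for (const name of ENV_FILES) {
    const contents = files[name];
    if (contents !== undefined) env = { ...env, ...parseEnv(contents) }; // later files override
  }
  return env;
}

// Hypothetical contents of svc1's env files
const svc1Files = {
  ".env.example": "API_KEY=example_key\nLOG_LEVEL=info",
  ".env.local": "# my overrides\nLOG_LEVEL=debug",
};

console.log(resolveBuildEnv(svc1Files)); // { API_KEY: 'example_key', LOG_LEVEL: 'debug' }
```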

Devcontainers in services

Currently, you have configured Docker Compose in a way that makes it easy to run and rebuild your services. They can communicate with each other because they're in the same network. But this setup is mainly useful when you're not actively developing these services.

To enable easy development, navigate to your service directory and create a .devcontainer directory. For simplicity, I'll present a configuration for a Node application.

Inside the directory, create two files:

devcontainer.json:

```jsonc
{
  "name": "Node",
  "dockerComposeFile": "./docker-compose.devcontainer.yaml",
  "service": "base",
  "workspaceFolder": "/workspaces/${localWorkspaceFolderBasename}",
  "customizations": {
    "vscode": {
      "settings": {
        "remote.autoForwardPorts": false
      },
      "extensions": [
        // ... list of extensions, if any ...
      ]
    }
  }
}
```

And docker-compose.devcontainer.yaml:

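```yaml
services:
  base:
    image: node:22.12.0
    command: sleep infinity
    hostname: svc1
    networks:
      # Join the network created by the main compose.yml
      - shared-network
    # host.docker.internal works out of the box on Docker Desktop;
    # on Linux it has to be mapped to the host gateway explicitly
    extra_hosts:
      - "host.docker.internal:host-gateway"
    volumes:
      - ../../:/workspaces:cached
    labels:
      # These must be the same as in the main compose.yml
      - traefik.enable=true
      - traefik.http.services.svc1.loadbalancer.server.port=5173
      - traefik.http.routers.svc1.rule=(Host(`localhost`) || Host(`host.docker.internal`)) && PathPrefix(`/svc1`)

networks:
  shared-network:
    external: true
```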

And that's all! When you open this repository in a devcontainer, you'll have the whole environment ready for development.

Because the devcontainer shares the shared-network with the services run by the main compose.yml, you can connect from your devcontainer (svc1) to other services (svc2) in two main ways:

- Direct service-to-service: Use the service name defined in `compose.yml` as the hostname (e.g., `fetch('http://svc2:8080/api/data')`). This works because Docker's internal DNS resolves service names on the shared network. Use the internal port of the target service (e.g., `8080` for `svc2` in our example).

- Via Traefik: Access services through the Traefik proxy using the host machine's address and the path defined in the Traefik rule (e.g., `fetch('http://host.docker.internal:3000/svc2/api/data')`). `host.docker.internal` is a special DNS name that resolves to the host machine from within a Docker container. Traefik then routes the request based on the path (`/svc2`) to the correct container and port.
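If you switch between the two modes, it can help to build the URLs in one place. A minimal sketch, assuming the ports and prefixes from the svc2 example (internal port 8080, Traefik published on host port 3000 with prefix `/svc2`); `serviceUrl`, `INTERNAL_PORTS` and `TRAEFIK_ORIGIN` are hypothetical names, not part of any library:

```typescript
type Mode = "direct" | "traefik";

// Assumption: mirrors the loadbalancer.server.port label of each service.
const INTERNAL_PORTS: Record<string, number | undefined> = { svc2: 8080 };
// Assumption: Traefik is published on host port 3000, as in compose.yml.
const TRAEFIK_ORIGIN = "http://host.docker.internal:3000";

function serviceUrl(service: string, path: string, mode: Mode): string {
  if (mode === "traefik") {
    // Traefik matches PathPrefix(`/<service>`) and forwards the full path.
    return `${TRAEFIK_ORIGIN}/${service}${path}`;
  }
  const port = INTERNAL_PORTS[service];
  if (port === undefined) throw new Error(`Unknown internal port for ${service}`);
  // Docker's embedded DNS resolves the service name on shared-network.
  return `http://${service}:${port}${path}`;
}

console.log(serviceUrl("svc2", "/api/data", "direct"));  // http://svc2:8080/api/data
console.log(serviceUrl("svc2", "/api/data", "traefik")); // http://host.docker.internal:3000/svc2/api/data

// Inside svc1 you would then call, for example:
// const res = await fetch(serviceUrl("svc2", "/api/data", "direct"));
```

In a real app you would more likely read the mode or base URL from an environment variable than hard-code it.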

You will still have your local-development features, like hot-reload or debugging, within the devcontainer.

Summary

We've successfully created a development environment that allows teams to work efficiently on interconnected microservices without the usual friction:

- Consistent environments across the team - no more "works on my machine" issues or week-long setup processes.

- Flexible service management - choose which services to run based on your current task using profiles

- Easy switching between modes - run services in containers when just consuming them, or switch to devcontainers when actively developing

- Simplified networking - consistent service discovery regardless of whether you're running services or developing them

Use this guide as a baseline, and extend the setup according to your specific needs.
