Crafting Minimal Viable IAM Permissions for Amazon Bedrock

Most AWS accounts require narrowly scoped minimum viable permissions (MVP) for each user group. These are usually managed through landing-zone templates built on AWS Organizations, where the account governor still has to define the scope of access by hand. What if we could generate permissions policies from a prompt?

Minimal Viable IAM Permissions for Amazon Bedrock with Langchain

As cloud governance specialists, we now have access to powerful tools such as Amazon Bedrock and Langchain. But with great power comes great responsibility, especially when it comes to security. In this post, we'll explore how to create minimal viable IAM (Identity and Access Management) permissions for Amazon Bedrock when using it with Langchain, with Python code examples along the way.

Understanding the Stack

- Amazon Bedrock: A fully managed service offering high-performing foundation models.

- Langchain: A framework for developing applications powered by language models.

- IAM: AWS's Identity and Access Management service for controlling access to AWS resources.

The Importance of Minimal Permissions

Implementing the principle of least privilege is crucial when working with AI services. It helps:

- Reduce the potential attack surface

- Minimize the risk of unintended actions

- Comply with security best practices

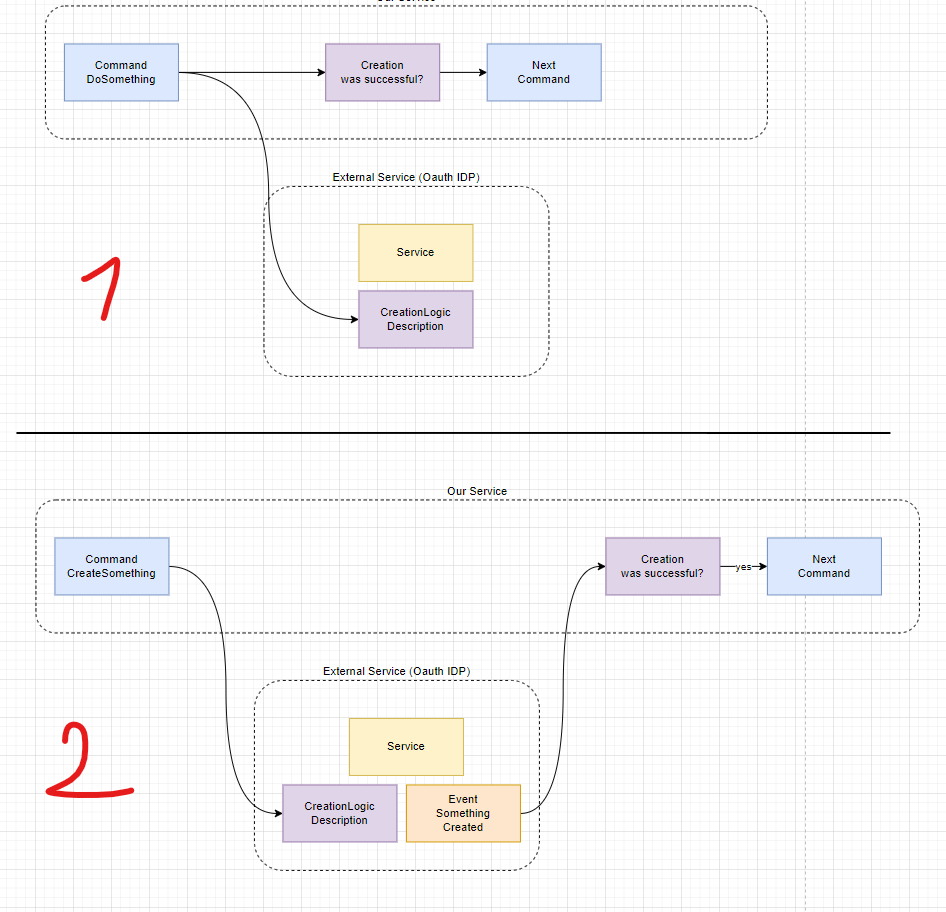

Key Bedrock Actions for Langchain Integration

When using Langchain with Bedrock, we typically need these actions:

- bedrock:InvokeModel: To call the model

- bedrock:InvokeModelWithResponseStream: To stream responses (needed only if you enable streaming)

- bedrock:ListFoundationModels: To list available models (optional)

- bedrock:GetFoundationModel: To get model details (optional)

Sample IAM Policy

Here's a minimal IAM policy for Bedrock use with Langchain:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "bedrock:InvokeModel"
      ],
      "Resource": "arn:aws:bedrock:us-west-2::foundation-model/anthropic.claude-v2"
    }
  ]
}
```

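This policy allows exactly one thing: invoking the Claude v2 model, which is commonly used with Langchain, in us-west-2. Any other model, region, or Bedrock action falls through to IAM's implicit deny.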
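If you need the same pattern for other models or regions, generating the policy can be less error-prone than hand-editing JSON. The sketch below is a minimal Python helper under a few assumptions: the function names are hypothetical, the streaming and discovery flags are optional extras, and bedrock:ListFoundationModels is granted on Resource "*" because, to our understanding, it does not support resource-level permissions (confirm this in the Service Authorization Reference for Amazon Bedrock).

```python
import json


def foundation_model_arn(region: str, model_id: str) -> str:
    # Foundation models are AWS-owned, so the account-ID field of the ARN is empty.
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"


def build_bedrock_policy(
    region: str,
    model_ids: list[str],
    include_streaming: bool = False,
    include_discovery: bool = False,
) -> dict:
    """Sketch: build a least-privilege identity policy for specific Bedrock models."""
    model_arns = [foundation_model_arn(region, m) for m in model_ids]

    invoke_actions = ["bedrock:InvokeModel"]
    if include_streaming:
        # Needed only if the Langchain client streams responses.
        invoke_actions.append("bedrock:InvokeModelWithResponseStream")

    statements = [
        {"Sid": "InvokeSelectedModels", "Effect": "Allow",
         "Action": invoke_actions, "Resource": model_arns}
    ]

    if include_discovery:
        # Assumption: GetFoundationModel can be scoped to the same model ARNs.
        statements.append({"Sid": "DescribeSelectedModels", "Effect": "Allow",
                           "Action": ["bedrock:GetFoundationModel"], "Resource": model_arns})
        # Assumption: ListFoundationModels has no resource-level scoping, so it needs "*".
        statements.append({"Sid": "ListModels", "Effect": "Allow",
                           "Action": ["bedrock:ListFoundationModels"], "Resource": "*"})

    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    policy = build_bedrock_policy("us-west-2", ["anthropic.claude-v2"])
    print(json.dumps(policy, indent=2))
```

With the defaults, build_bedrock_policy("us-west-2", ["anthropic.claude-v2"]) yields a single statement equivalent to the sample policy; the only difference is that Resource is a list, which IAM treats the same as a single string.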

Python Code Example

Let's see how to use this with Langchain:

```python
import boto3
from langchain_community.llms import Bedrock  # older releases: from langchain.llms import Bedrock

# Assume AWS credentials are set up in your environment
bedrock_client = boto3.client(
    service_name="bedrock-runtime",
    region_name="us-west-2",  # must match the region in the policy's model ARN
)

# Initialize the Bedrock LLM
llm = Bedrock(
    model_id="anthropic.claude-v2",
    client=bedrock_client,
    model_kwargs={"max_tokens_to_sample": 256},
)

# Use the LLM (invoke() replaces calling the LLM object directly)
response = llm.invoke(
    "Produce an IAM permissions policy with only read and write access "
    "to the bucket name <'INSERT BUCKET NAME HERE'> only"
)
print(response)
```

In this example, we pass a boto3 bedrock-runtime client to Langchain's Bedrock integration. Because the code only calls InvokeModel on Claude v2 in us-west-2, the IAM policy above is enough for it to run.
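Since the model's answer is free text, it is worth checking the generated policy before attaching it anywhere. The following is a rough sketch, not a full IAM validator: it pulls the outermost {...} span out of the response, parses it, and compares every Allow statement against an allowlist. The allowlist, the bucket name my-bucket, and the function names are hypothetical stand-ins for whatever "read and write access" means in your environment.

```python
import json

# Hypothetical allowlist: our reading of "read and write access" to one bucket.
ALLOWED_ACTIONS = {"s3:GetObject", "s3:PutObject", "s3:ListBucket"}


def _as_list(value):
    # IAM allows Action/Resource as a single string or a list of strings.
    return [value] if isinstance(value, str) else list(value)


def extract_policy_json(text: str) -> dict:
    """Crude heuristic: parse the span from the first '{' to the last '}'."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    return json.loads(text[start : end + 1])


def check_bucket_policy(policy: dict, bucket: str) -> list[str]:
    """Return human-readable problems; an empty list means nothing was flagged."""
    allowed_resources = {f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"}
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]

    problems = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for key in ("NotAction", "NotResource"):
            if key in stmt:
                problems.append(f"statement {i}: uses {key}")
        for action in _as_list(stmt.get("Action", [])):
            if action not in ALLOWED_ACTIONS:
                problems.append(f"statement {i}: unexpected action {action!r}")
        for resource in _as_list(stmt.get("Resource", [])):
            if resource not in allowed_resources:
                problems.append(f"statement {i}: unexpected resource {resource!r}")
    return problems
```

For example, check_bucket_policy(extract_policy_json(response), "my-bucket") returns an empty list when the draft stays within the allowlist, and one message per offending action or resource otherwise.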

Best Practices

- Use IAM roles: Attach these permissions to IAM roles rather than individual users.

- Regular audits: Periodically review and update your IAM policies.

- Environment-specific permissions: Use different permissions for development, testing, and production environments.
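Regular audits are easier when the obvious red flags are checked automatically. Here's a minimal sketch that scans a policy document for wildcard actions, Resource "*", and NotAction/NotResource. The exemption set is a hypothetical, hand-maintained list of actions we believe cannot be scoped to a resource; verify each entry against AWS documentation before relying on it.

```python
# Hypothetical exemption list: actions we believe cannot be scoped to a specific
# resource, so Resource "*" is expected for them. Verify before relying on it.
RESOURCE_WILDCARD_OK = {"bedrock:listfoundationmodels"}


def _as_list(value):
    # IAM accepts a single string or a list of strings for Action/Resource.
    return [value] if isinstance(value, str) else list(value)


def find_broad_grants(policy: dict) -> list[str]:
    """Flag Allow statements that look broader than least privilege."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]

    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        # Action names are case-insensitive in IAM, so compare in lower case.
        actions = {a.lower() for a in _as_list(stmt.get("Action", []))}
        resources = _as_list(stmt.get("Resource", []))
        for action in sorted(actions):
            if "*" in action:
                findings.append(f"statement {i}: wildcard action {action!r}")
        unscoped = sorted(actions - RESOURCE_WILDCARD_OK)
        if "*" in resources and unscoped:
            findings.append(f"statement {i}: Resource '*' for {unscoped}")
        for key in ("NotAction", "NotResource"):
            if key in stmt:
                findings.append(f"statement {i}: uses {key}")
    return findings


if __name__ == "__main__":
    broad = {"Version": "2012-10-17",
             "Statement": [{"Effect": "Allow", "Action": "bedrock:*", "Resource": "*"}]}
    for finding in find_broad_grants(broad):
        print(finding)
```

Running a check like this in CI, or on a schedule against exported policies, turns "periodically review" into a concrete step; anything it flags still needs a human decision.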

Troubleshooting

If you encounter permission issues, check the following:

- Ensure the IAM policy is attached correctly to your role or user.

- Verify that the region in your code matches the region in the IAM policy.

- Check AWS CloudTrail logs for specific permission denied errors.
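To see why a region mismatch produces an access-denied error, here's a deliberately simplified model of how an Allow statement matches a request. It is only an illustration: it treats action names as case-insensitive, uses shell-style * and ? wildcards via fnmatch, and ignores explicit Deny, conditions, NotAction, permission boundaries and SCPs, all of which real IAM evaluation takes into account.

```python
from fnmatch import fnmatchcase

# The sample policy from this post.
SAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["bedrock:InvokeModel"],
        "Resource": "arn:aws:bedrock:us-west-2::foundation-model/anthropic.claude-v2",
    }],
}


def _as_list(value):
    return [value] if isinstance(value, str) else list(value)


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified: True if any Allow statement matches both action and resource.

    Ignores explicit Deny, Condition, NotAction/NotResource, permission
    boundaries and SCPs, so a True result does not prove the call will succeed.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        action_ok = any(fnmatchcase(action.lower(), p.lower())
                        for p in _as_list(stmt.get("Action", [])))
        resource_ok = any(fnmatchcase(resource, p)
                          for p in _as_list(stmt.get("Resource", [])))
        if action_ok and resource_ok:
            return True
    # No matching Allow: IAM's implicit deny applies.
    return False


if __name__ == "__main__":
    model = "foundation-model/anthropic.claude-v2"
    for region in ("us-west-2", "us-east-1"):
        arn = f"arn:aws:bedrock:{region}::{model}"
        print(region, is_allowed(SAMPLE_POLICY, "bedrock:InvokeModel", arn))
    # prints "us-west-2 True" then "us-east-1 False"
```

With the sample policy, the same model ID in us-east-1, or a streaming call via bedrock:InvokeModelWithResponseStream, finds no matching Allow and falls through to the implicit deny.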

Conclusion

By implementing minimal viable IAM permissions for Amazon Bedrock when using Langchain, you're taking a crucial step in securing your AI applications. Remember to review and update your permissions regularly as your application's needs evolve.

Happy secure coding!
