Bending .NET: How to Stack-Allocate Reference Types in C#

About

In C# interviews, it's quite common to encounter questions like "What are reference and value types?" or "Where are they stored in memory?" - and most of us instinctively answer, "Value types go on the stack, reference types on the heap."

But a good interviewer might follow up with a more thought-provoking question: "Can this ever be the other way around - value types on the heap, or reference types on the stack?"

Storing value types on the heap is relatively straightforward - boxing is a well-known concept. But the idea of placing reference types on the stack often feels counterintuitive or even impossible at first. However, if we take a step back and think logically: both the stack and the heap are just regions of memory within the same process - they're used differently, but not fundamentally different in nature.

In this article, we’ll explore whether it’s actually possible to place a reference type on the stack - and, more importantly, what practical benefits that might offer to us as developers.

Theory

Before diving into the demonstration, it’s helpful to refresh how class objects are represented in memory.

The key components are:

- Sync Block Index - 8 bytes used internally by the runtime for features like locking and thread synchronization

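- Method Table Pointer - 8 bytes pointing to metadata about the object, including information about its type and the layout of its methods. This is how the runtime knows which methods to call and where they are located. A managed reference to an object points at this field, not at the start of the sync block index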

- Field Data - the remaining bytes are used to store the actual values of the class's fields

If, for example, we have a class:

```csharp
class MyClass
{
    int a;  // 4 bytes
    byte b; // 1 byte
    bool c; // 1 byte
}
```

then its memory layout looks roughly like this (the field values are examples):

| Offset | Size | Description |
|---|---|---|
| 0x00 | 8 bytes | Sync block index (reserved by the CLR) |
| 0x08 | 8 bytes | MethodTable pointer (type metadata) |
| 0x10 | 4 bytes | int a = 42 |
| 0x14 | 1 byte | byte b = 0xAB |
| 0x15 | 1 byte | bool c = true (0x01) |
| 0x16 | 2 bytes | Padding for alignment (rounds the object up to 24 bytes) |

The structure described above corresponds to a 64-bit process. In a 32-bit process, pointers occupy 4 bytes instead of 8, so the sync block index and the MethodTable pointer take 4 bytes each and the field data starts at offset 0x08.
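As a rough cross-check of the table, the instance size can be estimated from the pointer size and the field sizes. This is a back-of-the-envelope sketch, not the runtime's actual layout algorithm: it assumes the fields are packed with no padding between them (true for MyClass) and uses CoreCLR's minimum object size of three pointer-sized slots. `AlignUp` and `EstimateInstanceSize` are hypothetical helper names.

```csharp
using System;

// Rounds value up to the next multiple of alignment.
static int AlignUp(int value, int alignment) => (value + alignment - 1) / alignment * alignment;

// Estimate only: assumes the fields are packed with no padding between them.
static int EstimateInstanceSize(int fieldBytes)
{
    int ptr = IntPtr.Size;                       // 8 in a 64-bit process, 4 in a 32-bit one
    int raw = ptr /* sync block index */ + ptr /* MethodTable pointer */ + fieldBytes;
    return Math.Max(AlignUp(raw, ptr), 3 * ptr); // CoreCLR never allocates fewer than 3 slots
}

// MyClass: int a + byte b + bool c = 6 bytes of field data
int myClassFields = sizeof(int) + sizeof(byte) + sizeof(bool);
int estimate = EstimateInstanceSize(myClassFields);
Console.WriteLine($"MyClass: about {estimate} bytes"); // 24 in a 64-bit process (0x00..0x17 in the table)
```

In a 32-bit process the same estimate gives 4 + 4 + 6 = 14 bytes, rounded up to 16.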

It’s important to note that struct types do not include the initial 16 bytes used in class objects. The field data starts right at offset 0x00, which will be an important detail later on.

General Discussions

After diving into the theory, it becomes tempting to ask: What if we could allocate our new object directly on the stack - just as raw memory? Fortunately, C# gives us the tools to do exactly that. One of the key features here is the stackalloc byte[size] construct, which allows us to reserve a block of memory on the stack.

Used with a pointer, stackalloc yields a `byte*`, which lives in the realm of unsafe code. However, thanks to the evolution of .NET and the introduction of `Span<T>`, the result of stackalloc can also be assigned to a `Span<byte>`, so we can now safely work with this stack-allocated memory - without leaving the bounds of memory safety or sacrificing performance.
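A minimal sketch of that safe combination: `stackalloc` assigned to a `Span<byte>` (no unsafe context needed), and `MemoryMarshal.Cast` used to view the same bytes as pointer-sized slots, which is the view the object-construction code in this article relies on. The value `0x1234` is an arbitrary placeholder.

```csharp
using System;
using System.Runtime.InteropServices;

// Three pointer-sized slots on the current stack frame; no unsafe context required
Span<byte> raw = stackalloc byte[IntPtr.Size * 3];

// Reinterpret the same memory as IntPtr slots (no copy is made)
Span<IntPtr> slots = MemoryMarshal.Cast<byte, IntPtr>(raw);
slots[1] = (IntPtr)0x1234; // arbitrary placeholder value

// Reading the bytes back through the byte view shows both spans alias the same memory
IntPtr roundTrip = MemoryMarshal.Read<IntPtr>(raw.Slice(IntPtr.Size));
Console.WriteLine($"{slots.Length} slots, slot 1 = 0x{roundTrip.ToString("X")}");
```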

This leads us to two distinct ways of implementing our idea:

- Unsafe Approach – leveraging `unsafe` blocks, pointer arithmetic, and low-level memory manipulation
- Safe Approach – using only standard C# language features and `Span<T>`, without `unsafe` blocks or raw pointers

Unsafe

This is probably the simplest way to understand how an object can be manually constructed (allocated). If we break it down step by step, the process looks like this:

- Allocate memory for the future object - this can be done using `stackalloc byte[size]`
- Initialize the first 8 bytes to zero; they typically represent the sync block index
- Set the next 8 bytes to a pointer referencing the MethodTable - metadata that describes the object's type
- Convert the address of that MethodTable slot into a managed reference to a C# class instance. Up to this point, everything is treated as raw pointers, similar to how you would work in C++

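An important step in this process is calculating the total size of the object to allocate. This can be expressed as `IntPtr.Size * 2 + Unsafe.SizeOf<T>()`, which reserves two pointer-sized slots (one for the sync block index and one for the MethodTable pointer) plus room for the object's data. Keep in mind that for a class `T`, `Unsafe.SizeOf<T>()` returns the size of a reference (8 bytes in a 64-bit process), not the size of the instance's fields, so this expression only reserves enough room for classes whose fields fit into 8 bytes - such as the `ExampleClass` used in the benchmarks, whose heap instances take 24 bytes.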
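A quick way to see what `Unsafe.SizeOf<T>()` contributes to that expression is to print it for a few built-in types. `System.Version` (a class with four `int` fields) and `Guid` are used only as convenient examples, and `FormulaSize` is a hypothetical helper that evaluates the size expression from the text.

```csharp
using System;
using System.Runtime.CompilerServices;

// Reference types: the size of the reference, not of the instance it points to
Console.WriteLine(Unsafe.SizeOf<string>());     // equals IntPtr.Size
Console.WriteLine(Unsafe.SizeOf<Version>());    // equals IntPtr.Size, although Version holds four int fields

// Value types: the size of the value itself, with no object header
Console.WriteLine(Unsafe.SizeOf<Guid>());       // 16
Console.WriteLine(Unsafe.SizeOf<(int, int)>()); // 8

// The size expression from the text therefore gives 3 * IntPtr.Size for any class
static int FormulaSize<T>() => IntPtr.Size * 2 + Unsafe.SizeOf<T>();
Console.WriteLine(FormulaSize<Version>());      // 24 in a 64-bit process
```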

Another critical detail is how to obtain a pointer to the MethodTable. This can be done using `typeof(T).TypeHandle.Value`, which gives you a raw pointer to the runtime type information (the MethodTable) associated with the target class.
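To confirm that `typeof(T).TypeHandle.Value` is the same pointer the runtime stores in a real object, we can read that slot from an ordinary heap object. This sketch relies on CoreCLR implementation details: a reference points at the MethodTable slot, and the first field starts one pointer later. Reinterpreting the object as `StrongBox<byte>` is only a trick to get a GC-tracked reference to its first field, and `ReadMethodTable` is a hypothetical helper name.

```csharp
using System;
using System.Runtime.CompilerServices;

// Reads the MethodTable pointer of a heap object (sketch, CoreCLR layout assumed).
static IntPtr ReadMethodTable(object obj)
{
    // StrongBox<byte>.Value is the first field, one pointer after the MethodTable slot
    ref byte firstField = ref Unsafe.As<StrongBox<byte>>(obj).Value;
    // Step back one pointer-sized slot to reach the MethodTable pointer
    return Unsafe.Subtract(ref Unsafe.As<byte, IntPtr>(ref firstField), 1);
}

Console.WriteLine(ReadMethodTable(new object()) == typeof(object).TypeHandle.Value); // True
Console.WriteLine(ReadMethodTable("hello") == typeof(string).TypeHandle.Value);      // True
```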

Putting all the pieces together, the final code looks like this (most of these operations require an `unsafe` context):

```csharp
using System;
using System.Runtime.CompilerServices;

// Inside an unsafe method, where T is a class type parameter
byte* stackBuffer = stackalloc byte[IntPtr.Size * 2 + Unsafe.SizeOf<T>()]; // Buffer for storage on the stack
var pointer = (IntPtr*)stackBuffer;          // View the buffer as pointer-sized slots
*(pointer + 0) = IntPtr.Zero;                // Sync block index (optional: stackalloc memory is zeroed by default)
*(pointer + 1) = typeof(T).TypeHandle.Value; // Set MethodTable pointer
var ptr = (IntPtr)(pointer + 1);             // Address of the MethodTable slot, where an object reference points
var obj = Unsafe.As<IntPtr, T>(ref ptr);     // Reinterpret that address as a T reference, roughly *(T*)&ptr
```

As a result, we obtain an obj that behaves like a fully functional object. However, it's crucial to keep in mind the following important points:

- The object exists outside the visibility of the Garbage Collector (GC) - it is not tracked or managed by the GC
- When the method where stackalloc was called exits, the stack frame is torn down, and any attempt to use or return the object afterward can lead to undefined behavior


Safe

Now let’s move on to something even more interesting - constructing an object using pure C#, without resorting to raw pointers.

The sequence of steps remains essentially the same as in the unsafe approach. We’ll still rely on the same techniques to calculate the required size and to retrieve the pointer to the MethodTable.

In the end, the resulting code looks like this:

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

Span<byte> stackBuffer = stackalloc byte[IntPtr.Size * 2 + Unsafe.SizeOf<T>()]; // Buffer for storage on the stack
var buffer = MemoryMarshal.Cast<byte, IntPtr>(stackBuffer); // View it as pointer-sized slots
buffer[0] = IntPtr.Zero;                                    // Sync block index (optional)
buffer[1] = typeof(T).TypeHandle.Value;                     // Set MethodTable pointer
var objBuffer = buffer[1..];                                // A Span whose internal reference points at the MethodTable slot
var obj = Unsafe.As<Span<IntPtr>, T>(ref objBuffer);        // Reinterpret the Span's first field as a T reference (.NET 9+)
```

Both approaches are generally quite similar, but there's an interesting distinction - the `Unsafe.As<Span<IntPtr>, T>` line, where we convert a `Span<IntPtr>` into `T`.

To understand what's happening, let's recall how struct types are laid out in memory: a struct has no object header, so its field data starts right at the beginning of the memory block.

So, what does `Span<T>` actually look like under the hood? Here is a simplified version of its definition in .NET 7 and later:

```csharp
public readonly ref struct Span<T>
{
    internal readonly ref T _reference; // Reference to the first element
    private readonly int _length;       // Number of elements

    /* Other members omitted */
}
```

In other words, the first 8 bytes of a `Span<T>` (in a 64-bit process) hold a pointer to its first element - and in our case, that element is the slot containing the MethodTable pointer, exactly where a managed object reference is supposed to point. So when we reinterpret the Span as `T`, its first field is read as a reference to our object, and the runtime treats that pointer as a managed reference to a class instance - which is precisely what we need in this scenario.

It's also important to mention some limitations. This approach only works on .NET 9.0 and later, because `Unsafe.As<TFrom, TTo>` accepts ref struct type arguments (via the `allows ref struct` anti-constraint) only from that version onward. In earlier versions, such usage is not allowed, which means you can't simply pass a `Span<T>` this way.
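Putting the safe variant together end to end, here is a minimal sketch for .NET 9 or later. `CreateOnStack` is a hypothetical helper, not the author's `Stack<T>.Safe`, and `StrongBox<int>` is used only because it is a built-in class with a single public `int` field, so its instance fits in three pointer-sized slots in both 32-bit and 64-bit processes. As with the original technique, the object is valid only while the frame that owns the stack memory is alive.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Requires .NET 9+: Unsafe.As<TFrom, TTo> accepts a ref struct (Span<IntPtr>) as TFrom only from that version.
static T CreateOnStack<T>(Span<byte> memory) where T : class
{
    Span<IntPtr> slots = MemoryMarshal.Cast<byte, IntPtr>(memory);
    slots[0] = IntPtr.Zero;                           // sync block index
    slots[1] = typeof(T).TypeHandle.Value;            // MethodTable pointer
    Span<IntPtr> objBuffer = slots[1..];              // its _reference field holds the MethodTable slot's address
    return Unsafe.As<Span<IntPtr>, T>(ref objBuffer); // read that field back as a T reference
}

// StrongBox<int>: sync block index + MethodTable pointer + one int field = 3 pointer-sized slots.
// The memory belongs to this frame, so the object must not outlive it.
Span<byte> memory = stackalloc byte[IntPtr.Size * 3];
StrongBox<int> box = CreateOnStack<StrongBox<int>>(memory);
box.Value = 42;

// The field write landed in the stack buffer, right after the two header slots
int fromBuffer = MemoryMarshal.Read<int>(memory.Slice(IntPtr.Size * 2));

// Type checks and interface dispatch go through the MethodTable, so they work as well
IStrongBox view = box;
Console.WriteLine($"{fromBuffer} {box.GetType() == typeof(StrongBox<int>)} {view.Value}");
```

Reading the value back through `memory` is what shows the object really lives in the stack buffer rather than on the heap.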

To work around this restriction, I wrote a custom implementation using IL emit. You can find an example here.


A few more fun tricks.

Inheritance

One of the reasons we love classes is for inheritance and all the powerful features it brings. And nothing stops us from leveraging that power - even on the stack.

As a result, we can write a test that looks something like this:

```csharp
[Theory, AutoData]
public void Safe_ShouldAllocateAndChange_WhenItIsDerivedClass(DerivedClass expected)
{
    // Arrange

    // Act
    var obj = Stack<DerivedClass>.Safe(stackalloc byte[Stack<DerivedClass>.Size]);
    obj.X = expected.X - 1;
    obj.Y = expected.Y;

    BaseClass based = obj;
    based.X = expected.X;

    // Assert
    based.Should().BeEquivalentTo(expected);
    based.ToString().Should().Be(expected.ToString());
}
```

The entire array is on the stack

How about placing an array on the stack, with all of its elements stored nearby - also on the stack? Turns out, it’s surprisingly straightforward.

There are a couple of important points to keep in mind:

- The total size is calculated as `Unsafe.SizeOf<T>() * length + 4`, which includes space for the array elements plus 4 bytes reserved for the array's length field
- Initializing the array is slightly more involved (just one extra line compared to creating a single object), and essentially boils down to the following:

```csharp
buffer[0] = IntPtr.Zero;                  // Sync block index (optional)
buffer[1] = typeof(T[]).TypeHandle.Value; // MethodTable pointer of the array type
buffer[2] = length;                       // Array length
```

```csharp
[Theory, AutoData]
public void Safe_ShouldAllocate_WhenItIsArray(ExampleClass[] expected)
{
    // Arrange

    // Act
    var obj = Stack<ExampleClass>.Safe(stackalloc byte[Stack<ExampleClass>.Size * expected.Length + 4], expected.Length);

    for (var i = 0; i < obj.Length; i++)
    {
        var element = Stack<ExampleClass>.Safe(stackalloc byte[Stack<ExampleClass>.Size]);
        element.X = expected[i].X;
        element.Y = expected[i].Y;
        obj[i] = element;
    }

    // Assert
    obj.Should().BeEquivalentTo(expected);
}
```

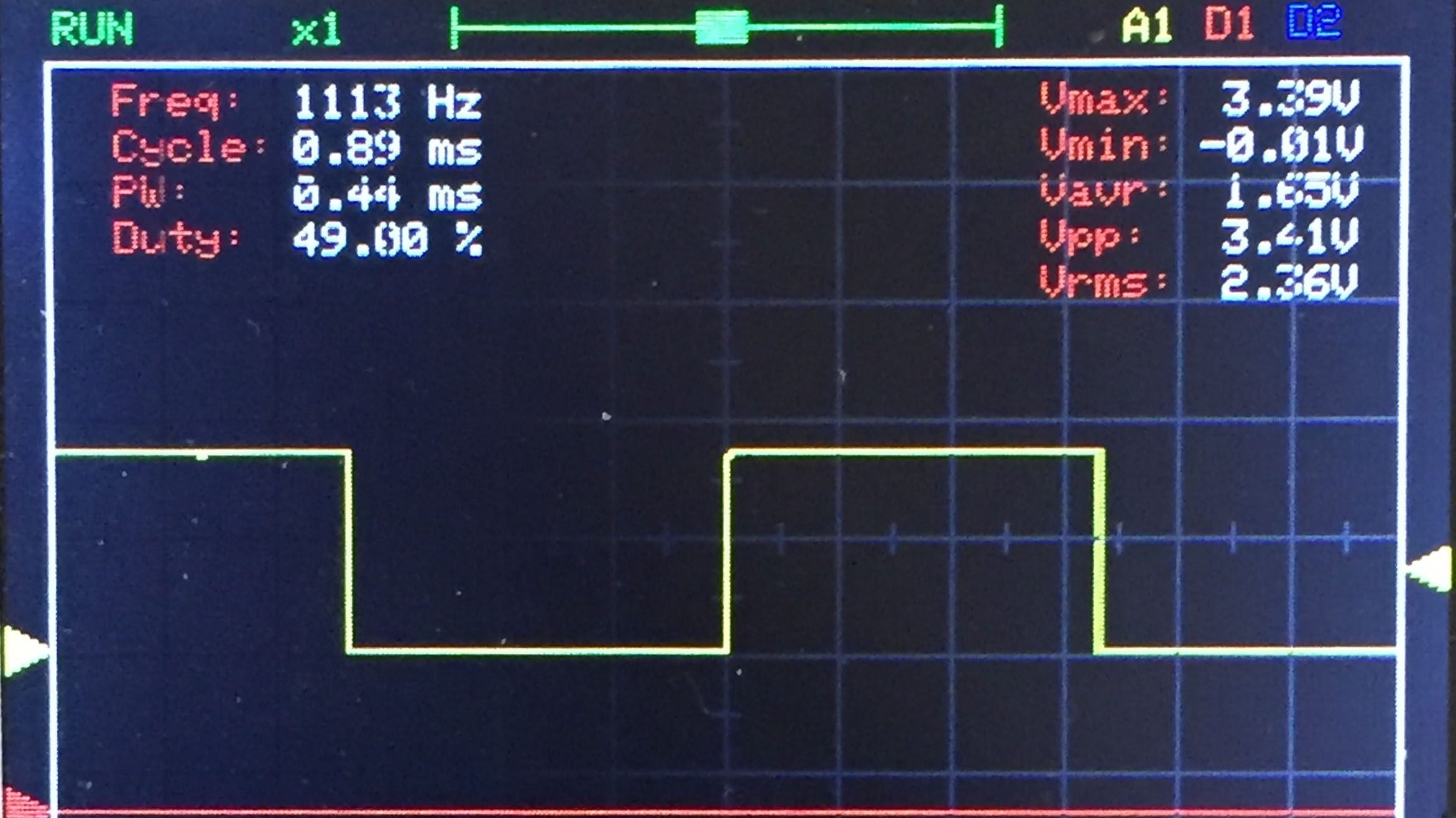
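The three header writes shown earlier (`buffer[0]` to `buffer[2]`) mirror the layout of an ordinary heap array. The sketch below, assuming CoreCLR, reads those slots back from regular `int[]` and `string[]` arrays: the length sits in the slot right before the elements, and the MethodTable pointer two slots before them. `DataSlot`, `LengthFromHeader` and `MethodTableOf` are hypothetical helper names.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// GC-tracked ref to element 0, viewed as a pointer-sized slot
static ref IntPtr DataSlot<T>(T[] array) =>
    ref Unsafe.As<T, IntPtr>(ref MemoryMarshal.GetArrayDataReference(array));

// One slot before the elements: the 4-byte length (followed by 4 bytes of padding in a 64-bit process)
static int LengthFromHeader<T>(T[] array) =>
    Unsafe.As<IntPtr, int>(ref Unsafe.Subtract(ref DataSlot(array), 1));

// Two slots before the elements: the MethodTable pointer, shared by all arrays of the same type
static IntPtr MethodTableOf<T>(T[] array) => Unsafe.Subtract(ref DataSlot(array), 2);

int[] numbers = { 10, 20, 30, 40, 50 };
Console.WriteLine(LengthFromHeader(numbers));                              // 5
Console.WriteLine(MethodTableOf(numbers) == MethodTableOf(new int[1]));    // True
Console.WriteLine(MethodTableOf(numbers) == MethodTableOf(new string[1])); // False
```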

Verification & Benchmarking

Perhaps the most interesting and meaningful challenge for our theory is to put it to the test - through real-world benchmarking. Only by measuring actual performance can we understand whether our stack-based approach offers tangible benefits... or not.

To get a clearer picture, we'll walk through a couple of focused scenarios:

- Allocating a single class instance on the heap vs. the stack

- Allocating 1000 class instances sequentially - again comparing stack and heap memory

Below, you’ll find sample code used to run these benchmarks. We've also included an alternative version that uses the Unsafe approach for direct memory access and control.

```csharp
[Benchmark]
public void SingleAllocateHeap()
{
    var obj = new ExampleClass();
    obj.X = 1;
    obj.Y = 2;
    GC.KeepAlive(obj);
}

[Benchmark]
public void SingleAllocateStack()
{
    var obj = Stack<ExampleClass>.Safe(stackalloc byte[Stack<ExampleClass>.Size]);
    obj.X = 1;
    obj.Y = 2;
}

[Benchmark]
public void ManyAllocateHeap()
{
    for (var i = 0; i < _n; i++)
    {
        var obj = new ExampleClass();
        obj.X = 1;
        obj.Y = 2;
        GC.KeepAlive(obj);
    }
}

[Benchmark]
public void ManyAllocateStack()
{
    for (var i = 0; i < _n; i++)
    {
        var obj = Stack<ExampleClass>.Safe(stackalloc byte[Stack<ExampleClass>.Size]);
        obj.X = 1;
        obj.Y = 2;
    }
}
```

And in the end, here's what we get:

Safe

| Method | Job | Runtime | Mean | Error | StdDev | Median | Gen0 | Allocated |
|---|---|---|---|---|---|---|---|---|
| SingleAllocateHeap | .NET 7.0 | .NET 7.0 | 1.9396 ns | 0.0553 ns | 0.0517 ns | 1.9181 ns | 0.0029 | 24 B |
| SingleAllocateStack | .NET 7.0 | .NET 7.0 | 8.6831 ns | 0.1932 ns | 0.3677 ns | 8.7082 ns | - | - |
| ManyAllocateHeap | .NET 7.0 | .NET 7.0 | 2,452.9738 ns | 22.3611 ns | 20.9166 ns | 2,454.1597 ns | 2.8687 | 24000 B |
| ManyAllocateStack | .NET 7.0 | .NET 7.0 | 2,723.5996 ns | 0.8523 ns | 0.6654 ns | 2,723.6259 ns | - | - |
| SingleAllocateHeap | .NET 8.0 | .NET 8.0 | 1.7152 ns | 0.0029 ns | 0.0025 ns | 1.7155 ns | 0.0029 | 24 B |
| SingleAllocateStack | .NET 8.0 | .NET 8.0 | 8.7965 ns | 0.1961 ns | 0.3382 ns | 8.6271 ns | - | - |
| ManyAllocateHeap | .NET 8.0 | .NET 8.0 | 2,478.6933 ns | 24.0770 ns | 22.5216 ns | 2,480.5584 ns | 2.8687 | 24000 B |
| ManyAllocateStack | .NET 8.0 | .NET 8.0 | 2,684.2186 ns | 1.9118 ns | 1.4926 ns | 2,684.7157 ns | - | - |
| SingleAllocateHeap | .NET 9.0 | .NET 9.0 | 2.2176 ns | 0.0048 ns | 0.0045 ns | 2.2161 ns | 0.0029 | 24 B |
| SingleAllocateStack | .NET 9.0 | .NET 9.0 | 0.7736 ns | 0.0076 ns | 0.0064 ns | 0.7749 ns | - | - |
| ManyAllocateHeap | .NET 9.0 | .NET 9.0 | 2,705.4946 ns | 11.6700 ns | 10.9161 ns | 2,705.4497 ns | 2.8687 | 24000 B |
| ManyAllocateStack | .NET 9.0 | .NET 9.0 | 1,273.6940 ns | 0.3620 ns | 0.3023 ns | 1,273.7439 ns | - | - |

The results show that on .NET 9.0, stack-based allocation outperforms heap allocation not only in terms of memory usage (which is expected), but also in raw execution speed: 0.77 ns vs 2.22 ns for a single object, and 1,274 ns vs 2,705 ns for 1,000 objects.

However, on .NET 8.0 and 7.0 there's a noticeable slowdown compared to heap allocation (for example, 8.68 ns vs 1.94 ns for a single object on .NET 7.0).

This is primarily due to the overhead of the IL-emitted replacement for `Unsafe.As<,>` that has to be used on those runtimes.

Unsafe

| Method | Job | Runtime | Mean | Error | StdDev | Gen0 | Allocated |
|---|---|---|---|---|---|---|---|
| SingleAllocateHeap | .NET 7.0 | .NET 7.0 | 1.6922 ns | 0.0486 ns | 0.0455 ns | 0.0029 | 24 B |
| SingleAllocateStack | .NET 7.0 | .NET 7.0 | 0.4787 ns | 0.0159 ns | 0.0149 ns | - | - |
| SingleAllocateStackOnSpan | .NET 7.0 | .NET 7.0 | 0.9192 ns | 0.0174 ns | 0.0162 ns | - | - |
| ManyAllocateHeap | .NET 7.0 | .NET 7.0 | 2,560.6623 ns | 18.9084 ns | 17.6869 ns | 2.8687 | 24000 B |
| ManyAllocateStack | .NET 7.0 | .NET 7.0 | 944.7791 ns | 5.6593 ns | 4.7257 ns | - | - |
| ManyAllocateStackOnSpan | .NET 7.0 | .NET 7.0 | 1,296.3634 ns | 9.2856 ns | 8.6857 ns | - | - |
| SingleAllocateHeap | .NET 8.0 | .NET 8.0 | 2.0119 ns | 0.0159 ns | 0.0133 ns | 0.0029 | 24 B |
| SingleAllocateStack | .NET 8.0 | .NET 8.0 | 0.3722 ns | 0.0141 ns | 0.0118 ns | - | - |
| SingleAllocateStackOnSpan | .NET 8.0 | .NET 8.0 | 0.5997 ns | 0.0182 ns | 0.0170 ns | - | - |
| ManyAllocateHeap | .NET 8.0 | .NET 8.0 | 2,515.6083 ns | 8.5975 ns | 7.6214 ns | 2.8687 | 24000 B |
| ManyAllocateStack | .NET 8.0 | .NET 8.0 | 948.0459 ns | 7.3097 ns | 6.1039 ns | - | - |
| ManyAllocateStackOnSpan | .NET 8.0 | .NET 8.0 | 1,132.9332 ns | 4.7512 ns | 4.4443 ns | - | - |
| SingleAllocateHeap | .NET 9.0 | .NET 9.0 | 2.2379 ns | 0.0386 ns | 0.0361 ns | 0.0029 | 24 B |
| SingleAllocateStack | .NET 9.0 | .NET 9.0 | 0.2845 ns | 0.0182 ns | 0.0161 ns | - | - |
| SingleAllocateStackOnSpan | .NET 9.0 | .NET 9.0 | 0.4485 ns | 0.0133 ns | 0.0118 ns | - | - |
| ManyAllocateHeap | .NET 9.0 | .NET 9.0 | 2,786.9785 ns | 23.1452 ns | 19.3273 ns | 2.8687 | 24000 B |
| ManyAllocateStack | .NET 9.0 | .NET 9.0 | 824.4629 ns | 1.3196 ns | 1.0303 ns | - | - |
| ManyAllocateStackOnSpan | .NET 9.0 | .NET 9.0 | 962.2158 ns | 1.0145 ns | 0.8472 ns | - | - |

In the best case (SingleAllocateStack on .NET 9.0), the unsafe stack-based approach was roughly 8x faster than heap allocation (0.28 ns vs 2.24 ns), and for 1,000 allocations it was about 3.4x faster (824 ns vs 2,787 ns). On .NET 7.0 and 8.0 it also avoids the slowdown of the fully safe alternative, whose single allocation took about 8.7-8.8 ns.

These results highlight how, with the right setup, stack allocation can significantly outperform more traditional approaches.

Conclusion

This exploration demonstrates that allocating class instances on the stack in C# - while unconventional - is not only technically feasible, but can also yield substantial performance gains in certain scenarios.

We’ve shown that:

- Unsafe techniques provide maximum control and efficiency, though they require deep knowledge of memory layout and careful handling to avoid undefined behavior

- Safe techniques, made possible in .NET 9 (or emulated in previous versions via IL emit), offer a surprising degree of flexibility without `unsafe` blocks or raw pointers

These methods aren't meant to replace the heap in everyday development - but they open up exciting opportunities for advanced use cases, such as high-performance systems, embedded scenarios, or low-level experimentation.
