AWS Compute Showdown: ECS vs. EKS vs. Fargate vs. Lambda – Choosing Your Champion

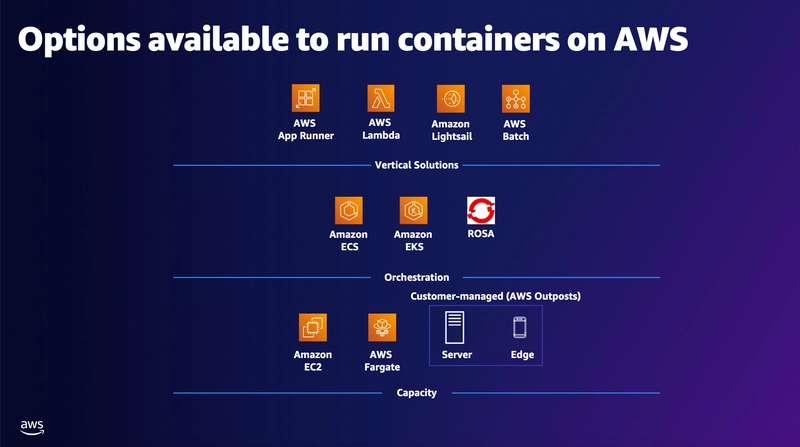

Intro

You've built your application. It works beautifully on your local machine. Now comes the "fun" part: deploying it to the cloud. You log into the AWS console, navigate to compute services, and... bam. You're hit with a wall of acronyms: ECS, EKS, Fargate, Lambda. They all seem to run code, but they feel vastly different. Which one is right for your workload? Choose wisely, and you unlock scalability, cost-efficiency, and operational bliss. Choose poorly, and you might face spiraling costs, operational headaches, or an architecture that fights you every step of the way. It's a common crossroads for developers and architects, and navigating it effectively is crucial.

Why It Matters

In today's cloud-native world, how you run your code is just as important as the code itself. The right compute service impacts everything:

- Cost: Pay only for what you need, or pay for idle servers?

- Scalability: Scale seamlessly from zero to millions of requests, or manually provision capacity?

- Operational Overhead: Manage servers, patching, and scaling, or let AWS handle the heavy lifting?

- Development Velocity: Focus on writing application code, or spend time managing infrastructure?

- Architecture: Enable modern patterns like microservices and event-driven architectures, or stick with traditional monoliths?

Understanding these fundamental compute options is no longer optional; it's a core competency for anyone building on AWS. Getting this choice right accelerates innovation and optimizes resources. Getting it wrong creates drag.

The Concept in Simple Terms

Let's use a transportation analogy to understand the different levels of abstraction and management:

AWS Lambda: Think of this as a Taxi or Ride-Sharing Service (like Uber/Lyft).

- You just tell it where you want to go (your code/function) and when (the trigger/event).

- You pay per trip (invocation time + requests).

- You never worry about buying the car, insurance, gas, maintenance, or even finding a parking spot. The service handles everything infrastructure-related.

- Ideal for short, specific journeys initiated by an event.

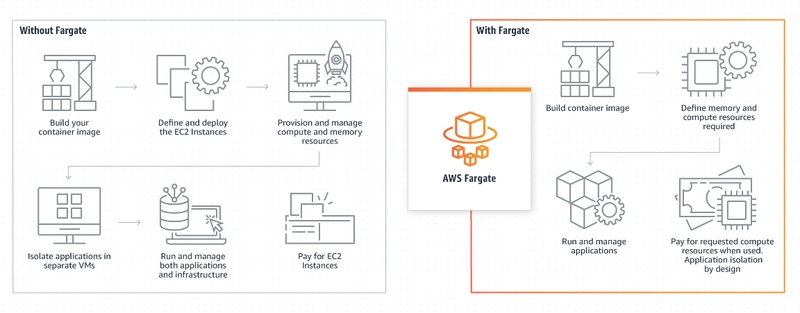

AWS Fargate: This is like a Managed Car Rental Service.

- You specify the type of car you need (CPU/Memory for your container).

- You drive it wherever you want (run your containerized application).

- You pay for the duration you're using the car (vCPU/Memory per second).

- You don't worry about oil changes, tire rotations, or engine trouble (managing the underlying server). The rental company (AWS) handles the fleet maintenance.

- Ideal for running containers without managing the underlying EC2 instances.

AWS ECS (Elastic Container Service) on EC2: This is like Leasing and Managing Your Own Fleet of Delivery Vans.

- You choose the specific models of vans (EC2 instance types).

- You decide how many vans you need and manage their assignments (cluster scaling, task placement).

- You hire the drivers (your containerized applications).

- You're responsible for van maintenance (patching EC2 instances, OS updates), refueling (managing instance resources), and optimizing routes (task placement strategies). The leasing company (AWS via ECS Control Plane) provides the framework and some high-level management tools.

- Ideal when you need granular control over the underlying instances for specific compliance, performance, or cost optimization reasons (like using Spot Instances effectively).

AWS EKS (Elastic Kubernetes Service) on EC2: This is like Building and Operating Your Own Custom Logistics Network using Industry-Standard Trucks and Protocols.

- You're adopting a powerful, standardized system (Kubernetes) recognized across the industry.

- You choose your trucks (EC2 instances) and build your depots (configure the cluster).

- You manage the entire logistics operation (deployments, networking, scaling, security) using Kubernetes tools (kubectl, Helm, etc.).

- AWS manages the Kubernetes control plane (the dispatch center's core systems), but you're responsible for the worker nodes (the trucks and drivers) and everything running on them.

- Ideal when you're committed to the Kubernetes ecosystem, need multi-cloud portability, or require specific Kubernetes features and integrations.

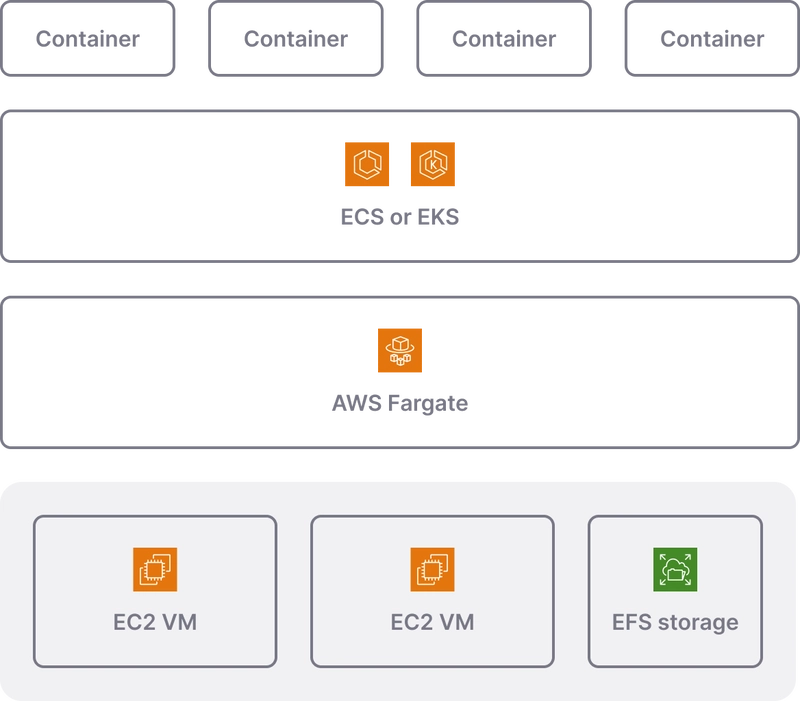

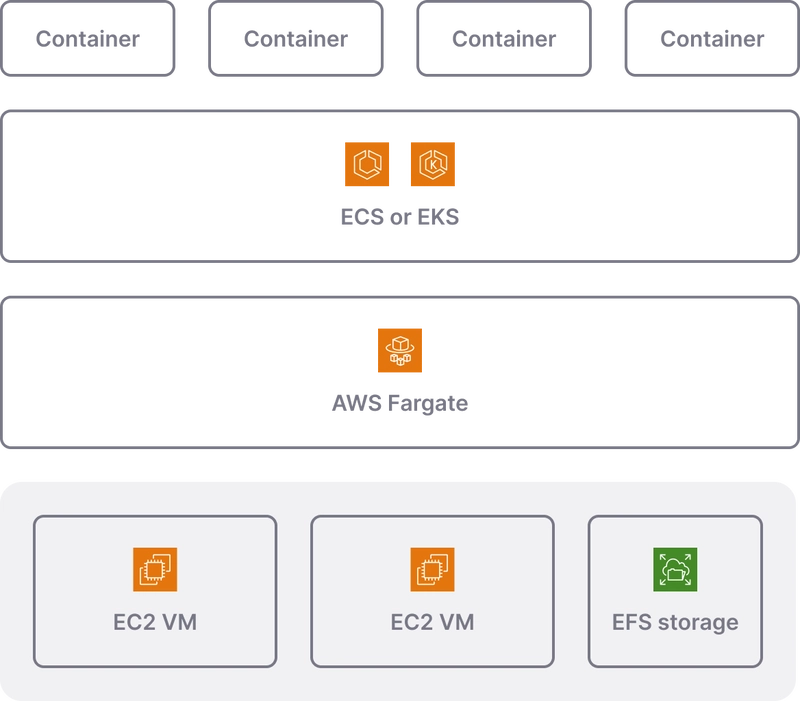

Crucial Distinction: Control Plane vs. Data Plane

- Control Plane: The "brain" that manages the state, scheduling, and orchestration (e.g., ECS Schedulers, EKS API Server). For ECS and EKS, AWS manages this for you.

- Data Plane: Where your actual code/containers run (the "muscle").

- Lambda & Fargate: AWS manages the data plane (serverless). You don't see or touch underlying servers.

- ECS on EC2 & EKS on EC2: You manage the data plane (the EC2 instances). You are responsible for patching, scaling, securing, and optimizing these instances.

- ECS on Fargate & EKS on Fargate: You use the ECS/EKS control plane but run your containers on the AWS-managed Fargate data plane. A hybrid approach!
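The ownership split above can be summarized as a small lookup table. This is a minimal Python sketch. The option labels and helper names are illustrative, not an AWS API. "You" for the EC2 data planes follows the rule above, even though Managed Node Groups and Capacity Providers automate part of that work.

```python
from enum import Enum


class Owner(Enum):
    AWS = "AWS"
    YOU = "you"


# (control plane owner, data plane owner) for each option described above.
RESPONSIBILITY = {
    "Lambda": (Owner.AWS, Owner.AWS),
    "ECS on Fargate": (Owner.AWS, Owner.AWS),
    "EKS on Fargate": (Owner.AWS, Owner.AWS),
    "ECS on EC2": (Owner.AWS, Owner.YOU),
    "EKS on EC2": (Owner.AWS, Owner.YOU),
}


def data_plane_owner(option: str) -> Owner:
    """Who patches, scales, and secures the hosts that run your code."""
    _control_plane, data_plane = RESPONSIBILITY[option]
    return data_plane


def self_managed_options() -> list:
    """Options where the EC2 instances are your responsibility."""
    return [name for name, (_, data) in RESPONSIBILITY.items() if data is Owner.YOU]
```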

Deeper Dive

Let's break down each service with more technical detail:

AWS Lambda

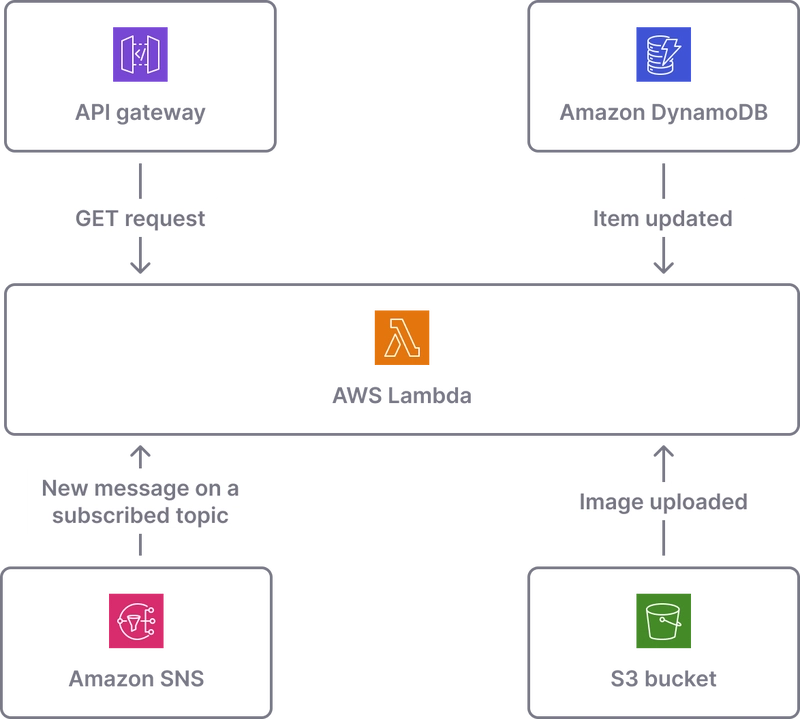

- What it is: A serverless, event-driven compute service. You upload your code (as functions), and Lambda runs it in response to triggers (API Gateway requests, S3 events, DynamoDB streams, etc.), automatically managing the underlying compute resources.

- Core Concept: Function-as-a-Service (FaaS). Pay per invocation and compute time (measured in milliseconds). Scales automatically from zero to thousands of requests per second.

- When to Choose:

- Event-driven architectures (processing file uploads, reacting to database changes).

- APIs (especially microservices via API Gateway).

- Background tasks & scheduled jobs (Cron jobs).

- Real-time data processing.

- Workloads with highly variable traffic (including zero).

- Best Architectures: Microservices, Event Sourcing, APIs, Data Processing Pipelines.

- Challenges:

- Cold Starts: Initial latency when a function hasn't been invoked recently. (Mitigated by Provisioned Concurrency).

- Execution Limits: Maximum runtime duration (currently 15 minutes).

- Statelessness: Functions should ideally be stateless; state needs external management (DynamoDB, S3, ElastiCache).

- Vendor Lock-in: Code might need refactoring to run outside Lambda's environment.

- Debugging/Monitoring: Can be complex across distributed functions.

- Cost Optimization:

- Optimize function memory size (cost is proportional to memory * duration).

- Use Graviton2 (Arm) processors for better price/performance.

- Implement efficient code to reduce execution time.

- Use Provisioned Concurrency strategically for latency-sensitive workloads (costs more).

- Leverage Compute Savings Plans.
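Because Lambda cost scales with memory × duration plus a per-request charge, a quick estimate makes the memory-sizing and Graviton points concrete. This is a minimal sketch. The per-GB-second and per-request prices are illustrative us-east-1 figures assumed for the example, so check the current Lambda pricing page. The free tier and volume tiers are ignored.

```python
# Illustrative us-east-1 on-demand prices (assumption: verify on the AWS Lambda
# pricing page; the free tier and volume discount tiers are ignored here).
PRICE_PER_GB_SECOND = {"x86_64": 0.0000166667, "arm64": 0.0000133334}
PRICE_PER_MILLION_REQUESTS = 0.20


def monthly_lambda_cost(invocations: int, avg_duration_ms: float,
                        memory_mb: int, arch: str = "x86_64") -> float:
    """Estimate monthly cost: compute (memory x duration) plus request charges."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * PRICE_PER_GB_SECOND[arch]
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return round(compute + requests, 2)


# 10M requests per month, 100 ms average duration, 512 MB of memory.
print(monthly_lambda_cost(10_000_000, 100, 512))           # 10.33 (x86_64)
print(monthly_lambda_cost(10_000_000, 100, 512, "arm64"))  # 8.67 (Graviton)
```

Doubling memory to 1024 MB costs the same only if it halves the average duration, because the GB-seconds stay constant. Lambda allocates CPU in proportion to memory, so for CPU-bound functions that trade can sometimes pay off.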

AWS Fargate

- What it is: A serverless compute engine for containers. It works with both ECS and EKS. You define your container requirements (CPU, memory), package your application in a container, and Fargate launches and manages the infrastructure for you.

- Core Concept: Serverless Containers. Pay per vCPU and memory resources consumed by your containerized application, per second. No instances to manage.

- When to Choose:

- Running containerized applications without wanting to manage EC2 instances.

- Microservices architectures where operational simplicity is key.

- Web applications, APIs, batch processing jobs run via containers.

- Migrating containerized applications quickly without infrastructure overhead.

- When you prefer a container orchestrator (ECS/EKS) but want a serverless data plane.

- Best Architectures: Microservices, Web Applications, APIs, Containerized Batch Jobs.

- Challenges:

- Limited Control: Less control over the underlying environment compared to EC2 (no custom AMIs, limited OS-level access).

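- Networking: Every Fargate task runs in awsvpc network mode with its own network interface, so you must plan subnets, security groups, and outbound access for image pulls (a public IP, a NAT gateway, or VPC endpoints) up front. Lambda, by default, needs no VPC configuration at all.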

- Cost: Can be more expensive than EC2 if workloads are very steady-state and instances are highly utilized (especially with Reserved Instances or Savings Plans on EC2).

- Specialized Hardware: Fargate does not offer GPUs or other specialized instance hardware, so those workloads need EC2-backed capacity.

- Cost Optimization:

- Right-size tasks: Accurately define CPU/memory needs.

- Use Fargate Spot: Up to 70% discount for fault-tolerant workloads.

- Use Graviton2 (Arm) processors: Better price/performance.

- Compute Savings Plans: Commit to usage for discounts.

- Scale-to-zero (with ECS): Can scale services down to zero tasks when idle (though event-driven scaling might require custom solutions or App Runner).
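Right-sizing on Fargate means choosing from a fixed menu of task-level CPU/memory pairs, not arbitrary values. This is a minimal sketch that picks the smallest valid size covering a measured peak. The table encodes the Linux combinations as documented by AWS at the time of writing. Treat it as an assumption and verify it, because AWS adds sizes over time and Windows tasks use a different table.

```python
def fargate_sizes():
    """Yield valid (cpu_units, memory_mib) pairs for Linux Fargate tasks in
    ascending CPU-then-memory order (1024 CPU units = 1 vCPU)."""
    yield from ((256, m) for m in (512, 1024, 2048))
    yield from ((512, m) for m in range(1024, 4096 + 1, 1024))
    yield from ((1024, m) for m in range(2048, 8192 + 1, 1024))
    yield from ((2048, m) for m in range(4096, 16384 + 1, 1024))
    yield from ((4096, m) for m in range(8192, 30720 + 1, 1024))
    yield from ((8192, m) for m in range(16384, 61440 + 1, 4096))
    yield from ((16384, m) for m in range(32768, 122880 + 1, 8192))


def right_size(cpu_units: int, memory_mib: int) -> tuple:
    """Return the smallest valid task size (lowest CPU, then lowest memory)
    that covers the required CPU units and memory."""
    for cpu, mem in fargate_sizes():
        if cpu >= cpu_units and mem >= memory_mib:
            return cpu, mem
    raise ValueError("requirement exceeds the largest Fargate task size")


print(right_size(300, 900))  # (512, 1024)
```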

AWS ECS (Elastic Container Service)

- What it is: A highly scalable, high-performance container orchestration service that supports Docker containers. It allows you to easily run, stop, and manage containers on a cluster.

- Core Concept: AWS-Native Container Orchestration. Simpler API and integration with AWS services compared to Kubernetes (EKS).

- Launch Types:

- EC2: You manage the underlying EC2 instances in the cluster (patching, scaling the cluster itself). Offers maximum control.

- Fargate: AWS manages the underlying compute (serverless, as described above).

- When to Choose (ECS on EC2):

- You need fine-grained control over EC2 instances (specific instance types, GPUs, custom AMIs, stricter compliance).

- You want maximum cost optimization potential via Spot Instances and Reserved Instances/Savings Plans with high utilization.

- Your team is deeply familiar with the AWS ecosystem and prefers native integrations.

- You need features like Windows Containers on EC2 (Fargate support is newer/limited).

- When to Choose (ECS on Fargate): Same reasons as standalone Fargate, but using the ECS control plane.

- Best Architectures: Microservices, Web Applications, Batch Processing, Monoliths (containerized).

- Challenges (ECS on EC2):

- Instance Management: You are responsible for the EC2 cluster nodes: OS patching, security updates, scaling the cluster instances, and keeping the ECS container agent and container runtime up to date.

- Cluster Capacity Management: Need to ensure you have enough EC2 capacity for your tasks (Can be automated with Cluster Auto Scaling and Capacity Providers).

- Operational Overhead: Higher than Fargate or Lambda.

- Cost Optimization (ECS on EC2):

- Right-size instances and tasks.

- Utilize Spot Instances heavily via Capacity Providers.

- Use Reserved Instances or Savings Plans for baseline capacity.

- Optimize task placement strategies (bin packing).

- Use Graviton2 (Arm) instances.
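In ECS you request bin packing with a placement strategy such as `{"type": "binpack", "field": "memory"}`, which places tasks so as to leave the least unused memory. This is a simplified greedy sketch of that idea and not the ECS scheduler itself. It ignores placement constraints and tie-breaking details, and the instance and task names are hypothetical. Packing tasks onto fewer instances leaves others empty, so cluster auto scaling can remove them.

```python
from dataclasses import dataclass, field


@dataclass
class ContainerInstance:
    name: str
    free_cpu: int   # CPU units (1024 = 1 vCPU)
    free_mem: int   # MiB
    tasks: list = field(default_factory=list)


def place_binpack_memory(instances, tasks):
    """Put each (name, cpu, mem) task on the instance with the LEAST free memory
    that still fits it. Returns the names of tasks that could not be placed."""
    unplaced = []
    for task_name, cpu, mem in tasks:
        fits = [i for i in instances if i.free_cpu >= cpu and i.free_mem >= mem]
        if not fits:
            unplaced.append(task_name)
            continue
        target = min(fits, key=lambda i: i.free_mem)
        target.free_cpu -= cpu
        target.free_mem -= mem
        target.tasks.append(task_name)
    return unplaced
```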

AWS EKS (Elastic Kubernetes Service)

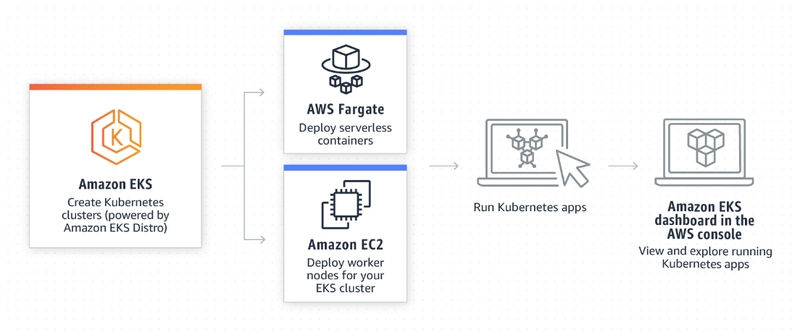

- What it is: A managed service that makes it easy to run Kubernetes on AWS without needing to install, operate, and maintain your own Kubernetes control plane.

- Core Concept: Managed Kubernetes. Provides upstream, certified Kubernetes conformance. Leverages the vast Kubernetes ecosystem (tools, plugins, community support).

- Launch Types (Data Plane):

- EC2 (Managed Node Groups / Self-Managed Nodes): You manage the EC2 worker nodes. Managed Node Groups automate provisioning and lifecycle management.

- Fargate: Run Kubernetes pods on serverless infrastructure managed by AWS.

- When to Choose:

- Your organization is standardized on Kubernetes.

- You need portability across clouds or on-premises (using Kubernetes).

- You want to leverage the extensive Kubernetes ecosystem (Helm, Istio, Argo CD, etc.).

- You have complex networking or policy requirements best met by Kubernetes primitives (Network Policies, etc.).

- Your team has existing Kubernetes expertise.

- Best Architectures: Microservices, Complex Web Applications, Hybrid Cloud Deployments, Platforms built on Kubernetes.

- Challenges:

- Complexity: Kubernetes itself has a steep learning curve. EKS simplifies the control plane, but managing applications and worker nodes still requires Kubernetes knowledge.

- Operational Overhead (EKS on EC2): Similar to ECS on EC2, you manage the worker nodes (though Managed Node Groups help).

- Cost: Each cluster carries an hourly control plane fee on top of data plane costs (EC2 or Fargate), whereas ECS adds no control plane charge, so EKS can be more expensive than ECS for simpler use cases.

- Upgrade Cycles: Keeping up with Kubernetes versions requires planning for cluster and node upgrades.

- Cost Optimization:

- Right-size nodes and pods.

- Use EC2 Spot Instances effectively (e.g., with Karpenter or Cluster Autoscaler).

- Use Reserved Instances or Savings Plans for stable worker nodes.

- Run interruptible, stateless pods on EC2 Spot nodes. Fargate Spot is available only for ECS, not for EKS pods on Fargate.

- Optimize pod density on nodes (bin packing).

- Use Graviton2 (Arm) nodes.

- Shut down non-production clusters when not needed.
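Pod density on EC2 nodes is often capped by IP addresses before CPU or memory. With the Amazon VPC CNI in its default secondary-IP mode, the documented formula is ENIs × (IPv4 addresses per ENI − 1) + 2. This is a minimal sketch of that formula. The per-instance ENI limits below are assumptions copied from the EC2 documentation, so confirm them with `aws ec2 describe-instance-types`. Prefix delegation, which raises the limit, is not modeled.

```python
import math

# (max ENIs, IPv4 addresses per ENI) for a few instance types. Assumption:
# values from the EC2 docs at the time of writing; verify before relying on them.
ENI_LIMITS = {
    "t3.medium": (3, 6),
    "m5.large": (3, 10),
    "m5.xlarge": (4, 15),
}


def max_pods(instance_type: str) -> int:
    """Pods per node for the VPC CNI in default (secondary IP) mode.

    Each ENI's primary address is not handed to pods (the -1); the +2 covers
    host-network pods such as kube-proxy and aws-node, which need no pod IP.
    """
    enis, ips_per_eni = ENI_LIMITS[instance_type]
    return enis * (ips_per_eni - 1) + 2


def nodes_for_pods(pods: int, instance_type: str) -> int:
    """Lower bound on node count from IP limits alone (CPU/memory may need more)."""
    return math.ceil(pods / max_pods(instance_type))
```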

Practical Example or Use Case: Deploying a Simple REST API

Let's imagine deploying a standard Node.js REST API backend:

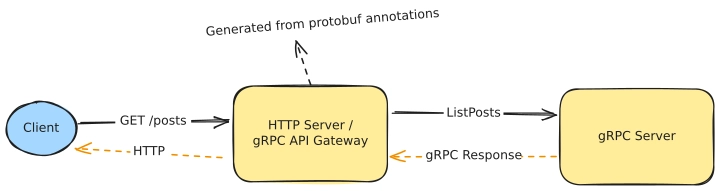

Lambda + API Gateway:

- Write your API logic as Lambda functions (one per endpoint or a monolith function with routing).

- Define an API Gateway endpoint to trigger these functions via HTTP requests.

- Deployment: Zip your code, upload to Lambda, configure triggers. Use AWS SAM or Serverless Framework for automation.

- Pros: Scales to zero, pay-per-request, minimal operational overhead.

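- Cons: Potential cold starts. The 15-minute Lambda limit rarely matters here, because API Gateway's integration timeout (about 30 seconds by default) caps each request first.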
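A single "monolith" function with routing might look like the following. It is shown in Python to match the other sketches here; a Node.js handler receives the same event and returns the same response shape. The sketch assumes a REST API with Lambda proxy integration (payload format 1.0, which provides `httpMethod` and `path`). The `ITEMS` data and routes are hypothetical.

```python
import json

# Hypothetical in-memory data for illustration. Real state belongs in an external
# store such as DynamoDB, because execution environments are recycled.
ITEMS = {"1": {"id": "1", "name": "widget"}}


def _response(status, body):
    """Build the response shape API Gateway's Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handler(event, context):
    """Route several endpoints inside one function (REST API, payload format 1.0)."""
    method = event.get("httpMethod")
    path = event.get("path", "")
    if method == "GET" and path == "/items":
        return _response(200, {"items": list(ITEMS.values())})
    if method == "GET" and path.startswith("/items/"):
        item = ITEMS.get(path.rsplit("/", 1)[-1])
        if item is None:
            return _response(404, {"error": "item not found"})
        return _response(200, item)
    return _response(404, {"error": "route not found"})
```

With this file saved as `app.py`, the function's handler setting would be `app.handler`.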

Fargate (with ECS or EKS):

- Package your Node.js app into a Docker container.

- Define an ECS Task Definition or Kubernetes Deployment specifying the container image, CPU/Memory.

- Define an ECS Service or Kubernetes Service (with a Load Balancer).

- Deployment: Push container image to ECR, deploy the service definition using AWS CLI, Console, CDK, Terraform, or kubectl/Helm.

- Pros: Serverless infrastructure, familiar container workflow, good for long-running processes.

- Cons: Tasks run (and bill) continuously unless scaled to zero, so idle or low-traffic periods cost more than with Lambda; VPC networking setup is required.
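The ECS Task Definition step can be sketched as a Python function that emits the JSON accepted by `aws ecs register-task-definition --cli-input-json`. This is a minimal sketch under several assumptions: one container, a Node.js app listening on port 3000, and the function name and defaults, all of which are hypothetical. The `cpu`/`memory` pair must be a valid Fargate combination.

```python
import json


def fargate_task_definition(family, image, cpu="256", memory="512",
                            container_port=3000, execution_role_arn=None):
    """Build a minimal ECS task definition for running one container on Fargate."""
    task_def = {
        "family": family,
        "networkMode": "awsvpc",                 # Fargate tasks must use awsvpc
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,                              # task-level CPU units, as a string
        "memory": memory,                        # task-level memory in MiB, as a string
        "containerDefinitions": [{
            "name": family,
            "image": image,
            "essential": True,
            "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
        }],
    }
    if execution_role_arn:
        # Lets ECS pull private ECR images and send container logs to CloudWatch.
        task_def["executionRoleArn"] = execution_role_arn
    return task_def


print(json.dumps(fargate_task_definition("my-api", "my-registry/my-api:latest"), indent=2))
```

Redirect that output to `taskdef.json` and register it with `aws ecs register-task-definition --cli-input-json file://taskdef.json`. The image name here is a placeholder for your ECR image URI.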

ECS on EC2:

- Same containerization process as Fargate.

- Provision an ECS Cluster with EC2 instances (choose type, size, configure auto-scaling for the cluster).

- Deploy ECS Task Definition and Service, specifying the EC2 launch type.

- Deployment: Similar to Fargate, but you also manage the EC2 instances (OS updates, agent updates).

- Pros: Full control over instances, potential for cost savings with Spot/RIs.

- Cons: Infrastructure management overhead (patching, scaling instances).
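Mixing On-Demand and Spot capacity is usually done with a capacity provider strategy, where `base` is the minimum number of tasks on one provider and `weight` sets the relative share of the remaining tasks. This sketch approximates that split. The provider names are hypothetical, and the largest-remainder rounding is an assumption, because ECS's exact rounding is not modeled here.

```python
import math


def split_tasks(total, strategy):
    """Approximate how `total` tasks spread across (provider, base, weight) entries."""
    counts = {name: 0 for name, _, _ in strategy}
    remaining = total
    # Satisfy each provider's base first.
    for name, base, _ in strategy:
        take = min(base, remaining)
        counts[name] += take
        remaining -= take
    # Split the rest in proportion to weight (largest-remainder rounding).
    weights = {name: w for name, _, w in strategy if w > 0}
    total_weight = sum(weights.values())
    if remaining and total_weight:
        shares = {n: remaining * w / total_weight for n, w in weights.items()}
        floors = {n: math.floor(s) for n, s in shares.items()}
        leftover = remaining - sum(floors.values())
        by_fraction = sorted(shares, key=lambda n: shares[n] - floors[n], reverse=True)
        for n in by_fraction[:leftover]:
            floors[n] += 1
        for n, f in floors.items():
            counts[n] += f
    return counts


# Two tasks always On-Demand, then 1:3 On-Demand to Spot for the rest.
print(split_tasks(10, [("on-demand", 2, 1), ("spot", 0, 3)]))
```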

EKS on EC2:

- Same containerization process.

- Set up an EKS cluster (AWS manages control plane).

- Provision worker nodes (Managed Node Groups or self-managed EC2).

- Define Kubernetes Deployment, Service, Ingress using YAML manifests.

- Deployment: Use `kubectl apply -f` with your .yaml manifests, or Helm charts. Manage worker node scaling and updates.

- Pros: Kubernetes standard, powerful ecosystem, portability.

- Cons: Highest complexity, requires Kubernetes expertise, infrastructure management for nodes.
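The Kubernetes Deployment for the same API can be generated rather than hand-written. kubectl accepts JSON as well as YAML, so this sketch builds a minimal `apps/v1` Deployment as a Python dict. The name, port 3000, replica count, and resource requests are illustrative assumptions. A Service and Ingress would still be needed to expose it.

```python
import json


def deployment_manifest(name, image, replicas=2, port=3000,
                        cpu_request="250m", memory_request="256Mi"):
    """Build a minimal apps/v1 Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Requests drive scheduling and bin packing on worker nodes.
                        "resources": {"requests": {"cpu": cpu_request,
                                                   "memory": memory_request}},
                    }],
                },
            },
        },
    }


print(json.dumps(deployment_manifest("my-api", "my-registry/my-api:latest"), indent=2))
```

Saving the output as `deployment.json` lets you apply it with `kubectl apply -f deployment.json`. The image name is a placeholder.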

Common Mistakes or Misunderstandings

- Using EKS "Just Because": Choosing EKS for simple applications when ECS or Fargate would be far simpler and cheaper, simply because Kubernetes is popular.

- Ignoring Fargate Costs: Assuming Fargate is always cheaper because it's "serverless." While it removes operational overhead, sustained high-utilization workloads can be cheaper on optimized EC2 (ECS/EKS on EC2 with Spot/RIs). Model your costs!

- Treating Lambda like a Server: Trying to run long-running, stateful processes in Lambda functions instead of using containers (ECS/EKS/Fargate) or dedicated services.

- Underestimating EC2 Management (ECS/EKS on EC2): Forgetting the ongoing effort needed for patching OS, updating container runtimes, managing security groups, and scaling the instances themselves.

- Not Right-Sizing: Over-provisioning CPU/Memory for Fargate tasks or Lambda functions, or using oversized EC2 instances, leading to wasted spend.

- Confusing Control Plane vs. Data Plane: Not understanding who manages what (especially the difference between EKS managing the control plane vs. you managing worker nodes on EC2).
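"Model your costs" can start as simply as the following sketch. Fargate bills each task for the vCPU and memory it requests. EC2 bills whole instances that your tasks must be packed into. All prices you pass in are your own. The `usable` headroom factor (OS, agent or kubelet, imperfect packing) is an assumption, and load balancers, data transfer, and operational effort are left out.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    tasks: int              # steady-state task count
    vcpu_per_task: float
    gb_per_task: float
    hours: float = 730      # roughly one month of continuous running


def fargate_monthly(w, price_vcpu_hour, price_gb_hour):
    """Fargate bills each task for its requested vCPU and memory over time."""
    per_task_hour = w.vcpu_per_task * price_vcpu_hour + w.gb_per_task * price_gb_hour
    return w.tasks * per_task_hour * w.hours


def ec2_monthly(w, inst_vcpu, inst_gb, price_instance_hour, usable=0.9):
    """EC2 bills whole instances, so enough of them must hold every task.

    `usable` is the assumed fraction of each instance available to tasks.
    """
    by_cpu = w.tasks * w.vcpu_per_task / (inst_vcpu * usable)
    by_mem = w.tasks * w.gb_per_task / (inst_gb * usable)
    return math.ceil(max(by_cpu, by_mem)) * price_instance_hour * w.hours


w = Workload(tasks=10, vcpu_per_task=0.5, gb_per_task=1.0)
print(fargate_monthly(w, 0.04, 0.004))   # hypothetical per-vCPU-hour and per-GB-hour prices
print(ec2_monthly(w, 2, 8, 0.10))        # hypothetical 2 vCPU / 8 GB instance at $0.10/hour
print(ec2_monthly(w, 2, 8, 0.05))        # same instance with a hypothetical Spot/RI discount
```

With these made-up numbers, Fargate wins at the full instance price, because CPU-bound tasks leave most instance memory idle. A discounted instance price flips the result, which is exactly why the comparison should be rerun with your real prices and task shapes.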

Pro Tips & Hidden Features

- ECS:

- Capacity Providers: Simplify mixing EC2 Spot and On-Demand instances for cost savings and reliability.

- ECS Exec: Open an interactive shell or run commands inside running containers for debugging, over AWS Systems Manager Session Manager rather than SSH (use with caution!).

- CloudWatch Container Insights: Detailed performance monitoring and diagnostics for ECS (and EKS/Fargate).

- EKS:

- Karpenter: An open-source, flexible, high-performance Kubernetes cluster autoscaler built by AWS that can rapidly provision right-sized nodes. Often preferred over the standard Cluster Autoscaler.

- Managed Node Groups: Let AWS handle node provisioning, upgrades, and termination, reducing operational burden.

- Helm: Use Helm charts to package, deploy, and manage Kubernetes applications easily.

- EKS Blueprints: Use CDK or Terraform blueprints to quickly provision complete, opinionated EKS clusters.

- Fargate:

- Fargate Spot (ECS only): Essential for cost savings on fault-tolerant container workloads. Combine with On-Demand for reliability.

- Seekable OCI (SOCI): An AWS open-source technology that can speed up container launch times on Fargate by lazy-loading the image layers. (Check for current support status).

- Lambda:

- Provisioned Concurrency: Pre-warm function instances to eliminate cold starts for latency-sensitive applications (at extra cost).

- Lambda Extensions: Integrate monitoring, security, and governance tools directly into the Lambda execution environment.

- AWS Lambda Powertools: Language-specific libraries (Python, Java, TypeScript, .NET) to help implement observability best practices (tracing, structured logging, metrics).

- General:

- Graviton Processors (Arm64): Often provide significant price/performance benefits across EC2, Fargate, and Lambda. Compile/build your code/containers for Arm64.

- Infrastructure as Code (IaC): Use AWS CDK, Terraform, or CloudFormation to define and manage all these resources reliably and repeatably.

- Cost Allocation Tags: Tag everything meticulously to understand costs per service, team, or feature.
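Tagging only pays off if it is enforced, so a periodic audit for missing tags helps. This sketch checks entries shaped like the Resource Groups Tagging API's GetResources output (`ResourceARN` plus a `Tags` list of `Key`/`Value` pairs). The required tag keys are a hypothetical policy.

```python
# Hypothetical tagging policy; adjust to your organization's cost allocation tags.
REQUIRED_TAGS = frozenset({"team", "service", "environment"})


def missing_tags(resource_tag_mappings, required=REQUIRED_TAGS):
    """Return {resource ARN: sorted missing tag keys} for non-compliant resources.

    Tags whose value is empty count as missing.
    """
    report = {}
    for mapping in resource_tag_mappings:
        present = {t["Key"] for t in mapping.get("Tags", []) if t.get("Value")}
        missing = sorted(required - present)
        if missing:
            report[mapping["ResourceARN"]] = missing
    return report
```

To audit a live account, feed it the `ResourceTagMappingList` from each page of `boto3.client("resourcegroupstaggingapi").get_paginator("get_resources").paginate()`. User-defined tags also have to be activated as cost allocation tags in the Billing console before they appear in cost reports.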

Simple Code Snippet / CLI Command Example

Here's a simple AWS CLI command to list your running ECS tasks on a specific cluster (illustrative):

```bash
# Make sure you have the AWS CLI configured
# Replace 'your-cluster-name' with your actual ECS cluster name
aws ecs list-tasks --cluster your-cluster-name

# To get more details about a specific task (replace the task ARN):
# aws ecs describe-tasks --cluster your-cluster-name --tasks arn:aws:ecs:region:account-id:task/cluster-name/task-id
```

This just gives a taste – managing these services often involves more complex configurations via IaC tools.
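The same two CLI calls can be combined in Python with boto3, handling pagination and the 100-task limit per `describe_tasks` call. This is a minimal sketch. The ECS client is passed in so the function can be exercised with a stub, and the summary fields chosen are illustrative.

```python
def running_task_summaries(ecs, cluster):
    """List RUNNING tasks on `cluster` and summarize them.

    `ecs` is expected to be a boto3 ECS client, e.g. boto3.client("ecs").
    """
    arns = []
    paginator = ecs.get_paginator("list_tasks")
    for page in paginator.paginate(cluster=cluster, desiredStatus="RUNNING"):
        arns.extend(page["taskArns"])

    summaries = []
    # describe_tasks accepts at most 100 task ARNs per call, so batch them.
    for i in range(0, len(arns), 100):
        resp = ecs.describe_tasks(cluster=cluster, tasks=arns[i:i + 100])
        for task in resp["tasks"]:
            summaries.append({
                "arn": task["taskArn"],
                "status": task["lastStatus"],
                "taskDefinition": task["taskDefinitionArn"],
            })
    return summaries
```

Against a real account you would call `running_task_summaries(boto3.client("ecs"), "your-cluster-name")` with credentials configured, just as for the CLI.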

Final Thoughts + Call to Action

Choosing between Lambda, Fargate, ECS, and EKS isn't about finding the single "best" service – it's about finding the right fit for your specific application needs, team expertise, operational tolerance, and cost sensitivity.

- Need ultimate simplicity for event-driven tasks? Lambda.

- Want to run containers without managing servers? Fargate (via ECS or EKS).

- Need container orchestration with deep control over instances or maximum EC2 cost optimization? ECS on EC2.

- Committed to the Kubernetes ecosystem and need its power and portability? EKS (on EC2 or Fargate).
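The rules of thumb above can be encoded as a deliberately simplistic helper. This is a sketch, not a verdict. The input flags are hypothetical, and the order of the checks is one reasonable reading of the list. Real decisions also weigh cost modeling and team skills.

```python
def suggest_compute(event_driven: bool, max_runtime_minutes: float,
                    kubernetes_committed: bool, needs_instance_control: bool) -> str:
    """Map the checklist above to a starting-point recommendation."""
    if kubernetes_committed:
        return "EKS (on EC2 or Fargate)"
    if needs_instance_control:
        return "ECS on EC2"
    # Lambda's hard limit is 15 minutes per invocation.
    if event_driven and max_runtime_minutes <= 15:
        return "Lambda"
    return "Fargate (via ECS or EKS)"


print(suggest_compute(True, 1, False, False))   # Lambda
```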

The lines can blur (e.g., ECS/EKS on Fargate), offering hybrid approaches. The best way to truly understand is to experiment. Spin up a small test application on each relevant service. Monitor its performance, check the costs, and experience the developer workflow.

What are your experiences? Which service is your go-to, and why? Did I miss any crucial considerations or pro tips? Share your thoughts in the comments below – let's learn from each other!
