AI Isn’t My Pair Programmer. It’s My Intern. And It Has to Pass My Tests.

"Vibe coding" is fun until you have to maintain the code.

Tools like Cursor, Windsurf, and Copilot make it feel like you're going really fast: just describe what you want, hit tab a few times, and watch an entire feature appear. It feels productive. And sometimes it is.

But if you've ever looked back at that AI-generated code a week later and thought "what is this?", you're not alone.

Most of what AI spits out is slop. It's bloated, unpredictable, and hard to debug. It works, technically, but just barely. And that's the problem.

I'm building a fairly large TypeScript/Node project (UserJot), and I've learned the hard way that letting AI run loose in a growing codebase is asking for trouble.

So now I do something different:

I write tests first. Then I let AI pass them.

AI Is Good But Not Great

I use Cursor with Gemini 2.5 Pro for most of my day-to-day coding. It's fast, intuitive, and honestly pretty impressive. But I treat it like a junior dev, not a wizard.

Left unsupervised, it generates code that:

- Misses edge cases

- Creates unnecessary abstractions

- Optimizes for the wrong thing

That doesn't mean the tools are bad. They're actually amazing. But when the hype focuses only on speed and "shipping fast," we stop talking about something more important: maintainability.

This workflow is my middle ground. It's not as flashy as vibe coding with zero guardrails, but it's still fast and, more importantly, it's safe to build on top of.

The Workflow

Here's what I do:

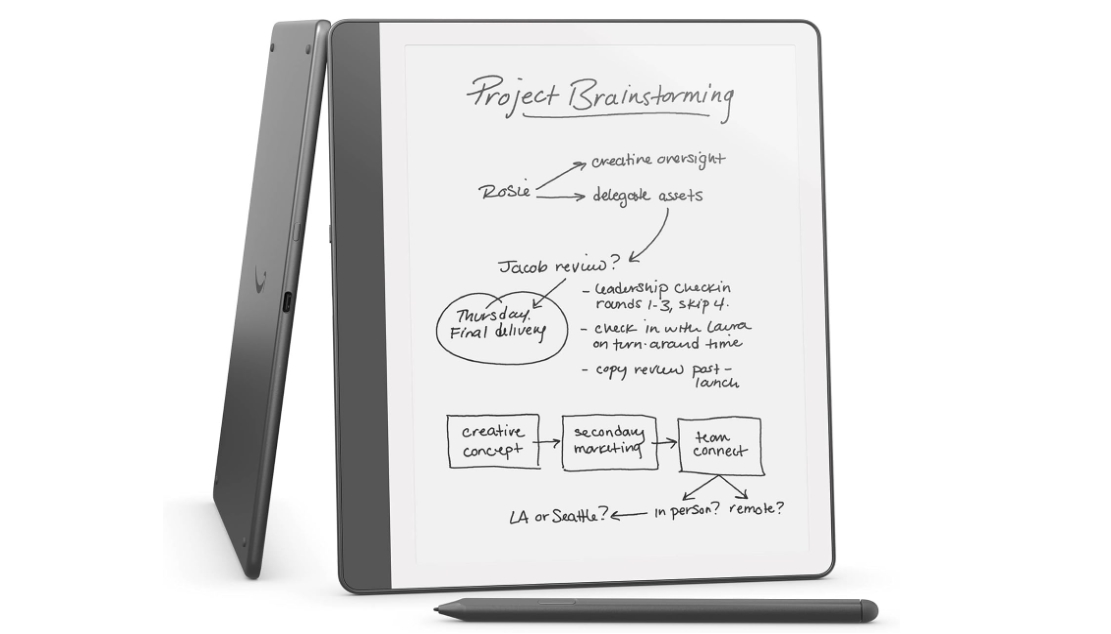

- Write tests with Vitest

- Code in TypeScript with Node.js

- Use Cursor (Gemini 2.5 Pro) to generate the actual implementation
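For reference, a minimal Vitest configuration for a setup like this might look like the sketch below. The `src/**/*.test.ts` glob is an assumption about project layout for illustration, not something taken from UserJot.

```typescript
// vitest.config.ts
// A minimal sketch; the include glob is an assumed project layout.
import { defineConfig } from 'vitest/config'

export default defineConfig({
  test: {
    // Run tests in a plain Node.js environment (no browser-like DOM).
    environment: 'node',
    // Pick up test files that sit next to the code they cover.
    include: ['src/**/*.test.ts'],
  },
})
```

With a config like this, running `npx vitest` starts watch mode, so every AI-generated change is re-checked against the tests as soon as the file is saved.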

The loop is simple:

- Write a test suite that defines behavior

- Ask AI to "write code that passes these tests"

- If it fails, great: that's a feedback loop

- Iterate until the tests pass

I'm not telling the AI how to implement anything. I just tell it what the code should do.
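To make the loop concrete, here is a dependency-free sketch of the idea. Everything in it is hypothetical and exists only for illustration: the `clampPercent` spec, the `SpecCase` type, and the `collectFailures` helper. In a real project Vitest's failure output plays the role of `collectFailures`.

```typescript
// Hypothetical spec: each case pairs an input with the required output.
type SpecCase = { input: number; expected: number }

const clampPercentSpec: SpecCase[] = [
  { input: 50, expected: 50 },
  { input: 150, expected: 100 },
  { input: -5, expected: 0 },
  { input: Number.NaN, expected: 0 },
]

// Runs an implementation against the spec and returns one message per failing case.
function collectFailures(
  impl: (n: number) => number,
  spec: SpecCase[],
): string[] {
  return spec
    .filter(({ input, expected }) => impl(input) !== expected)
    .map(
      ({ input, expected }) =>
        `clampPercent(${input}) should be ${expected}, got ${impl(input)}`,
    )
}

// First attempt: only handles the upper bound.
const firstAttempt = (n: number): number => Math.min(n, 100)

// Second attempt, written after feeding the failure messages back.
const secondAttempt = (n: number): number =>
  Number.isNaN(n) ? 0 : Math.max(0, Math.min(n, 100))

console.log(collectFailures(firstAttempt, clampPercentSpec))
console.log(collectFailures(secondAttempt, clampPercentSpec))
```

The first attempt fails the negative and `NaN` cases, and those two messages are exactly what goes back into the prompt. The second attempt returns an empty list, which is the signal to stop iterating.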

Example: Date Formatting Without the Slop

Let's say I need a function to format a date string into MM/DD/YYYY. I want it to:

- Accept an ISO string

- Return a formatted date

- Return '' for invalid input

Here’s the test:

    // formatDate.test.ts
    import { describe, expect, it } from 'vitest'
    import { formatDate } from './formatDate'

    describe('formatDate', () => {
      it('formats a valid ISO string', () => {
        expect(formatDate('2023-12-01')).toBe('12/01/2023')
      })

      it('returns empty string for invalid input', () => {
        expect(formatDate('not-a-date')).toBe('')
        expect(formatDate(null as any)).toBe('')
        expect(formatDate(undefined as any)).toBe('')
      })
    })

Then I ask Cursor:

Write a formatDate(input: string): string function that passes these tests.

And you get something like this:

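    // formatDate.ts
    export function formatDate(input: string): string {
      // Guard non-string input: new Date(null) is the epoch, not an invalid date.
      if (typeof input !== 'string') return ''
      const date = new Date(input)
      if (isNaN(date.getTime())) return ''
      // Date-only ISO strings parse as UTC, so read the parts back in UTC.
      const month = (date.getUTCMonth() + 1).toString().padStart(2, '0')
      const day = date.getUTCDate().toString().padStart(2, '0')
      const year = date.getUTCFullYear()
      return `${month}/${day}/${year}`
    }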

✅ Clean

✅ Focused

✅ No unnecessary locale utils or extra dependencies

I've tried prompting this without tests before, and the AI gave me timezone handling, locale fallbacks, and five helper functions I didn't ask for. That's what I mean by slop.

Why This Approach Works

- Tests define the contract - no room for hallucinated logic

- You get focused output - not a kitchen sink of guesses

- Debugging is faster - failing tests tell you exactly what to fix

- The code is replaceable - nothing magic, just clear behavior

You start with the behavior you want. You end up with code that does just that and nothing more.
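As a sketch of what "replaceable" can mean in practice, here is a second, hypothetical implementation of the same contract that never formats through `Date` at all. The name `formatDateFromParts` and the regex-based approach are assumptions made for illustration; the point is only that any implementation satisfying the same tests can be swapped in.

```typescript
// Hypothetical alternative to formatDate: parse the ISO parts directly.
function formatDateFromParts(input: string): string {
  // Callers may pass null or undefined despite the type, so guard at runtime.
  if (typeof input !== 'string') return ''

  // Accept YYYY-MM-DD, optionally followed by a time component.
  const match = /^(\d{4})-(\d{2})-(\d{2})(?:T.*)?$/.exec(input)
  if (!match) return ''

  const [, year, month, day] = match

  // Round-trip through Date.UTC to reject impossible dates such as 2023-02-30.
  const check = new Date(Date.UTC(Number(year), Number(month) - 1, Number(day)))
  const isRealDate =
    check.getUTCFullYear() === Number(year) &&
    check.getUTCMonth() === Number(month) - 1 &&
    check.getUTCDate() === Number(day)
  if (!isRealDate) return ''

  return `${month}/${day}/${year}`
}

console.log(formatDateFromParts('2023-12-01'))
console.log(formatDateFromParts('not-a-date') === '')
```

Note that this version uses the calendar date exactly as written in the string and ignores any time or offset after the `T`. If that distinction matters for your use case, it belongs in the tests first.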

What I Do With UserJot

UserJot is a growing codebase. Feedback boards, roadmap tracking, changelogs, integrations, and a bunch of frontend+backend logic. I don't let AI touch everything.

But I do use this workflow constantly.

I break features down into small, testable units of logic. I write the tests. Then I use AI to implement those pieces. It's a reliable, repeatable loop that lets me move fast without leaving behind a pile of nonsense.

It's not full vibe coding, but it's still a massive productivity boost.

Final Thoughts

AI isn't your CTO. It's your intern.

And interns don't ship code until they've passed the tests.

This method gives you most of the speed benefits of AI-assisted coding without sacrificing clarity or maintainability. It's not slower. It's just smarter.

I've used this approach to build a lot of the core logic behind UserJot, my feedback and changelog tool for SaaS products. It's helped me move quickly without compromising the long-term health of the codebase.

If you're building something real, give it a shot.

And if you've found better ways to avoid slop, I'd love to hear them.

P.S. If you're building a product and want a simple way to collect feedback, share what you're working on, and keep users in the loop, I built UserJot for that. It's what I wish I had earlier. Might be useful.
