AI Agents from Zero to Hero – Part 1

The post AI Agents from Zero to Hero – Part 1 appeared first on Towards Data Science.

Intro

AI Agents are autonomous programs that perform tasks, make decisions, and communicate with others. Normally, they use a set of tools to help complete tasks. In GenAI applications, these Agents reason step by step and can call external tools (like web searches or database queries) when the LLM’s knowledge isn’t enough. Unlike a basic chatbot, which tends to make up an answer when uncertain, an AI Agent activates tools to provide more accurate, specific responses.

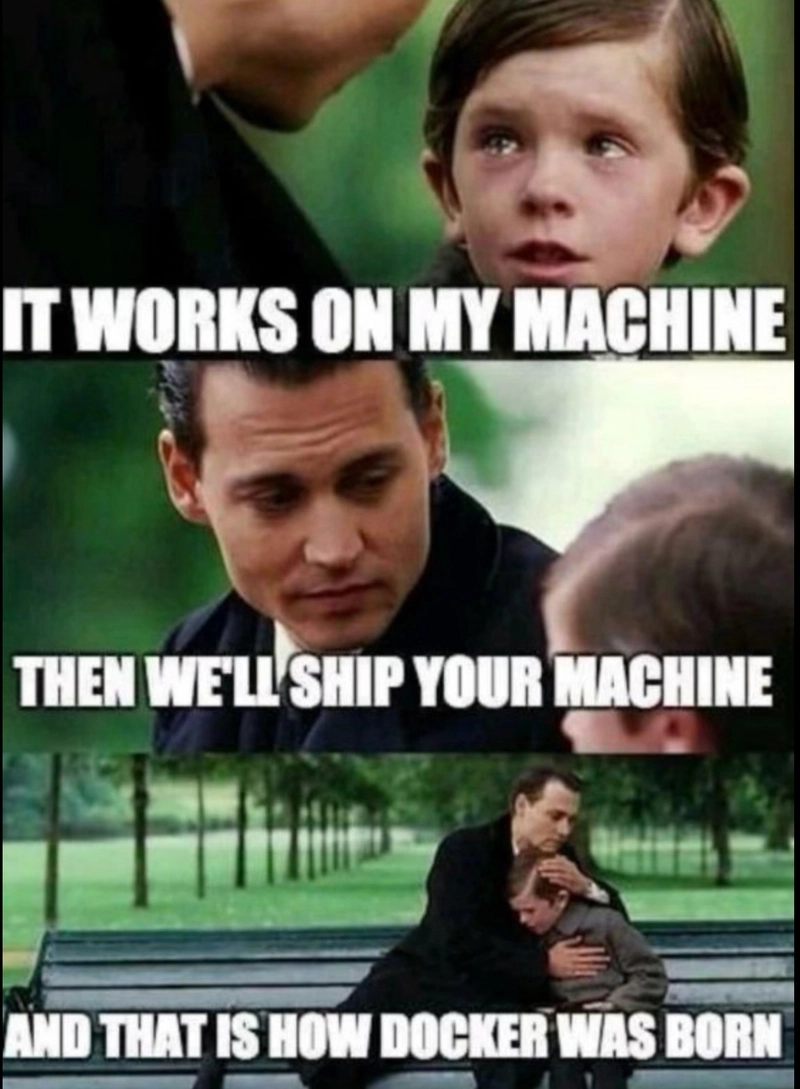

We are moving closer and closer to the concept of Agentic AI: systems that exhibit a higher level of autonomy and decision-making ability, without direct human intervention. While today’s AI Agents respond reactively to human inputs, tomorrow’s Agentic AIs will proactively engage in problem-solving and adjust their behavior based on the situation.

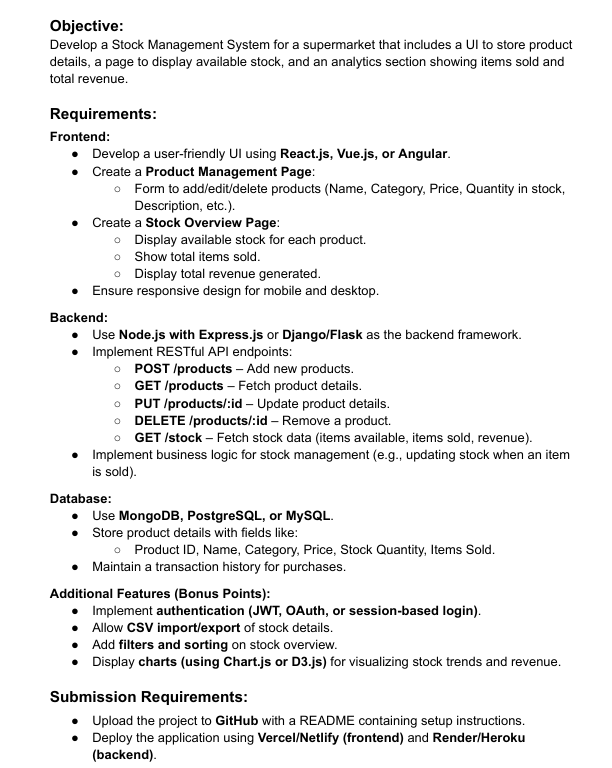

Today, building Agents from scratch is becoming as easy as training a logistic regression model 10 years ago. Back then, Scikit-Learn provided a straightforward library to quickly train Machine Learning models with just a few lines of code, abstracting away much of the underlying complexity.

In this tutorial, I’m going to show how to build from scratch different types of AI Agents, from simple to more advanced systems. I will present some useful Python code that can be easily applied in other similar cases (just copy, paste, run) and walk through every line of code with comments so that you can replicate this example.

Setup

Anyone can have a custom Agent running locally for free, without GPUs or API keys. The only Python library needed is Ollama (pip install ollama==0.4.7), which lets you run LLMs locally instead of relying on cloud-based services, giving you more control over data privacy and performance.

First of all, you need to download Ollama from the website.

Then, in your terminal, run the command that downloads the selected LLM (for example, ollama pull qwen2.5). I’m going with Alibaba’s Qwen, as it’s both smart and lightweight.

After the download is completed, you can move on to Python and start writing code.

import ollama

llm = "qwen2.5"

Let’s test the LLM:

stream = ollama.generate(model=llm, prompt='''what time is it?''', stream=True)
for chunk in stream:
    print(chunk['response'], end='', flush=True)

Obviously, the LLM per se is very limited and it can’t do much besides chatting. Therefore, we need to give it the ability to take action, or in other words, to activate Tools.

One of the most common tools is the ability to search the Internet. In Python, the easiest way to do it is with the privacy-focused search engine DuckDuckGo (pip install duckduckgo-search==6.3.5). You can use the original library directly or import the LangChain wrapper (pip install langchain-community==0.3.17).

With Ollama, in order to use a Tool, the function must be described in a dictionary.
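To make the layout of such a dictionary concrete, here is a minimal sketch that derives one from a plain Python function’s signature. The helper build_tool_schema, the type mapping, and the toy echo function are my own assumptions for illustration and are not part of Ollama; the sketch only covers simple scalar parameters and uses JSON Schema type names such as string and integer.

```python
import inspect

# Assumed mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def build_tool_schema(func, description, param_descriptions):
    """Describe `func` as a tool dictionary (hypothetical helper, not part of Ollama)."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {
            # Unknown or missing annotations fall back to "string".
            "type": _JSON_TYPES.get(param.annotation, "string"),
            "description": param_descriptions.get(name, ""),
        }
        # Parameters without a default value must be supplied by the model.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": description,
            "parameters": {
                "type": "object",
                "required": required,
                "properties": properties,
            },
        },
    }

def echo(text: str, times: int = 1) -> str:
    """Toy function used only to demonstrate the helper."""
    return text * times

tool_echo = build_tool_schema(
    echo,
    "Repeat a text",
    {"text": "the text to repeat", "times": "how many repetitions"},
)
```

Writing the dictionary by hand, as done next, is perfectly fine for one or two Tools; a helper like this only starts to pay off when the list of Tools grows.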

from langchain_community.tools import DuckDuckGoSearchResults

def search_web(query: str) -> str:
    # query DuckDuckGo through the LangChain wrapper, using the news backend
    return DuckDuckGoSearchResults(backend="news").run(query)

tool_search_web = {'type':'function', 'function':{
    'name': 'search_web',
    'description': 'Search the web',
    'parameters': {'type': 'object',
                   'required': ['query'],
                   'properties': {
                       # JSON Schema type names are used here: 'string', not 'str'
                       'query': {'type':'string', 'description':'the topic or subject to search on the web'},
}}}}

## test
search_web(query="nvidia")

Internet searches could be very broad, and I want to give the Agent the option to be more precise. Let’s say I’m planning to use this Agent to learn about financial updates: I can give it a specific tool for that topic, like searching only a finance website instead of the whole web.

def search_yf(query: str) -> str:
    engine = DuckDuckGoSearchResults(backend="news")
    # restrict the search to Yahoo Finance with the site: operator
    return engine.run(f"site:finance.yahoo.com {query}")

tool_search_yf = {'type':'function', 'function':{
    'name': 'search_yf',
    'description': 'Search for specific financial news',
    'parameters': {'type': 'object',
                   'required': ['query'],
                   'properties': {
                       # JSON Schema type names are used here: 'string', not 'str'
                       'query': {'type':'string', 'description':'the financial topic or subject to search'},
}}}}

## test
search_yf(query="nvidia")

Simple Agent (WebSearch)

In my opinion, the most basic Agent should at least be able to choose between one or two Tools and rework the output of the action into a proper, concise answer for the user.
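Choosing between Tools comes down to mapping the tool name the model asks for onto a Python function. The sketch below shows that dispatch step in isolation. The stub functions stand in for the real search tools so the example runs offline, and the shape of the tool call (a function entry holding name and arguments) is an assumption that mirrors the tool dictionaries above; run_tool and dic_tools are hypothetical names.

```python
def stub_search_web(query: str) -> str:
    """Offline stand-in for the real web search tool."""
    return f"[web results for: {query}]"

def stub_search_yf(query: str) -> str:
    """Offline stand-in for the real finance search tool."""
    return f"[finance results for: {query}]"

# Map the tool names the model can request to the functions to run.
dic_tools = {"search_web": stub_search_web, "search_yf": stub_search_yf}

def run_tool(tool_call: dict) -> str:
    """Execute one requested tool call and return its output as text."""
    name = tool_call["function"]["name"]
    args = tool_call["function"]["arguments"]
    if name not in dic_tools:
        # Models sometimes ask for tools that do not exist; report it instead of crashing.
        return f"unknown tool: {name}"
    return dic_tools[name](**args)

# Assumed shape of a tool call requested by the model.
example_call = {"function": {"name": "search_yf", "arguments": {"query": "nvidia"}}}
result = run_tool(example_call)
```

In a real Agent, the registry would point at search_web and search_yf, and the text returned by run_tool would be handed back to the LLM so it can write the final answer.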

First, you need to write a prompt that describes the Agent’s purpose. The more detailed the better (mine is very generic). This prompt will be the first message in the chat history with the LLM.

prompt = '''You are an assistant with access to tools, you must decide when to use tools to answer user message.'''

messages = [{"role":"system", "content":prompt}]

To keep the chat with the AI alive, I will use a loop that starts with the user’s input and then invokes the Agent to respond (either with text from the LLM or by activating a Tool).

while True:
    ## user input
    try:
        q = input('
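As a hedged sketch of the loop described above, and not the author’s code, the function below handles a single turn: it sends the history to the model, runs any Tool the model requests, and asks the model again so it can phrase the final answer. The model call is injected as chat_fn so the example runs without Ollama; agent_turn, fake_chat, and the plain-dictionary response shape are all assumptions for illustration.

```python
def agent_turn(messages, user_text, chat_fn, tools):
    """Run one user turn and return the Agent's final text answer."""
    messages.append({"role": "user", "content": user_text})
    reply = chat_fn(messages)["message"]
    calls = reply.get("tool_calls") or []
    if calls:
        # Record the model's tool request in the history.
        messages.append({"role": "assistant", "content": reply.get("content", ""),
                         "tool_calls": calls})
        for call in calls:
            fn = call["function"]
            output = tools[fn["name"]](**fn["arguments"])
            # Feed the tool output back so the model can write the final answer.
            messages.append({"role": "tool", "content": str(output)})
        reply = chat_fn(messages)["message"]
    messages.append({"role": "assistant", "content": reply["content"]})
    return reply["content"]

def fake_chat(messages):
    """Scripted stand-in for the LLM: request a search first, then summarize it."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"message": {"role": "assistant", "content": f"Summary: {last['content']}"}}
    return {"message": {"role": "assistant", "content": "", "tool_calls": [
        {"function": {"name": "search_web", "arguments": {"query": last["content"]}}}]}}

history = [{"role": "system", "content": "You are an assistant with access to tools."}]
answer = agent_turn(history, "nvidia", fake_chat,
                    {"search_web": lambda query: f"results for {query}"})
```

With Ollama, chat_fn could be something like lambda m: ollama.chat(model=llm, tools=[tool_search_web, tool_search_yf], messages=m), assuming the response supports dictionary-style access as in the 0.4 Python client. An interactive session would call agent_turn inside the while True loop, reading user_text with input() and breaking out on an exit keyword.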
