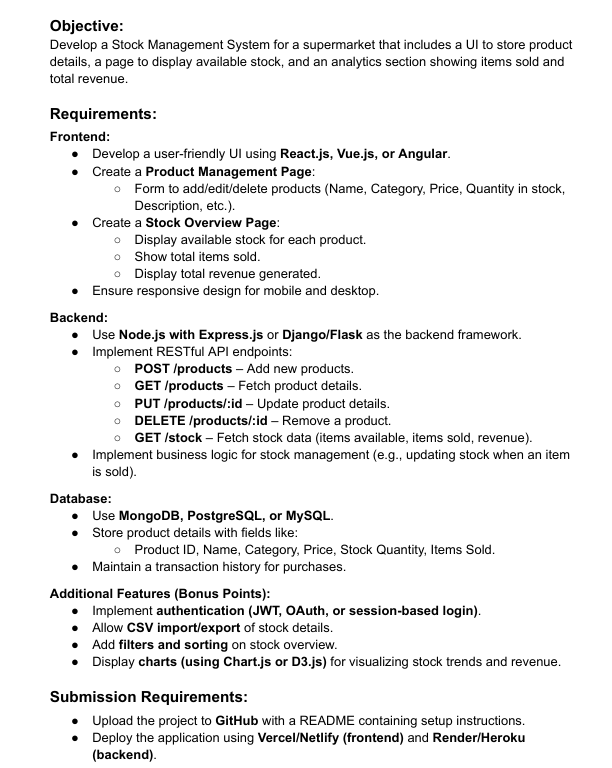

4 Levels of GitHub Actions: A Guide to Data Workflow Automation

From a simple Python workflow to scheduled data processing and security management

The post 4 Levels of GitHub Actions: A Guide to Data Workflow Automation appeared first on Towards Data Science.

GitHub Action Benefits

GitHub Actions is well known for its functionality in software development, and in recent years it has also proven to offer compelling benefits for streamlining data workflows:

- Automate data science environment setup, such as installing dependencies and required packages (e.g., pandas, PyTorch).

- Streamline data integration and transformation by connecting to databases to fetch or update records, and by using scripting languages like Python to preprocess or transform raw data.

- Create an iterative data science lifecycle by automatically training machine learning models whenever new data is available, and deploying models to production environments after successful training.

- GitHub Actions is free for unlimited usage on standard GitHub-hosted runners in public repositories. Individual accounts also get 2,000 free minutes of compute time per month for private repositories. Building a proof of concept is easy: all you need is a GitHub account, with no need to sign up for a cloud provider.

- Numerous GitHub Actions templates and community resources are available online, and community forums provide answers to common questions and troubleshooting support.
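As a rough way to see how far the 2,000 free minutes go for a private repository, the sketch below estimates monthly usage for two schedules. It assumes that each job run is billed rounded up to a whole minute on a standard Linux runner; check GitHub's billing documentation for the current rules. `billable_minutes` is a hypothetical helper.

```python
import math

FREE_MINUTES_PER_MONTH = 2_000  # private repositories, individual account (from the text above)


def billable_minutes(run_seconds: float, runs_per_month: int) -> int:
    """Estimate monthly minutes, assuming each run is rounded up to a whole minute."""
    return math.ceil(run_seconds / 60) * runs_per_month


# An hourly job that takes 2 min 10 s, assuming a 30-day month
hourly = billable_minutes(run_seconds=130, runs_per_month=24 * 30)
# A monthly job that takes 5 minutes
monthly = billable_minutes(run_seconds=300, runs_per_month=1)

print(hourly, hourly <= FREE_MINUTES_PER_MONTH)    # 2160 False
print(monthly, monthly <= FREE_MINUTES_PER_MONTH)  # 5 True
```

Under these assumptions, even a short job that runs every hour can exceed the free allowance, while a monthly pipeline like the one in Level 3 barely touches it.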

GitHub Action Building Blocks

GitHub Actions is a feature of GitHub that lets users automate workflows directly within their repositories. Workflows are defined in YAML files and can be triggered by various events, such as code pushes, pull requests, issue creation, or scheduled intervals. With its extensive library of pre-built actions and the ability to run custom scripts, GitHub Actions is a versatile tool for automating tasks.

- Event: If you have ever used automation on your devices, such as switching to dark mode after 8 pm, you are already familiar with the concept of a trigger or condition that initiates a workflow of actions. In GitHub Actions, this is called an event. Events can be time-based, e.g., scheduled for the 1st day of every month or run automatically every hour. Alternatively, events can be triggered by certain activities, such as pushing changes from a local repository to the remote repository.

- Workflow: A workflow is composed of one or more jobs, and GitHub lets you customize each individual step within a job to your needs. A workflow is defined by a YAML file stored in the `.github/workflows` directory of a GitHub repository.

- Runners: A runner is the machine that executes the workflow. Instead of running a script on your laptop, you can borrow a GitHub-hosted runner to do the job for you, or specify a self-hosted machine.

- Runs: Each execution of a workflow creates a run, and you can view the logs of each run in the “Actions” tab, which provides an interface to visualize and monitor runs.
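Time-based events are written as cron expressions (used in Level 3). As a rough illustration of how the five fields are read, here is a minimal Python matcher. It is a sketch, not GitHub's scheduler: `cron_matches` and `field_matches` are hypothetical helpers that handle only `*`, `*/n`, single numbers, and comma lists, combine all fields with AND (standard cron treats a restricted day of month and day of week as OR), and treat times as UTC, the time zone GitHub uses for schedules.

```python
from datetime import datetime


def field_matches(field: str, value: int, lowest: int) -> bool:
    """Match one cron field: '*', '*/n', a single number, or a comma list like '1,15'."""
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # Steps count from the field's lowest value (0 for minutes, 1 for days)
            if (value - lowest) % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if the 5-field cron expression fires at `when` (treated as UTC)."""
    minute, hour, day, month, weekday = expr.split()
    cron_weekday = (when.weekday() + 1) % 7  # cron: 0 = Sunday; Python: 0 = Monday
    return (
        field_matches(minute, when.minute, 0)
        and field_matches(hour, when.hour, 0)
        and field_matches(day, when.day, 1)
        and field_matches(month, when.month, 1)
        and field_matches(weekday, cron_weekday, 0)
    )


print(cron_matches("0 12 1 * *", datetime(2024, 3, 1, 12, 0)))  # True: 1st of the month, 12:00
print(cron_matches("0 12 1 * *", datetime(2024, 3, 2, 12, 0)))  # False: 2nd of the month
print(cron_matches("0 * * * *", datetime(2024, 3, 2, 7, 0)))    # True: top of every hour
```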

4 Levels of GitHub Actions

We will demonstrate how to implement GitHub Actions through four levels of difficulty, starting with a “minimum viable product” and progressively introducing additional components and customization at each level.

1. “Simple Workflow” with Python Script Execution

Start by creating a GitHub repository to store your workflow and Python script. In your repository, create a `.github/workflows` directory; GitHub only detects workflow files placed in this directory, so the location matters. Inside this directory, create a YAML file (e.g., simple-workflow.yaml) that defines your workflow.
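If a workflow does not behave as expected, one thing worth checking is whether its file is in the right place. The sketch below lists the workflow files in that location; `find_workflow_files` is a hypothetical helper that only looks at `.yml`/`.yaml` files directly inside `.github/workflows` and does not parse or validate the YAML.

```python
from pathlib import Path


def find_workflow_files(repo_root: str) -> list[str]:
    """Return the names of .yml/.yaml files directly inside <repo_root>/.github/workflows."""
    workflows_dir = Path(repo_root) / ".github" / "workflows"
    if not workflows_dir.is_dir():
        return []  # no workflows directory at all
    return sorted(
        p.name
        for p in workflows_dir.iterdir()
        if p.is_file() and p.suffix in {".yml", ".yaml"}
    )


if __name__ == "__main__":
    # Run from the repository root
    print(find_workflow_files("."))
```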

The following workflow runs code/hello_world.py based on a manual trigger.

```yaml
name: simple-workflow

on:
  workflow_dispatch:

jobs:
  run-hello-world:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repo content
        uses: actions/checkout@v4
      - name: run hello world
        run: python code/hello_world.py
```

It consists of three sections. First, `name: simple-workflow` defines the workflow name. Second, `on: workflow_dispatch` specifies the condition for running the workflow: here, it runs only when triggered manually. Last, the job `run-hello-world` under `jobs` is broken down as follows:

- `runs-on: ubuntu-latest`: Specifies the runner (i.e., a virtual machine) that runs the workflow. `ubuntu-latest` is a standard GitHub-hosted runner image that comes with tools, packages, and settings preinstalled for GitHub Actions to use.

- `uses: actions/checkout@v4`: Applies the pre-built action `checkout@v4` to pull the repository content into the runner's environment. This ensures that the workflow has access to all files and scripts stored in the repository.

- `run: python code/hello_world.py`: Executes the Python script in the `code` subdirectory by running a shell command directly from the YAML workflow file.
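The contents of code/hello_world.py are not shown here, so below is a hypothetical version that also reports where it runs. It relies on the `GITHUB_ACTIONS` and `RUNNER_OS` environment variables, which GitHub sets on its runners; when you run the script locally they are normally absent.

```python
# code/hello_world.py (hypothetical contents; the original script is not shown)
import os
import platform


def greeting() -> str:
    """Build a greeting that also says where the script is running."""
    # GitHub sets GITHUB_ACTIONS=true and RUNNER_OS (e.g. "Linux") on its runners
    if os.environ.get("GITHUB_ACTIONS") == "true":
        where = f"a GitHub Actions runner ({os.environ.get('RUNNER_OS', 'unknown OS')})"
    else:
        where = "a local machine"
    return f"Hello, world! Running on {where} with Python {platform.python_version()}."


if __name__ == "__main__":
    print(greeting())
```

Triggering the workflow from the “Actions” tab should then show the runner variant of the greeting in the run log.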

2. “Push Workflow” with Environment Setup

The first workflow demonstrated a minimum viable version of a GitHub Actions workflow, but it did not take full advantage of GitHub Actions. At the second level, we add more customization and functionality: automatically setting up the environment with Python 3.11, installing the required packages, and executing the script whenever changes are pushed to the main branch.

```yaml
name: push-workflow

on:
  push:
    branches:
      - main

jobs:
  run-hello-world:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repo content
        uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.11'
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
      - name: Run hello world
        run: python code/hello_world.py
```

- `on: push`: Instead of being triggered manually, the workflow now runs whenever changes are pushed from a local repository to the remote repository. This trigger is common in software development for integration and deployment processes, and it is also adopted in MLOps workflows to ensure that code changes are consistently tested and validated before being merged into another branch. It also facilitates continuous deployment by automatically deploying updates to production or staging environments as soon as changes are pushed. Here we add the optional condition `branches: - main` so that the workflow is only triggered by pushes to the main branch.

- `uses: actions/setup-python@v5`: The “Set up Python” step uses GitHub's `setup-python@v5` action. Using `setup-python` is the recommended way to use Python with GitHub Actions because it ensures consistent behavior across different runners and Python versions.

- `pip install -r requirements.txt`: Installs all required packages listed in the `requirements.txt` file in a single step, which speeds up further building of data pipelines and data science solutions.
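If you want an explicit sanity check after the “Install dependencies” step, for example as an extra step running a script such as `python code/check_env.py` (a hypothetical file name), a small script can confirm that everything listed in `requirements.txt` is installed. This is a sketch under simplifying assumptions: `missing_requirements` is a hypothetical helper that only reads package names and does not check version constraints.

```python
from importlib.metadata import PackageNotFoundError, version


def missing_requirements(lines: list[str]) -> list[str]:
    """Return package names from requirements.txt-style lines that are not installed.

    Simplified on purpose: it reads plain names and simple pins such as
    'pandas==2.2.0' or 'torch>=2.0', skips comments, blank lines and pip
    options (lines starting with '-'), and does not check versions.
    """
    missing = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        name = line
        # Cut the name at the first version operator, extras bracket, marker or URL
        for sep in ("==", ">=", "<=", "~=", "!=", ">", "<", "[", ";", "@"):
            name = name.split(sep, 1)[0]
        name = name.strip()
        try:
            version(name)  # raises PackageNotFoundError if the distribution is absent
        except PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    with open("requirements.txt") as f:
        missing = missing_requirements(f.readlines())
    if missing:
        raise SystemExit(f"Missing packages: {', '.join(missing)}")
    print("All requirements are installed.")
```

Exiting with a non-zero status via `SystemExit` makes the step, and therefore the run, fail visibly in the “Actions” tab.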

If you are interested in the basics of setting up a development environment for your data science projects, my previous blog post “7 Tips to Future-Proof Machine Learning Projects” provides a bit more explanation.

3. “Scheduled Workflow” with Argument Parsing

At the third level, we add more dynamics and complexity to make the workflow suitable for real-world applications. We introduce scheduled jobs, which bring even more benefits to a data science project: they enable periodic fetching of recent data and remove the need to run the script manually whenever a data refresh is required. Additionally, we use dynamic argument parsing to run the script with different date range parameters according to the schedule.

```yaml
name: scheduled-workflow

on:
  workflow_dispatch:
  schedule:
    - cron: "0 12 1 * *" # run at 12:00 UTC on the 1st day of every month

jobs:
  run-data-pipeline:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repo content
        uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.11' # Specify your Python version here
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
      - name: Run data pipeline
        run: |
          PREV_MONTH_START=$(date -d "`date +%Y%m01` -1 month" +%Y-%m-%d)
          PREV_MONTH_END=$(date -d "`date +%Y%m01` -1 day" +%Y-%m-%d)
          python code/fetch_data.py --start $PREV_MONTH_START --end $PREV_MONTH_END
      - name: Commit changes
        run: |
          git config user.name ''   # fill in: git needs a non-empty name to commit
          git config user.email ''  # fill in: git needs a non-empty email to commit
          git add .
          git commit -m "update data"
          git push
```

- `on: schedule: - cron: "0 12 1 * *"`: Specifies a time-based trigger using the cron expression “0 12 1 * *”, which runs at 12:00 pm (UTC) on the 1st day of every month. You can use crontab.guru to help create and validate cron expressions, which follow the format “minute / hour / day of month / month / day of week”.

- `python code/fetch_data.py --start $PREV_MONTH_START --end $PREV_MONTH_END`: The “Run data pipeline” step runs a series of shell commands. It defines two variables, `PREV_MONTH_START` and `PREV_MONTH_END`, holding the first and the last day of the previous month. These two variables are passed to the Python script fetch_data.py, so that each run fetches data for the month before the run date. To let the script accept these values as command-line arguments, we build it with the `argparse` library. This deserves a separate topic, but here is a quick preview of how the Python script might look, using `argparse` to handle the `--start` and `--end` arguments.

```python
# fetch_data.py
import argparse
import json
import os
import urllib.parse

import pandas as pd

# Note: search_term and parse_news_json() are not defined in this preview;
# they come from the rest of the script, which is not shown.


def main(args=None):
    parser = argparse.ArgumentParser()
    parser.add_argument('--start', type=str)
    parser.add_argument('--end', type=str)
    args = parser.parse_args(args=args)
    print("Start Date is: ", args.start)
    print("End Date is: ", args.end)

    # One entry per day, including both the start and the end date
    date_range = pd.date_range(start=args.start, end=args.end)
    content_lst = []
    for date in date_range:
        date = date.strftime('%Y-%m-%d')
        params = urllib.parse.urlencode({
            'api_token': '',
            'published_on': date,
            'search': search_term,
        })
        url = '/v1/news/all?{}'.format(params)
        content_json = parse_news_json(url, date)
        content_lst.append(content_json)

    # Write one JSON object per line (JSON Lines format)
    with open('data.jsonl', 'w') as f:
        for item in content_lst:
            json.dump(item, f)
            f.write('\n')
    return content_lst


if __name__ == "__main__":
    main()
```

When the command `python code/fetch_data.py --start $PREV_MONTH_START --end $PREV_MONTH_END` runs, the script creates a date range from `$PREV_MONTH_START` to `$PREV_MONTH_END`. For each day in the range, it builds a URL, fetches the day's news through the API, parses the JSON response, and collects the results in a list. Finally, it writes this list to the file “data.jsonl”, one JSON object per line.
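The shell `date` arithmetic in the workflow and the daily `pd.date_range` loop can also be expressed with Python's standard library, which is handy for testing the date logic locally. This is a pandas-free sketch, not the original script: `previous_month_range`, `daily_range`, and `parse_args` are hypothetical helpers, and using `type=date.fromisoformat` in `argparse` is our own choice to reject malformed dates before any API call is made.

```python
import argparse
from datetime import date, timedelta


def previous_month_range(today: date) -> tuple[date, date]:
    """Mirror the shell `date -d` arithmetic: first and last day of the month before `today`."""
    first_of_this_month = today.replace(day=1)
    last_of_prev_month = first_of_this_month - timedelta(days=1)
    return last_of_prev_month.replace(day=1), last_of_prev_month


def daily_range(start: date, end: date) -> list[str]:
    """Inclusive list of YYYY-MM-DD strings, one per day, like a daily pd.date_range."""
    return [(start + timedelta(days=i)).isoformat() for i in range((end - start).days + 1)]


def parse_args(argv=None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Fetch news for a date range.")
    # date.fromisoformat turns '2024-02-01' into a date and rejects malformed input
    parser.add_argument("--start", type=date.fromisoformat, required=True)
    parser.add_argument("--end", type=date.fromisoformat, required=True)
    return parser.parse_args(argv)


start, end = previous_month_range(date(2024, 3, 15))
print(start, end)  # 2024-02-01 2024-02-29
args = parse_args(["--start", start.isoformat(), "--end", end.isoformat()])
print(len(daily_range(args.start, args.end)))  # 29
```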

The workflow's last step, “Commit changes”, looks like this:

```yaml
- name: Commit changes
  run: |
    git config user.name ''
    git config user.email ''
    git add .
    git commit -m "update data"
    git push
```

This step configures the git user name and email, stages the changes, commits them, and pushes them to the remote GitHub repository. It is necessary whenever a workflow changes files in the working directory (e.g., when the output file “data.jsonl” is created). Otherwise, the output exists only in the runner's temporary workspace, which is discarded when the job finishes, so it appears as if no changes were made. Depending on your repository settings, you may also need to grant the workflow write access (for example, `permissions: contents: write`) so that `git push` is allowed.

4. “Secure Workflow” with Secrets and Environment Variables Management

The final level focuses on improving the security and performance of the GitHub workflow by addressing non-functional requirements.

```yaml
name: secure-workflow

on:
  workflow_dispatch:
  schedule:
    - cron: "34 23 1 * *" # run at 23:34 UTC on the 1st day of every month

jobs:
  run-data-pipeline:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repo content
        uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.11' # Specify your Python version here
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
      - name: Run data pipeline
        env:
          NEWS_API_TOKEN: ${{ secrets.NEWS_API_TOKEN }}
        run: |
          PREV_MONTH_START=$(date -d "`date +%Y%m01` -1 month" +%Y-%m-%d)
          PREV_MONTH_END=$(date -d "`date +%Y%m01` -1 day" +%Y-%m-%d)
          python code/fetch_data.py --start $PREV_MONTH_START --end $PREV_MONTH_END
      - name: Check changes
        id: git-check
        run: |
          git config user.name 'github-actions'
          git config user.email 'github-actions@github.com'
          git add .
          git diff --staged --quiet || echo "changes=true" >> $GITHUB_ENV
      - name: Commit and push if changes
        if: env.changes == 'true'
        run: |
          git commit -m "update data"
          git push
```

To improve workflow efficiency and reduce errors, we add a check before committing changes, ensuring that commits and pushes only occur when there are actual changes since the last commit. This is achieved through the command git diff --staged --quiet || echo "changes=true" >> $GITHUB_ENV.

- `git diff --staged` compares the staging area with the last commit. `--quiet` suppresses the output and implies `--exit-code`: the command exits with 0 when the staged content matches the last commit, and with 1 when there are differences.

- This command is connected to `echo "changes=true" >> $GITHUB_ENV` through the OR operator `||`, which tells the shell to run the second command only if the first one exits with a non-zero status. Therefore, if there are changes, “changes=true” is appended to the file referenced by `$GITHUB_ENV`, which makes `changes` available as an environment variable in subsequent steps. The next step then commits and pushes only when `env.changes == 'true'`.
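The same check can be written in Python, for instance if you prefer a script step over a shell one-liner. This is a sketch, not part of the workflow above: `record_changes_flag` and `staged_diff_returncode` are hypothetical helpers. One deliberate difference: the shell `||` treats any non-zero exit as “changes”, whereas this version raises an error for exit codes other than 0 and 1 (e.g., when the command is run outside a Git repository).

```python
import os
import subprocess


def record_changes_flag(diff_returncode: int, env_file: str) -> bool:
    """Append 'changes=true' to the GITHUB_ENV file if `git diff --staged --quiet` found differences."""
    if diff_returncode == 0:
        return False  # staged content matches the last commit: nothing to commit
    if diff_returncode != 1:
        # Unlike `||` in the shell version, treat real failures as errors, not as "changes"
        raise RuntimeError(f"git diff failed with exit code {diff_returncode}")
    with open(env_file, "a") as f:
        f.write("changes=true\n")
    return True


def staged_diff_returncode() -> int:
    """Run the same command as the workflow; --quiet implies --exit-code."""
    return subprocess.run(["git", "diff", "--staged", "--quiet"]).returncode


if __name__ == "__main__":
    # On GitHub Actions, GITHUB_ENV holds the path of the environment file for later steps
    record_changes_flag(staged_diff_returncode(), os.environ["GITHUB_ENV"])
```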

Lastly, we introduce secrets, which enhance security by keeping sensitive information (e.g., API tokens, personal access tokens) out of the codebase. GitHub also supports environment secrets, which are scoped to a specific deployment environment and let you keep different secrets for different stages of your development and deployment pipeline. For example, the testing environment (e.g., for the dev branch) could only access a test token, whereas the production environment (e.g., for the main branch) would access the token linked to the production instance.
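A secret reaches the Python script as an ordinary environment variable once the workflow maps it into a step's `env`, as the Level 4 workflow above does for `NEWS_API_TOKEN`. Since `os.environ.get()` simply returns `None` when the variable is missing, for example when the script runs locally, a small helper that fails fast with a clear message can save some debugging. This is a sketch; `require_env` is a hypothetical helper, not part of the original script.

```python
import os


def require_env(name: str) -> str:
    """Return a required environment variable, or exit with a clear message if it is missing or empty."""
    value = os.environ.get(name)
    if not value:
        # Doubled braces in the f-string produce the literal ${{ ... }} workflow syntax
        raise SystemExit(
            f"{name} is not set. On GitHub Actions, map the secret into the step with "
            f"env: {name}: ${{{{ secrets.{name} }}}}; locally, export it in your shell."
        )
    return value
```

In fetch_data.py this would be used as `api_token = require_env('NEWS_API_TOKEN')`. GitHub masks secret values in run logs, but it is still good practice never to print the token.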

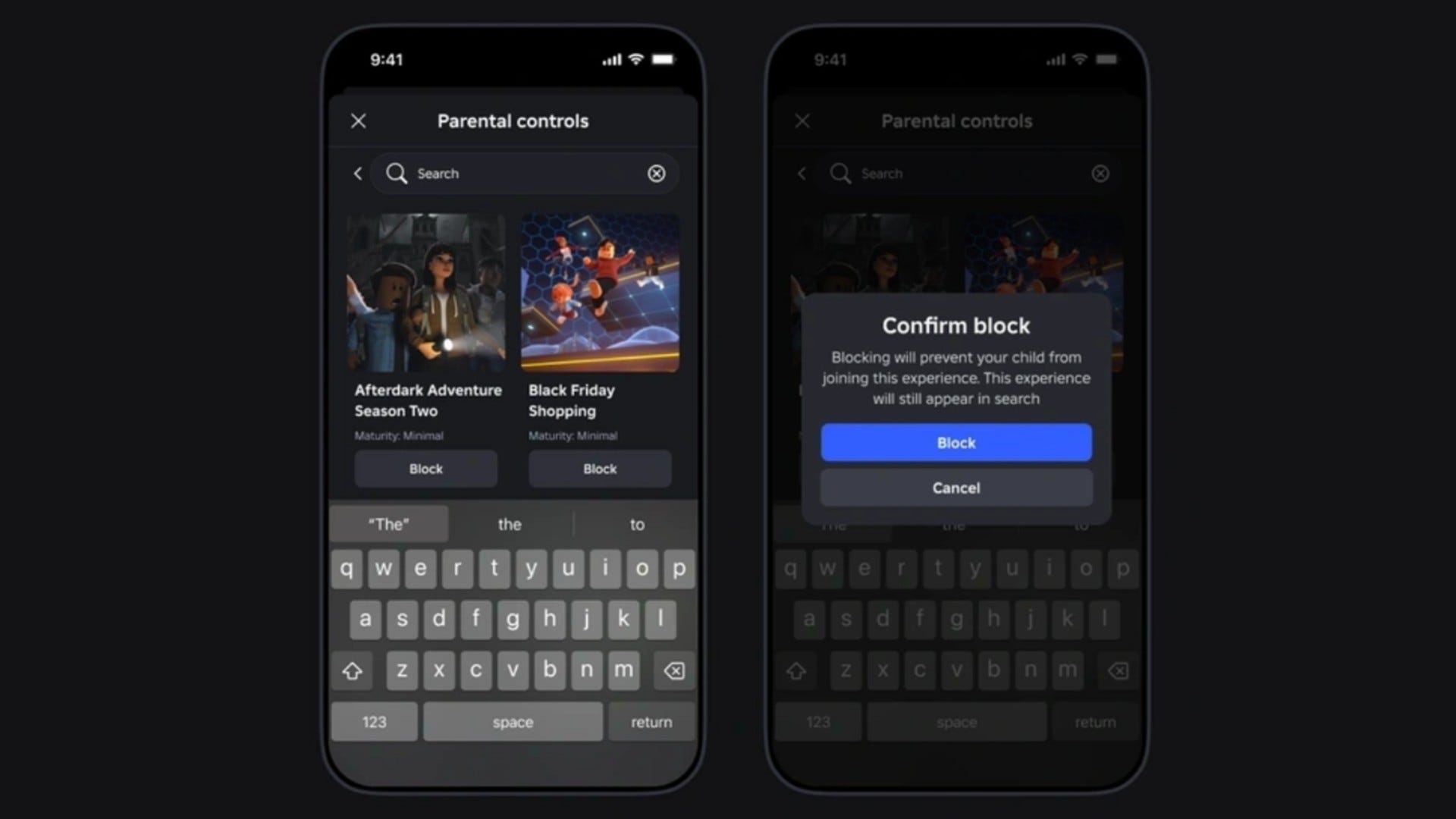

To set up a repository secret in GitHub:

- Go to your repository's Settings

- Navigate to Secrets and variables > Actions

- Click “New repository secret”

- Add your secret name and value

After creating the secret, we need to pass it to the workflow as an environment variable. For example, below we map ${{ secrets.NEWS_API_TOKEN }} to the `NEWS_API_TOKEN` environment variable in the “Run data pipeline” step.

```yaml
- name: Run data pipeline
  env:
    NEWS_API_TOKEN: ${{ secrets.NEWS_API_TOKEN }}
  run: |
    PREV_MONTH_START=$(date -d "`date +%Y%m01` -1 month" +%Y-%m-%d)
    PREV_MONTH_END=$(date -d "`date +%Y%m01` -1 day" +%Y-%m-%d)
    python code/fetch_data.py --start $PREV_MONTH_START --end $PREV_MONTH_END
```

We then update the Python script fetch_data.py to read the secret using `os.environ.get()`.

```python
import os

api_token = os.environ.get('NEWS_API_TOKEN')
```

Take-Home Message

This guide explores the implementation of GitHub Actions for building dynamic data pipelines, progressing through four different levels of workflow implementations:

- Level 1: Basic workflow setup with manual triggers and simple Python script execution.

- Level 2: Push workflow with development environment setup.

- Level 3: Scheduled workflow with dynamic date handling and data fetching with command-line arguments.

- Level 4: Secure pipeline workflow with secrets and environment variables management.

Each level builds upon the previous one, demonstrating how GitHub Actions can be effectively utilized in the data domain to streamline data solutions and speed up the development lifecycle.
