Writers Deserve Better: What’s Really Going On at HackerNoon?

Over the last year, a growing chorus of writers has raised concerns about HackerNoon’s editorial process. What was once seen as a promising platform for tech storytellers is now being criticized for inconsistent standards, dismissive communication, and an opaque reliance on AI tools to determine what gets published.

These issues aren’t isolated. Across forums, private chats, and social platforms, writers have shared remarkably similar experiences: polished, well-researched pieces being rejected with vague explanations like “AI-generated,” “too promotional,” or “lacking originality.” No detailed feedback. No specific suggestions. Just flat rejection.

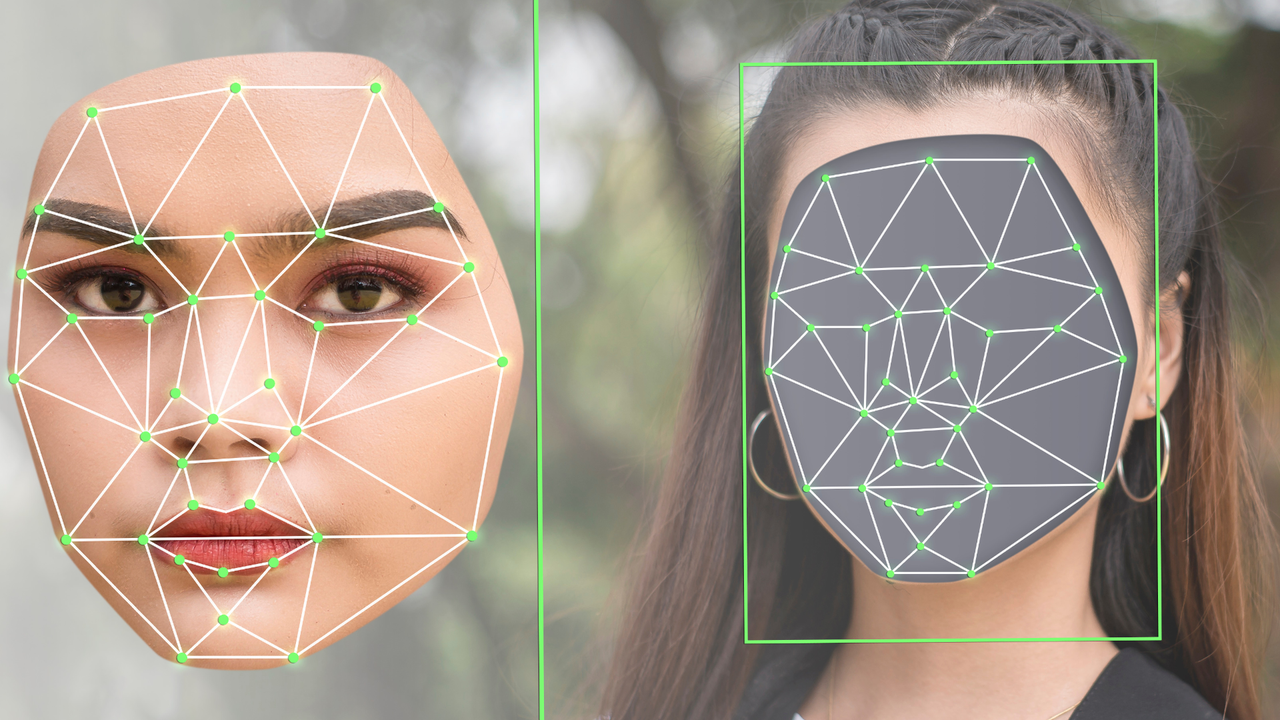

The most commonly cited tool in these cases is Pangram, a system HackerNoon reportedly uses to detect AI-generated content. That alone isn’t surprising—most large-scale platforms use automated systems to manage volume. But the irony is hard to miss: writers are being told their work “sounds like AI,” based on assessments made by actual AI.

Writers point out that in today’s tech landscape, almost every piece of writing is assisted in some way. Spell checkers, grammar tools, paraphrasers, clarity checkers—all are common. And none of these erase the human intent behind the words. Yet HackerNoon seems to treat signs of polish or structure as indicators of synthetic origin, rather than evidence of craft.

Things don’t improve when writers push for clarity. Some who have followed up have received responses that feel combative, even sarcastic. In one widely circulated exchange, an editor dismissed concerns by accusing the writer of trying to “buy brand credit,” suggesting the article was part of a PR stunt. The writer had asked for a clearer explanation of the rejection. Instead, they were met with suspicion and character judgment.

That’s where this issue becomes more than just editorial preference. When a platform stops engaging with content and starts interrogating contributor intent, it shifts from being a publication to a gatekeeper of perception. The focus moves away from quality and toward assumption.

Is the piece written too cleanly? Might be AI. Is it about someone notable? Must be PR. Is it framed too positively? Clearly reputation management.

And yet, in most of these rejections, there’s no effort to actually critique the content itself. No feedback on structure, tone, clarity, or insight. No path to revision or collaboration. Just accusations that shut down the conversation before it starts.

Some writers have even suggested that HackerNoon is moving toward a kind of editorial paywall, where certain kinds of content are only accepted if accompanied by brand sponsorships. Whether or not that’s formally true, the perception is growing—and the absence of transparency fuels it.

This all raises a bigger question: what should editorial judgment look like in an age where AI-assisted writing is increasingly normal? If tools are being used on both sides—by writers to refine and by editors to detect—then where does the line get drawn? And more importantly, who gets to draw it?

No one’s asking HackerNoon to abandon standards. Every platform has the right to protect its voice and mission. But when those standards become unpredictable, inconsistently applied, or tinged with hostility, the writers—many of whom are technologists donating their time and knowledge—start to feel like adversaries instead of contributors.

There’s a difference between curating content and policing contributors. And there’s a responsibility that comes with holding the editorial gate. If a piece lacks originality, say so. If it sounds like AI, show why. If it reads like PR, point to the parts that cross the line. But don’t hide behind algorithms and tone policing.

Writers are human. Most are open to feedback, even tough feedback, if it’s fair and honest. What they’re asking for isn’t special treatment—it’s clarity, respect, and a process that reflects the values HackerNoon says it believes in.

Right now, it feels like the platform is drifting away from that mission. The question is whether it’s willing to listen to the people who helped build its reputation in the first place.

Have you experienced something similar with HackerNoon or another tech blog? Share your thoughts in the comments.
