Why your MCP server fails (how to make a 100% working MCP server)

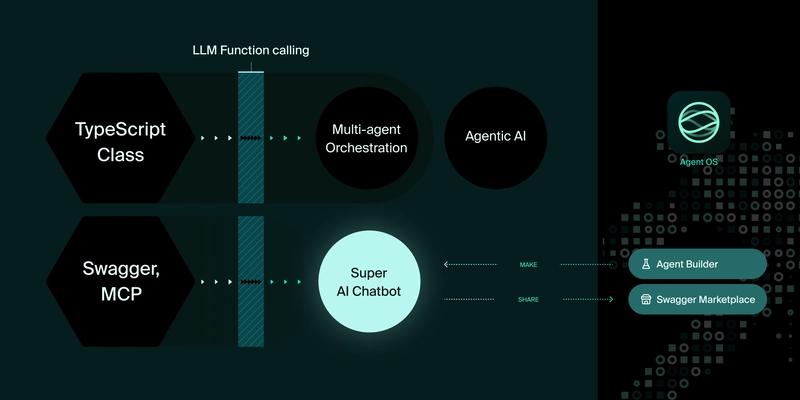


Summary

MCP (Model Context Protocol) has brought a new wave to the AI ecosystem, but recently there have been many criticisms regarding its stability and effectiveness.

However, if you decompose an MCP server into individual functions and implement function calling with the following three strategies, you can create an AI agent that works 100% of the time. Use function calling with MCP and Agentica.

- Agentica
  - Github Repository: https://github.com/wrtnlabs/agentica
  - Guide Documents: https://wrtnlabs.io/agentica
- Function calling strategies of Agentica
  - JSON Schema Specification: specs are different across LLM vendors
  - Validation Feedback: correct AI's mistakes on arguments composition
  - Selector Agent: filtering candidate functions to reduce context

1. Preface

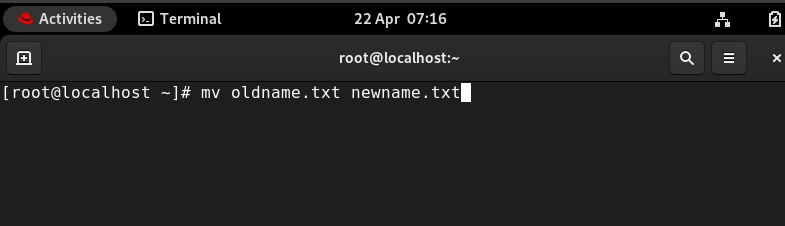

Github MCP on Claude Desktop breaks down too much

Recently, MCP (Model Context Protocol) has been gaining popularity in the AI agent ecosystem.

However, at the same time, AI early adopters often express dissatisfaction with MCP's instability. I regularly check posts on LinkedIn, and without exaggeration, I see at least 3 posts daily like the one below, suggesting MCP is premature:

> People talk about MCP as if it's a silver bullet, but it's extremely unstable. It frequently crashes, breaks down, and even the token costs are prohibitively expensive. It's simply not usable in enterprise settings.
>
> Perhaps when cheaper, larger, and smarter models emerge, the vision of MCP might be realized. For now, MCP is premature and overhyped.

100% working Github agent by function calling with Agentica

Surprisingly, by decomposing the functions of an MCP server and implementing AI Function Calling yourself, you can boost your AI application's stability to 100%. And it works perfectly well even with mini models like 8b that can run on personal laptops.

Agentica recommends function calling with MCP because you can efficiently call the functions provided by MCP servers, avoid hallucinations, and safely manage them using the following strategies:

- JSON Schema Specification: specs are different across LLM vendors

- Validation Feedback: correct AI's mistakes on arguments composition

- Selector Agent: filtering candidate functions to reduce context

And if you want to create an AI application that succeeds 100% of the time—not just a toy-like AI application limited to a single server with 30 function calls like Github MCP, but one that encompasses numerous servers and hundreds of functions—you should implement Document Driven Development.

Below is an AI agent for an e-commerce platform with 289 functions, and as you can see, it's working flawlessly without any errors. Let's create a 100% successful AI application with MCP function calling and Document Driven Development using Agentica.

100% working e-commerce agent of 289 functions with 8b mini model

2. Function Calling

2.1. JSON Schema Specification

- Documents
  - https://github.com/samchon/openapi
  - https://wrtnlabs.io/agentica/docs/core/vendor/#schema-specification
- JSON Schema Specifications
  - IChatGptSchema.ts: OpenAI ChatGPT
  - IClaudeSchema.ts: Anthropic Claude
  - IDeepSeekSchema.ts: High-Flyer DeepSeek
  - IGeminiSchema.ts: Google Gemini
  - ILlamaSchema.ts: Meta Llama

JSON schemas defined in MCP must be converted to match the target AI vendor specifications.

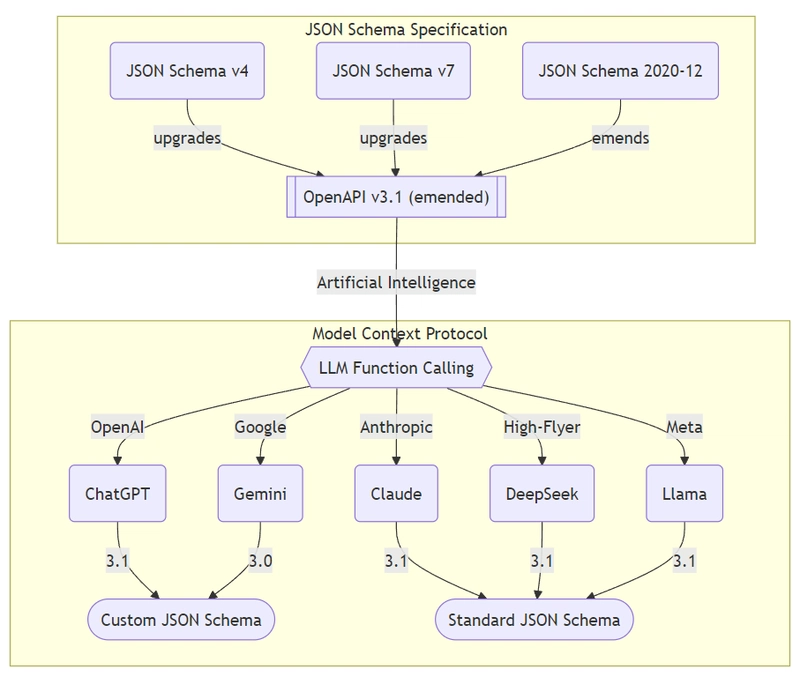

Did you know? JSON schema specifications differ across AI vendors. The JSON schema used by OpenAI, Anthropic Claude, and Google Gemini all have different specifications.

However, the Model Context Protocol has no constraints on JSON schema specifications. In principle, it can use all versions of JSON schemas that exist in the world. But AI vendors each have their own JSON schema specifications.

This is where the problem arises.

```typescript
{
  name: "divide",
  inputSchema: {
    x: {
      type: "number",
    },
    y: {
      anyOf: [ // not supported in Gemini
        {
          type: "number",
          exclusiveMaximum: 0, // not supported in OpenAI and Gemini
        },
        {
          type: "number",
          exclusiveMinimum: 0, // not supported in OpenAI and Gemini
        },
      ],
    },
  },
  description: "Calculate x / y",
}
```

My MCP server works well with Claude but not with OpenAI.

- This is because you're using JSON schema features not supported by OpenAI
  - `{ type: "string", format: "uuid" }`
  - `{ type: "integer", minimum: 0 }`

Why does my MCP server work with OpenAI and Claude but not with Gemini?

- Because you're using JSON schema features not supported by Gemini
  - `{ anyOf: [ { type: "boolean" }, { type: "integer" } ] }`
  - `{ $ref: "#/$defs/Recursive" }`

Claude supports the latest JSON schema specification, the 2020-12 draft version. However, this specification contains numerous redundant, ambiguous, and vague expressions that must be corrected for proper use.

OpenAI and Gemini have their own unique JSON schema specifications. They didn't follow the standard but created their own JSON schema specs. Unfortunately, rather than enhancing the expressiveness of JSON schema specs, they've largely stripped away features from the standard JSON schema specifications.

Adding to the confusion is the Server SDK that Anthropic provides for the Model Context Protocol. This Server SDK (via zod-to-json-schema) generates JSON schemas based on the much older draft-07 version.

Now do you understand why your MCP server fails before it can even start?

Only after decomposing your MCP server into function units, converting their JSON schemas to match your target AI vendor, and correcting them can you create a properly functioning AI application.

Our Agentica goes through the following JSON schema conversion process via @samchon/openapi. When creating AI applications based on MCP, you must go through a similar JSON schema conversion process:

- Convert the input JSON schema to the 2020-12 draft version
  - Internally referred to as OpenAPI v3.1 emended
  - OpenApi.IJsonSchema
- Remove all redundant/ambiguous/vague expressions to correct it
- Convert to a JSON schema model suitable for the target AI vendor
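To make the last step more concrete, here is a minimal sketch of one possible downgrade: keywords that a target vendor rejects are removed from the schema and preserved as plain text in the `description`, so the model can still read the constraint. This is not how @samchon/openapi is implemented. The `JsonSchema` type, the `downgradeSchema` function and the `UNSUPPORTED_KEYWORDS` list are all hypothetical names made up for illustration, and the sketch only recurses into `anyOf`.

```typescript
// Hypothetical, simplified JSON schema shape; real schemas have many more keywords.
type JsonSchema = {
  type?: string;
  description?: string;
  anyOf?: JsonSchema[];
  [keyword: string]: unknown;
};

// Assumed list of keywords that the target vendor rejects (illustrative only).
const UNSUPPORTED_KEYWORDS: string[] = [
  "minimum",
  "maximum",
  "exclusiveMinimum",
  "exclusiveMaximum",
  "format",
];

// Remove unsupported keywords and keep them as text notes in the description.
function downgradeSchema(
  schema: JsonSchema,
  unsupported: string[] = UNSUPPORTED_KEYWORDS,
): JsonSchema {
  const output: JsonSchema = {};
  const notes: string[] = [];
  for (const [key, value] of Object.entries(schema)) {
    if (key === "anyOf" && Array.isArray(value)) {
      // Convert every branch of the union recursively.
      output.anyOf = (value as JsonSchema[]).map((child) =>
        downgradeSchema(child, unsupported),
      );
    } else if (unsupported.includes(key)) {
      // Drop the keyword, but remember it as a human-readable note.
      notes.push(`@${key} ${JSON.stringify(value)}`);
    } else {
      output[key] = value;
    }
  }
  if (notes.length > 0) {
    // Append the notes to the existing description, if there is one.
    output.description = [schema.description, ...notes]
      .filter(Boolean)
      .join("\n");
  }
  return output;
}
```

Applied to the `y` property of the `divide` example, each branch keeps `type: "number"` and gains a description note in place of the `exclusiveMaximum` or `exclusiveMinimum` keyword. A vendor that rejects `anyOf` itself would need an additional transformation that this sketch does not attempt.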

```typescript
import { Agentica, assertMcpController } from "@agentica/core";
import { Client } from "@modelcontextprotocol/sdk/client/index.js";
import { StdioClientTransport } from "@modelcontextprotocol/sdk/client/stdio.js";
import OpenAI from "openai";

// Connect to the MCP server over stdio
const client = new Client({
  name: "shopping",
  version: "1.0.0",
});
await client.connect(new StdioClientTransport({
  command: "npx",
  args: ["-y", "@wrtnlabs/shopping-mcp"],
}));

// Register the MCP server's functions as a controller of the agent
const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: new OpenAI({ apiKey: "********" }),
    model: "gpt-4o-mini",
  },
  controllers: [
    await assertMcpController({
      name: "shopping",
      model: "chatgpt",
      client,
    }),
  ],
});
await agent.conversate("I wanna buy a Macbook");
```

2.2. Validation Feedback

| Name | Status |
|---|---|
| ObjectConstraint | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectFunctionSchema | 2️⃣2️⃣4️⃣2️⃣2️⃣2️⃣2️⃣2️⃣5️⃣2️⃣ |
| ObjectHierarchical | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣2️⃣1️⃣1️⃣2️⃣ |
| ObjectJsonSchema | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectSimple | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectUnionExplicit | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectUnionImplicit | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ShoppingCartCommodity | 1️⃣2️⃣2️⃣3️⃣1️⃣1️⃣4️⃣2️⃣1️⃣2️⃣ |
| ShoppingOrderCreate | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ShoppingOrderPublish | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣❌1️⃣1️⃣1️⃣ |
| ShoppingSaleDetail | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ShoppingSalePage | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |

https://wrtnlabs.io/agentica/docs/concepts/function-calling/#orchestration-strategy

Validation feedback corrects mistakes in AI composed arguments.

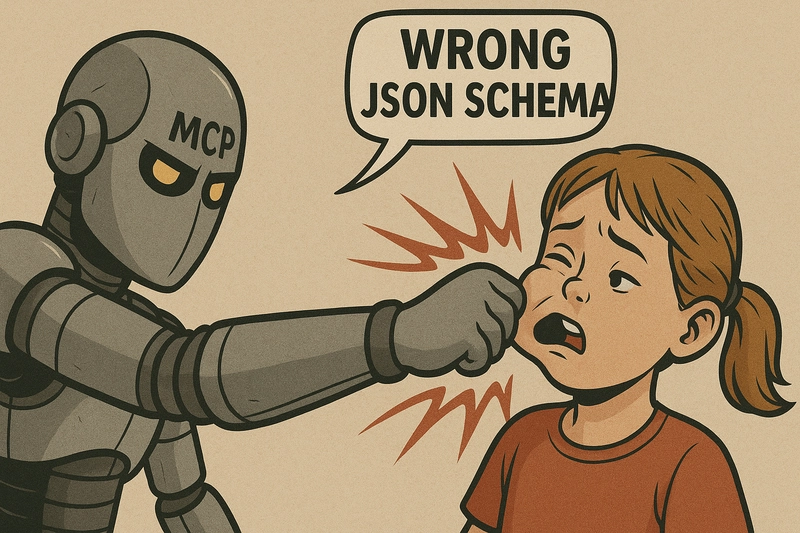

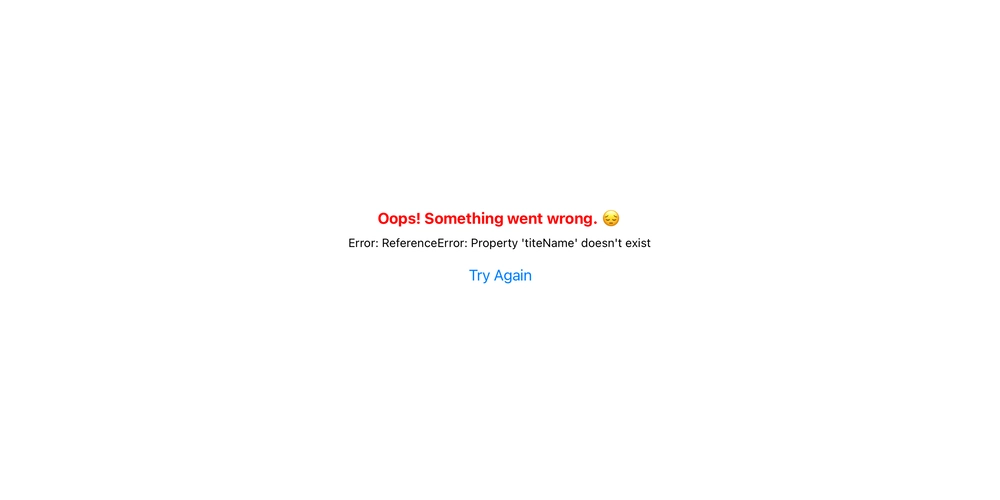

When an AI model calls a function, whether through MCP or plain function calling, it often composes arguments (parameter values) of incorrect types. This is another major reason why your MCP server and AI application might break down.

To solve this, when an AI agent composes parameters of the wrong type for a specific function, you need to provide specific and detailed information about what went wrong and how, then request the AI agent to reconstruct the parameter values.

Agentica calls this the validation feedback strategy, and it's one of the core strategies for increasing the success rate of function calling. The table above shows how many attempts it takes for the AI to compose parameters successfully in each scenario, measured on the OpenAI o3-mini model. Any number other than 1 indicates that validation feedback was performed that many times.

Among the examples above, ObjectFunctionSchema and ShoppingCartCommodity represent fairly complex types, and we can see that success is unlikely without validation feedback. The second reason your MCP server might be failing is the absence of such a validation feedback strategy.

```typescript
export interface IMcpLlmFunction<Model extends ILlmSchema.Model> {
  name: string;
  description?: string | undefined;
  parameters: ILlmSchema.IParameters<Model>;
  validate: (args: unknown) => IValidation<unknown>;
}
```

IMcpLlmFunction type of @samchon/openapi
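The feedback loop described above can be sketched roughly as follows. This is a simplified illustration, not Agentica's actual implementation: the `Validation` and `ValidationError` shapes, the `Composer` callback (a stand-in for the LLM call) and `callWithValidationFeedback` are hypothetical names. The point is only that each failed attempt returns precise error details to the model before it tries again.

```typescript
// Illustrative error and result shapes; the names are assumptions of this sketch.
type ValidationError = { path: string; expected: string; value: unknown };
type Validation = { success: boolean; data?: unknown; errors: ValidationError[] };

// Hypothetical stand-in for an LLM call: receives the errors of the previous
// attempt (or null on the first attempt) and returns newly composed arguments.
type Composer = (feedback: ValidationError[] | null) => Promise<unknown>;

async function callWithValidationFeedback(
  compose: Composer,
  validate: (args: unknown) => Validation,
  maxAttempts: number = 3,
): Promise<{ args: unknown; attempts: number }> {
  let feedback: ValidationError[] | null = null;
  for (let attempt = 1; attempt <= maxAttempts; ++attempt) {
    const args = await compose(feedback);
    const result = validate(args);
    if (result.success) return { args: result.data, attempts: attempt };
    // Tell the model exactly which values were wrong and what was expected.
    feedback = result.errors;
  }
  throw new Error(`Arguments still invalid after ${maxAttempts} attempts`);
}

// Example validator for the "divide" function: x and y must be numbers,
// and y must not be zero.
function validateDivide(args: unknown): Validation {
  const errors: ValidationError[] = [];
  const input = (
    typeof args === "object" && args !== null ? args : {}
  ) as Record<string, unknown>;
  if (typeof input.x !== "number")
    errors.push({ path: "$input.x", expected: "number", value: input.x });
  if (typeof input.y !== "number" || input.y === 0)
    errors.push({ path: "$input.y", expected: "number (non-zero)", value: input.y });
  return { success: errors.length === 0, data: input, errors };
}
```

In the benchmark table above, a cell showing a number greater than 1 corresponds to a loop like this one running more than once before the arguments passed validation.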

2.3. Selector Agent

https://wrtnlabs.io/agentica/docs/concepts/function-calling/#validation-feedback

Select candidate functions to reduce context.

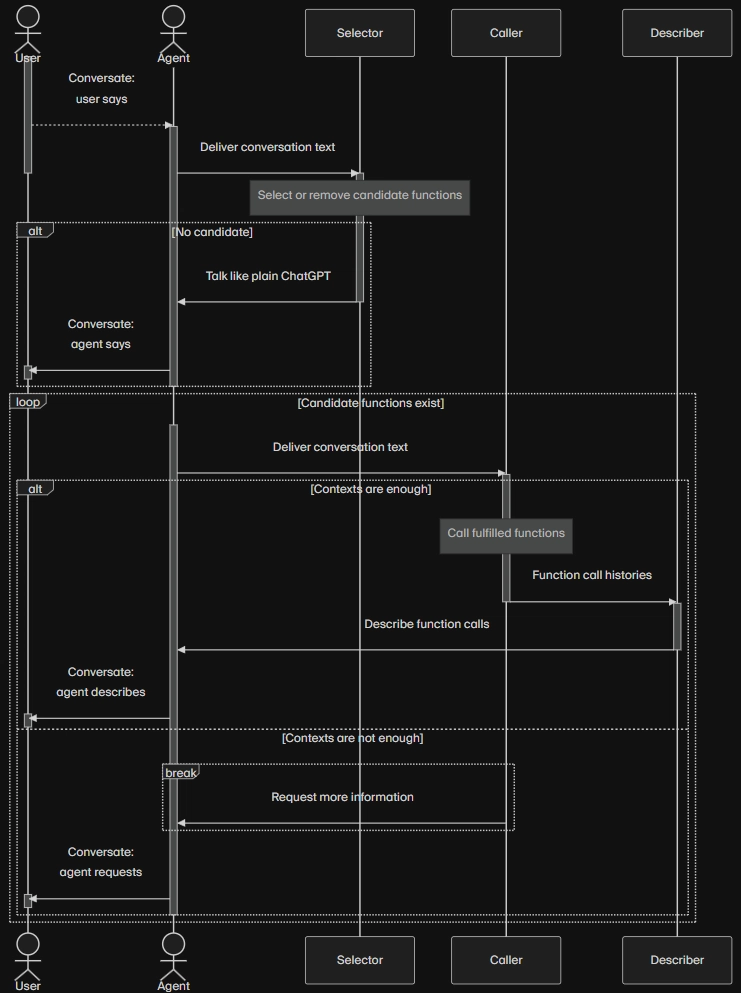

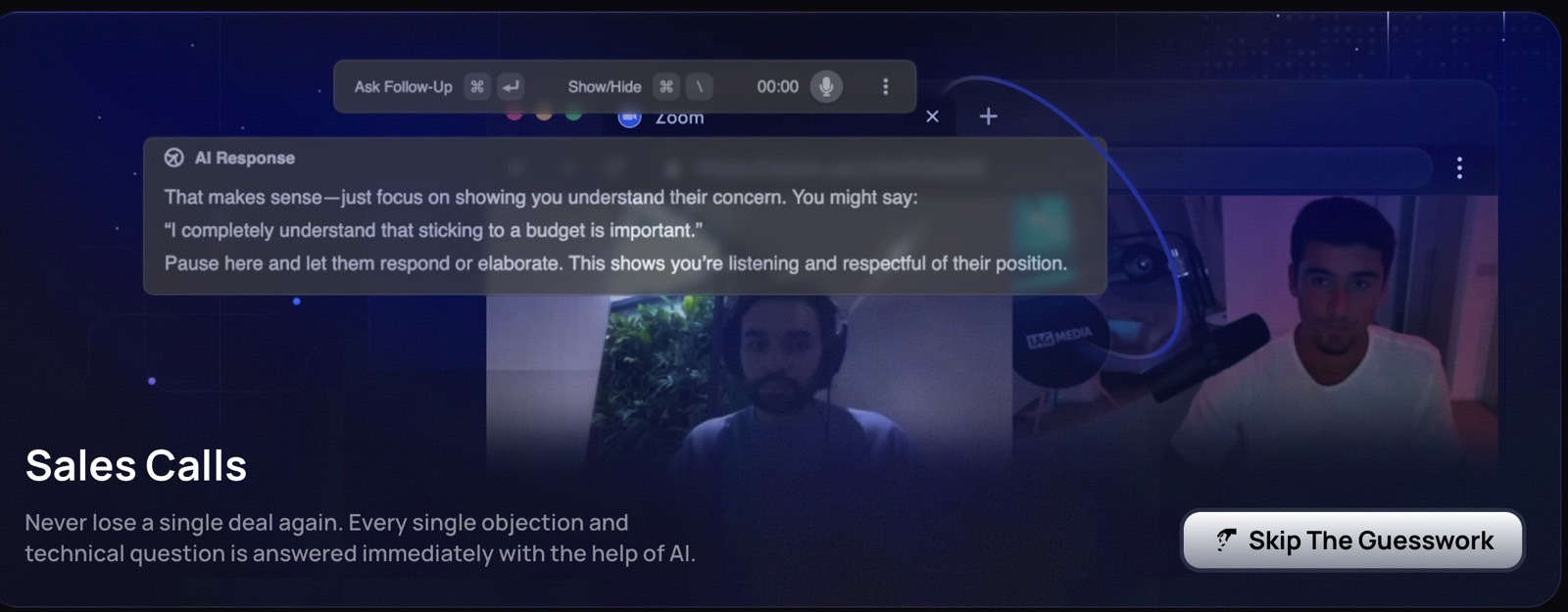

Looking at the OpenAI or Claude APIs, you'll notice a field called mcp_servers designed to take an entire MCP server. When you feed an entire MCP server's documentation to an AI agent at once, the context size and token count become enormous.

This is why even with the Github MCP server, which has only 30 functions, Claude Desktop keeps crashing or producing hallucinations before accomplishing anything. If you had 300 functions instead of 30, the document size would reach about 8 MB and 210,000 lines of code. This would be impossible to process as an agent configuration.

```python
agent = Agent(
    name="Assistant",
    instructions="Use the tools to achieve the task",
    mcp_servers=[mcp_server_1, mcp_server_2],
)
```

To address this issue, Agentica internally uses a selector agent to filter candidate functions for function calling. The selector agent gathers the names and descriptions (IMcpLlmFunction.description) of each function and performs RAG (Retrieval Augmented Generation) searches to check if the user's utterance implies calling a specific function. If it does, the function is added to the candidate list.

This way, the Caller Agent typically has 0-2 target functions, rarely exceeding 4-5, which dramatically reduces context and token consumption. It avoids hallucinations caused by the massive context consumption that occurs when pouring an entire MCP server into mcp_servers.

Using a Selector Agent to filter candidate functions and save on context consumption is the third strategy to make your MCP server 100% successful.
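As a rough illustration of the filtering idea, the sketch below ranks functions by how many words of the user's utterance appear in their name or description, and keeps only the best matches. It is a deliberately naive stand-in: the real selector relies on the LLM rather than on keyword overlap, and `FunctionSummary`, `tokenize` and `selectCandidates` are hypothetical names introduced here.

```typescript
// Minimal function metadata: the name/description pair a selector would read.
interface FunctionSummary {
  name: string;
  description: string;
}

// Split text into lowercase word tokens, ignoring very short words.
function tokenize(text: string): Set<string> {
  return new Set(
    text
      .toLowerCase()
      .split(/[^a-z0-9]+/)
      .filter((word) => word.length > 2),
  );
}

// Naive stand-in for a selector: score each function by word overlap with
// the utterance, drop functions with no overlap, and keep the top few.
function selectCandidates(
  utterance: string,
  functions: FunctionSummary[],
  limit: number = 3,
): FunctionSummary[] {
  const query = Array.from(tokenize(utterance));
  return functions
    .map((func) => {
      const words = tokenize(`${func.name} ${func.description}`);
      const score = query.filter((word) => words.has(word)).length;
      return { func, score };
    })
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((entry) => entry.func);
}
```

Only the schemas of the selected candidates would then be handed to the caller, instead of the schemas of every function on the server.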

3. Document Driven Development

```typescript
@Controller(`shoppings/customers/sales`)
export class ShoppingCustomerSaleController {
  /**
   * List up every summarized sales.
   *
   * List up every {@link IShoppingSale.ISummary summarized sales}.
   *
   * As you can see, returned sales are summarized, not detailed. It does not
   * contain the SKU (Stock Keeping Unit) information represented by the
   * {@link IShoppingSaleUnitOption} and {@link IShoppingSaleUnitStock} types.
   * If you want to get such detailed information of a sale, use
   * `GET /shoppings/customers/sales/{id}` operation for each sale.
   *
   * > If you're an A.I. chatbot, and the user wants to buy or compose
   * > {@link IShoppingCartCommodity shopping cart} from a sale, please
   * > call the `GET /shoppings/customers/sales/{id}` operation at least once
   * > to the target sale to get detailed SKU information about the sale.
   * > It needs to be run at least once for the next steps.
   *
   * @param input Request info of pagination, searching and sorting
   * @returns Paginated sales with summarized information
   * @tag Sale
   *
   * @author Samchon
   */
  @TypedRoute.Patch()
  public index(
    @ShoppingCustomerAuth() customer: IShoppingCustomer,
    @TypedBody() input: IShoppingSale.IRequest,
  ): Promise<IPage<IShoppingSale.ISummary>>;

  /**
   * Get a sale with detailed information.
   *
   * Get a {@link IShoppingSale sale} with detailed information including
   * the SKU (Stock Keeping Unit) information represented by the
   * {@link IShoppingSaleUnitOption} and {@link IShoppingSaleUnitStock} types.
   *
   * > If you're an A.I. chatbot, and the user wants to buy or compose a
   * > {@link IShoppingCartCommodity shopping cart} from a sale, please call
   * > this operation at least once to the target sale to get detailed SKU
   * > information about the sale.
   * >
   * > It needs to be run at least once for the next steps. In other words,
   * > if your A.I. agent has called this operation to a specific sale, you
   * > don't need to call this operation again for the same sale.
   * >
   * > Additionally, please do not summarize the SKU information. Just show
   * > every option and stock in the sale with detailed information.
   *
   * @param id Target sale's {@link IShoppingSale.id}
   * @returns Detailed sale information
   * @tag Sale
   *
   * @author Samchon
   */
  @TypedRoute.Get(":id")
  public at(
    @props.AuthGuard() actor: Actor,
    @TypedParam("id") id: string & tags.Format<"uuid">,
  ): Promise<IShoppingSale>;
}
```

https://github.com/samchon/shopping-backend

The documentation level of functions and types determines the quality of your AI application.

If you want to create an enterprise-level AI application using MCP, not just a toy project—an AI application with hundreds of functions provided by MCP servers—you need to pay careful attention to documenting each function.

Consider how the Selector agent should determine which function to call in which cases, and provide detailed explanations of each function's purpose. If there are prerequisite execution conditions between functions, include them in the IMcpLlmFunction.description. The AI agent will execute the functions in the correct order on its own.

Also, document each DTO type and its properties in detail. Just defining types isn't enough. Your documentation should explain how the AI agent should construct each property from the user's utterance.

@samchon/shopping-backend is an API server with 289 functions, likely more than any server you've encountered. It also demonstrates best practices for documentation for AI application development, with a function calling success rate approaching 100%.

Instead of building a Workflow Agent according to MCP philosophy, list the functions that are appropriate for your AI application's purpose. Focus on thorough documentation for each function and type. Your AI application will become scalable, flexible, and easy to mass-produce.

```typescript
import { tags } from "typia";

/**
 * Restriction information of the coupon.
 *
 * @author Samchon
 */
export interface IShoppingCouponRestriction {
  /**
   * Access level of coupon.
   *
   * - public: possible to find from public API
   * - private: unable to find from public API
   *   - arbitrarily assigned by the seller or administrator
   *   - issued from one-time link
   */
  access: "public" | "private";

  /**
   * Exclusivity or not.
   *
   * An exclusive discount coupon refers to a discount coupon that has an
   * exclusive relationship with other discount coupons and can only be
   * used alone. That is, when an exclusive discount coupon is used, no
   * other discount coupon can be used for the same
   * {@link IShoppingOrder order} or {@link IShoppingOrderGood good}.
   *
   * Please note that this exclusive attribute is a very different concept
   * from multiplicative, which means whether the same coupon can be
   * multiplied and applied to multiple coupons of the same order, so
   * please do not confuse them.
   */
  exclusive: boolean;

  /**
   * Limited quantity issued.
   *
   * If there is a limit to the quantity issued, it becomes impossible
   * to issue tickets exceeding this value.
   *
   * In other words, the concept of N coupons being issued on
   * a first-come, first-served basis is created.
   */
  volume: null | (number & tags.Type<"uint32">);

  /**
   * Limited quantity issued per person.
   *
   * As a limit to the total amount of issuance per person, it is
   * common to assign 1 to limit duplicate issuance to the same citizen,
   * or to use the NULL value to set no limit.
   *
   * Of course, by assigning a value of N, the total amount issued
   * to the same citizen can be limited.
   */
  volume_per_citizen: null | (number & tags.Type<"uint32">);

  /**
   * Expiration day(s) value.
   *
   * The concept of expiring N days after a discount coupon ticket is issued.
   *
   * Therefore, customers must use the ticket within N days, if possible,
   * from the time it is issued.
   */
  expired_in: null | (number & tags.Type<"uint32">);

  /**
   * Expiration date.
   *
   * A concept that expires after YYYY-MM-DD after a discount coupon ticket
   * is issued.
   *
   * Double restrictions are possible with expired_in, of which the one
   * with the shorter expiration date is used.
   */
  expired_at: null | (string & tags.Format<"date-time">);
}
```

4. TypeScript Class Function Calling

```typescript
import { Agentica } from "@agentica/core";
import OpenAI from "openai";
import typia from "typia";

import { BbsArticleService } from "./services/BbsArticleService";

const agent = new Agentica({
  vendor: {
    model: "gpt-4o-mini",
    api: new OpenAI({ apiKey: "********" }),
  },
  controllers: [
    {
      protocol: "class",
      application: typia.llm.application<BbsArticleService, "chatgpt">(),
      execute: new BbsArticleService(),
    },
  ],
});
await agent.conversate("I want to write an article.");
```

It doesn't have to be MCP. You can call functions directly on TypeScript classes.

You've seen what strategies Agentica employs to make MCP 100% successful. But Agentica supports protocols beyond MCP, including TypeScript Class.

If the functions you want to provide to your AI application don't require a server and can be implemented with just a TypeScript Class, go for it.
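The `BbsArticleService` imported in the example above is not shown in this article. The sketch below is a hypothetical, in-memory version written only to illustrate the kind of class one might expose: the `IBbsArticle` shape, the method names and the single-object parameter style are all assumptions, not the actual service. As with MCP functions, the documentation comments are what the agent would rely on to decide when and how to call each method.

```typescript
// Hypothetical article type, for illustration only.
interface IBbsArticle {
  id: string;
  title: string;
  body: string;
  created_at: string;
}

export class BbsArticleService {
  // In-memory store; a real service would use a database.
  private readonly articles: IBbsArticle[] = [];

  /**
   * Create a new article.
   *
   * Writes a new article and archives it into the in-memory store.
   *
   * @param props Properties containing the title and body of the article
   * @returns Newly created article
   */
  public create(props: { input: { title: string; body: string } }): IBbsArticle {
    const article: IBbsArticle = {
      id: String(this.articles.length + 1),
      title: props.input.title,
      body: props.input.body,
      created_at: new Date().toISOString(),
    };
    this.articles.push(article);
    return article;
  }

  /**
   * List every article.
   *
   * @returns All articles in creation order
   */
  public index(): IBbsArticle[] {
    return [...this.articles];
  }
}
```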

5. OpenAPI Function Calling

```typescript
import { Agentica } from "@agentica/core";
import { HttpLlm, OpenApi } from "@samchon/openapi";
import OpenAI from "openai";
import typia from "typia";

const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: new OpenAI({ apiKey: "*****" }),
    model: "gpt-4o-mini",
  },
  controllers: [
    {
      protocol: "http",
      name: "shopping",
      // Build function calling schemas from the backend's Swagger document
      application: HttpLlm.application({
        model: "chatgpt",
        document: await fetch(
          "https://shopping-be.wrtn.ai/editor/swagger.json",
        ).then((r) => r.json()),
      }),
      // Where and how to actually send the HTTP requests
      connection: {
        host: "https://shopping-be.wrtn.ai",
        headers: {
          Authorization: "Bearer *****",
        },
      },
    },
  ],
});
await agent.conversate("I want to buy a MacBook Pro");
```

Don't migrate to MCP unnecessarily. OpenAPI is fine as it is.

You've seen what strategies Agentica employs to make MCP 100% successful. But Agentica also supports Swagger/OpenAPI protocol.

If you're considering migrating to MCP to develop an AI application based on your existing backend server, there's no need to do so. Just use your backend server's OpenAPI documentation (swagger.json).

6. Agentica

- Github Repository: https://github.com/wrtnlabs/agentica

- Guide Documents: https://wrtnlabs.io/agentica

Agentica is a TypeScript AI Agent framework specialized in Function Calling.

It incorporates all the MCP enhancement strategies discussed in this article, and also supports Function Calling for TypeScript Class and OpenAPI.

It's probably the easiest and most reliable AI framework available today, so please check it out.
