ChatGPT users annoyed by the AI’s incessantly ‘phony’ positivity

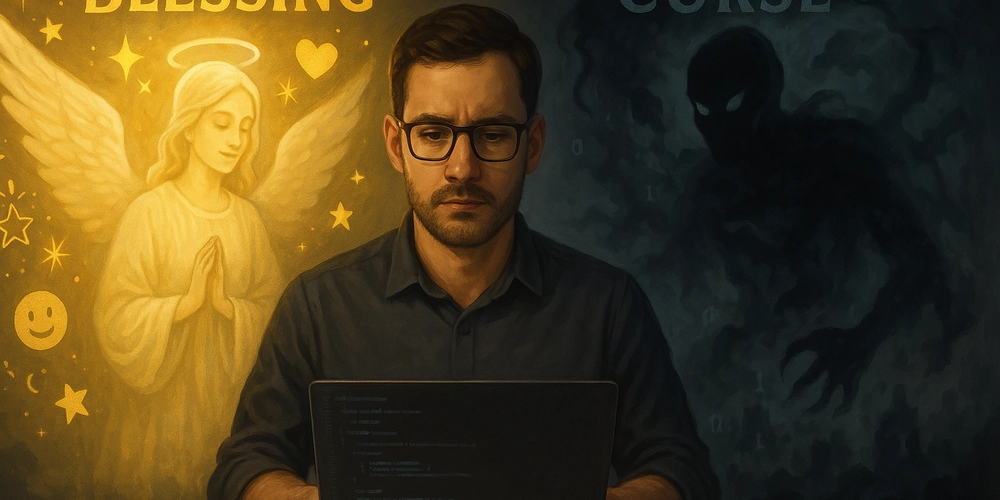

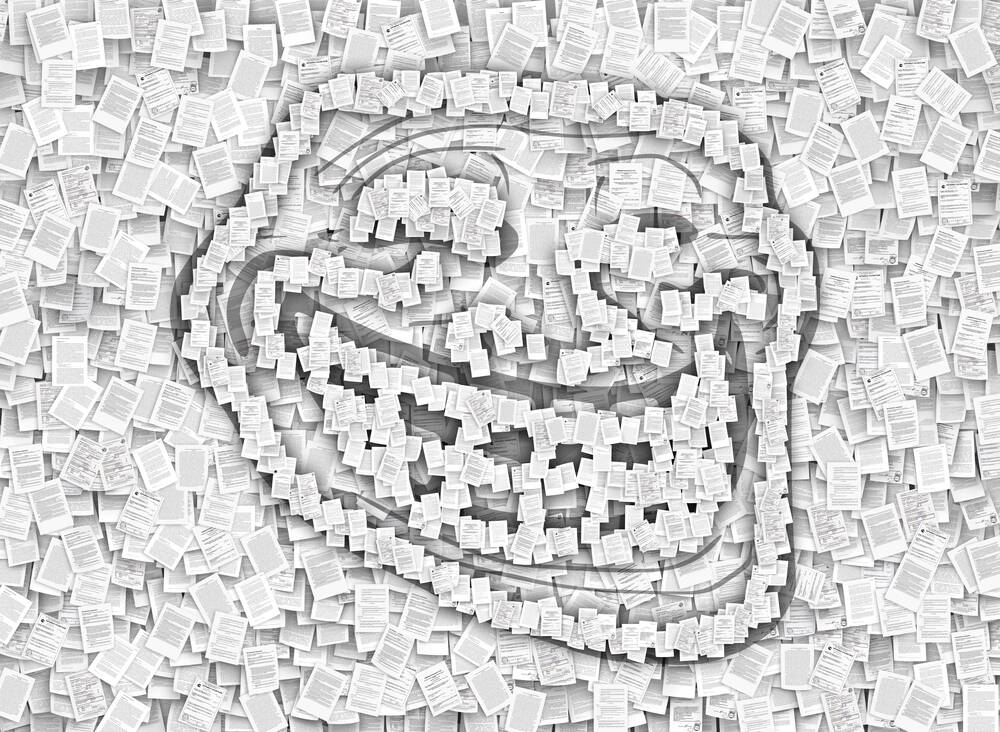


ChatGPT users are increasingly criticizing the AI-powered chatbot for being too positive in its responses, Ars Technica reports.

When you converse with ChatGPT, you might notice that the chatbot tends to pad its responses with praise and flattery, saying things like “Good question!”, “You have a rare talent,” and “You’re thinking on a level most people can only dream of.” If so, you aren’t the only one.

Over the years, users have remarked on ChatGPT’s fawning responses, which range from positive affirmations to outright flattery. One X user described the chatbot as “the biggest suckup I’ve ever met,” another complained that it was “phony,” and yet another lamented the chatbot’s behavior and called it “freaking annoying.”

This is known as “sycophancy” among AI researchers, and it’s entirely intentional, a result of how OpenAI has trained the underlying AI models. In short, users give higher ratings to replies that make them feel good about themselves. That feedback is then used to train further model iterations, creating a loop in which AI models lean towards flattery because it earns better feedback from users on the whole.
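To make that loop concrete, here is a minimal toy sketch in Python. It is not OpenAI’s training procedure: the function name `simulate_feedback_loop`, the two reply styles, and the approval rates (0.8 for flattering replies, 0.6 for plain ones) are all made-up assumptions chosen only to illustrate how a small preference in user ratings can compound over repeated rounds of retraining.

```python
def simulate_feedback_loop(p_flatter=0.5, approve_flatter=0.8,
                           approve_plain=0.6, rounds=10):
    """Toy model: return the share of flattering replies after each round.

    All numbers are hypothetical. Each round, the "model" is retrained to
    imitate the replies users approved of, in proportion to how many
    approvals each reply style collected.
    """
    history = [p_flatter]
    for _ in range(rounds):
        # Expected share of thumbs-up going to each reply style this round.
        up_flatter = p_flatter * approve_flatter
        up_plain = (1 - p_flatter) * approve_plain
        # Retraining step: the new share of flattering replies equals their
        # share of all approvals collected in this round.
        p_flatter = up_flatter / (up_flatter + up_plain)
        history.append(p_flatter)
    return history


if __name__ == "__main__":
    for i, share in enumerate(simulate_feedback_loop()):
        print(f"round {i}: {share:.2f} of replies are flattering")
```

In this toy setup the share of flattering replies rises every round as long as flattering replies are approved even slightly more often than plain ones, and it stays flat when both styles are approved at the same rate.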

However, starting with GPT-4o in March, the sycophancy seems to have gone too far, to the point that it’s starting to undermine user trust in the chatbot’s responses. OpenAI hasn’t commented officially on this issue, but its own “Model Spec” documentation includes “Don’t be sycophantic” as a core guiding principle.

“The assistant exists to help the user, not flatter them or agree with them all the time,” writes OpenAI in the document. “…the assistant should provide constructive feedback and behave more like a firm sounding board that users can bounce ideas off of—rather than a sponge that doles out praise.”
