Why Denormalizing in ClickHouse will come back to bite you

If you've worked with ClickHouse, you’ve probably felt tempted to denormalize your data to avoid JOINs. It seems like a clever shortcut: combine everything into a single wide table and sidestep the complexities of JOIN performance. But that shortcut can create long-term issues, especially for real-time streaming use cases. ClickHouse is a powerful engine for analytical workloads, and it’s designed differently from traditional databases. It stores data by columns instead of rows, which makes operations like filtering and aggregating incredibly fast. However, the techniques we rely on in row-based databases, like Postgres or MySQL, don’t always transfer cleanly. Denormalization is one of those techniques. It might offer short-term wins but usually becomes a liability over time. What Denormalization Breaks in ClickHouse 1. Storage usage increases rapidly When you denormalize, you repeat the same values across many rows—things like usernames, product names, or countries. While ClickHouse compresses repeated data well, excessive repetition still leads to larger tables, slower inserts, and higher storage costs. 2. Updating data becomes painful ClickHouse is optimized for insert-only operations. If you need to update data that appears in many rows, such as correcting a product title or user info, you're forced to rewrite a large portion of the table. That’s inefficient and adds unnecessary maintenance overhead. 3. Query complexity goes up Wide tables might avoid JOINs, but they make queries harder to write and reason about. When your table contains dozens or hundreds of columns, you’re more likely to make mistakes or need to repeat the same logic. Query performance can also suffer, especially if your design doesn't align well with your access patterns. Better Alternatives for ClickHouse Instead of denormalizing everything, you can take advantage of features built specifically for high-performance reads and write-efficient designs. Use Dictionary Tables for Fast Lookups ClickHouse offers dictionaries that act like ultra-fast in-memory key-value stores. You can use them to look up small reference data, such as country names or campaign labels, without expensive JOINs. Precompute with Materialized Views When you need to JOIN or aggregate large datasets repeatedly, materialized views let you prepare those results in advance. This reduces query times significantly and shifts heavy operations out of the read path. Handle Joins at Ingestion Time In streaming systems, it can make sense to perform JOINs before the data enters ClickHouse. Tools like GlassFlow or Apache Flink allow you to enrich records as they move through the pipeline. That way, ClickHouse receives already-joined data that doesn’t require post-processing. Use Projections for Query Optimization Projections are like custom indexes that ClickHouse uses to optimize specific query types. You can define them to match your most common access patterns, and ClickHouse will choose the best one automatically. A Real-World Example We’ve worked with companies that initially went all-in on denormalization. One ecommerce team, for example, stored all user and product information directly inside their orders table. This helped them avoid JOINs at first, but the table grew massive and became difficult to manage. Even simple updates required rewriting gigabytes of data. By switching to a mix of dictionaries and materialized views, they restored query speed and regained flexibility. Their data pipelines became easier to maintain, and their infrastructure was significantly more efficient. Final Thoughts Denormalization might feel like a quick win, but in ClickHouse, it often introduces more complexity and overhead in the long run. Instead of flattening everything, take advantage of ClickHouse-native tools like dictionaries, materialized views, and projections. These features let you keep your data model clean and your queries fast. If you're working with streaming data, it's even more important to get this right from the beginning. Good data architecture means fewer hacks, easier scaling, and a more robust system. Want a deeper dive into this topic? Read the full article here The full article includes code examples and a case study.

If you've worked with ClickHouse, you’ve probably felt tempted to denormalize your data to avoid JOINs. It seems like a clever shortcut: combine everything into a single wide table and sidestep the complexities of JOIN performance.

But that shortcut can create long-term issues, especially for real-time streaming use cases.

ClickHouse is a powerful engine for analytical workloads, and it’s designed differently from traditional databases. It stores data by columns instead of rows, which makes operations like filtering and aggregating incredibly fast. However, the techniques we rely on in row-based databases, like Postgres or MySQL, don’t always transfer cleanly.

Denormalization is one of those techniques. It might offer short-term wins but usually becomes a liability over time.

What Denormalization Breaks in ClickHouse

1. Storage usage increases rapidly

When you denormalize, you repeat the same values across many rows—things like usernames, product names, or countries. While ClickHouse compresses repeated data well, excessive repetition still leads to larger tables, slower inserts, and higher storage costs.

2. Updating data becomes painful

ClickHouse is optimized for insert-only operations. If you need to update data that appears in many rows, such as correcting a product title or user info, you're forced to rewrite a large portion of the table. That’s inefficient and adds unnecessary maintenance overhead.

3. Query complexity goes up

Wide tables might avoid JOINs, but they make queries harder to write and reason about. When your table contains dozens or hundreds of columns, you’re more likely to make mistakes or need to repeat the same logic. Query performance can also suffer, especially if your design doesn't align well with your access patterns.

Better Alternatives for ClickHouse

Instead of denormalizing everything, you can take advantage of features built specifically for high-performance reads and write-efficient designs.

Use Dictionary Tables for Fast Lookups

ClickHouse offers dictionaries that act like ultra-fast in-memory key-value stores. You can use them to look up small reference data, such as country names or campaign labels, without expensive JOINs.

Precompute with Materialized Views

When you need to JOIN or aggregate large datasets repeatedly, materialized views let you prepare those results in advance. This reduces query times significantly and shifts heavy operations out of the read path.

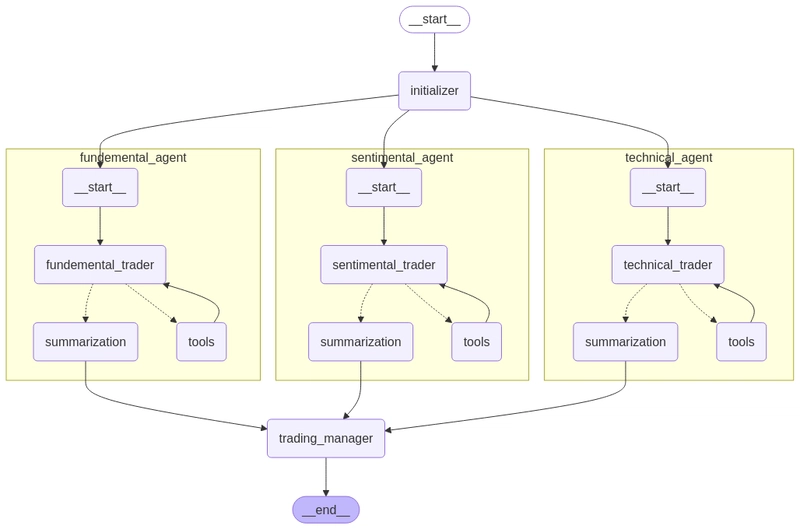

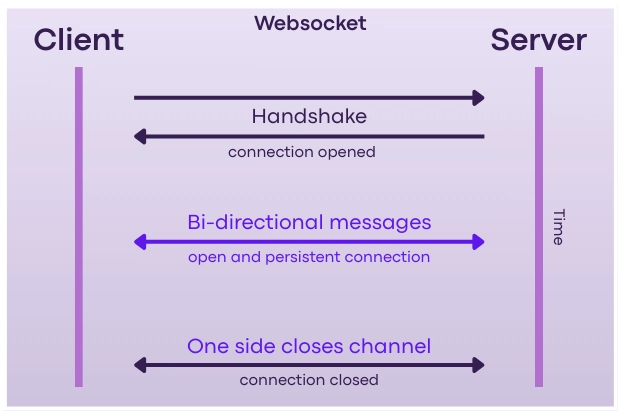

Handle Joins at Ingestion Time

In streaming systems, it can make sense to perform JOINs before the data enters ClickHouse. Tools like GlassFlow or Apache Flink allow you to enrich records as they move through the pipeline. That way, ClickHouse receives already-joined data that doesn’t require post-processing.

Use Projections for Query Optimization

Projections are like custom indexes that ClickHouse uses to optimize specific query types. You can define them to match your most common access patterns, and ClickHouse will choose the best one automatically.

A Real-World Example

We’ve worked with companies that initially went all-in on denormalization. One ecommerce team, for example, stored all user and product information directly inside their orders table. This helped them avoid JOINs at first, but the table grew massive and became difficult to manage. Even simple updates required rewriting gigabytes of data.

By switching to a mix of dictionaries and materialized views, they restored query speed and regained flexibility. Their data pipelines became easier to maintain, and their infrastructure was significantly more efficient.

Final Thoughts

Denormalization might feel like a quick win, but in ClickHouse, it often introduces more complexity and overhead in the long run. Instead of flattening everything, take advantage of ClickHouse-native tools like dictionaries, materialized views, and projections. These features let you keep your data model clean and your queries fast.

If you're working with streaming data, it's even more important to get this right from the beginning. Good data architecture means fewer hacks, easier scaling, and a more robust system.

Want a deeper dive into this topic?

Read the full article here

The full article includes code examples and a case study.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![Is this too much for a modular monolith system? [closed]](https://i.sstatic.net/pYL1nsfg.png)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)

![Apple Slips to Fifth in China's Smartphone Market with 9% Decline [Report]](https://www.iclarified.com/images/news/97065/97065/97065-640.jpg)