Is it viable to design a middleware architecture that integrates Lisp’s symbolic flexibility with Rust’s performance and memory safety?

I'm exploring the possibility of designing a middleware system that bridges Lisp (for its symbolic, reflective, and metaprogrammable power) with Rust (for its low-level efficiency and memory safety).

This is not a product pitch or a standard software project. It's an architectural inquiry—an experiment in language integration, symbolic reasoning, and runtime adaptability.

Motivation:

Much of today's AI landscape is focused on statistical inference—opaque models, transformer pipelines, and massive corpora. While these models are impressive, they're also shallow in a fundamental sense: they approximate thought, but don't represent it.

I'm interested in symbolic AI—not as a nostalgic return to GOFAI, but as a means of composing systems that think structurally, build internal representations, and evolve their logic through self-inspection and transformation.

To do this, I believe we need a dual architecture:

A symbolic front-end in Lisp. Lisp offers homoiconicity, macros, and a long tradition of symbolic reasoning. It's well-suited for introspection, rule composition, and reflective self-modification.

A Rust-based runtime. Rust brings memory safety, strong typing, concurrency, and modern systems-level performance. It could act as the stable core—handling persistence, IO, memory, and performance-sensitive logic.

The bridge would be a middleware layer. The goal is to enable meaningful, dynamic communication between the Lisp layer (where "thought" happens) and the Rust layer (which ensures it can run safely and efficiently).

This middleware could handle:

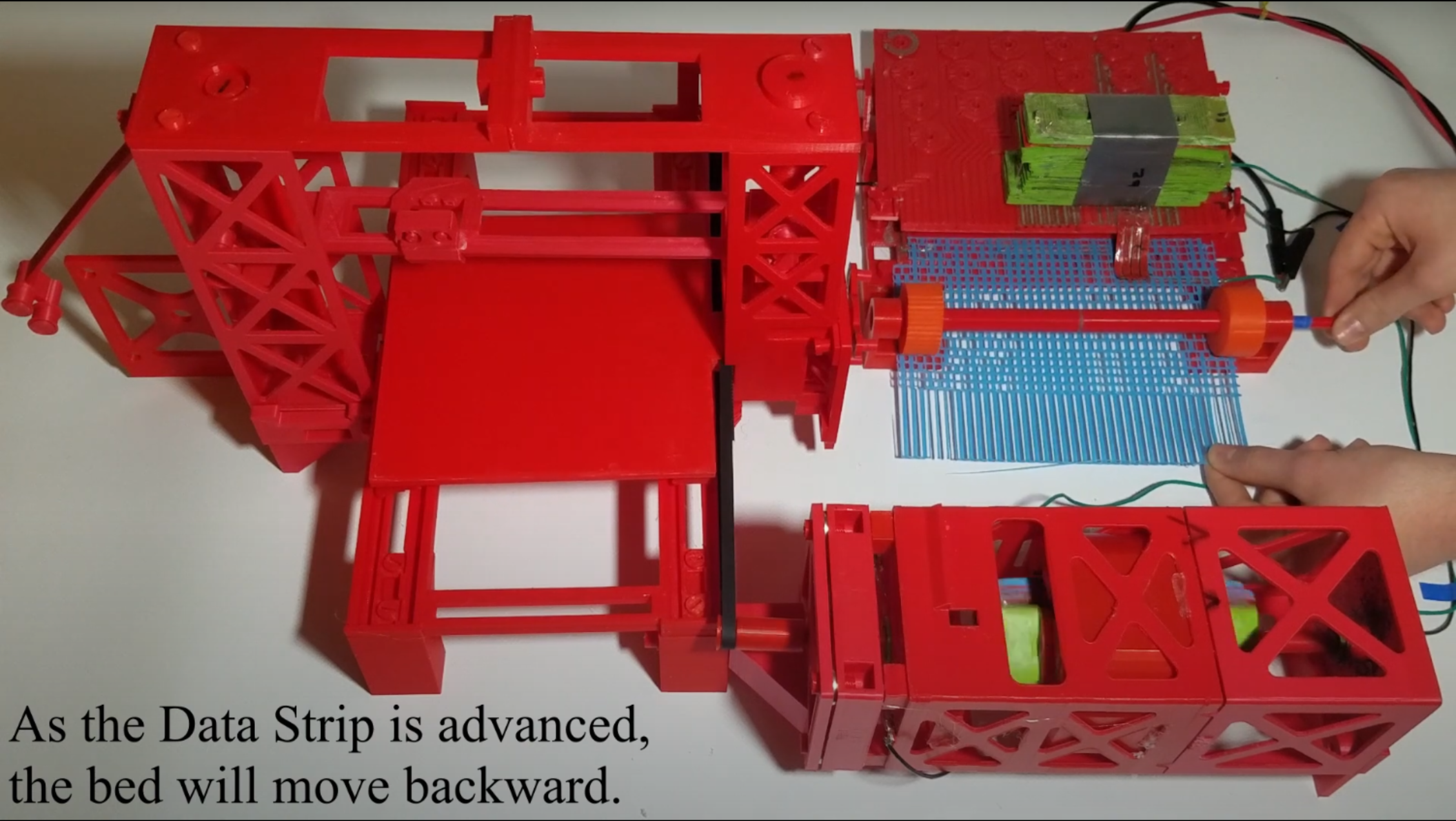

Representation passing (e.g., S-expressions or ASTs)

Dynamic code evaluation from Lisp to Rust and vice versa

Shared memory/state or message-passing architectures

Possibly even runtime generation of Rust code from Lisp-level logic
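To make the first item concrete, here is a minimal sketch of what "representation passing" might look like on the Rust side: a small S-expression tree that can be parsed from text and printed back. The names `Sexp`, `parse`, `parse_at`, and `tokenize` are my own placeholders, not part of any existing library, and the grammar is deliberately tiny (symbols, integers, and lists only; no strings, quoting, or comments).

```rust
use std::fmt;

/// A minimal S-expression tree: the shared representation both layers exchange.
/// (Hypothetical type for illustration only.)
#[derive(Debug, Clone, PartialEq)]
pub enum Sexp {
    Symbol(String),
    Int(i64),
    List(Vec<Sexp>),
}

impl fmt::Display for Sexp {
    /// Print the tree back as text, so a round trip Lisp -> Rust -> Lisp is possible.
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            Sexp::Symbol(s) => write!(f, "{}", s),
            Sexp::Int(n) => write!(f, "{}", n),
            Sexp::List(items) => {
                write!(f, "(")?;
                for (i, item) in items.iter().enumerate() {
                    if i > 0 {
                        write!(f, " ")?;
                    }
                    write!(f, "{}", item)?;
                }
                write!(f, ")")
            }
        }
    }
}

/// Split source text into parentheses and atoms.
fn tokenize(src: &str) -> Vec<String> {
    src.replace('(', " ( ")
        .replace(')', " ) ")
        .split_whitespace()
        .map(str::to_string)
        .collect()
}

/// Parse one expression starting at `pos`, advancing `pos` past it.
fn parse_at(tokens: &[String], pos: &mut usize) -> Result<Sexp, String> {
    let tok = tokens.get(*pos).ok_or("unexpected end of input")?;
    *pos += 1;
    match tok.as_str() {
        "(" => {
            let mut items = Vec::new();
            loop {
                match tokens.get(*pos).map(String::as_str) {
                    Some(")") => {
                        *pos += 1;
                        return Ok(Sexp::List(items));
                    }
                    Some(_) => items.push(parse_at(tokens, pos)?),
                    None => return Err("missing ')'".to_string()),
                }
            }
        }
        ")" => Err("unexpected ')'".to_string()),
        // Anything that is not an integer is treated as a symbol.
        atom => Ok(atom
            .parse::<i64>()
            .map(Sexp::Int)
            .unwrap_or_else(|_| Sexp::Symbol(atom.to_string()))),
    }
}

/// Parse exactly one S-expression from `src`.
pub fn parse(src: &str) -> Result<Sexp, String> {
    let tokens = tokenize(src);
    let mut pos = 0;
    let expr = parse_at(&tokens, &mut pos)?;
    if pos != tokens.len() {
        return Err("trailing tokens after expression".to_string());
    }
    Ok(expr)
}
```

With this in place, `parse("(rule (if (bird x)) (then (flies x)))")` yields a tree the Rust side can inspect or store, and `to_string()` on that tree gives text the Lisp side can read back. Whether plain text is the right wire format, as opposed to a binary encoding, is an open design choice.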

Imagine a system that could reflect on its structure, adapt its behavior, and do so without incurring the traditional risks of unsafe execution or inefficiency.
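As a rough illustration of the message-passing option, the sketch below uses Rust's standard `std::sync::mpsc` channels to put a small "core" thread in charge of all state, with the symbolic layer talking to it only through messages. The `Request` protocol, `spawn_core`, and `fetch` are names I made up for this example; in a real system the sender would be a Lisp process on the other end of a socket or pipe rather than another Rust thread.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{self, Sender};
use std::thread::{self, JoinHandle};

/// Messages the symbolic layer may send to the Rust core (hypothetical protocol).
pub enum Request {
    /// Store a fact, serialized as S-expression text, under a key.
    Store { key: String, sexp: String },
    /// Ask for a stored fact; the answer comes back on `reply`.
    Fetch { key: String, reply: Sender<Option<String>> },
    /// Stop the core thread.
    Shutdown,
}

/// Start the core thread. It alone owns the fact store, so no locking is needed.
/// The join handle returns the number of facts held at shutdown.
pub fn spawn_core() -> (Sender<Request>, JoinHandle<usize>) {
    let (tx, rx) = mpsc::channel::<Request>();
    let handle = thread::spawn(move || {
        let mut facts: HashMap<String, String> = HashMap::new();
        // The loop ends on Shutdown or when every sender has been dropped.
        for req in rx {
            match req {
                Request::Store { key, sexp } => {
                    facts.insert(key, sexp);
                }
                Request::Fetch { key, reply } => {
                    // Ignore a send error: the requester may have gone away.
                    let _ = reply.send(facts.get(&key).cloned());
                }
                Request::Shutdown => break,
            }
        }
        facts.len()
    });
    (tx, handle)
}

/// Convenience wrapper: send a Fetch request and wait for the reply.
pub fn fetch(core: &Sender<Request>, key: &str) -> Option<String> {
    let (reply_tx, reply_rx) = mpsc::channel();
    core.send(Request::Fetch { key: key.to_string(), reply: reply_tx })
        .ok()?;
    reply_rx.recv().ok().flatten()
}
```

A caller would obtain the sender from `spawn_core()`, send `Request::Store { .. }` messages, and read facts back with `fetch(&core, "tweety")`. The appeal of this shape is that neither side ever holds a pointer into the other's memory; the cost is that everything crossing the boundary has to be serialized.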

For context: I've been learning Java for about seven months—focusing on the core language and avoiding frameworks. My current understanding of Lisp and Rust is architectural and theoretical: I've mapped out how this system could look, what components it would need, and what philosophical assumptions it challenges.

I'm not trying to ship an MVP or build a SaaS. This is a research-motivated question: Can we compose intelligence differently, if we take language seriously as both a tool and a substrate of thought?

My questions:

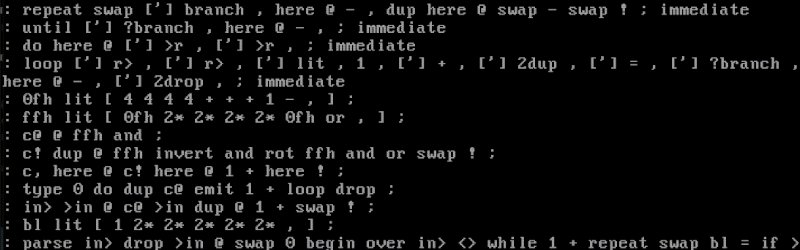

Are there known patterns or prior architectures that meaningfully integrate symbolic languages with static systems languages? (E.g., experiments in FFI, VM bridging, interpreter embeddings?)

What technical constraints would shape such a bridge? Serialization? GC boundary crossing? Runtime type translation?

Should Lisp live "on top" of Rust (as an interpreter), or be "underneath" it (with embedded Rust components), or exist in parallel via IPC/shared memory?

Have there been notable historical attempts to fuse symbolic and performant systems? What worked (or didn’t) in projects like SBCL, Racket’s JIT layers, or hybrid systems like Shen or GNU Guile?
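To give the "Lisp on top of Rust" option some shape, here is a toy evaluator written in Rust. It is only a sketch under heavy assumptions: `Expr`, `Env`, and `eval` are hypothetical names, the only operators are `+` and `*`, and values are restricted to 64-bit integers. It does show one thing relevant to the constraints question: when the interpreter lives inside Rust, every Lisp value is an ordinary Rust value, so there is no GC boundary to cross, and dynamic failures (unbound symbols, bad operators, overflow) surface as `Result` errors rather than undefined behavior.

```rust
use std::collections::HashMap;

/// Expression tree for a toy Lisp hosted inside Rust (hypothetical type).
#[derive(Debug, Clone, PartialEq)]
pub enum Expr {
    Int(i64),
    Sym(String),
    List(Vec<Expr>),
}

/// Variable bindings; a real system would hold richer values than i64.
pub type Env = HashMap<String, i64>;

/// Evaluate an expression, turning every dynamic failure into an error value.
pub fn eval(expr: &Expr, env: &Env) -> Result<i64, String> {
    match expr {
        Expr::Int(n) => Ok(*n),
        Expr::Sym(name) => env
            .get(name)
            .copied()
            .ok_or_else(|| format!("unbound symbol: {}", name)),
        Expr::List(items) => {
            let (head, args) = items
                .split_first()
                .ok_or("cannot evaluate an empty list")?;
            let op = match head {
                Expr::Sym(s) => s.as_str(),
                other => return Err(format!("not an operator: {:?}", other)),
            };
            // Evaluate arguments left to right, stopping at the first error.
            let values = args
                .iter()
                .map(|a| eval(a, env))
                .collect::<Result<Vec<i64>, String>>()?;
            match op {
                "+" => values
                    .iter()
                    .try_fold(0i64, |acc, v| acc.checked_add(*v))
                    .ok_or_else(|| "integer overflow".to_string()),
                "*" => values
                    .iter()
                    .try_fold(1i64, |acc, v| acc.checked_mul(*v))
                    .ok_or_else(|| "integer overflow".to_string()),
                _ => Err(format!("unknown operator: {}", op)),
            }
        }
    }
}
```

Evaluating the tree for `(+ 1 (* x 3))` with `x` bound to 4 goes through `eval` like any other Rust function call. The trade-off of this placement is that macros, reflection, and the rest of what makes Lisp attractive would have to be rebuilt inside the host, whereas the "parallel via IPC" option keeps a full Lisp implementation and pays for it in serialization.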

Closing thought: This might be a dead-end. Or a poetic detour. But I want to test the limits of what a thinking system could be if it were built from a symbolic foundation, grounded in memory-safe execution.

If this resonates—even abstractly—I’d love to hear your thoughts on:

Architecture

Language interoperability

Prior art

Or philosophical/theoretical perspectives on symbolic computing today.

I don't even know if this is the right place on the Net to share this. I tried figuring out where to post it on Reddit, but I'm not really comfortable there—though I do use it. If any of you are interested, I have a longer document where I try to formulate this idea in a more detailed way. Don't hesitate to reach out!
