Training a Machine Learning model in Azure

Introduction

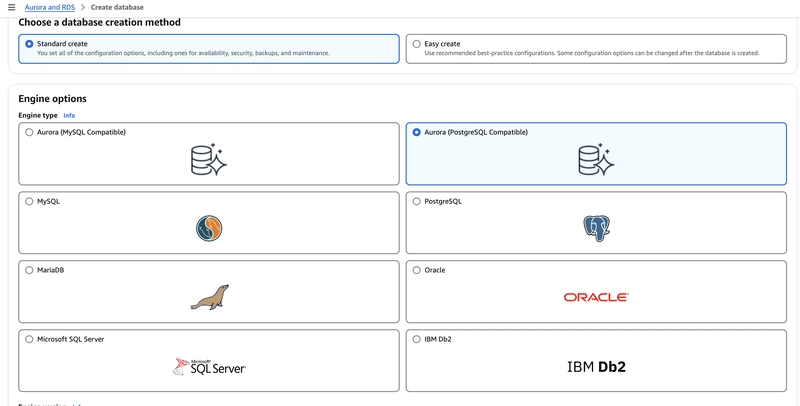

We will use a credit card dataset to show how to solve a classification problem with Machine Learning in Azure. Our goal is to identify customers who are at risk of not paying their credit card bills. Before getting started with Azure Machine Learning, we need to set up two prerequisites:

Workspace

To use Machine Learning in Azure, we first need to create a workspace. A workspace is a centralized environment where all the machine learning resources are stored.

Compute Instance

A compute instance is a cloud-based virtual machine (VM) used for developing and running ML models. Here we will run Jupyter notebooks and Python scripts and test AI algorithms.

Create a workspace

- Sign in to Azure Machine Learning studio

- Select Create workspace

- Provide the following information to configure your new workspace:

  - Workspace name

  - Friendly name

  - Hub

  - If you did not select a hub, provide the advanced information. If you selected a hub, these values are taken from the hub.

    - Subscription

    - Resource group

    - Region

- Select Create to create the workspace

Create a compute instance

Create a compute instance with the following steps:

- Select your workspace

- On the top right, select New

- Select Compute instance in the list

- Supply a name

- Keep the default values for the rest of the page, unless your organization policy requires you to change other settings

- Select Review + Create

- Select Create

Create handle to workspace

We need a way to reference our workspace. The Azure Machine Learning workspace is where all your ML resources live (models, datasets, compute, etc.). To work with it, Python code needs to connect to the workspace, and creating an ml_client handle does this.

from azure.ai.ml import MLClient
from azure.identity import DefaultAzureCredential

# authenticate
credential = DefaultAzureCredential()

# Fill in your own subscription ID, resource group and workspace name
SUBSCRIPTION = ""
RESOURCE_GROUP = ""
WS_NAME = ""

# Get a handle to the workspace
ml_client = MLClient(
    credential=credential,
    subscription_id=SUBSCRIPTION,
    resource_group_name=RESOURCE_GROUP,
    workspace_name=WS_NAME,
)

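The code above works in four steps, followed by a connection check:

- Import libraries

  - MLClient → Used to interact with Azure Machine Learning.

  - DefaultAzureCredential → Handles authentication using Azure credentials.

- Authenticate

  - DefaultAzureCredential() automatically retrieves credentials from the user's Azure environment.

- Define Azure subscription and resource details

  - SUBSCRIPTION, RESOURCE_GROUP and WS_NAME identify the workspace and must be filled in with your own values.

- Connect to the Azure ML workspace

  - MLClient(...) builds the handle from the credential and the three identifiers. Creating the client does not contact the service yet; the connection is made the first time the client needs to make a call.

- Verify the connection

  - Fetching the workspace details forces that first call: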

ws = ml_client.workspaces.get(WS_NAME)
print(ws.location, ":", ws.resource_group)

- ml_client.workspaces.get(WS_NAME) → Fetches the workspace details from Azure.

- ws.location → The region where the workspace is deployed (e.g., "eastus").

- ws.resource_group → The resource group name.

If this prints the correct location and resource group, the connection is successful!
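The empty SUBSCRIPTION, RESOURCE_GROUP and WS_NAME strings have to be filled in before the client can reach a workspace. One option, sketched below, is to read them from environment variables instead of hardcoding them in the notebook. The function name and the three variable names (AZURE_SUBSCRIPTION_ID, AML_RESOURCE_GROUP, AML_WORKSPACE_NAME) are hypothetical choices for this illustration, not something Azure requires.

```python
import os


def load_workspace_settings(env=None):
    """Read workspace identifiers from environment variables.

    The variable names below are hypothetical; use whatever suits your setup.
    """
    env = os.environ if env is None else env
    names = {
        "subscription_id": "AZURE_SUBSCRIPTION_ID",
        "resource_group_name": "AML_RESOURCE_GROUP",
        "workspace_name": "AML_WORKSPACE_NAME",
    }
    settings = {key: env.get(var, "").strip() for key, var in names.items()}
    # Fail early with a readable message instead of a late authentication error
    missing = [names[key] for key, value in settings.items() if not value]
    if missing:
        raise ValueError("Missing workspace settings: " + ", ".join(missing))
    return settings
```

The returned dictionary uses the same keyword names as the MLClient call, so it could be passed as MLClient(credential=credential, **load_workspace_settings()).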

Environment Setup

To run machine learning jobs in Azure, you need to define an environment. The environment tells Azure which tools, packages, and Python version your code needs so that the job runs correctly and reliably.

In this example, you create a custom conda environment for your jobs, using a conda yaml file.
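The conda yaml file itself is not shown in this walkthrough. The sketch below writes a minimal one from Python. Only the scikit-learn pin is taken from this article (it matches the 1.0.2 tag used when the environment is registered); the environment name, the Python version and the remaining packages are assumptions that you should adapt to your own job.

```python
import os

dependencies_dir = "./dependencies"
os.makedirs(dependencies_dir, exist_ok=True)

# Hypothetical dependency list: everything except scikit-learn=1.0.2
# is an assumption made for this illustration.
conda_yaml = """\
name: model-env
channels:
  - conda-forge
dependencies:
  - python=3.8
  - pip
  - scikit-learn=1.0.2
  - pandas
  - pip:
    - mlflow
    - azureml-mlflow
"""

conda_path = os.path.join(dependencies_dir, "conda.yaml")
with open(conda_path, "w", encoding="utf-8") as f:
    f.write(conda_yaml)
```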

Reference this yaml file to create and register this custom environment in your workspace:

import os
from azure.ai.ml.entities import Environment

dependencies_dir = "./dependencies"
os.makedirs(dependencies_dir, exist_ok=True)

custom_env_name = "aml-scikit-learn"

custom_job_env = Environment(
    name=custom_env_name,
    description="Custom environment for Credit Card Defaults job",
    tags={"scikit-learn": "1.0.2"},
    conda_file=os.path.join(dependencies_dir, "conda.yaml"),
    image="mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest",
)
custom_job_env = ml_client.environments.create_or_update(custom_job_env)

The create_or_update call registers the environment in your Azure ML workspace through ml_client.

print(
    f"Environment with name {custom_job_env.name} is registered to workspace, the environment version is {custom_job_env.version}"
)

The print statement confirms that the environment has been successfully created or updated and shows its version.

Configure a command job to run training script

Next, set up a command job in Azure to run a Python script that trains a credit default prediction model using GradientBoostingClassifier.

This training script

- Prepares the data

- Splits the data using train_test_split (into training and testing sets)

- Trains the model

- Registers the trained model so it can be reused or deployed later

The %%writefile notebook magic below saves the script as main.py in the training source folder; the command job that runs it in Azure ML is configured in a later step.
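The %%writefile cell relies on a train_src_dir variable that is not defined anywhere in this walkthrough. A minimal sketch of the missing step, assuming the script lives in a local ./src folder (the folder name is a hypothetical choice), would be:

```python
import os

# Assumed location for the training script; "./src" is a hypothetical choice.
train_src_dir = "./src"
os.makedirs(train_src_dir, exist_ok=True)
```

Run this cell before the %%writefile cell so that the target folder exists.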

%%writefile {train_src_dir}/main.py
import os
import argparse
import pandas as pd
import mlflow
import mlflow.sklearn
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split


def main():
    """Main function of the script."""

    # input and output arguments
    parser = argparse.ArgumentParser()
    parser.add_argument("--data", type=str, help="path to input data")
    parser.add_argument("--test_train_ratio", type=float, required=False, default=0.25)
    parser.add_argument("--n_estimators", required=False, default=100, type=int)
    parser.add_argument("--learning_rate", required=False, default=0.1, type=float)
    parser.add_argument("--registered_model_name", type=str, help="model name")
    args = parser.parse_args()

    # Start Logging
    mlflow.start_run()

    # enable autologging
    mlflow.sklearn.autolog()

    # ---- Prepare the data ----
    print(" ".join(f"{k}={v}" for k, v in vars(args).items()))
    print("input data:", args.data)

    # header=1 takes the column names from the second row of the file
    credit_df = pd.read_csv(args.data, header=1, index_col=0)

    mlflow.log_metric("num_samples", credit_df.shape[0])
    mlflow.log_metric("num_features", credit_df.shape[1] - 1)

    # Split train and test datasets
    train_df, test_df = train_test_split(
        credit_df,
        test_size=args.test_train_ratio,
    )

    # ---- Train the model ----
    # Extracting the label column
    y_train = train_df.pop("default payment next month")

    # convert the dataframe values to array
    X_train = train_df.values

    # Extracting the label column
    y_test = test_df.pop("default payment next month")

    # convert the dataframe values to array
    X_test = test_df.values

    print(f"Training with data of shape {X_train.shape}")

    clf = GradientBoostingClassifier(
        n_estimators=args.n_estimators, learning_rate=args.learning_rate
    )
    clf.fit(X_train, y_train)

    y_pred = clf.predict(X_test)

    print(classification_report(y_test, y_pred))

    # ---- Save and register the model ----
    # Registering the model to the workspace
    print("Registering the model via MLFlow")
    mlflow.sklearn.log_model(
        sk_model=clf,
        registered_model_name=args.registered_model_name,
        artifact_path=args.registered_model_name,
    )

    # Saving the model to a file
    mlflow.sklearn.save_model(
        sk_model=clf,
        path=os.path.join(args.registered_model_name, "trained_model"),
    )

    # Stop Logging
    mlflow.end_run()


if __name__ == "__main__":
    main()

Explanation of the code above

Set Up the Script

Uses argparse to get inputs (like data path, model name, and training settings).

Starts an MLflow logging session.
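To see how argparse turns command-line text into typed values, the sketch below rebuilds the same parser and feeds it a list of arguments instead of reading them from the real command line. The build_parser helper and the argument values ("credit.csv", "demo_model") are hypothetical and only serve the illustration.

```python
import argparse


def build_parser():
    """Rebuild the argument parser used by the training script."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--data", type=str, help="path to input data")
    parser.add_argument("--test_train_ratio", type=float, required=False, default=0.25)
    parser.add_argument("--n_estimators", required=False, default=100, type=int)
    parser.add_argument("--learning_rate", required=False, default=0.1, type=float)
    parser.add_argument("--registered_model_name", type=str, help="model name")
    return parser


# Hypothetical argument values, passed as a list instead of sys.argv
args = build_parser().parse_args(
    ["--data", "credit.csv", "--test_train_ratio", "0.2", "--registered_model_name", "demo_model"]
)

print(args.test_train_ratio)  # 0.2, converted from text to float
print(args.n_estimators)      # 100, the default because the flag was omitted
```

Any flag that is left out falls back to its default, which is why the job only needs to pass the values it wants to change.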

Prepare the Data

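credit_df = pd.read_csv(args.data, header=1, index_col=0)

train_df, test_df = train_test_split(credit_df, test_size=args.test_train_ratio)

Reads the CSV file, taking the column names from the second row (header=1) and using the first column as the row index (index_col=0).

Splits the data into training and test sets using train_test_split, holding out the fraction given by test_train_ratio for testing.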

Train the Model

clf = GradientBoostingClassifier()

clf.fit(X_train, y_train)

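Separates the label column "default payment next month" from the features, then trains a Gradient Boosting Classifier, a tree-based ensemble model.

Then uses the model to make predictions on the test set and prints a classification report.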

Log Metrics and Results with MLflow

mlflow.sklearn.autolog()

mlflow.log_metric("num_samples", ...)

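autolog() automatically records the model's parameters and training metrics such as accuracy; log_metric adds custom values such as the number of samples and features.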

Helps track and compare different model runs.

Register the Trained Model

mlflow.sklearn.log_model(...)

mlflow.sklearn.save_model(...)

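log_model logs the model to the run and, because registered_model_name is set, registers it in your Azure ML workspace; save_model also writes a copy to a local folder. Registration lets you keep track of your trained models and reuse or deploy them later.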

Configure a training job using "Command"

Now we need to configure a training job in Azure Machine Learning using the command function, which runs the main.py training script in Azure with the right data, parameters and environment.

The job's inputs dictionary supplies the following values:

- data: The CSV file with credit card data.

- test_train_ratio: The share of the data held out for testing (20% here, which overrides the script's default of 25%).

- learning_rate: The learning rate passed to the GradientBoostingClassifier.

- registered_model_name: The name to register your trained model under.
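The job definition itself is not shown in this walkthrough. The sketch below is one way it could look with the command function from the azure-ai-ml package. Several values are assumptions made for illustration: the data path placeholder, the model name, the learning rate of 0.25, the ./src/ code folder, the display name and the build_job helper. Only the 20% test ratio and the aml-scikit-learn environment name come from this article.

```python
# Hypothetical values: replace the data path with the location of your CSV.
DATA_PATH = "<path-or-URL-to-credit-card-csv>"
REGISTERED_MODEL_NAME = "credit_defaults_model"

# Command line run inside the job; Azure ML fills each ${{inputs.<name>}}
# placeholder from the job's inputs.
JOB_COMMAND = (
    "python main.py"
    " --data ${{inputs.data}}"
    " --test_train_ratio ${{inputs.test_train_ratio}}"
    " --learning_rate ${{inputs.learning_rate}}"
    " --registered_model_name ${{inputs.registered_model_name}}"
)


def build_job():
    """Build the command job (requires the azure-ai-ml package)."""
    from azure.ai.ml import Input, command

    return command(
        inputs=dict(
            data=Input(type="uri_file", path=DATA_PATH),
            test_train_ratio=0.2,
            learning_rate=0.25,  # assumed value, not given in the article
            registered_model_name=REGISTERED_MODEL_NAME,
        ),
        code="./src/",  # assumed folder containing main.py
        command=JOB_COMMAND,
        environment="aml-scikit-learn@latest",
        display_name="credit_default_prediction",
    )
```

Calling job = build_job() produces the job object that is submitted in the next step. The n_estimators argument is not passed, so the script falls back to its default of 100.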

Submit the job

Submit the job to run in Azure Machine Learning. This time, we use create_or_update directly on ml_client. ml_client is the client class that connects to your Azure subscription from Python and interacts with Azure Machine Learning services, so it can also submit jobs.

ml_client.create_or_update(job)

Conclusion

After running the cell, the notebook output shows a link to the job's details page in Machine Learning studio.
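The same link can also be read from the object that create_or_update returns. The helper below is a small sketch of that idea; the submit_job name is hypothetical, and it assumes the returned job exposes a studio_url attribute, as job objects in the azure-ai-ml package do.

```python
def submit_job(ml_client, job):
    """Submit a job and return the URL of its details page in the studio."""
    returned_job = ml_client.create_or_update(job)
    return returned_job.studio_url
```

For example, print(submit_job(ml_client, job)) would submit the job and print the link in one step.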
