The Next Wave of AI: Intelligent Agents Working Together

The next era of AI isn’t powered by solo models; it’s built by teams of agents that think, act, and collaborate. With A2A and MCP, the future of AI is not just intelligent but interoperable.

The Challenge of Building Multi-Agent Systems Today

If you're building AI agents today, you’ve probably noticed the challenge: individual agents can be smart, but when they need to collaborate, communication often feels clumsy and inefficient.

Without a common language or system, agents end up siloed, unable to share information or coordinate tasks effectively.

This is where Agent2Agent (A2A) and Model Context Protocol (MCP) come in.

These protocols offer a standardized foundation for real-world, production-grade multi-agent ecosystems.

What is Agent2Agent (A2A)?

At its core, Agent2Agent (A2A) is an open protocol that allows AI agents to:

- Discover each other

- Share their capabilities

- Request and delegate tasks

- Exchange structured data

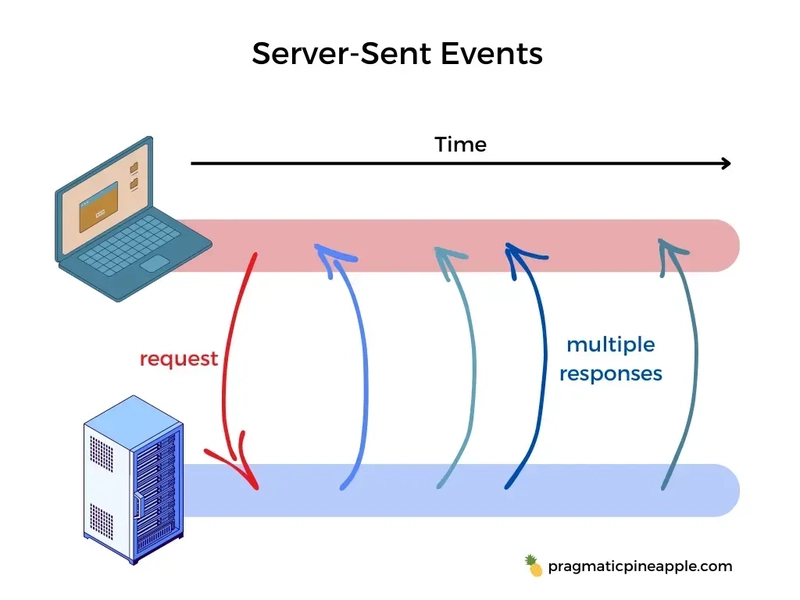

- Stream updates in real time

The heart of A2A is the Agent Card, a standardized description of what an agent can do, which interfaces it supports (text, video, forms, etc.), and how to interact with it.

Instead of brittle, custom integrations, agents can simply browse available Agent Cards, select the right collaborator, and initiate cooperation.
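
As a rough illustration of card-based discovery, the sketch below filters a small in-memory catalog of Agent Cards by skill tag and input mode. The card fields loosely mirror the AgentCard used later in this article; the `Card` and `Skill` classes, the `find_agents` helper, the URLs, and the second agent are hypothetical, and a real client would fetch cards over HTTP rather than hold them in a list.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    id: str
    tags: list[str] = field(default_factory=list)


@dataclass
class Card:
    name: str
    url: str
    input_modes: list[str]
    skills: list[Skill]


def find_agents(cards: list[Card], tag: str, input_mode: str = "text") -> list[Card]:
    """Return cards that advertise a skill with `tag` and accept `input_mode`."""
    return [
        card
        for card in cards
        if input_mode in card.input_modes
        and any(tag in skill.tags for skill in card.skills)
    ]


# Hypothetical catalog; real cards would be fetched from each agent's URL.
catalog = [
    Card("Reimbursement Agent", "http://localhost:10002/", ["text"],
         [Skill("process_reimbursement", ["reimbursement"])]),
    Card("Calendar Agent", "http://localhost:10003/", ["text"],
         [Skill("schedule_meeting", ["calendar"])]),
]

matches = find_agents(catalog, tag="reimbursement")
print([card.name for card in matches])
```

Matching on tags and input modes is only one possible selection rule; a client could just as well rank candidates by description or example prompts.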

Key Features of A2A

- HTTP and JSON based (easy for developers)

- Push notifications for real-time updates

- Streaming support for long-running tasks

- Built-in authentication and security

- Designed for multiple interaction modes (not just text chat)

Bottom line:

A2A helps agents function like true teammates, not isolated bots operating in silos.
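
To make "HTTP and JSON based" concrete, here is a sketch of the kind of JSON-RPC 2.0 request body a client might POST to an A2A agent. The method name `tasks/send` and the message layout follow early drafts of the A2A specification and are assumptions here, so check the current spec before relying on them. The `build_task_request` helper is hypothetical, and nothing is sent over the network.

```python
import json
import uuid
from typing import Optional


def build_task_request(text: str, task_id: Optional[str] = None) -> dict:
    """Build a JSON-RPC 2.0 envelope carrying one user text message."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),                 # JSON-RPC request id
        "method": "tasks/send",                  # assumed from early A2A drafts
        "params": {
            "id": task_id or str(uuid.uuid4()),  # task id
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
        },
    }


request = build_task_request("Can you reimburse me $20 for my lunch with the clients?")
print(json.dumps(request, indent=2))
```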

A2A in Action: Reimbursement Agent Example

To ground this in reality, let’s look at a simplified example from the open-source A2A agent repo.

This Reimbursement Agent helps users submit reimbursement requests and shows how an A2A-compliant agent defines its skills, handles missing information, and interacts with tools like APIs or forms.

1. Defining the Agent’s Skill and Capabilities

The agent advertises its functionality using an AgentCard, which includes a skill (in this case, reimbursement) and its capabilities:

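    # Import paths follow the layout of the early A2A Python samples
    # (an assumption; adjust them to the version of the repo you use).
    from common.server import A2AServer
    from common.types import AgentCapabilities, AgentCard, AgentSkill
    from agent import ReimbursementAgent
    from task_manager import AgentTaskManager

    # host and port come from the surrounding startup code.
    capabilities = AgentCapabilities(streaming=True)

    # One skill: what the agent does, plus an example prompt that triggers it.
    skill = AgentSkill(
        id="process_reimbursement",
        name="Process Reimbursement Tool",
        description="Helps with the reimbursement process for users.",
        tags=["reimbursement"],
        examples=["Can you reimburse me $20 for my lunch with the clients?"],
    )

    # The card other agents read to decide whether and how to call this agent.
    agent_card = AgentCard(
        name="Reimbursement Agent",
        description="Handles reimbursement processes for employees.",
        url=f"http://{host}:{port}/",
        version="1.0.0",
        defaultInputModes=ReimbursementAgent.SUPPORTED_CONTENT_TYPES,
        defaultOutputModes=ReimbursementAgent.SUPPORTED_CONTENT_TYPES,
        capabilities=capabilities,
        skills=[skill],
    )

    server = A2AServer(
        agent_card=agent_card,
        task_manager=AgentTaskManager(agent=ReimbursementAgent()),
        host=host,
        port=port,
    )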

This skill definition helps other agents know when and how to call this agent.

2. Creating a Reimbursement Request Form

The agent uses a structured tool called create_request_form() to collect missing information from users before proceeding:

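    def create_request_form(date=None, amount=None, purpose=None):
        # Missing values become empty strings so the form always has the same keys.
        # The request ID is hardcoded here to keep the example simple.
        return {
            "request_id": "request_id_123456",
            "date": date or "",
            "amount": amount or "",
            "purpose": purpose or "",
        }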

This helps standardize the input, ensuring the agent can reason about incomplete or partial information.
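
Because missing values are stored as empty strings, finding out what to ask the user for takes only a short scan of the form. The sketch below repeats create_request_form() so it runs on its own; the `missing_fields` helper is a hypothetical addition, not part of the original agent.

```python
def create_request_form(date=None, amount=None, purpose=None):
    # Same shape as the form above; empty strings mark missing values.
    return {
        "request_id": "request_id_123456",
        "date": date or "",
        "amount": amount or "",
        "purpose": purpose or "",
    }


def missing_fields(form: dict) -> list[str]:
    """Return the names of fields the user still has to fill in."""
    return [name for name, value in form.items() if value == ""]


form = create_request_form(amount="$20", purpose="Lunch with the clients")
print(missing_fields(form))  # ['date']
```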

3. Returning a Structured Form to the User

Once a form is generated, the agent can return it as a JSON object that will be rendered in a UI:

    def return_form(form_data, tool_context, instructions=None):
        # tool_context is supplied by the agent framework; this simplified version does not use it.
        return {
            "type": "form",
            # JSON Schema describing the fields a UI should render.
            "form": {
                "type": "object",
                "properties": {
                    "date": {"type": "string", "title": "Date"},
                    "amount": {"type": "string", "title": "Amount"},
                    "purpose": {"type": "string", "title": "Purpose"},
                    "request_id": {"type": "string", "title": "Request ID"},
                },
                "required": list(form_data.keys()),
            },
            # Values already known, used to pre-fill the form.
            "form_data": form_data,
            "instructions": instructions,
        }

4. Validating and Processing the Request

Once the form is filled, the agent uses the reimburse() function to process it:

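    # IDs issued by create_request_form(); hardcoded to match the simplified form.
    request_ids = {"request_id_123456"}

    def reimburse(request_id):
        # Reject IDs that were never issued.
        if request_id not in request_ids:
            return {"status": "Error: Invalid request_id."}
        return {"status": "approved", "request_id": request_id}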
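
The validity check in reimburse() only works if something records the IDs that were issued. One way to wire that up is sketched below; the `issued_request_ids` set, the random ID format, and the `issue_request_id` helper are assumptions for illustration rather than the article's code.

```python
import random

# IDs handed out so far; reimburse() accepts only these.
issued_request_ids: set[str] = set()


def issue_request_id() -> str:
    """Create a new request ID and remember it for later validation."""
    request_id = f"request_id_{random.randint(1_000_000, 9_999_999)}"
    issued_request_ids.add(request_id)
    return request_id


def reimburse(request_id: str) -> dict:
    """Approve a request only if its ID was issued by this process."""
    if request_id not in issued_request_ids:
        return {"status": "Error: Invalid request_id."}
    return {"status": "approved", "request_id": request_id}


known_id = issue_request_id()
print(reimburse(known_id)["status"])        # approved
print(reimburse("request_id_0")["status"])  # Error: Invalid request_id.
```

An in-memory set is lost on restart, so a production agent would more plausibly keep issued IDs in a database or task store.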

5. Putting It All Together with an LLM Agent

The core logic of how the agent uses its tools is defined in a prompt and wrapped in an LlmAgent:

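    # Import path assumes the google-adk package.
    from google.adk.agents import LlmAgent

    def build_agent() -> LlmAgent:  # hypothetical wrapper so the return statement is valid
        return LlmAgent(
            model="gemini-2.0-flash-001",
            name="reimbursement_agent",
            instruction="""
            You are an agent who processes reimbursements. Start by calling create_request_form().
            Then call return_form(). Once completed by the user, call reimburse().
            """,
            tools=[create_request_form, return_form, reimburse],
        )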

What is Model Context Protocol (MCP)?

While A2A focuses on agent-to-agent communication, Model Context Protocol (MCP) focuses on context delivery, ensuring that models have all the information they need to perform intelligently.

Large Language Models (LLMs) are powerful, but they need access to:

- User profiles

- Real-time external data

- APIs for tools and services

- Internal documents and knowledge bases

MCP standardizes how this information is delivered to the model in a structured, secure, and model-agnostic way.

Key Features of MCP

- Model-agnostic (compatible with Claude, Gemini, GPT, and others)

- Security-first architecture for sensitive data

- Built-in support for tool calling

- Enables richer, more accurate outputs by providing complete context

Think of MCP as a universal adapter that plugs LLMs into your organization's real-world data, systems, and workflows.
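
On the wire, MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request that names the tool and passes its arguments. The sketch below builds such a request and a matching text result as plain dictionaries. The two helper functions are hypothetical, the result layout is simplified, and the MCP specification remains the authority on the exact fields.

```python
import json
from itertools import count

_next_id = count(1)  # simple source of increasing JSON-RPC ids


def tools_call_request(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC request asking the server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def text_result(request_id: int, payload: dict, is_error: bool = False) -> dict:
    """Build a reply that carries the tool output as a JSON-encoded text item."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # must echo the id of the request being answered
        "result": {
            "content": [{"type": "text", "text": json.dumps(payload)}],
            "isError": is_error,
        },
    }


call = tools_call_request("reimburse", {"request_id": "request_id_123456"})
reply = text_result(call["id"], {"status": "approved", "request_id": "request_id_123456"})
print(json.dumps(call))
print(json.dumps(reply))
```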

Extending the Reimbursement Agent with MCP

While A2A enables agent discovery and collaboration, Model Context Protocol (MCP) ensures each agent or model receives relevant, structured context for better decisions.

Let’s integrate an MCP-compliant context server into the Reimbursement Agent. This allows it to expose useful tools, documents, and real-time context to LLMs or other agents.

1. Define the MCP Context Server

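The MCP server provides access to the agent’s tools and context through a standard interface. The class names in the snippets below (MCPServer, ToolDefinition, ToolCallRequest, ToolCallResponse) are illustrative and may not match the official MCP Python SDK, so treat the code as a sketch of the server's shape rather than a drop-in implementation.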

    # Illustrative names; verify them against the MCP SDK you use.
    from mcp.server import MCPServer
    from mcp.schema import ToolDefinition, ToolCallRequest, ToolCallResponse

    # Define the tool metadata
    tool_definitions = [
        ToolDefinition(
            name="create_request_form",
            description="Creates a reimbursement request form with fields for date, amount, and purpose.",
            # All three inputs are optional, matching create_request_form()'s defaults.
            input_schema={
                "type": "object",
                "properties": {
                    "date": {"type": "string"},
                    "amount": {"type": "string"},
                    "purpose": {"type": "string"},
                },
            },
            output_schema={"type": "object"},
        ),
        ToolDefinition(
            name="return_form",
            description="Returns the structured reimbursement form for user input.",
            input_schema={"type": "object"},
            output_schema={"type": "object"},
        ),
        ToolDefinition(
            name="reimburse",
            description="Processes the reimbursement request and returns the status.",
            input_schema={"type": "object", "properties": {"request_id": {"type": "string"}}},
            output_schema={"type": "object"},
        ),
    ]

2. Handle Tool Calls via the MCP API

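This handler dispatches each incoming tool call to the matching Python function, so models invoke every tool through one consistent entry point. Unknown tool names are rejected with an error instead of being executed.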

    def handle_tool_call(request: ToolCallRequest) -> ToolCallResponse:
        # Route the call by tool name and pass the JSON input as keyword arguments.
        if request.tool_name == "create_request_form":
            result = create_request_form(**request.input)
        elif request.tool_name == "return_form":
            # tool_context cannot arrive as JSON input; the simplified return_form() ignores it.
            result = return_form(tool_context=None, **request.input)
        elif request.tool_name == "reimburse":
            result = reimburse(**request.input)
        else:
            return ToolCallResponse(error="Unknown tool")
        return ToolCallResponse(output=result)
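
An if/elif chain grows with every tool that gets added. A common alternative is a name-to-function registry, sketched below. `ToolCall`, `ToolResult`, and the `tool` decorator are hypothetical stand-ins defined here so the example runs on its own; they are not classes from any MCP SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

# Maps tool names to the functions that implement them.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(func):
    """Register a function under its own name."""
    TOOLS[func.__name__] = func
    return func


@dataclass
class ToolCall:
    tool_name: str
    input: dict = field(default_factory=dict)


@dataclass
class ToolResult:
    output: Any = None
    error: Optional[str] = None


@tool
def reimburse(request_id: str) -> dict:
    # Simplified: approves every request.
    return {"status": "approved", "request_id": request_id}


def handle_tool_call(call: ToolCall) -> ToolResult:
    """Look up the tool by name and run it with the supplied arguments."""
    func = TOOLS.get(call.tool_name)
    if func is None:
        return ToolResult(error=f"Unknown tool: {call.tool_name}")
    try:
        return ToolResult(output=func(**call.input))
    except TypeError as exc:
        # Wrong or missing arguments (also catches TypeErrors raised inside the tool).
        return ToolResult(error=f"Bad arguments: {exc}")


print(handle_tool_call(ToolCall("reimburse", {"request_id": "r1"})))
print(handle_tool_call(ToolCall("delete_everything")))
```

With a registry, adding a tool means decorating one more function, and the handler itself never changes.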

3. Launch the MCP Server

Finally, spin up the MCP server alongside the A2A server:

    mcp_server = MCPServer(
        tools=tool_definitions,
        handle_tool_call=handle_tool_call,
        host="0.0.0.0",
        port=8081,
    )
    mcp_server.run()

Why A2A and MCP Are Powerful Together

On their own, both protocols add value.

Together, they unlock the next generation of intelligent agent ecosystems.

Imagine this scenario:

- An agent manages job interviews using A2A and MCP.

- It discovers other agents like a resume parser, calendar scheduler, or interviewer assistant through A2A, using their Agent Cards to understand their capabilities.

- It accesses your internal company data like HR policies, org charts, or even your calendar via MCP, using standardized tools, prompts, and data sources.

- It invokes tools exposed by remote systems (e.g., ATS platforms or calendar APIs) through the MCP client-server structure, enabling secure, structured execution of real-world actions.

- It streams updates in real time to stakeholders (hiring managers, candidates, or other agents) as the workflow progresses.

The result?

Not a collection of disconnected bots, but a coordinated system of intelligent agents operating with context, awareness, and autonomy.

This is the shift from clever AI demos to real, production-grade multi-agent systems: dynamic, modular, and ready for the complexity of real-world work.
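
The interview scenario can be pictured as a small orchestration loop. The sketch below is a toy, in-process simulation: the agent registry, the step names, and the `run_interview_workflow` generator are all hypothetical, and real agents would be reached over A2A and MCP rather than called as local functions. A generator stands in for streaming, so each step's status is emitted as soon as that step finishes.

```python
from typing import Callable, Iterator

# Hypothetical agents keyed by the capability they advertise.
AGENTS: dict[str, Callable[[dict], dict]] = {
    "parse_resume": lambda ctx: {"candidate": ctx["resume"].split(",")[0]},
    "schedule_interview": lambda ctx: {"slot": "first free slot"},
}


def run_interview_workflow(context: dict, steps: list[str]) -> Iterator[str]:
    """Run each step with the agent that offers it, yielding status updates."""
    for step in steps:
        agent = AGENTS.get(step)
        if agent is None:
            yield f"{step}: skipped (no agent advertises this capability)"
            continue
        context.update(agent(context))  # merge the agent's result into shared context
        yield f"{step}: done"


ctx = {"resume": "Ada Lovelace, mathematician"}
for update in run_interview_workflow(ctx, ["parse_resume", "schedule_interview", "send_offer"]):
    print(update)
```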

Why Developers Should Pay Attention

Before A2A and MCP, multi-agent systems were often:

- Painful and time-consuming to build

- Dependent on fragile custom integrations

- Brittle across model updates and system changes

With A2A and MCP, developers gain a shared, standardized foundation that offers:

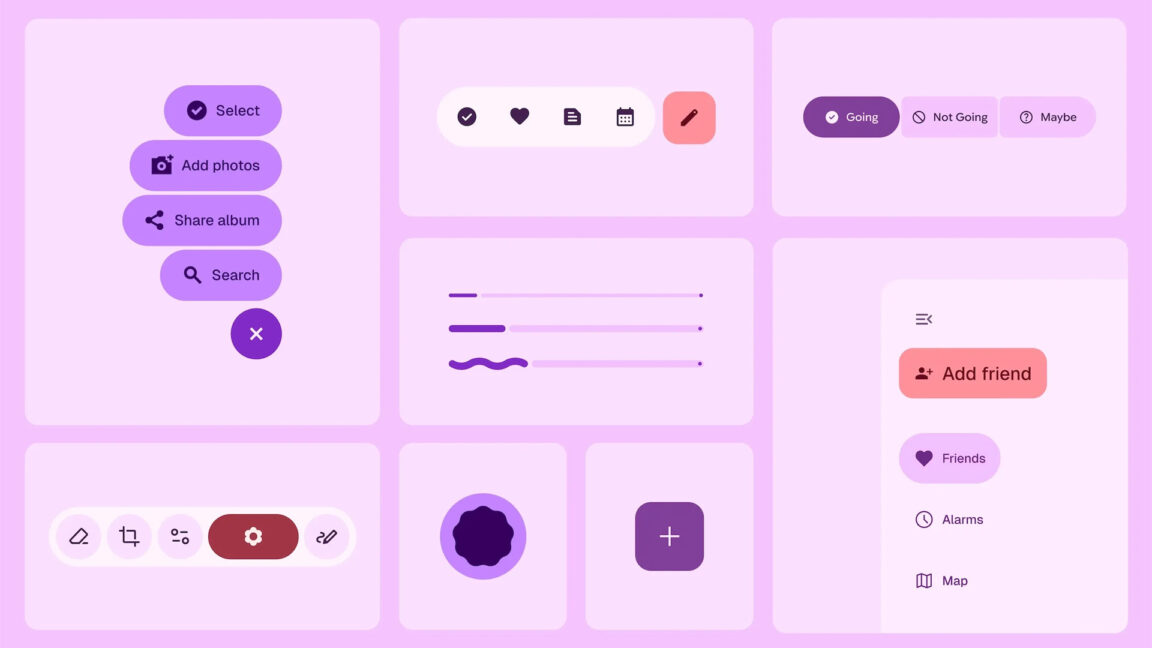

- Easier interoperability between agents from different vendors

- The emergence of agent marketplaces

- Dynamic, adaptive multi-agent workflows

- A truly modular approach to building AI systems

This marks a major step forward in composable, scalable AI architecture that is no longer tied to a single vendor or platform.

Final Thoughts

A2A and MCP are still early-stage protocols. Standards will continue to evolve, and adoption may take time.

However, the future direction is clear:

- Multi-agent AI needs common languages and protocols.

- Real-world context is critical for model success.

- Open, interoperable ecosystems will outperform closed, proprietary ones.

If you're building agentic AI today, bookmark A2A and MCP.

If you're observing the space, prepare for rapid innovation.

The next era of AI isn't about isolated genius models; it's about intelligent agents working together like dynamic, adaptable teams.

And the future is already taking shape.
