Starting up firstly.academy

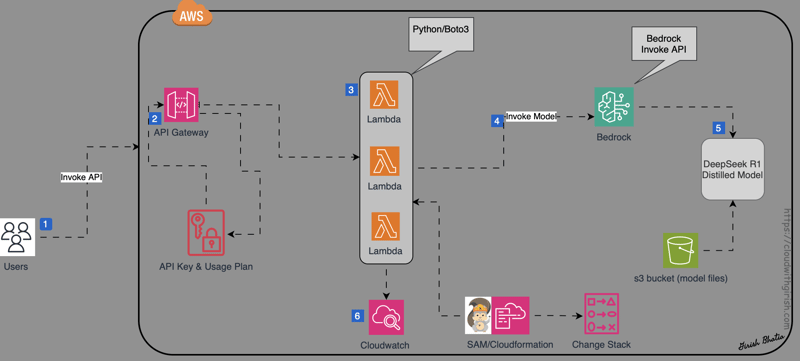

So, today was less about the big dream and more about actually getting stuff done. I've got this idea for an AI language platform, and with Gemini 2.5 and Cursor I started hammering it into shape – something real I can actually test. No more trying to do everything at once; we went lean and focused.

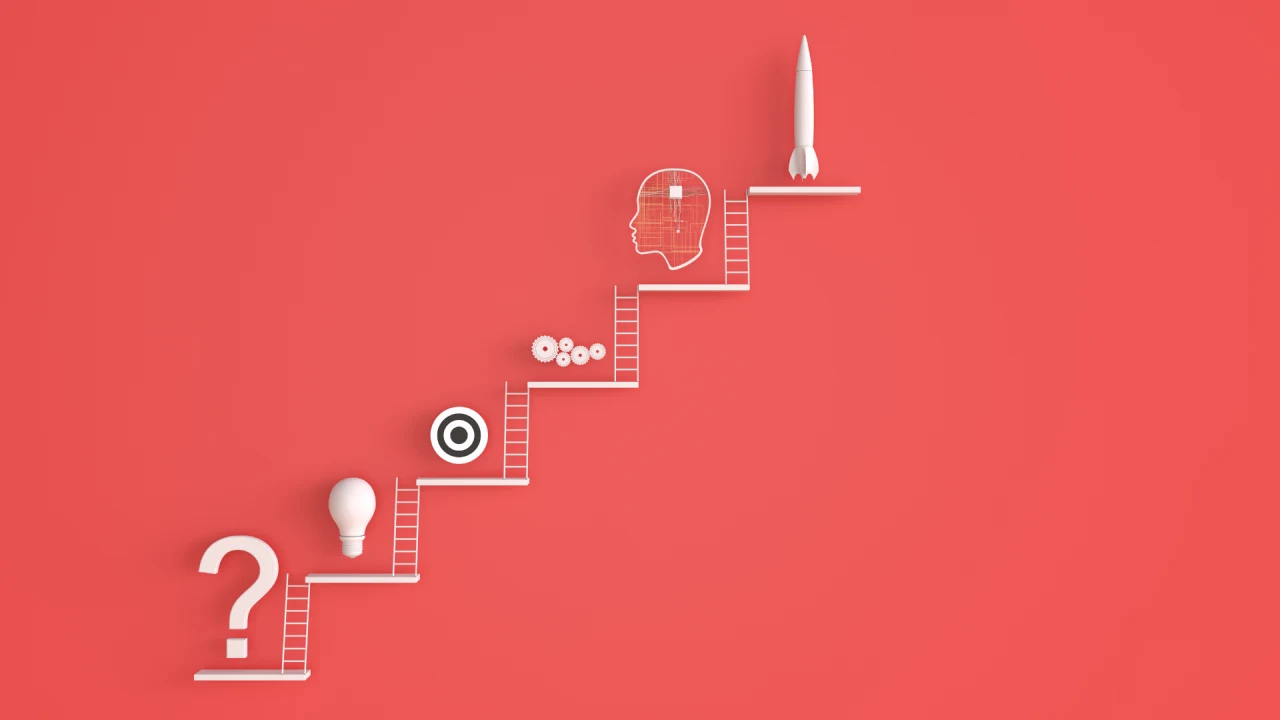

1. Finding the Starting Line

First thing, I ditched the vague "everyone learning languages" target. That just doesn't work. I narrowed it way down:

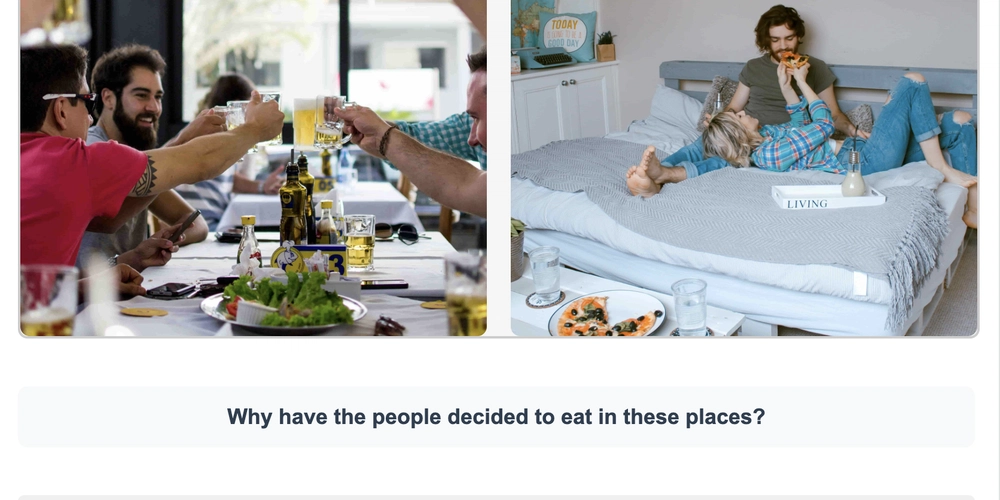

Who: FCE Exam takers, specifically teens (14-20). They have a clear goal (pass the test), and their parents are likely the ones paying. Much better.

Problem: They need good, targeted speaking practice and feedback, which is hard to get consistently.

First Product (V0.1): Cut everything non-essential. Forget the whole course for now. We're starting only with the FCE Speaking Part 2 AI tool. That's the MVP – the smallest thing I can build to see if the core idea even works.

2. Getting the Basics Sorted

Needed some groundwork:

The Name: Grabbed the firstly.academy domain. It fits the exam focus, sounds decent, and was cheap (€17!). Skipped all the extra .store, .world stuff they tried to sell me.

3. Teaching the AI Some Manners

This was the main event. I had recordings of real FCE exams with examiner comments – absolute gold.

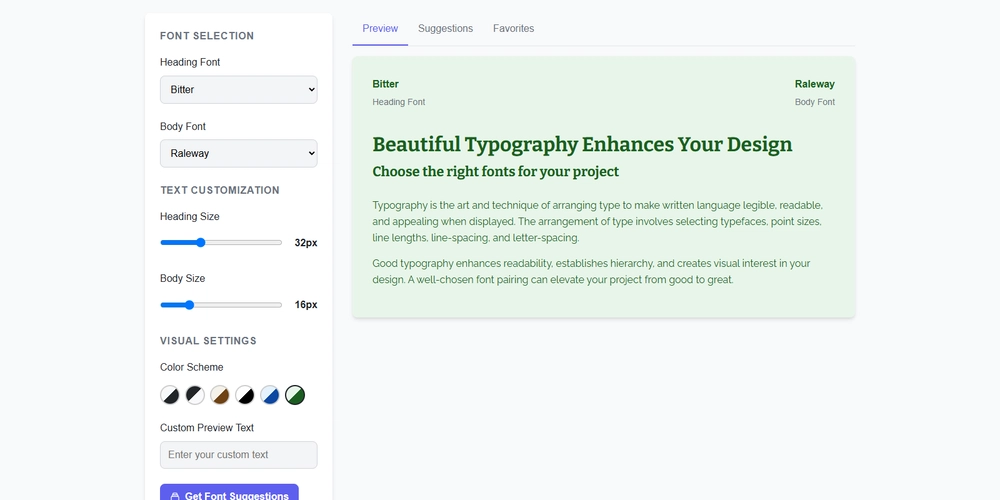

Organized It: Put the transcripts and comments into a structured format. I started with a Google Doc and considered JSON, which will be good for later, but kept it simple for now.
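For when the Google Doc gets outgrown, here is a minimal sketch of what one record could look like as JSON. The post doesn't describe a schema, so the `ExamSample` class, its field names, and the placeholder values are all assumptions for illustration.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ExamSample:
    """One real exam performance plus the examiner's comments (hypothetical schema)."""
    sample_id: str
    exam_part: str          # e.g. "FCE Speaking Part 2"
    transcript: str         # what the candidate said
    examiner_comments: str  # the human examiner's written remarks


def to_json(samples: list[ExamSample]) -> str:
    """Serialize a list of samples to a JSON array string."""
    return json.dumps([asdict(s) for s in samples], ensure_ascii=False, indent=2)


def from_json(text: str) -> list[ExamSample]:
    """Load samples back from a JSON array string."""
    return [ExamSample(**item) for item in json.loads(text)]


# Placeholder content only, not real exam data.
samples = [
    ExamSample(
        sample_id="sample-001",
        exam_part="FCE Speaking Part 2",
        transcript="<candidate transcript goes here>",
        examiner_comments="<examiner comments go here>",
    )
]
round_tripped = from_json(to_json(samples))
```

Keeping transcript and comments side by side in one record would make it easy to feed the same examples to the AI later and compare its feedback with the human examiner's.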

Tested the AI: Ran the real examples through my current AI tool. The verdict? Way too strict compared to the human examiners.

Made it Smarter (Iterated):

Figured out why it was too strict by looking at how lenient B2 examiners actually are with minor mistakes.

Tweaked the AI's instructions (the prompt): Told it to act like a B2 examiner, focus on task completion and clarity, and tolerate typical B2 errors. Added a couple of real examples directly into the prompt to show it what I meant.

Made a key decision: Got rid of the numerical scores. Scores based on a text transcript alone weren't reliable (pronunciation especially can't be judged from text), and good written feedback is more valuable right now.

Added a new feature: An "Examiner Summary" paragraph at the end to give a quick overview.

Final touch: Made the summary address the student directly ("You did this well..." instead of "The candidate did...") to make it feel more personal.
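The prompt changes above (examiner persona, tolerance for B2-level errors, real examples in the prompt, no scores, a second-person summary) can be sketched roughly like this. The post doesn't quote the actual prompt, so the wording of `SYSTEM_INSTRUCTIONS` and the `build_prompt` helper are hypothetical.

```python
from typing import List, Tuple

# Hypothetical wording; the real prompt is not shown in the post.
SYSTEM_INSTRUCTIONS = (
    "You are a B2-level examiner for FCE Speaking Part 2.\n"
    "Focus on task completion and clarity, and tolerate the minor errors "
    "that are typical at B2.\n"
    "Do not give numerical scores.\n"
    "Finish with a short 'Examiner Summary' paragraph that addresses the "
    "student directly as 'you'."
)


def build_prompt(transcript: str, examples: List[Tuple[str, str]]) -> str:
    """Assemble instructions, few-shot examples, and the new transcript.

    Each example is a (transcript, examiner_comment) pair taken from
    real exam material.
    """
    parts = [SYSTEM_INSTRUCTIONS, ""]
    for i, (example_transcript, examiner_comment) in enumerate(examples, start=1):
        parts.append(f"Example {i} transcript:\n{example_transcript}")
        parts.append(f"Example {i} examiner comment:\n{examiner_comment}")
        parts.append("")
    parts.append(f"Student transcript:\n{transcript}")
    parts.append("Feedback:")
    return "\n".join(parts)
```

The resulting string would then go to whichever model API the backend calls. Putting the real examiner comments right next to their transcripts is what shows the model how lenient a B2 examiner actually is.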

Fixed Bugs: Had to squash some backend (TypeError, ValidationError) and frontend (undefined variable) bugs that popped up after changing the data structure (removing scores, adding the summary). Eventually tracked them down to two things: the data model definition (FCEAnalysisResponse) in the backend needed updating, and a stubborn browser cache needed clearing.
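To illustrate why changing the data structure breaks things until the model definition catches up, here is a minimal sketch using only the standard library. Only the class name `FCEAnalysisResponse` comes from the post. The field names, the `parse_response` helper, and the use of dataclasses are assumptions (the ValidationError hints at a validation library in the real backend, but the post doesn't say which).

```python
from dataclasses import dataclass, fields
from typing import Any, Dict


@dataclass
class FCEAnalysisResponse:
    """Response shape after the change: no scores, summary added.

    Field names are hypothetical.
    """
    feedback: str
    examiner_summary: str


def parse_response(payload: Dict[str, Any]) -> FCEAnalysisResponse:
    """Build the model from a dict, rejecting missing or unexpected keys."""
    expected = {f.name for f in fields(FCEAnalysisResponse)}
    received = set(payload)
    missing = expected - received
    unexpected = received - expected
    if missing or unexpected:
        raise ValueError(
            f"missing={sorted(missing)} unexpected={sorted(unexpected)}"
        )
    return FCEAnalysisResponse(**payload)
```

A payload in the old shape (with a `scores` key and no summary) fails loudly here, which is the same kind of mismatch that shows up as validation errors on the backend and undefined variables on the frontend.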

4. Where I'm At Now

So, after all that, the V0.1 feature is actually working! The AI gives feedback on Speaking Part 2, it includes the summary, it's less harsh, it addresses the student directly, and it shows up correctly on the webpage.

Is it the final perfect version? Definitely not. But is it ready for the most important step? Yes.

Now it's all about validation. I need to get this in front of those actual students and parents next week. I'll demo this feedback and ask straight up: "Honestly, is this helpful enough that you'd pay something small, like €5 a month, for it?"

The technical side feels pretty much ready for this first test (just waiting on the SSL setup to fully sort itself out). Time to brace for some real user feedback.
