Supercharge Read Performance: 397x Faster Database Queries Through Denormalization

When working with relational databases, we often encounter situations where we need to count related records in a table. First, let's consider the following tables: CREATE TABLE orders ( order_id SERIAL PRIMARY KEY, order_date DATE, customer VARCHAR(100) ); CREATE TABLE order_items ( item_id SERIAL PRIMARY KEY, order_id INT, product VARCHAR(100), quantity INT, FOREIGN KEY (order_id) REFERENCES orders(order_id) ); We have an orders table and want to count how many items each order contains. A common approach would be to use a COUNT subquery: SELECT o.order_id,(SELECT COUNT(*) FROM order_items oi WHERE oi.order_id = o.order_id ) AS total_items FROM orders o; This query works, but can become inefficient in scenarios with large data volumes as the subquery executes for each row in the orders table. Enter Denormalization! Denormalization in databases is the process of introducing redundancy to a previously normalized database, aiming to improve performance for read operations (queries). It involves adding duplicated data or combining tables to reduce the number of required operations (joins, counts...), which can speed up information retrieval in systems where reads are more frequent than writes. Now that we understand denormalization, how can we simplify our query? Instead of counting items every time we execute the query, we can add a total_items field directly to the orders table. Whenever an item is added or removed, we increment or decrement this field. Add the total_items column to the orders table: ALTER TABLE orders ADD COLUMN total_items INT DEFAULT 0; Update the total_items field whenever items are added/removed Your query becomes simpler and more efficient: SELECT order_id, total_items FROM orders; Let's insert sample data to validate our proposal using this function: CREATE OR REPLACE FUNCTION generate_sample_data() RETURNS VOID AS $$ DECLARE order_count INT := 200; items_per_order INT := 10; current_order_id INT; random_customer VARCHAR(100); random_product VARCHAR(100); random_quantity INT; BEGIN FOR i IN 1..order_count LOOP random_customer := 'Customer ' || (floor(random() * 1000)::INT); INSERT INTO orders (order_date, customer, total_items) VALUES (current_date - (floor(random() * 365)::INT), random_customer, 10) RETURNING order_id INTO current_order_id; FOR j IN 1..items_per_order LOOP random_product := 'Product ' || (floor(random() * 100)::INT); random_quantity := (floor(random() * 10)::INT + 1; INSERT INTO order_items (order_id, product, quantity) VALUES (current_order_id, random_product, random_quantity); END LOOP; END LOOP; END; $$ LANGUAGE plpgsql; SELECT generate_sample_data(); This script creates 200 records in orders and 10 corresponding records in order_items for each order. To compare performance, we'll execute both queries with EXPLAIN ANALYZE: Using subquery: Execution time: 28.983ms Using denormalized field: Execution time: 0.073ms (397x faster). Note this test used only 200 order records - performance gains become more significant as data volume increases. Advantages: Performance: Avoids repetitive subquery execution Simplicity: Cleaner main query structure Control: Direct management of counter values Considerations: Consistency: Must ensure total_items always reflects accurate counts Concurrency: Requires atomic updates in high-concurrency systems Hope you found this content useful!

When working with relational databases, we often encounter situations where we need to count related records in a table.

First, let's consider the following tables:

CREATE TABLE orders (

order_id SERIAL PRIMARY KEY,

order_date DATE,

customer VARCHAR(100)

);

CREATE TABLE order_items (

item_id SERIAL PRIMARY KEY,

order_id INT,

product VARCHAR(100),

quantity INT,

FOREIGN KEY (order_id) REFERENCES orders(order_id)

);

We have an orders table and want to count how many items each order contains. A common approach would be to use a COUNT subquery:

SELECT

o.order_id,(SELECT

COUNT(*) FROM order_items oi

WHERE oi.order_id = o.order_id

) AS total_items

FROM

orders o;

This query works, but can become inefficient in scenarios with large data volumes as the subquery executes for each row in the orders table.

Enter Denormalization!

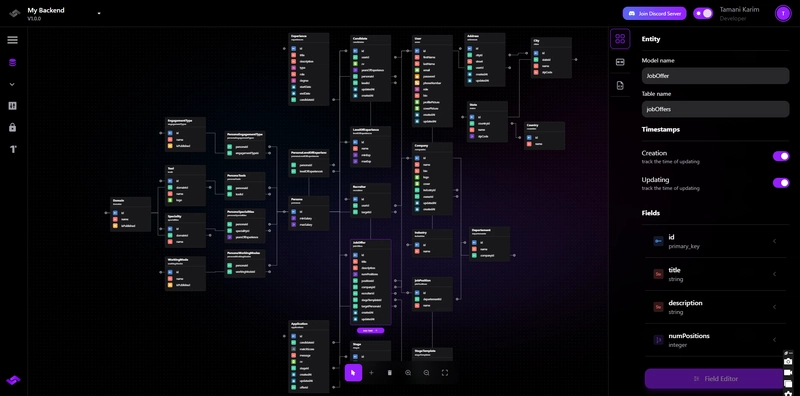

Denormalization in databases is the process of introducing redundancy to a previously normalized database, aiming to improve performance for read operations (queries). It involves adding duplicated data or combining tables to reduce the number of required operations (joins, counts...), which can speed up information retrieval in systems where reads are more frequent than writes.

Now that we understand denormalization, how can we simplify our query?

Instead of counting items every time we execute the query, we can add a total_items field directly to the orders table. Whenever an item is added or removed, we increment or decrement this field.

- Add the

total_itemscolumn to theorderstable:

ALTER TABLE orders ADD COLUMN total_items INT DEFAULT 0;

- Update the

total_itemsfield whenever items are added/removed - Your query becomes simpler and more efficient:

SELECT

order_id,

total_items

FROM

orders;

Let's insert sample data to validate our proposal using this function:

CREATE OR REPLACE FUNCTION generate_sample_data()

RETURNS VOID AS $$

DECLARE

order_count INT := 200;

items_per_order INT := 10;

current_order_id INT;

random_customer VARCHAR(100);

random_product VARCHAR(100);

random_quantity INT;

BEGIN

FOR i IN 1..order_count LOOP

random_customer := 'Customer ' || (floor(random() * 1000)::INT);

INSERT INTO orders (order_date, customer, total_items)

VALUES (current_date - (floor(random() * 365)::INT), random_customer, 10)

RETURNING order_id INTO current_order_id;

FOR j IN 1..items_per_order LOOP

random_product := 'Product ' || (floor(random() * 100)::INT);

random_quantity := (floor(random() * 10)::INT + 1;

INSERT INTO order_items (order_id, product, quantity)

VALUES (current_order_id, random_product, random_quantity);

END LOOP;

END LOOP;

END;

$$ LANGUAGE plpgsql;

SELECT generate_sample_data();

This script creates 200 records in orders and 10 corresponding records in order_items for each order.

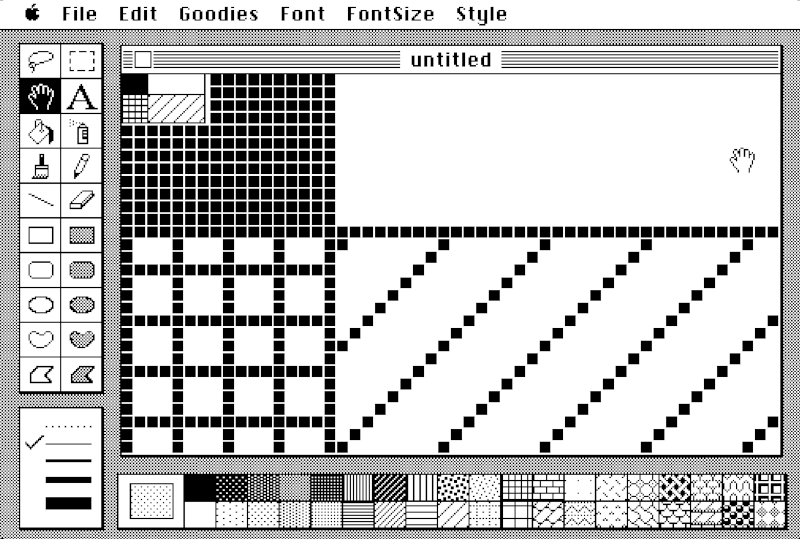

To compare performance, we'll execute both queries with EXPLAIN ANALYZE:

Using subquery:

Using denormalized field:

Execution time: 0.073ms (397x faster). Note this test used only 200 order records - performance gains become more significant as data volume increases.

Advantages:

Performance: Avoids repetitive subquery execution

Simplicity: Cleaner main query structure

Control: Direct management of counter values

Considerations:

Consistency: Must ensure total_items always reflects accurate counts

Concurrency: Requires atomic updates in high-concurrency systems

Hope you found this content useful!

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[FREE EBOOKS] The Kubernetes Bible, The Ultimate Linux Shell Scripting Guide & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

.jpg?#)

_Christophe_Coat_Alamy.jpg?#)

![Rapidus in Talks With Apple as It Accelerates Toward 2nm Chip Production [Report]](https://www.iclarified.com/images/news/96937/96937/96937-640.jpg)