/start: Your Data Engineering Project Launchpad

Data engineering projects can feel overwhelming at the beginning. Where do you even start? This post aims to be your /start command, providing a roadmap and actionable steps to kick off your data engineering initiatives.

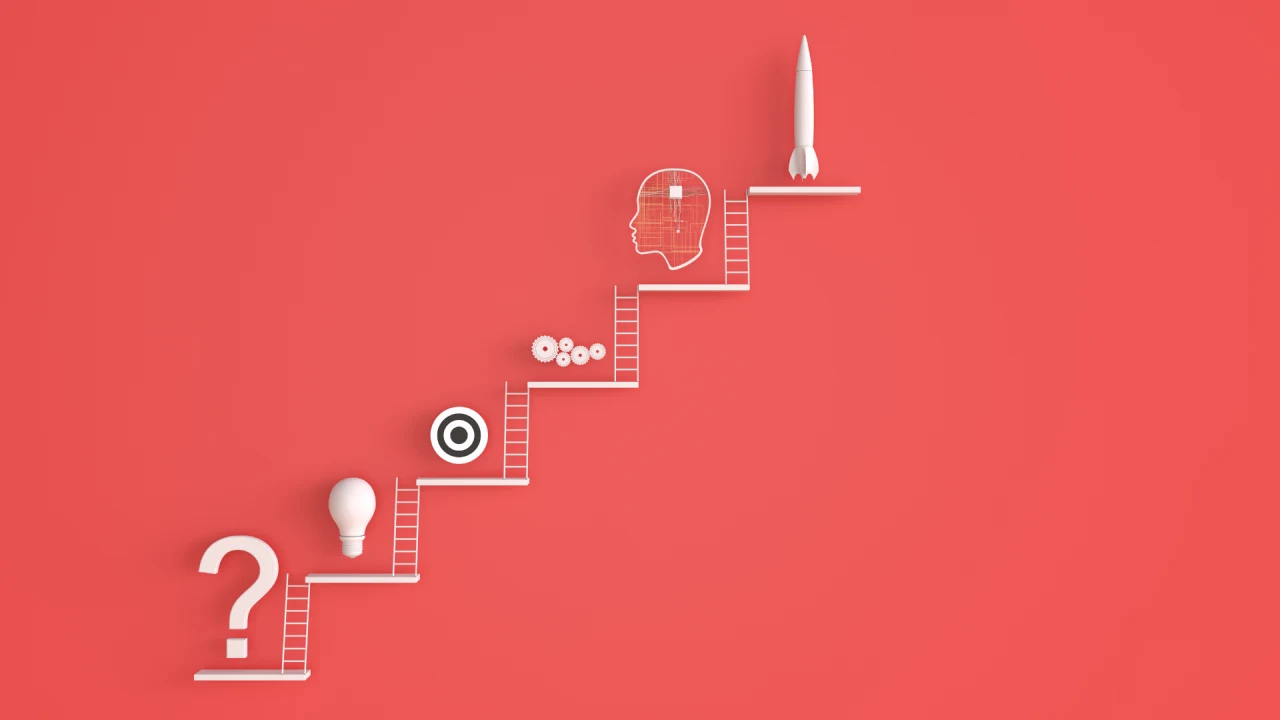

The Initial Spark: Defining the 'Why'

Before diving into tools and technologies, pin down the why behind your project. Ask yourself:

- What problem are we solving? Be specific. Instead of "improving data quality," aim for "reducing data errors in the customer onboarding process to decrease churn."

- What are the business goals? How will this project impact revenue, efficiency, or customer satisfaction? Quantify the benefits whenever possible.

- Who are the stakeholders? Identify the users of the data and their needs. Engage them early and often for feedback.

Answering these questions will help you define the project scope, prioritize tasks, and measure success.

Data Source Discovery and Profiling

The next step involves identifying and understanding your data sources. This includes:

- Cataloging existing data: Document all available data sources, including databases, APIs, files, and streams. Create a data catalog to make this information easily accessible.

- Profiling data: Analyze the data to understand its structure, quality, and completeness. Use data profiling tools to identify anomalies, missing values, and inconsistencies.

- Data lineage: Trace the origin and transformation of data as it moves through your systems. This is crucial for debugging and ensuring data quality.
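As a rough illustration of the profiling step, here is a minimal sketch in plain Python that reports missing values, distinct counts, and observed types per column. The `profile_rows` helper and the sample records are hypothetical, invented for this example. Treating empty strings as missing is an assumption you may want to change for your own data.

```python
from collections import Counter


def profile_rows(rows):
    """Return per-column stats: row count, missing count, distinct count, observed types."""
    columns = sorted({key for row in rows for key in row})
    profile = {}
    for col in columns:
        # row.get() returns None when a record lacks the column entirely.
        values = [row.get(col) for row in rows]
        # Assumption: None and blank strings both count as missing.
        present = [
            v for v in values
            if v is not None and not (isinstance(v, str) and v.strip() == "")
        ]
        profile[col] = {
            "rows": len(values),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return profile


# Hypothetical sample records, invented for illustration.
sample = [
    {"customer_id": 1, "email": "a@example.com", "signup_date": "2024-01-05"},
    {"customer_id": 2, "email": "", "signup_date": "2024-01-06"},
    {"customer_id": 2, "email": "b@example.com", "signup_date": None},
    {"customer_id": "4", "email": "c@example.com"},
]

report = profile_rows(sample)
for column, stats in report.items():
    print(column, stats)
```

Even on four records, the report surfaces three typical issues: a duplicated `customer_id`, mixed integer and string IDs, and missing emails and signup dates. Dedicated profiling tools go much further, but the questions they answer are the same.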

Consider these questions during this phase:

- What types of data are available (structured, semi-structured, unstructured)?

- How frequently is the data updated?

- What are the data quality issues?

- What are the security and compliance requirements?

Architecture Design: Building the Foundation

With a clear understanding of the data and requirements, you can design your data architecture. Key considerations include:

- Data ingestion: How will you move data from source systems to your data platform? Options include batch processing, real-time streaming, and change data capture (CDC).

- Data storage: Where will you store the data? Consider options like data lakes, data warehouses, and data lakehouses.

- Data processing: How will you transform and clean the data? Choose appropriate tools for ETL/ELT, data quality checks, and data enrichment.

- Data access: How will users access the data? Implement data governance policies and security measures to ensure data privacy and compliance.
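To make the ingestion options more concrete, here is a minimal sketch of incremental batch ingestion driven by a high-water mark. It is a simpler cousin of log-based CDC, not CDC itself. The `customers` table, its columns, and the `ingest_incremental` helper are assumptions made up for this example, and in-memory SQLite stands in for real source and target systems.

```python
import sqlite3


def ingest_incremental(source, target, last_watermark):
    """Copy rows changed after last_watermark and return the new watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # INSERT OR REPLACE keys on the primary key, so re-running a batch
    # overwrites existing rows instead of duplicating them.
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    target.commit()
    # Rows are ordered by updated_at, so the last one carries the new watermark.
    return rows[-1][2] if rows else last_watermark


# Hypothetical source and target databases for illustration.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )

source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01T00:00:00"), (2, "Grace", "2024-01-02T00:00:00")],
)
source.commit()
watermark = ingest_incremental(source, target, "1970-01-01T00:00:00")

# A later change in the source: only this row is picked up on the next run.
source.execute(
    "UPDATE customers SET name = 'Ada L.', updated_at = '2024-01-03T00:00:00' WHERE id = 1"
)
source.commit()
watermark = ingest_incremental(source, target, watermark)

final_rows = target.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
print(final_rows, watermark)
```

This approach depends on a reliable `updated_at` column and cannot see deleted rows. Those two gaps are among the main reasons teams move to log-based CDC.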

Common architectural patterns include:

- Lambda architecture: Combines batch processing for accuracy with stream processing for low latency.

- Kappa architecture: Relies solely on stream processing for both real-time and historical analysis, reprocessing history by replaying the event log.

- Data lakehouse architecture: Combines the low-cost, open-format storage of a data lake with the transactional guarantees and query performance of a data warehouse.
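For intuition about the Lambda pattern, here is a toy sketch of its three layers counting page views. The event data, the `cutoff` timestamp, and the function names are all hypothetical. A real speed layer would update its counts incrementally from a stream rather than filtering a list.

```python
from collections import Counter


def batch_view(events, cutoff):
    """Batch layer: recompute counts from all events up to the last batch run."""
    return Counter(e["page"] for e in events if e["ts"] <= cutoff)


def speed_view(events, cutoff):
    """Speed layer: count only events that arrived after the last batch run."""
    return Counter(e["page"] for e in events if e["ts"] > cutoff)


def serve(batch, speed):
    """Serving layer: merge both views to answer a query."""
    return dict(batch + speed)


# Hypothetical page-view events; ts is a simple integer timestamp.
events = [
    {"page": "/home", "ts": 1},
    {"page": "/pricing", "ts": 2},
    {"page": "/home", "ts": 3},
    {"page": "/home", "ts": 5},
]
cutoff = 3  # Assumed time of the most recent batch run.

merged = serve(batch_view(events, cutoff), speed_view(events, cutoff))
print(merged)
```

A Kappa design would drop `batch_view` and keep a single streaming path, replaying the event log whenever the logic changes.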

Technology Selection: Choosing the Right Tools

The data engineering landscape is constantly evolving, with a plethora of tools and technologies available. Some popular choices include:

- Cloud platforms: AWS, Azure, and GCP each provide a wide range of managed data engineering services.

- Data ingestion: Apache Kafka, Apache NiFi, Airbyte, Fivetran.

- Data storage: Apache Hadoop (HDFS), Snowflake, Amazon Redshift, Google BigQuery.

- Data processing: Apache Spark, Apache Beam, dbt (data build tool).

- Orchestration: Apache Airflow, Prefect, Dagster.

When selecting tools, consider:

- Scalability: Can the tool handle your current and future data volumes?

- Cost: What are the licensing fees and infrastructure costs?

- Ease of use: How easy is it to learn and use the tool?

- Integration: Does the tool integrate well with your existing systems?

- Community support: Is there a large and active community for the tool?
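One way to compare candidates against these criteria is a weighted scoring matrix. The sketch below is only an illustration: the weights, the 1-to-5 ratings, and the names `tool_a` and `tool_b` are invented, and your own weights should reflect your project's priorities.

```python
# Hypothetical weights (they sum to 100) for the five criteria above.
WEIGHTS = {
    "scalability": 30,
    "cost": 25,
    "ease_of_use": 15,
    "integration": 20,
    "community": 10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Return the weighted average of 1-5 ratings across all criteria."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total = sum(weights[c] * ratings[c] for c in weights)
    return total / sum(weights.values())


# Invented ratings for two placeholder tools.
candidates = {
    "tool_a": {"scalability": 5, "cost": 2, "ease_of_use": 3, "integration": 4, "community": 5},
    "tool_b": {"scalability": 3, "cost": 5, "ease_of_use": 4, "integration": 3, "community": 3},
}

scores = {name: weighted_score(r) for name, r in candidates.items()}
ranking = sorted(scores.items(), key=lambda item: item[1], reverse=True)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

The numbers matter less than the conversation they force: agreeing on weights with stakeholders up front makes the final choice easier to defend.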

Implementation and Testing: Putting It All Together

With your architecture and tools in place, you can start implementing your data pipelines. Key steps include:

- Developing ETL/ELT pipelines: Write code to extract, transform, and load data.

- Implementing data quality checks: Validate accuracy and completeness with automated checks, such as schema, null, range, and uniqueness constraints.

- Testing pipelines: Thoroughly test your pipelines to identify and fix errors.

- Automating deployments: Use CI/CD pipelines to automate the deployment of your code.
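The first three steps can be sketched together as a tiny extract-transform-load pipeline with a built-in quality gate. Everything here is an assumption made for illustration: the CSV layout, the validation rules, and the in-memory list standing in for a warehouse.

```python
import csv
import io


def extract(csv_text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: normalize emails, cast amounts, and route bad rows to rejects."""
    clean, rejected = [], []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        try:
            amount = float(row.get("amount") or "")
        except ValueError:
            amount = None
        # Quality checks (assumed rules): plausible email, non-negative numeric amount.
        if "@" not in email or amount is None or amount < 0:
            rejected.append(row)
        else:
            clean.append({"email": email, "amount": amount})
    return clean, rejected


def load(rows, sink):
    """Load: append rows to the sink and return how many were written."""
    sink.extend(rows)
    return len(rows)


# Hypothetical raw input with one good row and three bad ones.
RAW = (
    "email,amount\n"
    "Ada@Example.com ,10.50\n"
    "bad-email,5\n"
    "grace@example.com,-3\n"
    "lin@example.com,abc\n"
)

warehouse = []  # Stand-in for a real target table.
clean, rejected = transform(extract(RAW))
loaded = load(clean, warehouse)
print(f"loaded={loaded} rejected={len(rejected)}")
```

Keeping each stage a plain function makes the pipeline easy to unit test in CI: feed in known input and assert on the clean and rejected outputs before anything is deployed.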

Monitoring and Maintenance: Keeping It Running Smoothly

Once your data pipelines are in production, it's essential to monitor their performance and maintain them over time. This includes:

- Monitoring data quality: Track data quality metrics to identify anomalies.

- Monitoring pipeline performance: Track run duration, throughput, and failure rates to confirm pipelines are running efficiently.

- Troubleshooting errors: Quickly diagnose and fix any issues.

- Updating code: Keep your code and its dependencies up to date with the latest security patches and bug fixes.
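As a simple example of data quality monitoring, the sketch below flags a daily row count that deviates sharply from recent history using a z-score. The `is_anomalous` helper, the threshold of 3, and the sample counts are assumptions. Production setups usually also account for seasonality and trends.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Return True if latest is more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # Not enough history to estimate spread.
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # All past values identical: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts from recent pipeline runs.
history = [1000, 1020, 990, 1010, 1005]

for todays_count in (1015, 400):
    status = "ANOMALY" if is_anomalous(history, todays_count) else "ok"
    print(todays_count, status)
```

A check like this can run as the last task of a pipeline and raise an alert, so that a silent upstream failure shows up as an alarm rather than as a confusing dashboard a week later.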

Conclusion

Launching a data engineering project requires careful planning and execution. By following these steps, you can set your project up for success and unlock the value of your data. Remember to start with the why, understand your data, design a robust architecture, choose the right tools, and continuously monitor and maintain your pipelines. Good luck with your /start!
