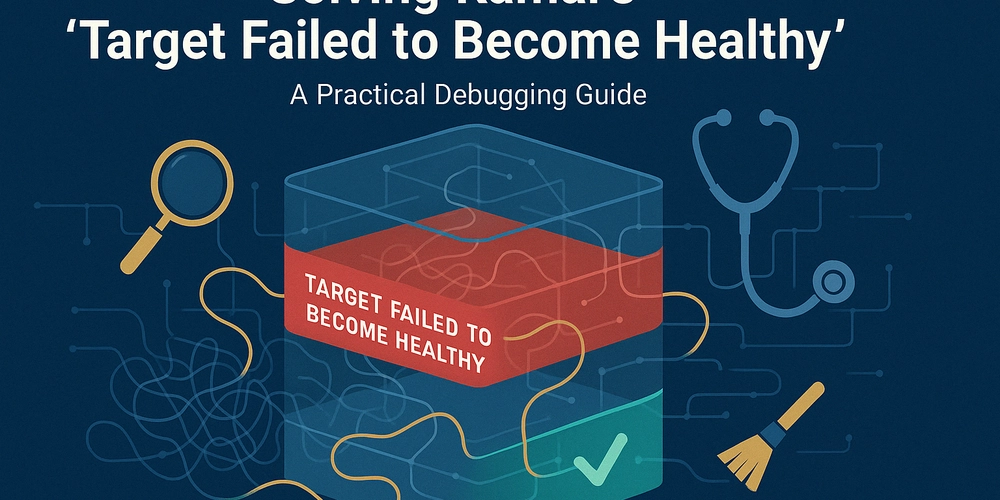

Solving Kamal's "Target Failed to Become Healthy" Error: A Practical Debugging Guide

The Silent Deployment Killer Deployment should be the triumphant final step in your development cycle. You've built your application, tested it thoroughly, and you're ready to share it with the world. You've chosen Kamal as your deployment tool because it provides Docker-based simplicity without Kubernetes complexity. Then suddenly, your deployment grinds to a halt with this message: ERROR Failed to boot web on xxx.xxx.x.xx INFO First web container is unhealthy, not booting any other roles ERROR docker exit status: 1 docker stdout: Nothing written docker stderr: Error: target failed to become healthy This seemingly opaque error—what I call a "silent killer"—can derail your deployment momentum and leave you searching for answers in a sea of logs and configuration files. Why This Error Is So Deceptively Complex What makes this error particularly challenging is its deceptive simplicity. It tells you something is wrong, but offers little insight into what exactly failed. Let's unpack what's happening beneath the surface: Your container builds successfully (so your code itself is valid) The container starts on your server (so your server environment is configured) Kamal's health check system attempts to verify your application is running The health check fails, but the reason remains hidden It's like having your car stop in the middle of the road with only a generic "engine problem" warning—frustrating and uninformative. Anatomizing the Health Check Process To understand the root causes, we need to understand Kamal's health check mechanism. When deploying, Kamal: Starts your container Makes HTTP requests to the /up endpoint Expects a 200 OK response within a configured timeout period Proceeds with deployment only if these checks succeed This seemingly simple process can break in multiple subtle ways. Looking at GitHub issue #1041, we find a revealing clue in the logs: ERROR 2024-10-03T10:12:10.047581359Z {"time":"2024-10-03T10:12:10.047492864Z","level":"INFO","msg":"Unable to proxy request","path":"/up","error":"dial tcp 127.0.0.1:3000: connect: connection refused"} This error reveals the health check can't even reach your application—but why? Five Common Failure Patterns and Their Solutions After analyzing dozens of deployment issues with this error, I've identified five distinct failure patterns. Each requires a different approach to solve: 1. SSL Configuration Conflicts Pattern: Health check returns 301 redirects instead of 200 OK What's happening: Rails applications with force_ssl enabled automatically redirect HTTP requests to HTTPS. However, Kamal's health check uses HTTP, causing a redirect loop. Solution: Add this to your config/environments/production.rb: # Skip http-to-https redirect for the health check endpoint config.ssl_options = { redirect: { exclude: ->(request) { request.path == "/up" } } } This creates a targeted exception that allows health check requests while keeping SSL protection for all other routes. 2. Port Mismatch Configuration Pattern: Logs show "connection refused" errors What's happening: Kamal's proxy tries to communicate with your application on a port different from where your application is actually listening. Solution: Ensure port configuration consistency across three places: In your Dockerfile: EXPOSE 3000 (or your chosen port) In your deploy.yml: Add app_port: 3000 under the proxy section In your application startup command: Listen on 0.0.0.0:port (not just localhost) Example deploy.yml configuration: proxy: ssl: true host: myapp.example.com app_port: 3000 # Must match your application's listening port 3. Host Authorization Barriers Pattern: Application logs show "Blocked host" errors What's happening: Rails 7+ includes a host authorization system that blocks requests from unexpected hostnames. Kamal's health check may use an IP address or hostname that doesn't match your configured allowed hosts. Solution: Add an exception for the health check path in config/environments/production.rb: # Allow health check endpoint without host verification config.host_authorization = { exclude: ->(request) { request.path == "/up" } } 4. Resource Constraints Pattern: Slow SSH connection, deployment sometimes works but often fails What's happening: During blue-green deployment, Kamal runs two containers simultaneously. On under-provisioned servers, this can exhaust available resources. Solution: Either upgrade your server or modify your deployment strategy: Increase server RAM/CPU (recommended for production) Add swap space as a temporary measure: sudo fallocate -l 1G /swapfile sudo chmod 600 /swapfile sudo mkswap /swapfile sudo swapon /swapfile Restart your server before deployment to clear memory 5. Application Initialization Failures Pattern: The container starts but your application crashes

The Silent Deployment Killer

Deployment should be the triumphant final step in your development cycle. You've built your application, tested it thoroughly, and you're ready to share it with the world. You've chosen Kamal as your deployment tool because it provides Docker-based simplicity without Kubernetes complexity.

Then suddenly, your deployment grinds to a halt with this message:

ERROR Failed to boot web on xxx.xxx.x.xx

INFO First web container is unhealthy, not booting any other roles

ERROR docker exit status: 1

docker stdout: Nothing written

docker stderr: Error: target failed to become healthy

This seemingly opaque error—what I call a "silent killer"—can derail your deployment momentum and leave you searching for answers in a sea of logs and configuration files.

Why This Error Is So Deceptively Complex

What makes this error particularly challenging is its deceptive simplicity. It tells you something is wrong, but offers little insight into what exactly failed. Let's unpack what's happening beneath the surface:

- Your container builds successfully (so your code itself is valid)

- The container starts on your server (so your server environment is configured)

- Kamal's health check system attempts to verify your application is running

- The health check fails, but the reason remains hidden

It's like having your car stop in the middle of the road with only a generic "engine problem" warning—frustrating and uninformative.

Anatomizing the Health Check Process

To understand the root causes, we need to understand Kamal's health check mechanism. When deploying, Kamal:

- Starts your container

- Makes HTTP requests to the

/upendpoint - Expects a 200 OK response within a configured timeout period

- Proceeds with deployment only if these checks succeed

This seemingly simple process can break in multiple subtle ways. Looking at GitHub issue #1041, we find a revealing clue in the logs:

ERROR 2024-10-03T10:12:10.047581359Z {"time":"2024-10-03T10:12:10.047492864Z","level":"INFO","msg":"Unable to proxy request","path":"/up","error":"dial tcp 127.0.0.1:3000: connect: connection refused"}

This error reveals the health check can't even reach your application—but why?

Five Common Failure Patterns and Their Solutions

After analyzing dozens of deployment issues with this error, I've identified five distinct failure patterns. Each requires a different approach to solve:

1. SSL Configuration Conflicts

Pattern: Health check returns 301 redirects instead of 200 OK

What's happening: Rails applications with force_ssl enabled automatically redirect HTTP requests to HTTPS. However, Kamal's health check uses HTTP, causing a redirect loop.

Solution: Add this to your config/environments/production.rb:

# Skip http-to-https redirect for the health check endpoint

config.ssl_options = { redirect: { exclude: ->(request) { request.path == "/up" } } }

This creates a targeted exception that allows health check requests while keeping SSL protection for all other routes.

2. Port Mismatch Configuration

Pattern: Logs show "connection refused" errors

What's happening: Kamal's proxy tries to communicate with your application on a port different from where your application is actually listening.

Solution: Ensure port configuration consistency across three places:

- In your Dockerfile:

EXPOSE 3000(or your chosen port) - In your deploy.yml: Add

app_port: 3000under the proxy section - In your application startup command: Listen on 0.0.0.0:port (not just localhost)

Example deploy.yml configuration:

proxy:

ssl: true

host: myapp.example.com

app_port: 3000 # Must match your application's listening port

3. Host Authorization Barriers

Pattern: Application logs show "Blocked host" errors

What's happening: Rails 7+ includes a host authorization system that blocks requests from unexpected hostnames. Kamal's health check may use an IP address or hostname that doesn't match your configured allowed hosts.

Solution: Add an exception for the health check path in config/environments/production.rb:

# Allow health check endpoint without host verification

config.host_authorization = { exclude: ->(request) { request.path == "/up" } }

4. Resource Constraints

Pattern: Slow SSH connection, deployment sometimes works but often fails

What's happening: During blue-green deployment, Kamal runs two containers simultaneously. On under-provisioned servers, this can exhaust available resources.

Solution: Either upgrade your server or modify your deployment strategy:

- Increase server RAM/CPU (recommended for production)

- Add swap space as a temporary measure:

sudo fallocate -l 1G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

- Restart your server before deployment to clear memory

5. Application Initialization Failures

Pattern: The container starts but your application crashes during initialization

What's happening: Your application encounters an error during startup that isn't visible in standard Kamal output—perhaps a missing environment variable, database connection issue, or code error only triggered in production.

Solution: Run your container interactively to see the actual error:

# SSH into your server

ssh your-server

# Find the image ID

docker image ls

# Run it with environment variables to see the real error

docker run -it --network kamal --env-file .kamal/apps//env/roles/web.env

This will show you the actual error messages that are otherwise hidden.

A Systematic Debugging Approach

When encountering this error, follow this systematic approach:

1. Examine the Full Logs

kamal logs -f

Look for patterns in the logs—are you seeing connection refused errors? SSL redirects? Application errors?

2. Verify Network Connectivity

SSH into your server and check if basic network services are working:

# Test database connectivity

source .kamal/apps//env/roles/web.env

pg_isready -h $DB_HOST -p $DB_PORT -U $DB_USER

# Check if something else is using your port

sudo netstat -tulpn | grep 3000

3. Inspect Container Status

# See if containers are starting and stopping

docker ps -a

# Check resource usage

docker stats

4. Run the Container Interactively

# Get the image ID

docker image ls | grep your-app-name

# Run it with the environment variables

docker run -it --network kamal --env-file .kamal/apps//env/roles/web.env

This interactive approach often reveals issues that aren't visible in the logs.

5. Test the Health Check Endpoint Directly

# On your server

curl http://localhost:3000/up

If this fails while your container is running, you've found the issue.

Case Study: Multiple Layers of Failure

Consider a real deployment scenario where a Rails application migrated from Kamal 1.0 to 2.0. The deployment failed with our familiar error. Systematic debugging revealed:

- The app used

force_sslbut lacked the SSL endpoint exception - The database credentials worked in development but needed specific IP whitelisting in production

- An initializer had a subtle dependency on an environment variable that wasn't set

By methodically addressing each layer of the problem—fixing the SSL configuration, updating database access controls, and adding the missing environment variable—the deployment succeeded.

The key insight? Most "target failed to become healthy" errors are multiple issues stacked together, requiring a layered debugging approach.

Preventive Configuration for Rails Applications

To avoid this error in future deployments, here's a comprehensive configuration template for Rails applications using Kamal:

- In

config/environments/production.rb:

# Allow health checks without SSL redirects

config.ssl_options = { redirect: { exclude: ->(request) { request.path == "/up" } } }

# Allow health checks without host verification

config.host_authorization = { exclude: ->(request) { request.path == "/up" } }

# Set timeout values appropriately

config.timeout_seconds = 30

- In

deploy.yml:

proxy:

ssl: true # Set to false if using external SSL termination

host: your-application.com

app_port: 3000 # Match your application's port

healthcheck:

path: /up

interval: 10

timeout: 30

- In your Dockerfile:

# Ensure port is exposed

EXPOSE 3000

# Use a clear startup command

CMD ["./bin/rails", "server", "-b", "0.0.0.0", "-p", "3000"]

- Add a specific health check endpoint for more reliability:

# In config/routes.rb

get '/up', to: proc { [200, {}, ['OK']] }

Logs as Archaeological Artifacts

When debugging deployment issues, think of logs as archaeological artifacts—each one tells part of a story, but you need to piece them together to understand the full narrative.

The most revealing logs for this error are often:

- The Kamal proxy logs showing the health check attempts

- Your application's startup logs

- Any database connection errors

- Environment variable misconfigurations

Collect these logs systematically, and the pattern of failure will emerge.

Deployment as a System, Not an Event

A key conceptual shift in resolving these errors is to view deployment not as a single event but as a system with multiple interconnected components:

- Container building

- Image distribution

- Environment configuration

- Application initialization

- Health verification

- Traffic transitioning

The "target failed to become healthy" error points to a breakdown in the health verification component, but the root cause could be in any of these areas.

By systematically testing each component and understanding their interconnections, you can identify where the system is breaking down and apply the appropriate fix.

The Path Forward

Kamal's maintainers are actively improving error reporting and health check mechanisms. Future versions will likely provide more detailed diagnostics. Until then, this systematic debugging approach will help you resolve the dreaded "target failed to become healthy" error.

Remember that deployment tools are abstractions that make common cases easy but edge cases challenging. By understanding what happens beneath these abstractions, you gain the power to diagnose and resolve the issues that inevitably arise.

The next time you encounter this error, approach it methodically, test each layer of the system, and you'll find that what initially seemed like an impenetrable wall becomes a solvable puzzle.

This guide is based on real-world deployment experiences with Kamal across various Rails applications. If you've encountered other causes or solutions for this error, consider contributing to the Kamal project documentation to help other developers.

Paul Keen is an Open-source Contributor and a Chief Technology Officer at JetThoughts. Follow him on LinkedIn or GitHub.

If you enjoyed this story, we recommend reading our latest tech stories and trending tech stories.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)

![Mobile Legends: Bang Bang [MLBB] Free Redeem Codes April 2025](https://www.talkandroid.com/wp-content/uploads/2024/07/Screenshot_20240704-093036_Mobile-Legends-Bang-Bang.jpg)