Selecting Embedded LLM AI: Points to Consider

Embedded, LLM-based AI agents are quickly evolving and gathering interest from every major industry, but the effectiveness of these agentic AI edge devices varies widely based on the hardware and software supporting them. Let’s break down how each factor directly impacts the efficiency, effectiveness, and quality of an LLM-based AI agent on the edge.

Hardware: The Foundation of Edge AI Performance

Match Hardware to the Use Case

When deploying large language models at the edge, hardware selection should match the complexity of the LLM and the agent’s intended function. Lightweight agents handling basic NLP tasks, such as command recognition or sentence classification, can operate on small NPUs with modest compute (1–5 TOPS) paired with ARM CPUs. However, true embedded LLM agents capable of multi-turn dialogue, document summarization, or contextual reasoning demand significantly more compute and memory.

Quantized LLMs like LLaMA 3, Mistral, or TinyLlama, trimmed for edge deployment, typically require 16–40GB of fast-access memory and 30–100 TOPS of compute throughput. Tasks involving vision-language fusion, long-context reasoning, or agentic task execution may push this further. In these cases, mid-to-high tier edge devices—like the Jetson Orin NX or AGX Orin—offer the ideal balance of compute, memory bandwidth, and energy efficiency for embedding full LLM capabilities on-device. When multiple agents or concurrent interactions are required, edge server-class platforms become essential to maintain responsiveness without offloading to the cloud.
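As a rough way to sanity-check memory requirements like these, the sketch below estimates weight and KV-cache memory from parameter count and precision. The function names and the 32-layer, 32-head, 128-dimension model shape are illustrative assumptions, not figures from any vendor; real deployments also need headroom for activations, the runtime, and the operating system.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                context_tokens: int, bytes_per_value: int = 2) -> float:
    """Approximate KV-cache size for one sequence (keys + values, all layers)."""
    return (2 * n_layers * n_kv_heads * head_dim
            * context_tokens * bytes_per_value) / 1e9


if __name__ == "__main__":
    # Hypothetical 7B-parameter model; the layer/head shape is an assumption.
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_memory_gb(7e9, bits):.1f} GB")
    print(f"KV cache at 8k tokens: {kv_cache_gb(32, 32, 128, 8192):.2f} GB")
```

Under these assumptions, the cache for a single long-context session can approach the size of the quantized weights themselves, which is one reason concurrent sessions raise memory needs so quickly.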

Power Efficiency and Performance-per-Watt

Embedded LLM agents often live in power-constrained environments—wearables, smart displays, autonomous machines—where energy draw directly impacts feasibility. It's not just about raw speed; it’s about how much LLM inference you get per watt.

Devices like the Jetson Orin NX, which deliver over 50 TOPS under 30W, demonstrate that high performance doesn’t require data center power budgets. For edge LLM deployment, performance-per-watt is one of the most decisive metrics. The ideal hardware platform should offer efficient transformer execution while staying cool, mobile, and battery-friendly. A marginally slower chip that consumes half the power may be the better long-term choice for an always-on AI agent in the field.
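One hedged way to make performance-per-watt concrete is to compare measured throughput against measured average power, which gives tokens per joule. The sketch below uses made-up measurements for two hypothetical chips (`chip_a`, `chip_b`); the numbers exist only to illustrate the calculation.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    name: str
    tokens_per_second: float   # measured decode throughput
    avg_power_watts: float     # measured average board power during the run

    @property
    def tokens_per_joule(self) -> float:
        # (tokens/s) / (joules/s) = tokens per joule
        return self.tokens_per_second / self.avg_power_watts


def rank_by_efficiency(measurements):
    """Return measurements sorted from most to least energy-efficient."""
    return sorted(measurements, key=lambda m: m.tokens_per_joule, reverse=True)


if __name__ == "__main__":
    # Illustrative values only: chip_b is 10% slower but draws half the power.
    runs = [
        Measurement("chip_a", tokens_per_second=20.0, avg_power_watts=30.0),
        Measurement("chip_b", tokens_per_second=18.0, avg_power_watts=15.0),
    ]
    for m in rank_by_efficiency(runs):
        print(f"{m.name}: {m.tokens_per_joule:.2f} tokens/J")
```

With these example numbers, the slightly slower chip produces nearly twice as many tokens per joule, which is the trade-off an always-on, battery-powered agent cares about.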

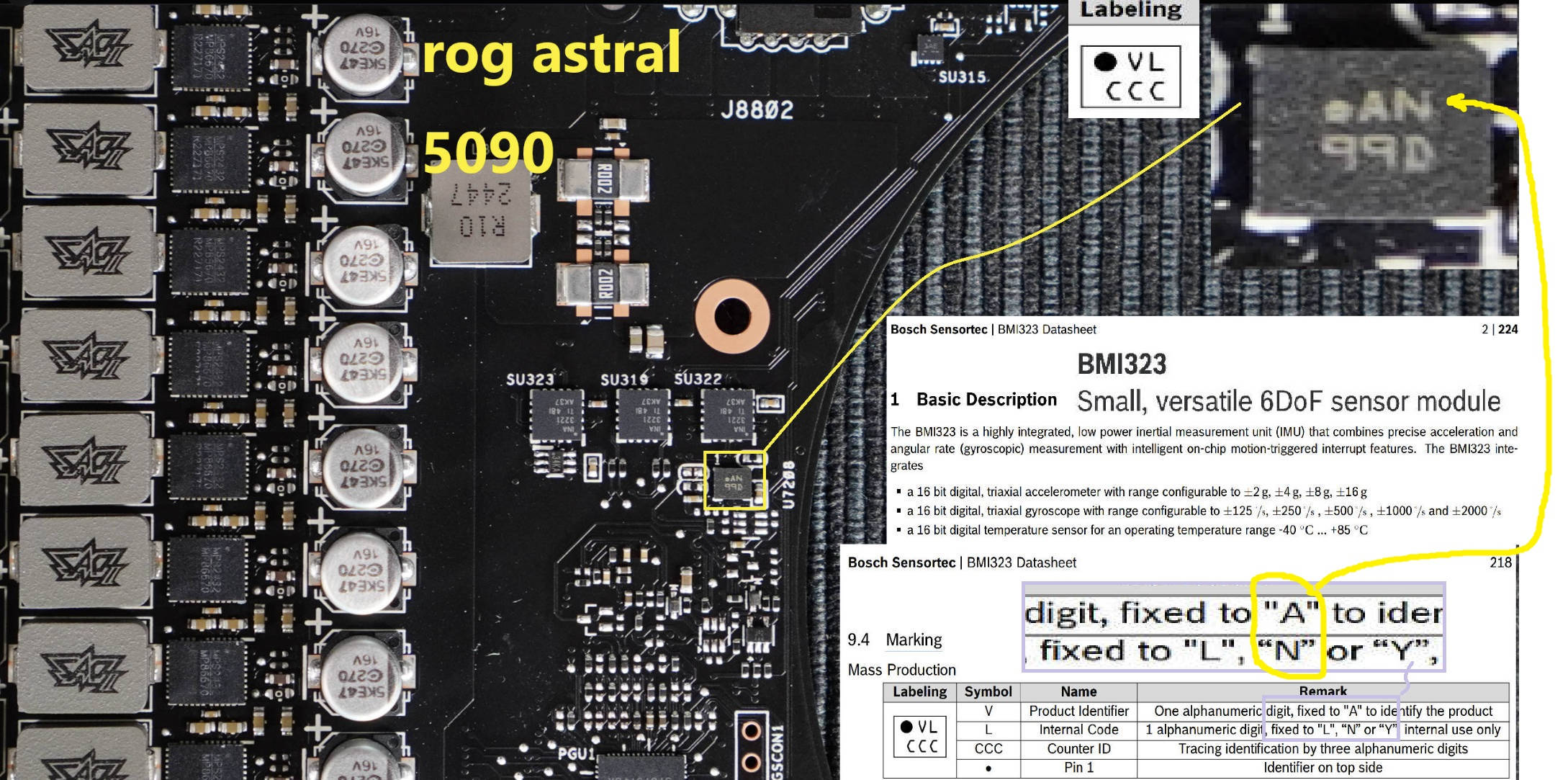

Memory Architecture and Interconnects

LLM inference is memory-intensive, especially with long context windows, attention (key-value) caching, or multimodal inputs. Devices with limited memory or narrow memory buses often fail to sustain inference throughput or must offload parts of the model, negating the benefits of on-device AI.

For embedded LLM agents, look for hardware with high-bandwidth memory (e.g., LPDDR5, GDDR6, or HBM), support for 16GB+ VRAM, and wide memory buses capable of feeding data at 100+ GB/s. This is critical for avoiding performance degradation during attention-heavy tasks or agentic workflows involving large prompts or tool usage.

Equally important are interconnects—like PCIe Gen4/Gen5 or NVLink—that ensure fast communication between NPUs, GPUs, CPUs, and memory. Without these, your model might have the compute but still stall due to internal data starvation.
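To see why bandwidth matters as much as compute, a common rule of thumb is that single-stream token generation has to read roughly all model weights once per token, so memory bandwidth divided by model size gives an approximate ceiling on tokens per second. The sketch below applies that simplification; it ignores KV-cache traffic, batching, and caching effects, and the 7 GB model size is an assumed example.

```python
def max_decode_tokens_per_second(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Rough upper bound on batch-size-1 decode speed when memory-bound.

    Assumes every generated token streams the full set of weights from memory once.
    """
    if model_size_gb <= 0:
        raise ValueError("model_size_gb must be positive")
    return bandwidth_gb_s / model_size_gb


if __name__ == "__main__":
    model_gb = 7.0  # e.g. a 7B-parameter model at 8 bits per weight (assumption)
    for bandwidth in (50.0, 100.0, 200.0):
        ceiling = max_decode_tokens_per_second(bandwidth, model_gb)
        print(f"{bandwidth:.0f} GB/s -> at most ~{ceiling:.1f} tokens/s")
```

Real throughput will land below this ceiling, but the estimate helps flag devices whose compute rating cannot be fed by their memory system.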

Compare Against Industry Benchmarks

Here’s a breakdown of leading edge AI devices for embedded LLM workloads:

- Jetson Nano – Not suitable for LLMs. Best used for simple computer vision or natural language processing models under 0.5B parameters.

- Jetson Orin NX – Ideal for quantized LLM inference (e.g., LLaMA 3 8B INT8), especially in single-agent or voice assistant scenarios. Delivers ~2–5 TOPS/W with up to 102.4 GB/s bandwidth.

- Jetson AGX Orin – Best for multitasking agents or visual-LM agents with multimodal inputs. Delivers ~4.6–18.3 TOPS/W with up to 204.8 GB/s bandwidth.

Whenever possible, benchmark using your intended LLM architecture and expected prompt types. Standard throughput tests like tokens/sec and latency per token provide a more realistic view of edge capability than theoretical TOPS alone.
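A minimal sketch of such a measurement is shown below. It times any callable that streams tokens for a prompt and reports time to first token, tokens per second, and mean latency per token. The `benchmark` helper and the `fake_stream` stand-in are hypothetical; in practice you would pass in the streaming call of whatever inference runtime you are evaluating.

```python
import time
from typing import Callable, Iterable, Iterator


def benchmark(stream_fn: Callable[[str], Iterable[str]], prompt: str) -> dict:
    """Time a token stream and return basic throughput/latency metrics."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in stream_fn(prompt):
        if first_token_at is None:
            first_token_at = time.perf_counter()
        count += 1
    end = time.perf_counter()
    if count == 0:
        raise ValueError("stream produced no tokens")
    total = end - start
    return {
        "tokens": count,
        "time_to_first_token_s": first_token_at - start,
        "tokens_per_second": count / total if total > 0 else float("inf"),
        "mean_latency_per_token_s": total / count,
    }


def fake_stream(prompt: str) -> Iterator[str]:
    """Stand-in for a real model: echoes prompt words with a small delay."""
    for word in prompt.split():
        time.sleep(0.001)
        yield word


if __name__ == "__main__":
    print(benchmark(fake_stream, "summarize the maintenance log for unit seven"))
```

Running the same harness with representative prompts, and repeating it after the device has warmed up, gives numbers that also reflect thermal throttling.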

Ask the Right Questions

When evaluating hardware for embedded LLMs, ask questions that reflect real-world constraints and use cases:

- What quantized LLMs can this device run in real time? (e.g., LLaMA 3 8B INT8, Mistral 7B FP16)

- What is the average token generation latency?

- How many concurrent sessions or users can the system handle?

- Does it support attention acceleration or transformer-friendly inference runtimes?

- What’s the sustained performance under thermal constraints?

- Is memory architecture sufficient for long-context inference (e.g., 8k+ tokens)?

- How does it benchmark against known LLM devices like Jetson Orin NX or AGX Orin?

By asking these, you'll align hardware selection with the true demands of your agent, not just the spec sheet.

Software: The Differentiator for Agent Intelligence

While hardware lays the foundation, software dictates the intelligence, adaptability, and real-world utility of embedded AI agents.

Language Model

The language model at the core of the agent is a primary differentiator. Cloud-based models like OpenAI’s GPT-4 offer strong inference performance, multilingual fluency, and broad general knowledge. Meanwhile, open-source alternatives like Meta’s LLaMA or Mistral allow for customization, transparency, and fine-tuning but require additional engineering effort.

Inference Optimization

Software frameworks like TensorRT, ONNX Runtime, and Apache TVM include tools for inference optimization—such as quantization, kernel fusion, and graph simplification—enabling faster and more efficient model execution on diverse hardware. Quantization reduces model size and computation by converting high-precision weights (e.g., FP32) into lower-precision formats like INT8 or FP16. Kernel fusion combines multiple operations into a single kernel to minimize memory access and execution overhead. Graph simplification removes redundant or unused operations in the model, streamlining the computation graph for faster inference.
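To illustrate the quantization step, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is a simplified teaching example under stated assumptions (one scale for the whole tensor, no calibration data) and is not how TensorRT, ONNX Runtime, or TVM implement it internally.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.731]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", q)
    print(f"scale: {scale:.6f}, worst-case error: {worst:.6f}")
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most about half the scale per value.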

Integration and Multimodal Capabilities

Integration capabilities determine how seamlessly AI agents fit into existing workflows. The best platforms provide robust APIs and SDKs that connect with enterprise systems such as CRM (Customer Relationship Management), ERP (Enterprise Resource Planning), and EMR (Electronic Medical Records). These integrations enable agents to retrieve, update, and act on business data in real time.

Multimodal capabilities—support for voice, images, structured data, and more—add versatility, making agents applicable across a wider range of industries and use cases. Omni-channel deployment ensures that these agents operate consistently across websites, mobile apps, internal dashboards, and messaging interfaces.

Explainability and Traceability

Finally, explainability and traceability are critical in regulated or high-stakes domains, where understanding how and why an AI system produces its outputs is essential. Features like source attribution, audit trails, and domain-specific reasoning contribute to this transparency by allowing users to verify answers, track decision paths, and ensure compliance.
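As one possible shape for an audit trail with source attribution, the sketch below stores each answer with the sources it drew on and chains entries together with SHA-256 hashes so later tampering is detectable. The `AuditTrail` class, its field names, and the sample document ID are hypothetical; a production system would also need secure storage, timestamps, and access control.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    """Stable SHA-256 hash of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    def __init__(self):
        self.entries = []

    def record(self, question: str, answer: str, sources) -> dict:
        """Append an entry that links back to the previous entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"question": question, "answer": answer,
                "sources": list(sources), "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Check that no entry was altered and the chain is unbroken."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("Is valve 3 due for service?", "Yes, within 14 days.",
                 ["maintenance_manual.pdf#p12"])
    print("chain valid:", trail.verify())
```

Because each entry's hash covers the previous one, a reviewer can confirm both which sources backed an answer and that the record has not been edited since.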

Conclusion

As embedded AI agents continue to evolve, selecting the right edge solution requires more than just chasing the latest specs. The ideal edge LLM system strikes a balance between performance-per-watt, model compatibility, memory architecture, and secure data handling—all while integrating seamlessly into operational workflows. With more clarity and purpose, developers and decision-makers can confidently deploy edge AI agents that are not only powerful, but also practical, adaptable, and compliant.

For any questions, visit sightify.ai.
