Scaling Your Cloudflare D1 Database: From the 10 GB Limit to TBs

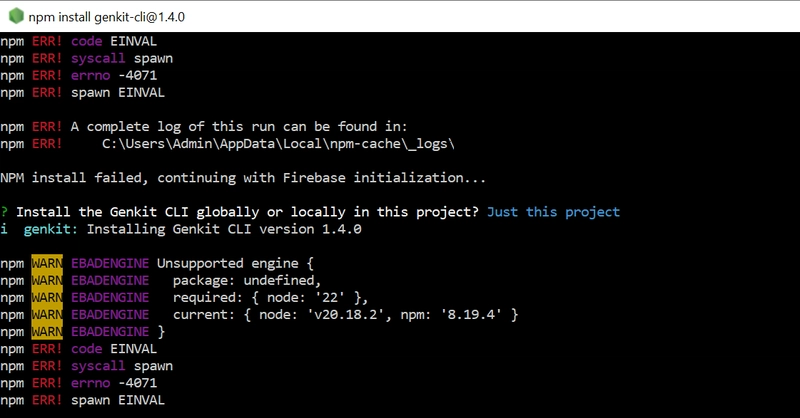

D1 is Cloudflare's managed serverless database built on SQLite, offering a lot of out-of-the-box features that make it highly suitable for startups and even enterprises. However, one limitation of D1 is the 10 GB per database restriction. Cloudflare recommends splitting your data across multiple databases, using a per-tenant or per-entity structure.

From my research, there's no built-in implementation for per-tenant databases, but you can build one with Cloudflare's APIs for both D1 and Workers: create databases through the D1 API, then bind them to Workers through the Workers REST API.
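Here is a minimal sketch of the first half of that process: creating one D1 database per tenant with a plain fetch call. It assumes Cloudflare's v4 REST endpoint POST /accounts/{account_id}/d1/database, with a JSON body containing name and a response whose result carries the new database's uuid. Check both against the current API reference. createTenantDatabase, FetchLike and the tenant-<id> naming scheme are hypothetical. Binding the new database to a Worker is a separate Workers API call that is not shown here.

```typescript
// Minimal shape of the fetch function used below; the global fetch in
// Workers (and Node 18+) satisfies it. Injected so the helper is testable.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

interface CreatedDatabase {
  uuid: string; // the database_id you would later bind to a Worker
  name: string;
}

// Hypothetical helper: create a D1 database for one tenant via the
// Cloudflare v4 REST API (endpoint and response shape are assumptions).
async function createTenantDatabase(
  fetchImpl: FetchLike,
  accountId: string,
  apiToken: string,
  tenantId: string,
): Promise<CreatedDatabase> {
  const url = `https://api.cloudflare.com/client/v4/accounts/${accountId}/d1/database`;
  const response = await fetchImpl(url, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ name: `tenant-${tenantId}` }), // hypothetical naming scheme
  });
  const payload = (await response.json()) as {
    success?: boolean;
    result?: CreatedDatabase;
    errors?: unknown[];
  };
  // Fail loudly on HTTP errors or an unsuccessful API envelope
  if (!response.ok || !payload.success || !payload.result) {
    throw new Error(`Creating D1 database failed: ${JSON.stringify(payload.errors ?? [])}`);
  }
  return payload.result;
}
```

Inside a Worker you would pass the global fetch as fetchImpl and store the returned uuid alongside the tenant so it can be used as that tenant's database_id.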

This design choice matters for my architecture, since I plan to stick with Cloudflare's infrastructure for the next few years. The implementation can be time-consuming and complex, but it scales well as the number of users grows.

For those seeking an easier solution that provides more total space while keeping each database under the 10 GB limit, a small, fixed set of databases lets you scale beyond 10 GB, to 50 GB for example. Once you reach around 20 GB, this simpler setup might start to break down, and at that point hiring skilled engineers to resolve it becomes a viable option. Cloudflare's support for up to 50,000 D1 databases per account offers massive scalability potential.

With this simpler approach, however, it's crucial to decide on the number of shards in advance. But what exactly are shards?

What Are Shards?

Sharding involves splitting large datasets into smaller, more manageable chunks, called shards, which are stored across multiple servers. This is especially useful when your database exceeds the 10 GB limit of D1.

For example, if your database grows to 1 TB (1,000 GB) over 10 years, you'll need at least 100 separate D1 databases (1,000 GB ÷ 10 GB), and more if you leave headroom in each one. Instead of storing all data in one database, which D1's limit doesn't allow and which would compromise performance anyway, you distribute it across multiple shards. Each shard is just a D1 database with a unique identifier.
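The estimate above is a ceiling division. Here is a minimal sketch. shardsNeeded and its fillRatio parameter are illustrative assumptions and are not part of D1. fillRatio is the fraction of the 10 GB cap you are willing to fill before adding a shard.

```typescript
// Back-of-the-envelope estimate of how many D1 databases (shards) a data set needs.
// fillRatio < 1 leaves headroom so no shard is filled all the way to the cap.
function shardsNeeded(totalGb: number, perShardLimitGb = 10, fillRatio = 1): number {
  if (totalGb < 0 || perShardLimitGb <= 0 || fillRatio <= 0 || fillRatio > 1) {
    throw new RangeError("invalid sizing inputs");
  }
  return Math.ceil(totalGb / (perShardLimitGb * fillRatio));
}

console.log(shardsNeeded(1000));          // 100
console.log(shardsNeeded(1000, 10, 0.8)); // 125
```

Filling each shard to only 80% of the cap pushes the 1 TB example from 100 to 125 databases. That is the "and more if you leave headroom" part in concrete numbers.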

But how do you determine which data goes into which shard? Here are two possible strategies:

1. Hash Sharding:

This method involves creating a hash of the customer ID and distributing customers across specific shards based on the hash.

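- Pros:
  - Ensures an even distribution of data across shards.
- Cons:
  - Each shard is still capped at 10 GB, so you will eventually need more shards. Because changing the shard count changes hash % shard_count for most customers, adding shards requires rebalancing existing data. This can be mitigated by storing the assigned shard number or key with the user during registration.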

2. Range Sharding:

This approach assigns specific ranges of users (e.g., every 100 or 1000 users) to their dedicated shard.

- Pros:
  - Easy to implement and scale as the user base grows.
- Cons:
  - Requires tracking user counts across shards.
  - Some shards may become overwhelmed while others remain underused.
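For comparison, range sharding reduces to integer division. This is a minimal sketch. It assumes each customer gets a sequential number (0, 1, 2, ...) from a counter you maintain, which is exactly the tracking the cons above mention. rangeShard is a hypothetical name.

```typescript
// Range sharding sketch: customer number n goes to shard floor(n / usersPerShard).
// The sequential customer number is an assumption: you must maintain the counter.
function rangeShard(userNumber: number, usersPerShard: number): number {
  if (!Number.isInteger(userNumber) || userNumber < 0) {
    throw new RangeError(`invalid user number: ${userNumber}`);
  }
  if (!Number.isInteger(usersPerShard) || usersPerShard <= 0) {
    throw new RangeError(`invalid users per shard: ${usersPerShard}`);
  }
  return Math.floor(userNumber / usersPerShard);
}

console.log(rangeShard(2500, 1000)); // 2
```

Scaling out only means creating the next shard when the counter crosses a boundary. Nothing balances data size, though, so a block of heavy users can fill one shard long before the others.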

For simplicity, and as a proof of concept, I will implement hash-based sharding. We'll start with 3 shards, providing 30 GB of total storage, which should suffice for hundreds or even thousands of users. By the time we reach 10,000 users, Cloudflare may well have lifted the 10 GB limit, or we can migrate to a different database solution.
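Starting with 3 shards means the rebalancing cost listed under the hash-sharding cons will matter once you grow. Both that cost and the registration-time mitigation are easy to demonstrate. The sketch is illustrative. countMoved and ShardDirectory are hypothetical names, the plain numbers stand in for the output of a real hash function, and the in-memory Map stands in for wherever you would persist the assignment (for example, a column on the user record).

```typescript
// Count how many hash values land on a different shard when the shard
// count changes from oldCount to newCount under the hash % count rule.
function countMoved(hashes: number[], oldCount: number, newCount: number): number {
  let moved = 0;
  for (const h of hashes) {
    if (h % oldCount !== h % newCount) moved++;
  }
  return moved;
}

// Hypothetical shard directory: the shard is computed once at registration
// and stored, so later lookups never recompute hash % count.
class ShardDirectory {
  private readonly assignments = new Map<string, number>();

  register(customerId: string, hashValue: number, shardCount: number): number {
    const existing = this.assignments.get(customerId);
    if (existing !== undefined) return existing; // already pinned to a shard
    const shard = hashValue % shardCount;
    this.assignments.set(customerId, shard);
    return shard;
  }

  lookup(customerId: string): number | undefined {
    return this.assignments.get(customerId);
  }
}

// Going from 3 to 4 shards moves 3 out of every 4 customers.
const sampleHashes = Array.from({ length: 12000 }, (_, i) => i);
console.log(countMoved(sampleHashes, 3, 4)); // 9000
```

With a directory, customers registered after you add a fourth shard are placed using the new count, while existing customers keep their pinned shard. That way nothing has to move.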

Note: With this setup, Cloudflare allows up to 5,000 shards with D1, but going that far requires custom scripts and contacting Cloudflare support about rate limiting and other considerations.

Step-by-Step Implementation

Step 1: Create the Databases

First, we’ll create 3 databases:

```sh
npx wrangler d1 create SHARD_1
npx wrangler d1 create SHARD_2
npx wrangler d1 create SHARD_3
```

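Step 2: Add the Database Bindings to the Wrangler Configuration

Next, bind the databases to the Worker. The snippet below uses Wrangler's JSON configuration format (wrangler.json or wrangler.jsonc). Replace each "uid" with the database_id that wrangler d1 create printed for that database:

```jsonc
"d1_databases": [
  {
    "binding": "SHARD_1",
    "database_name": "SHARD_1",
    "database_id": "uid"
  },
  {
    "binding": "SHARD_2",
    "database_name": "SHARD_2",
    "database_id": "uid"
  },
  {
    "binding": "SHARD_3",
    "database_name": "SHARD_3",
    "database_id": "uid"
  }
]
```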

Step 3: Set Up a Method to Get the Shard's Database

This lets us resolve the right shard's database from the hash function's output.

```typescript
import { drizzle } from 'drizzle-orm/d1';

// Bindings for multiple D1 databases; names must match the Wrangler config.
// D1Database comes from the Workers type definitions (@cloudflare/workers-types).
export interface Env {
  SHARD_1: D1Database;
  SHARD_2: D1Database;
  SHARD_3: D1Database;
}

// Return a Drizzle instance for the given 0-based shard number
export function getDB(env: Env, shardNumber: number) {
  switch (shardNumber) {
    case 0: return drizzle(env.SHARD_1);
    case 1: return drizzle(env.SHARD_2);
    case 2: return drizzle(env.SHARD_3);
    default: throw new Error(`Invalid shard: ${shardNumber}`);
  }
}
```
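With 10 or more shards, the switch grows long. This sketch instead looks the binding up by name. It assumes the bindings follow the SHARD_1 ... SHARD_N naming used in the Wrangler config. getShardBinding is a hypothetical helper, not part of Drizzle or Workers.

```typescript
// Hypothetical helper: resolve the binding for a 0-based shard number,
// assuming bindings are named SHARD_1 ... SHARD_<shardCount>.
function getShardBinding<T>(env: object, shardNumber: number, shardCount: number): T {
  if (!Number.isInteger(shardNumber) || shardNumber < 0 || shardNumber >= shardCount) {
    throw new RangeError(`Invalid shard: ${shardNumber}`);
  }
  const name = `SHARD_${shardNumber + 1}`;
  const binding = (env as Record<string, unknown>)[name];
  if (binding === undefined) {
    throw new Error(`Missing binding ${name}; check the Wrangler config`);
  }
  return binding as T;
}
```

In the Worker, drizzle(getShardBinding<D1Database>(env, shardNumber, 3)) would then replace the switch. Adding a shard only means adding a binding and bumping the count.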

Step 4: Implement a Hash Function to Determine the Shard

This is a very basic implementation, and you could use a more robust hash function. The only goal is that hash(customer_id) % num_of_our_shards always yields a number from 0 to num_of_our_shards - 1, and always the same number for the same customer.

```typescript
// Simple hashing function for sharding: SHA-256 the customer ID and
// combine the first 4 bytes of the digest into one integer
async function hash(customerId: string): Promise<number> {
  const encoder = new TextEncoder();
  const data = encoder.encode(customerId);
  const hashBuffer = await crypto.subtle.digest("SHA-256", data);
  const hashArray = Array.from(new Uint8Array(hashBuffer));
  // Bitwise operators yield a signed 32-bit integer, which may be negative
  const hashValue =
    (hashArray[0] << 24) |
    (hashArray[1] << 16) |
    (hashArray[2] << 8) |
    hashArray[3];
  // Math.abs keeps the result non-negative so the modulo stays in range
  return Math.abs(hashValue);
}
```
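If you'd rather avoid an async Web Crypto call on every request, a synchronous, non-cryptographic hash such as 32-bit FNV-1a is a common alternative for picking a shard. This is a swapped-in technique, not the one used above. Switching hash functions reassigns customers, so choose one before storing data. fnv1a32 and shardFor are hypothetical names.

```typescript
// 32-bit FNV-1a over the UTF-8 bytes of the input
// (offset basis 0x811c9dc5, FNV prime 0x01000193). Returns an unsigned integer.
function fnv1a32(input: string): number {
  const bytes = new TextEncoder().encode(input);
  let hash = 0x811c9dc5;
  for (let i = 0; i < bytes.length; i++) {
    hash ^= bytes[i];
    hash = Math.imul(hash, 0x01000193); // 32-bit multiply, wraps like C
  }
  return hash >>> 0; // reinterpret as unsigned 32-bit
}

// Map a customer ID to a shard index in [0, shardCount)
function shardFor(customerId: string, shardCount: number): number {
  if (!Number.isInteger(shardCount) || shardCount <= 0) {
    throw new RangeError(`invalid shard count: ${shardCount}`);
  }
  return fnv1a32(customerId) % shardCount;
}

console.log(shardFor("a", 3)); // 1
```

shardFor(customerId, 3) could stand in for the hash-then-modulo step in the Worker, and it needs no await.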

Step 5: Create the Worker to Communicate with Shards

This assumes getDB and hash from the previous steps live in the same module. Move the sql import to the top of that file.

```typescript
import { sql } from 'drizzle-orm';

export default {
  async fetch(request: Request, env: Env) {
    const url = new URL(request.url);
    const customerId = url.searchParams.get("customer_id");
    if (!customerId) {
      return new Response("Missing customer_id", { status: 400 });
    }
    // hash() is async, so await it before taking the modulo (3 = number of shards)
    const shardNumber = (await hash(customerId)) % 3;
    const db = getDB(env, shardNumber);
    // Run a parameterized raw query through Drizzle's sql template
    const results = await db.all(sql`SELECT * FROM users WHERE customer_id = ${customerId}`);
    return new Response(JSON.stringify(results), {
      headers: { "Content-Type": "application/json" },
    });
  },
};
```

Key Takeaways:

Cloudflare D1 Scalability: While Cloudflare D1 offers scalability, it is inherently limited by SQLite's capabilities.

Per-Tenant Database: A per-tenant database approach is more scalable for enterprises and can be automated, though it adds complexity. This guide only outlines that approach; the implementation above uses hash-based sharding.

Hash-Based Sharding for Startups: Sharding by hash across 10 shards is an effective solution for startups, offering up to 100 GB of storage. That leaves plenty of room to grow, so you are unlikely to hit a limit any time soon, though you should still monitor usage and adjust. Or, I'm sure that once you pass 5 GB, you can hire brilliant engineers who will design a solution for you over a lunch break.

Always consider the pros and cons of each approach.
