Replicate an Author’s Writing Style Using Prompt Engineering


Insights from a structured experiment in replicating an author's writing style using large language models, evaluating the effectiveness of prompt-driven approaches.

Goal

Replicate or capture an author's writing style using both manual prompt engineering and Claude’s Custom Styles feature.

Context

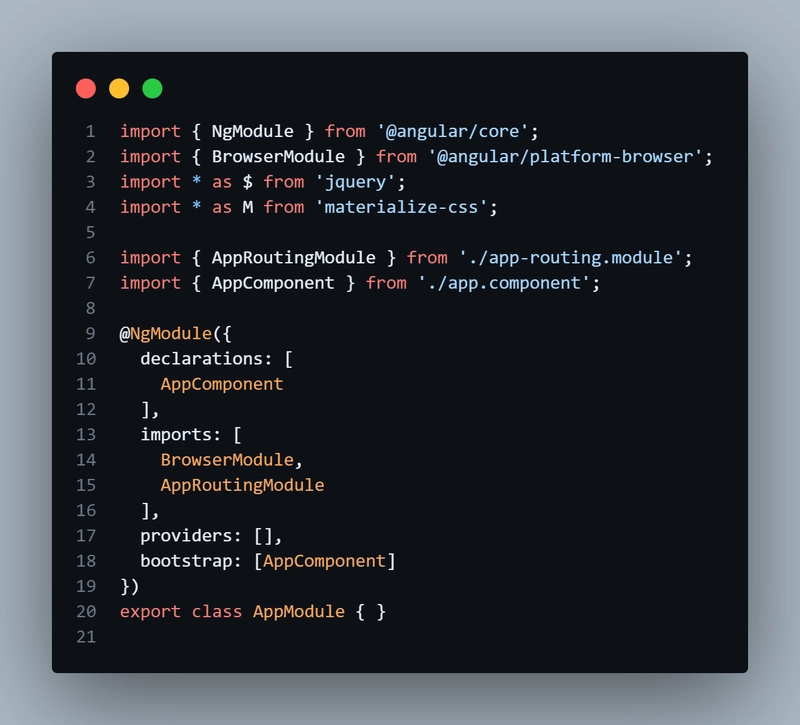

Claude lets users upload a writing sample and apply it as a custom style to future outputs via the Styles feature. This experiment evaluates how well the Custom Styles feature performs compared to manual prompting, and whether prompting techniques can offer a practical alternative to fine-tuning for precisely replicating writing styles.

Models Compared

- Claude 3.7 Sonnet

- GPT-4o

Hypothesis

Claude’s style feature likely uses a combination of:

- In-context learning from uploaded writing samples

- System-level prompt conditioning to maintain tone, pacing, and structure

This experiment explores whether similar results can be achieved through:

- Carefully structured zero-shot and few-shot prompts

Key Concepts

In-Context Learning: The model learns from examples provided in the prompt itself, without retraining.

System Prompt Conditioning: Claude likely distills your uploaded style into a reusable system-level instruction that’s injected into future generations.

Delivery vs. Content: Writing style is about rhythm, structure, word placement, and emotional flow, not just vocabulary. Flattening a writer’s style into plain structure removes their unique voice.
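
The first two concepts can be pictured as two different slots in a chat request. The sketch below is only an illustration: the `build_request` helper and the dictionary layout are hypothetical, not any provider's actual API, and how Claude's Styles feature works internally is not confirmed by this experiment. The style instruction goes in a system-level field (system prompt conditioning), while the writing samples travel inside the user message (in-context learning).

```python
def build_request(style_instruction: str, samples: list[str], task: str) -> dict:
    """Assemble a provider-agnostic chat request (hypothetical shape)."""
    # In-context learning: the samples are placed in the prompt itself,
    # with their original line breaks left untouched.
    sample_block = "\n\n---\n\n".join(samples)
    user_content = (
        "Writing samples from the author (line breaks preserved):\n\n"
        f"{sample_block}\n\n"
        f"Task: {task}"
    )
    return {
        # System prompt conditioning: a reusable instruction applied to every turn.
        "system": style_instruction,
        "messages": [{"role": "user", "content": user_content}],
    }


# Placeholder text, not a real author quote.
request = build_request(
    style_instruction="Match the rhythm, sentence breaks, and pacing of the samples.",
    samples=["First line.\n\nSecond line."],
    task="Write a short passage about starting a creative project.",
)
print(request["system"])
print(request["messages"][0]["content"])
```

Keeping the two slots separate makes it easy to test each one on its own, for example by sending the samples without the system instruction, or the reverse.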

What Was Tested

Including every prompt and model response inline would make this write-up too long, so the full prompts and responses are provided separately for readers who want to compare each prompt with its output. The experiments were conducted using writing samples from Steven Pressfield.

1. Flat vs. Structured Writing Samples

Flattened samples (i.e., original writing sample collapsed into a long, neutral paragraph) failed to preserve the author’s voice. Both Claude and GPT-4o produced technically sound writing, but the emotional cadence and authorial feel were missing.

Using Pressfield’s original write-ups unaltered led to significantly improved style replication. Claude leaned into reflective, rhetorical depth. GPT-4o also captured the voice more effectively.

Insight: Structure matters. Preserving the author's original writing structure is important, because rhythm is part of a writer’s voice.
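
To make "flattened" concrete, here is a minimal sketch of what flattening does to a sample, assuming it means collapsing every line and paragraph break into a single space. The `flatten` and `paragraph_count` helpers are illustrative names, and the sample text is a placeholder rather than an actual Pressfield passage.

```python
import re


def flatten(sample: str) -> str:
    """Collapse all whitespace runs, including paragraph breaks, into single spaces."""
    return re.sub(r"\s+", " ", sample).strip()


def paragraph_count(text: str) -> int:
    """Count non-empty blocks separated by at least one blank line."""
    return len([p for p in re.split(r"\n\s*\n", text) if p.strip()])


# Placeholder sample with short, separated lines.
structured = "Start.\n\nStart now.\n\nThe work will not wait."
flat = flatten(structured)

print(paragraph_count(structured))  # 3
print(paragraph_count(flat))        # 1
print(flat)                         # Start. Start now. The work will not wait.
```

The words are identical in both versions; only the breaks differ, and those breaks are the structural cue the model loses when the sample is flattened.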

2. Style Transfer Without Samples (Zero-Shot Prompting)

When prompted to write "in the style of Steven Pressfield" without any sample, Claude produced responses that captured his voice more closely than GPT-4o did. GPT-4o's output was smooth and well-structured but lacked the same stylistic precision.

Insight: Claude handles authorial rhythm better in zero-shot settings, while GPT-4o needs structural cues.

3. Style Transfer With Sample + Rewrite Instruction

Providing a real Pressfield sample along with a prompt to rewrite a neutral paragraph significantly improved both models’ responses, bringing them closely in line with the original style.

Insight: A real sample combined with a specific rewrite prompt produced better results than name references alone.
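
The difference between a name reference and a sample-plus-rewrite prompt can be sketched as two small prompt builders. The wording and the `<sample>` / `<paragraph>` delimiters below are assumptions chosen for illustration; they are not the exact prompts used in the experiment.

```python
def name_only_prompt(author: str, topic: str) -> str:
    """Zero-shot: rely on what the model already knows about the author."""
    return f"Write a short passage about {topic} in the style of {author}."


def sample_rewrite_prompt(sample: str, neutral_text: str) -> str:
    """Sample + rewrite: give the model a real sample and a concrete paragraph to restyle."""
    return (
        "Below is a writing sample. Study its sentence length, line breaks, "
        "and pacing.\n\n"
        f"<sample>\n{sample}\n</sample>\n\n"
        "Rewrite the following paragraph in the same style, keeping its meaning:\n\n"
        f"<paragraph>\n{neutral_text}\n</paragraph>"
    )


print(name_only_prompt("Steven Pressfield", "creative resistance"))
print(sample_rewrite_prompt(
    sample="(paste the author's unmodified text here)",
    neutral_text="Many people delay starting creative work because they fear failure.",
))
```

The second builder passes the sample through unchanged, so any line breaks in the source text reach the model intact.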

4. Claude Custom Style vs. Prompt Engineering

Uploading a custom style to Claude produced reflective and philosophical prose inspired by Pressfield. However, it lacked the raw, fragmented structure of his true writing voice.

It felt more like a well-crafted modern adaptation than a faithful replication.

Insight: Claude's Custom Style abstracts tone and theme rather than performing sentence-level mimicry. It is inspiration-driven, not author-driven.

Results

- For brand tone or general voice alignment, Claude’s Custom Style works well.

- Manually editing the system prompt within Claude's Custom Style can help guide the model toward closer replication. However, across the various tweaks applied in this experiment, the outputs remained more inspiration-driven than truly author-specific in tone and structure.

- Using an author's unmodified writing sample is crucial. Sentence breaks, rhythm, and pacing are integral parts of an author's voice and should remain untouched for effective replication.

- Prompt-based approaches are increasingly effective as model capabilities improve and should be strongly considered as the first approach for prototyping or MVPs. However, for consistent and accurate replication of an author's writing style, fine-tuning remains the more reliable, though often more resource-intensive, option.

Links

- Full prompt and model responses

- When to Fine-Tune?
