Quick SQLite to PostgreSQL Migration with pgloader

pgloader is an open-source data loading tool that efficiently streams data into PostgreSQL using the COPY protocol. It handles schema discovery, data transformations, and error management automatically. For SQLite migrations, it can handle both schema and data in a single command, making it perfect for automated database migrations. 1. Restore SQLite from Litestream # Set your AWS credentials and restore the backup export LITESTREAM_ACCESS_KEY_ID="your_access_key" \ LITESTREAM_SECRET_ACCESS_KEY="your_secret_key" && \ litestream restore -o storage/production.sqlite3 \ s3://your-bucket/storage/production.sqlite3 2. Migrate to PostgreSQL For cloud PostgreSQL (e.g., Supabase): docker run --rm -it \ -v /path/to/your/sqlite:/data/production.sqlite3 \ dimitri/pgloader:latest \ pgloader --with "DATA ONLY" --verbose \ sqlite:///data/production.sqlite3 \ postgresql://user:password@your-postgres-host:6543/postgres For local PostgreSQL: docker run --rm -it \ -v /path/to/your/sqlite:/data/production.sqlite3 \ dimitri/pgloader:latest \ pgloader --with "DATA ONLY" --verbose \ sqlite:///data/production.sqlite3 \ postgresql://postgres:postgres@host.docker.internal:5432/your_db Key points: Use --with "DATA ONLY" if tables are already created in PostgreSQL Use host.docker.internal to connect to local PostgreSQL from Docker Ensure your SQLite file path in the volume mount matches the path in the pgloader command Important Notes Always backup your data before migration Test the migration process in a staging environment first Plan for downtime during the migration Update your application's database connection strings after migration

pgloader is an open-source data loading tool that efficiently streams data into PostgreSQL using the COPY protocol. It handles schema discovery, data transformations, and error management automatically. For SQLite migrations, it can handle both schema and data in a single command, making it perfect for automated database migrations.

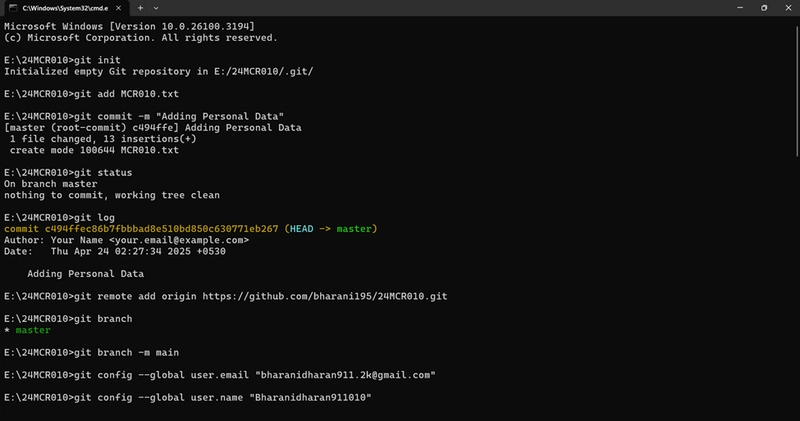

1. Restore SQLite from Litestream

# Set your AWS credentials and restore the backup

export LITESTREAM_ACCESS_KEY_ID="your_access_key" \

LITESTREAM_SECRET_ACCESS_KEY="your_secret_key" && \

litestream restore -o storage/production.sqlite3 \

s3://your-bucket/storage/production.sqlite3

2. Migrate to PostgreSQL

For cloud PostgreSQL (e.g., Supabase):

docker run --rm -it \

-v /path/to/your/sqlite:/data/production.sqlite3 \

dimitri/pgloader:latest \

pgloader --with "DATA ONLY" --verbose \

sqlite:///data/production.sqlite3 \

postgresql://user:password@your-postgres-host:6543/postgres

For local PostgreSQL:

docker run --rm -it \

-v /path/to/your/sqlite:/data/production.sqlite3 \

dimitri/pgloader:latest \

pgloader --with "DATA ONLY" --verbose \

sqlite:///data/production.sqlite3 \

postgresql://postgres:postgres@host.docker.internal:5432/your_db

Key points:

- Use

--with "DATA ONLY"if tables are already created in PostgreSQL - Use

host.docker.internalto connect to local PostgreSQL from Docker - Ensure your SQLite file path in the volume mount matches the path in the pgloader command

Important Notes

- Always backup your data before migration

- Test the migration process in a staging environment first

- Plan for downtime during the migration

- Update your application's database connection strings after migration

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

![Google reveals NotebookLM app for Android & iPhone, coming at I/O 2025 [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/05/NotebookLM-Android-iPhone-6-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Reports Q2 FY25 Earnings: $95.4 Billion in Revenue, $24.8 Billion in Net Income [Chart]](https://www.iclarified.com/images/news/97188/97188/97188-640.jpg)