Optimizing API Performance Part 3: Asynchronous Processing & Queues

This is the third instalment in the API performance and optimisation series, aimed at medium-scale to enterprise-level applications. We explored caching in part 1 of the series and load balancing in part 2. In this article, we will dive into asynchronous processing and how it can help make your API faster, more scalable and more resilient.

We will explore:

- Why Asynchronous Processing Matters

- Benefits of Asynchronous Processing and Queues for Your API

- How Asynchronous Processing Works with Queues

- Popular Message Queues for Asynchronous Processing

- Practical Use Cases of Asynchronous Processing

- Best Practices for Asynchronous Processing

- Resources for Further Reading

1. The Problem Statement (Why Asynchronous Processing Matters)

Most applications start with synchronous REST APIs, where Service A makes a request and waits for Service B to respond. However, as an application grows, this becomes a problem.

Take an e-commerce application as a case study: a single click to purchase an item can trigger calls to process the payment, send emails, update stock counts and inventory, update suggested-product data, and update dashboard reports in real time.

Without any form of asynchronous processing, the API tries to process everything synchronously before returning a response.

This often leads to:

- Degraded application performance.

- Increased server load – More CPU and memory usage.

- Slow API response times – Users wait longer for a response.

- Potential failures – A slow or failing step (e.g., an email service timing out) can fail the entire request.

- Bottlenecks – A delayed response from your email provider puts the rest of the processes on hold.
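To make the problem concrete, here is a minimal sketch of the synchronous checkout in plain Node.js. The step functions and their latencies are hypothetical stand-ins for real downstream calls, not measurements. Each call is awaited before the next one starts, so the response time is roughly the sum of all steps, and a failure in the email step fails the whole request.

```javascript
// Minimal sketch of a synchronous checkout handler (plain Node.js, no libraries).
// All step functions and latencies below are hypothetical stand-ins.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

const processPayment = () => sleep(30);
const updateInventory = () => sleep(20);
const updateRecommendations = () => sleep(20);
const updateDashboard = () => sleep(20);

async function sendEmail({ fail }) {
  await sleep(80); // a slow email provider
  if (fail) throw new Error("email provider timed out");
}

// Every step is awaited in sequence, so the user waits for all of them.
async function placeOrderSync({ emailFails = false } = {}) {
  const start = Date.now();
  await processPayment();
  await sendEmail({ fail: emailFails }); // if this throws, the whole request fails
  await updateInventory(); // ...and the remaining steps never run
  await updateRecommendations();
  await updateDashboard();
  return { status: 200, elapsedMs: Date.now() - start };
}

const happyPath = placeOrderSync();
const emailOutage = placeOrderSync({ emailFails: true }).catch((err) => ({
  status: 500,
  error: err.message,
}));

happyPath.then((r) => console.log(`responded after ~${r.elapsedMs} ms`));
emailOutage.then((r) => console.log(r));
```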

The Solution: Offloading Heavy and Non-Urgent Tasks to a Queue

Instead of making users wait, APIs can process tasks asynchronously:

- The API receives a request (e.g., "Place Order").

- The API queues background tasks (e.g., email notifications, inventory update, analytics).

- The API immediately responds to the user.

- Background tasks are processed separately, without slowing the API.

This keeps API response times low and makes the system more scalable and resilient under heavy load.
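The flow above can be sketched in plain Node.js, assuming an in-memory array as the queue (a real system would use a broker such as Redis, RabbitMQ or SQS so that tasks survive restarts). The handler and task names are hypothetical. The handler only enqueues work and returns; a separate worker loop drains the queue afterwards.

```javascript
// In-memory stand-in for a message broker; tasks are lost if the process exits.
const taskQueue = [];
const processedTasks = [];

// Hypothetical "Place Order" handler: enqueue non-urgent work, respond at once.
function placeOrderAsync(order) {
  taskQueue.push({ type: "sendEmail", orderId: order.id });
  taskQueue.push({ type: "updateInventory", orderId: order.id });
  taskQueue.push({ type: "recordAnalytics", orderId: order.id });
  return { status: 202, orderId: order.id, queuedTasks: 3 }; // 202 Accepted
}

// Hypothetical background worker: takes one task at a time off the queue.
async function runWorker() {
  while (taskQueue.length > 0) {
    const task = taskQueue.shift();
    await new Promise((resolve) => setTimeout(resolve, 10)); // simulated slow I/O
    processedTasks.push(`${task.type}:${task.orderId}`);
  }
}

const response = placeOrderAsync({ id: 42 });
console.log(response); // logged before any background task has finished
const workerDone = runWorker().then(() => console.log(processedTasks));
```

The response object is available as soon as the tasks are queued; the slow work happens afterwards, outside the request path.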

2. Benefits of Asynchronous Processing and Queues for Your Applications

- Improved Performance & Responsiveness

Offloading time-consuming tasks (e.g., sending emails, processing payments) to background workers lets your API respond right away instead of making users wait.

- Better Scalability

Queues help distribute workloads across multiple workers, preventing bottlenecks and allowing your system to handle more requests without overwhelming resources.

- Fault Tolerance & Reliability

If a task fails because of a temporary issue (e.g., a network failure), it can be retried automatically from the queue, reducing the risk of data loss or failed operations.

- Efficient Resource Utilization

Instead of blocking API threads with long-running operations, background workers process tasks at a controlled pace, optimising CPU and memory usage.

- Decoupled Architecture

Queues enable micro-services and different system components to communicate asynchronously, improving modularity and reducing dependencies between services.

- Improved User Experience

Users don’t have to wait for slow operations (like file processing or third-party API calls) to complete before getting a response, making applications feel faster and smoother.
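The scalability point can be sketched with several workers pulling from one shared queue, a pattern often called competing consumers. This plain Node.js sketch rests on stated assumptions: the queue is an in-memory array, each job takes a simulated 20 ms, and four workers is an arbitrary choice. Because every job is taken by exactly one worker, adding workers shortens the time needed to drain the queue.

```javascript
// Several workers share one queue; each job is handled by exactly one worker.
// The in-memory array, job count, 20 ms job time and 4 workers are assumptions.
const jobs = Array.from({ length: 12 }, (_, i) => ({ id: i + 1 }));
const handledBy = new Map(); // job id -> worker name

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function worker(name) {
  let job;
  // shift() runs synchronously, so two workers never take the same job.
  while ((job = jobs.shift()) !== undefined) {
    await sleep(20); // simulated slow task
    handledBy.set(job.id, name);
  }
}

async function drainQueue(workerCount) {
  const start = Date.now();
  const workers = Array.from({ length: workerCount }, (_, i) => worker(`worker-${i + 1}`));
  await Promise.all(workers);
  return Date.now() - start;
}

const drained = drainQueue(4).then((elapsedMs) => {
  console.log(`${handledBy.size} jobs handled in ~${elapsedMs} ms`);
  return elapsedMs;
});
```

With one worker, the same 12 jobs would take about 12 × 20 ms; with four, they finish in roughly a quarter of that.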

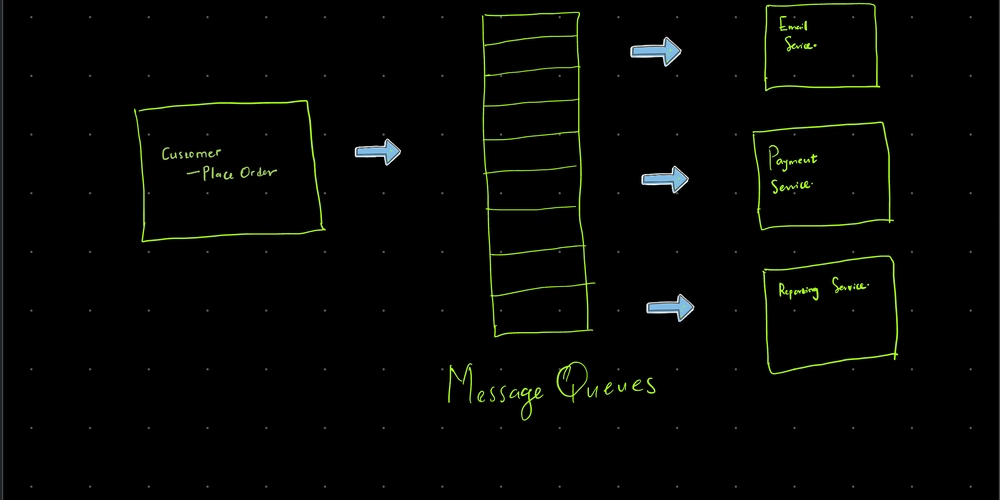

3. How Message Queues Work

We have seen that asynchronous processing makes applications more resilient by handling urgent tasks immediately and queuing the rest for later. It does this by storing tasks and their data in a message queue system, such as RabbitMQ or Apache Kafka, where they can be processed or read later.

Message queue systems are robust, but they serve different use cases. Apache Kafka, for example, persists messages in a log and retains them after they are read, for a configured retention period, whether or not any consumer ever reads them. RabbitMQ, on the other hand, is more involved in the life span of each message: it routes the message to the right consumer in the right order and removes it once the consumer acknowledges it.
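The difference between these two delivery models can be sketched conceptually. The two classes below are hypothetical, simplified models written for illustration, not the Kafka or RabbitMQ client APIs. The log retains every message and lets each consumer group track its own offset; the work queue hands each message to one consumer and deletes it only after an acknowledgement.

```javascript
// Conceptual models only; these are not the Kafka or RabbitMQ client APIs.

// Log style (Kafka-like): messages are appended and retained. Each consumer
// group tracks its own offset, so reading never deletes anything.
class EventLog {
  constructor() {
    this.messages = [];
    this.offsets = new Map(); // consumer group -> next offset to read
  }
  append(message) {
    this.messages.push(message);
  }
  poll(group) {
    const offset = this.offsets.get(group) ?? 0;
    const batch = this.messages.slice(offset);
    this.offsets.set(group, this.messages.length); // "commit" the new offset
    return batch;
  }
}

// Queue style (RabbitMQ-like): each message goes to one consumer and is removed
// only when acknowledged; a rejected message is requeued for redelivery.
class WorkQueue {
  constructor() {
    this.ready = [];
    this.unacked = new Map(); // delivery tag -> message
    this.nextTag = 1;
  }
  publish(message) {
    this.ready.push(message);
  }
  get() {
    if (this.ready.length === 0) return null;
    const tag = this.nextTag++;
    const message = this.ready.shift();
    this.unacked.set(tag, message);
    return { tag, message };
  }
  ack(tag) {
    this.unacked.delete(tag);
  }
  nack(tag) {
    const message = this.unacked.get(tag);
    this.unacked.delete(tag);
    if (message !== undefined) this.ready.unshift(message); // back to the front
  }
}

const log = new EventLog();
log.append("order-1");
log.append("order-2");
console.log(log.poll("email-service")); // [ 'order-1', 'order-2' ]
console.log(log.poll("inventory-service")); // [ 'order-1', 'order-2' ] again

const queue = new WorkQueue();
queue.publish("order-1");
const delivery = queue.get();
queue.nack(delivery.tag); // processing failed, so the message is requeued
console.log(queue.get().message); // order-1 (redelivered)
```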

Irrespective of the message queue you are working with, there are three common building blocks:

- Producer – the application that sends messages.

- Queue (e.g., RabbitMQ, AWS SQS) – a buffer that stores messages.

- Consumer – the application that receives or reads messages.

In our order example, the order service produces the messages to send an email, update inventory, and so on. Each responsible service then receives (or reads) its message from the broker and performs the required action.
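Here is a minimal in-memory sketch of the producer, broker and consumer roles for the order example. The `Broker` class is a hypothetical stand-in for a fan-out exchange or topic, and in production each consumer would run in its own service. The point is that the order service publishes once and each subscribed service processes its own copy.

```javascript
// Hypothetical in-memory broker: every subscribed service gets its own copy
// of each published message (fan-out), stored in a per-service queue.
class Broker {
  constructor() {
    this.queues = new Map(); // service name -> pending messages
  }
  subscribe(service) {
    if (!this.queues.has(service)) this.queues.set(service, []);
  }
  publish(message) {
    for (const queue of this.queues.values()) queue.push({ ...message });
  }
  consume(service, handler) {
    const queue = this.queues.get(service) ?? [];
    while (queue.length > 0) handler(queue.shift());
  }
}

const broker = new Broker();
broker.subscribe("email-service");
broker.subscribe("inventory-service");

// Producer: the order service publishes one event and moves on.
broker.publish({ type: "order.placed", orderId: 101, email: "jane@example.com", sku: "SKU-1", qty: 2 });

// Consumers: each service reads its own copy and does its own job.
const sentEmails = [];
const stock = { "SKU-1": 10 };
broker.consume("email-service", (msg) => sentEmails.push(`Order ${msg.orderId} confirmed for ${msg.email}`));
broker.consume("inventory-service", (msg) => {
  stock[msg.sku] -= msg.qty;
});

console.log(sentEmails, stock);
```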

Note: Message queue systems can handle a wide range of complexity, from simple pub/sub messaging to complex event streaming and remote procedure calls (RPC). Your use case determines which one to use and how.

4. Popular Message Queues

- Redis (for lightweight, fast task queues).

- RabbitMQ (for enterprise-grade messaging).

- Apache Kafka (for large-scale event streaming) – think of something big, like a sportsbook service.

- AWS SQS (a fully managed message queue).

- Others – Apache ActiveMQ, KubeMQ, IBM MQ, and task-queue frameworks such as Celery (which runs on top of a broker such as RabbitMQ or Redis).

5. Practical Use Cases of Asynchronous Processing

1. Sending Emails Without Blocking the API

Instead of waiting for an email service to respond, queue the task and return a response immediately.

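Example (using Node.js and BullMQ, a Redis-backed queue library)

```javascript
const express = require("express");
const { Queue, Worker } = require("bullmq");

// Redis connection used by BullMQ (assumed: a local Redis on the default port)
const connection = { host: "127.0.0.1", port: 6379 };

const app = express();
app.use(express.json()); // parse JSON bodies so req.body.email is available

const emailQueue = new Queue("emailQueue", { connection });

app.post("/send-email", async (req, res) => {
  // Enqueue the job (retried up to 3 times with exponential backoff) and respond immediately
  await emailQueue.add(
    "sendEmail",
    { userEmail: req.body.email },
    { attempts: 3, backoff: { type: "exponential", delay: 1000 } }
  );
  res.status(202).json({ message: "Email request received!" });
});

// Worker: processes emails in the background (often run as a separate process).
// sendEmail() is your own email-sending function (not shown).
const worker = new Worker(
  "emailQueue",
  async (job) => {
    const { userEmail } = job.data;
    await sendEmail(userEmail); // throwing here marks the job as failed so BullMQ can retry it
  },
  { connection }
);

// Log failures instead of swallowing them inside the processor
worker.on("failed", (job, err) => {
  console.error(`Failed to send email to ${job?.data.userEmail}: ${err.message}`);
});

app.listen(3000);
```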

2. Processing Payments Without Slowing Checkout

E-commerce platforms can queue payment tasks so that checkout responses return immediately.

Steps:

- The API places the order and queues payment processing.

- The response is immediate (user doesn’t wait).

- A background worker handles the payment asynchronously.
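These steps can be sketched as follows, assuming an in-memory queue and a hypothetical `chargeCard()` function in place of a real payment provider. The checkout handler records the order as pending and returns immediately; the worker settles the payment later and updates the status, which the client can check afterwards.

```javascript
// In-memory stand-ins: a Map for the orders table and an array for the queue.
const orders = new Map(); // orderId -> { status, amount }
const paymentQueue = [];
let nextOrderId = 1;

// Hypothetical checkout handler: save the order, queue the payment, respond.
function checkout(cart) {
  const orderId = nextOrderId++;
  orders.set(orderId, { status: "pending_payment", amount: cart.total });
  paymentQueue.push({ orderId, amount: cart.total });
  return { orderId, status: "pending_payment" }; // returned immediately
}

// Hypothetical payment-provider call with simulated latency.
// Assumed rule for this sketch: any positive amount is approved.
async function chargeCard(amount) {
  await new Promise((resolve) => setTimeout(resolve, 20));
  return amount > 0;
}

// Background worker: settles queued payments and records the outcome.
async function paymentWorker() {
  while (paymentQueue.length > 0) {
    const { orderId, amount } = paymentQueue.shift();
    const approved = await chargeCard(amount);
    orders.get(orderId).status = approved ? "paid" : "payment_failed";
  }
}

// The client can poll this (or be notified) to learn the final result.
function getOrderStatus(orderId) {
  return orders.get(orderId)?.status;
}

const first = checkout({ total: 49.99 });
const second = checkout({ total: 0 });
const settled = paymentWorker();
settled.then(() => console.log(getOrderStatus(first.orderId), getOrderStatus(second.orderId)));
```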

Example: Shopify processes millions of orders daily, and on Black Friday traffic surges 10x. By queuing payments, they reduced checkout times by 40%, preventing crashes and improving conversions.

3. Generating Reports in the Background

Instead of making users wait, APIs can:

- Accept the request & enqueue report generation.

- Send a notification/email when the report is ready.

Example: Google Analytics queues data processing so reports are generated without blocking real-time insights.
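A sketch of this accept-then-notify flow, assuming an in-memory job list and Node's built-in `EventEmitter` as the notification hook. `buildReport()` and the notification text are hypothetical placeholders; in a real system the listener might send the email.

```javascript
const { EventEmitter } = require("events");

// In-memory job list and results store; a real system would use a broker and a DB.
const reportEvents = new EventEmitter();
const reportJobs = [];
const reports = new Map(); // jobId -> { status, result? }
let nextJobId = 1;

// Hypothetical endpoint logic: accept the request and enqueue the work.
function requestReport(userEmail, range) {
  const jobId = nextJobId++;
  reports.set(jobId, { status: "queued" });
  reportJobs.push({ jobId, userEmail, range });
  return { jobId, status: "queued" }; // returned immediately
}

// Hypothetical placeholder for the slow aggregation query.
async function buildReport(range) {
  await new Promise((resolve) => setTimeout(resolve, 20));
  return { range, rows: [] };
}

// Background worker: builds each report, stores it, then emits a notification event.
async function reportWorker() {
  while (reportJobs.length > 0) {
    const { jobId, userEmail, range } = reportJobs.shift();
    reports.set(jobId, { status: "running" });
    const result = await buildReport(range);
    reports.set(jobId, { status: "done", result });
    reportEvents.emit("report-ready", { jobId, userEmail });
  }
}

// Notification hook: this is where an email or push message would be sent.
const notifications = [];
reportEvents.on("report-ready", ({ jobId, userEmail }) => {
  notifications.push(`Report ${jobId} is ready for ${userEmail}`);
});

const ticket = requestReport("ana@example.com", "2024-Q1");
const reportRun = reportWorker();
```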

4. Updating Stock Inventory

Instead of making users wait for stock counts to update, APIs can:

- Accept the order & enqueue an inventory update.

- Let a background worker update stock counts and suggested-product data.
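One detail worth handling in an inventory worker: many queues guarantee at-least-once delivery (for example, SQS standard queues, or RabbitMQ when a consumer fails before acknowledging), so the same message can arrive twice. A minimal sketch, assuming each message carries a unique `id` and using an in-memory `Set` where a real system would use a database table, makes the update idempotent so that a redelivery does not decrement stock twice.

```javascript
// Inventory worker that tolerates duplicate deliveries.
// The in-memory Map and Set stand in for database tables (an assumption).
const stock = new Map([["SKU-1", 10], ["SKU-2", 5]]);
const processedMessageIds = new Set();

function handleInventoryUpdate(message) {
  if (processedMessageIds.has(message.id)) {
    return "skipped-duplicate"; // already applied; do nothing
  }
  for (const { sku, qty } of message.items) {
    const current = stock.get(sku) ?? 0;
    stock.set(sku, Math.max(0, current - qty)); // clamp at zero for simplicity
  }
  processedMessageIds.add(message.id);
  return "applied";
}

const message = { id: "order-77", items: [{ sku: "SKU-1", qty: 3 }, { sku: "SKU-2", qty: 1 }] };
console.log(handleInventoryUpdate(message)); // applied
console.log(handleInventoryUpdate(message)); // skipped-duplicate (redelivery)
console.log(stock.get("SKU-1")); // 7, not 4
```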

6. Best Practices for Asynchronous Processing

- Choose the right queue – Redis for speed, RabbitMQ for reliability, Kafka for streaming.

- Set task priorities – If possible, process urgent tasks first.

- Monitor failed jobs – Implement retries and logging.

- Auto-scale workers – Handle increased load dynamically.
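The retry and logging advice can be sketched as a small wrapper. This is plain Node.js under stated assumptions (three attempts, a 10 ms base delay that doubles on each retry, an in-memory dead-letter list). Queue libraries such as BullMQ offer built-in retry options, so treat this as an illustration of the idea rather than production code.

```javascript
// Retry wrapper with exponential backoff, failure logging and a dead-letter list.
// maxAttempts, baseDelayMs and the in-memory deadLetters array are assumptions.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const deadLetters = [];

async function processWithRetry(job, handler, { maxAttempts = 3, baseDelayMs = 10 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await handler(job);
    } catch (err) {
      console.error(`job ${job.id} attempt ${attempt}/${maxAttempts} failed: ${err.message}`);
      if (attempt === maxAttempts) {
        deadLetters.push({ job, error: err.message }); // park it for inspection or replay
        return undefined;
      }
      await sleep(baseDelayMs * 2 ** (attempt - 1)); // delay doubles: 10 ms, 20 ms, ...
    }
  }
}

// Hypothetical handlers: one fails twice and then succeeds, one always fails.
let calls = 0;
async function flakyEmailSend(job) {
  calls++;
  if (calls < 3) throw new Error("SMTP timeout");
  return `sent to ${job.to}`;
}
async function alwaysFails() {
  throw new Error("invalid address");
}

const outcome = (async () => {
  const ok = await processWithRetry({ id: 1, to: "a@example.com" }, flakyEmailSend);
  const failed = await processWithRetry({ id: 2, to: "bad" }, alwaysFails);
  return { ok, failed };
})();
```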

Conclusion: Make Your APIs Lightning Fast

Asynchronous processing and queues remove bottlenecks, improve scalability, and enhance user experience. Whether you're handling emails, payments, or heavy computations, queues make APIs smoother and more resilient.

How do you handle background tasks in your APIs? Drop a comment below!

Further Reading & Resources
