MCP: Is It Really Worth Its Hype?

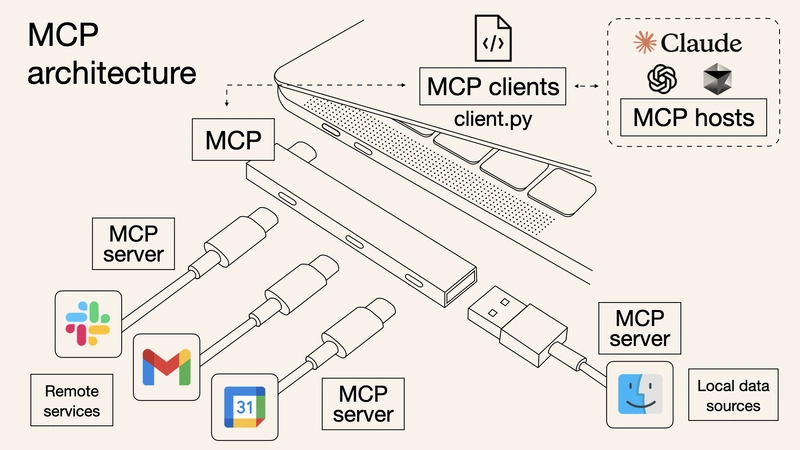


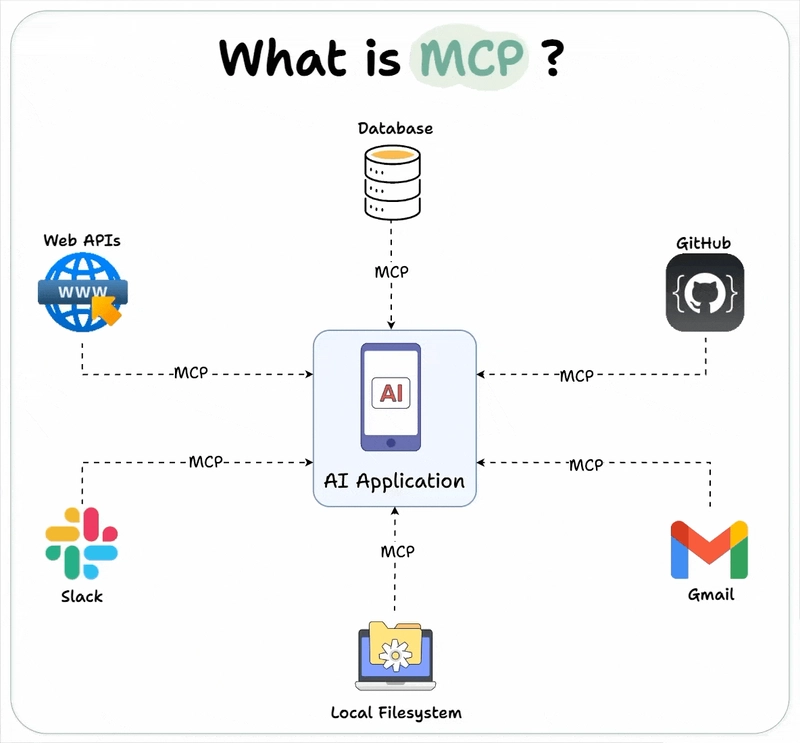

The technology community cannot stop talking about MCP, short for Model Context Protocol. Developed by Anthropic, the maker of Claude, and open-sourced in November 2024, MCP addresses the complexities of connecting AI models to various tools and datasets. While it flew under the radar last year, it has been gaining serious momentum lately, with developers, platforms, and companies increasingly jumping on board.

Well then,

What is MCP?

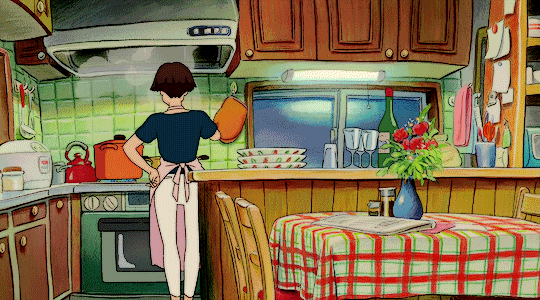

MCP is an open protocol that lets AI systems and agents connect to external tools and resources seamlessly.

Think of MCP like a USB port for your AI tools: it lets you plug into all the different data sources you need. Sure, everything works smoothly when you're starting a project from scratch on an AI coding platform. But what if you need to pull in code from older projects on GitHub, fetch documents from Google Drive, and stay on top of updates your QA team is dropping in Slack? With MCP, all you need to do is connect GitHub, Slack, and Drive to the AI coding agents you use, so that your workflow doesn't miss a beat.

Before MCP, each of the tools needed custom coding.

- So, _MCP provides a universal protocol that allows AI systems to maintain context across different applications, simplifying the development of intelligent agents._

Who Has Adopted It?

- OpenAI: Announced MCP support in a tweet.

- Google DeepMind: Confirmed MCP support in their upcoming Gemini models.

- Developer tools like Replit, Codeium, and Sourcegraph have used MCP to enhance their AI agents' capabilities.

MCP vs LLM: Understanding the Difference

While both MCP and Large Language Models (LLMs) are integral to AI development, they serve distinct purposes:

LLMs: These are advanced AI models trained on vast datasets to understand and generate human-like text. They excel in tasks such as language translation, content creation, and summarisation.

MCP: This is a protocol that facilitates communication between LLMs and external applications, allowing AI systems to perform tasks beyond text generation, such as interacting with databases, executing functions, and maintaining contextual awareness across different tools.

- Let's understand this with a real-life analogy:

Imagine a chef (LLM) who is highly skilled in cooking but works in a kitchen (MCP) equipped with various tools and ingredients. The kitchen provides the chef with everything needed to prepare a dish, such as access to a refrigerator (database), stove (execution environment), and pantry (file system). Without the kitchen, the chef's abilities would be limited to what they can carry, but with the kitchen, they can create a wide array of dishes by utilising the available resources.

In essence, while LLMs provide the cognitive capabilities, MCP enables them to interact with the external world effectively.

Oookay, but that's why we have APIs, right?

MCP vs APIs: Understanding the Difference

Model Context Protocol (MCP) is a standard protocol that lets AI agents access and understand external tools and data.

An Application Programming Interface (API) lets different applications interact with each other.

MCP: Interoperable across protocols and systems by nature.

APIs: Require custom code for each integration.

MCP is AI-native and therefore works directly with LLMs and AI tools.

APIs are not inherently built for AI systems.

Now that we've cleared up the differences between MCP, APIs, and LLMs, let's dive into how MCP actually works.

Core Components & Working of MCP

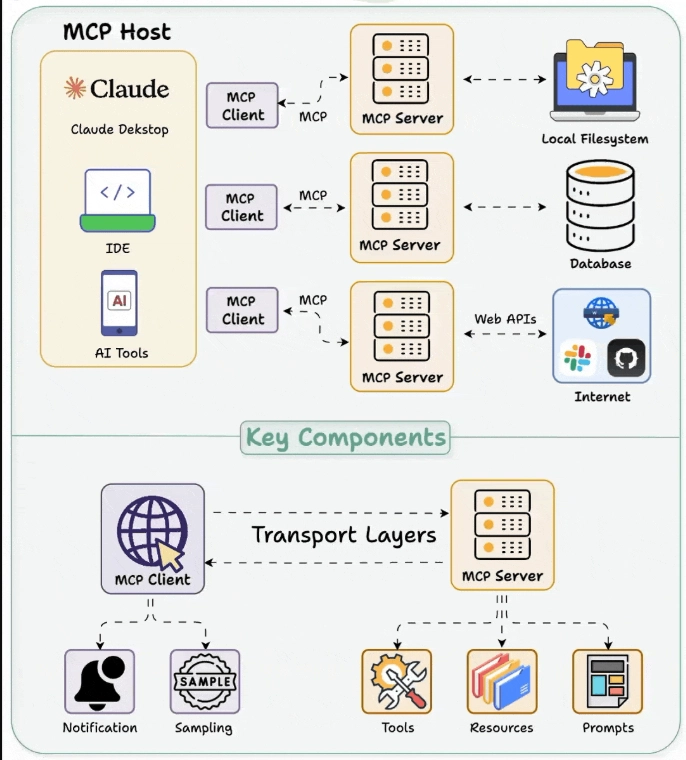

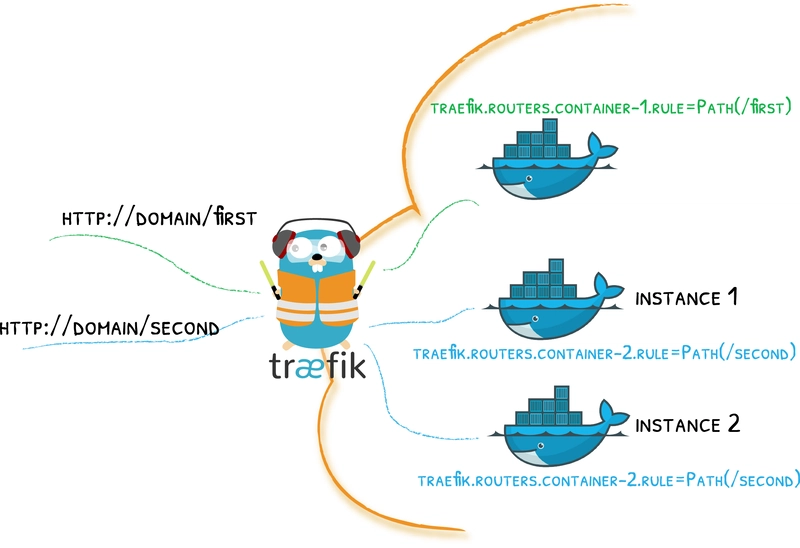

At a high level, MCP follows a client-server architecture:

Hosts are the LLM applications, such as IDE extensions or desktop AI clients, that initiate connections.

Clients are embedded inside hosts and maintain one-to-one connections with servers.

Servers provide essential context, serve tools, manage resource access, and deliver prompts to clients.

Instead of hardcoding custom integrations, clients simply send structured MCP requests to servers, which in turn communicate with tools and return structured MCP responses.
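To make "structured MCP requests" a bit more concrete, here is a minimal sketch in Python of what one request and its response might look like. The `tools/call` method and the overall message shape follow the MCP specification as I understand it; the tool name `search_issues`, its arguments, and the reply text are hypothetical and only there for illustration.

```python
import json

def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

def make_tool_result(request_id, text):
    """Build the matching response; the shared id links it to the request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}]},
    }

# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(1, "search_issues", {"query": "login bug"})
response = make_tool_result(request["id"], "3 open issues found")

print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```

The client never needs to know how the server talks to the underlying tool; it only sees this request and response pair.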

Its core components include the protocol layer, transport layer, and message handling system.

Protocol Layer

The protocol layer is responsible for the high-level communication logic, handling message framing, request-response linking, and notification management between clients and servers.

Functionalities in the protocol include:

Handling incoming requests through schema-validated handlers.

Processing incoming notifications asynchronously.

Sending outgoing requests and awaiting structured responses.

Sending one-way notifications that don’t expect a response.

At the code level, MCP exposes this functionality through a Protocol class with key methods like setRequestHandler, setNotificationHandler, request, and notification. These abstractions ensure that developers can work with clean, predictable communication patterns.
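The four methods above can be pictured with a toy dispatcher. This is not the SDK's Protocol class; it is a simplified Python sketch with snake_case names of my own choosing, no schema validation, and no tracking of responses to outgoing requests. The `send` callable and the `handle` method are assumptions made for the example.

```python
class Protocol:
    """Toy dispatcher loosely modelled on the four methods named above."""

    def __init__(self, send):
        self._send = send                  # callable that transmits one message dict
        self._request_handlers = {}
        self._notification_handlers = {}
        self._next_id = 0

    def set_request_handler(self, method, handler):
        self._request_handlers[method] = handler

    def set_notification_handler(self, method, handler):
        self._notification_handlers[method] = handler

    def request(self, method, params=None):
        """Send a request and return its id so a reply can be matched later."""
        self._next_id += 1
        self._send({"jsonrpc": "2.0", "id": self._next_id,
                    "method": method, "params": params or {}})
        return self._next_id

    def notification(self, method, params=None):
        """Send a one-way message: it carries no id, so no reply is expected."""
        self._send({"jsonrpc": "2.0", "method": method, "params": params or {}})

    def handle(self, message):
        """Route one incoming request or notification to its handler."""
        method = message.get("method")
        if method is None:
            return                         # replies are not tracked in this sketch
        params = message.get("params", {})
        if "id" not in message:            # no id means it is a notification
            handler = self._notification_handlers.get(method)
            if handler is not None:
                handler(params)
            return
        handler = self._request_handlers.get(method)
        if handler is None:
            self._send({"jsonrpc": "2.0", "id": message["id"],
                        "error": {"code": -32601, "message": "Method not found"}})
        else:
            self._send({"jsonrpc": "2.0", "id": message["id"],
                        "result": handler(params)})

# Usage: a list stands in for the transport.
outbox = []
server = Protocol(outbox.append)
server.set_request_handler("ping", lambda params: {})
server.handle({"jsonrpc": "2.0", "id": 7, "method": "ping"})
print(outbox[0])
```

Registering handlers by method name keeps the routing logic in one place, which is the "clean, predictable" part of the pattern.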

Transport Layer

The transport layer handles the transmission of MCP messages between clients and servers. MCP supports multiple transport mechanisms depending on the deployment scenario:

Stdio Transport uses standard input/output for communication, making it ideal for local, same-machine communication between processes. It is lightweight and easy to manage.

HTTP with Server-Sent Events (SSE) Transport is designed for remote communication, using HTTP POST for client-to-server messages and SSE for server-to-client streaming messages. This allows integration into cloud-based architectures or web-friendly environments.

Regardless of the transport, MCP messages are exchanged using JSON-RPC 2.0, ensuring consistent structure and compatibility across different platforms and programming languages.
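As a rough illustration of the stdio case, the sketch below writes each JSON-RPC message as one line of JSON and reads it back. It assumes newline-delimited framing, and it uses an in-memory `io.StringIO` buffer as a stand-in for a real process's stdin and stdout; the helper names `write_message` and `read_messages` are made up for this example.

```python
import io
import json

def write_message(stream, message):
    """Serialise one message as a single line of JSON."""
    stream.write(json.dumps(message) + "\n")

def read_messages(stream):
    """Yield each non-empty line of the stream as a parsed message."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)

# An in-memory buffer stands in for the pipe between two processes.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})

pipe.seek(0)
received = list(read_messages(pipe))
print(received)
```

Because the payload is plain JSON-RPC either way, the same message dictionaries could be sent over HTTP instead without changing how they are built.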

Core Message Types in MCP

Communication in MCP is structured around four major message types:

- Requests are sent when a client or server needs a response from the other side.

- Results are successful responses to requests.

- Errors indicate that a request has failed, with detailed error codes and descriptions.

- Notifications are one-way messages sent without expecting any reply.

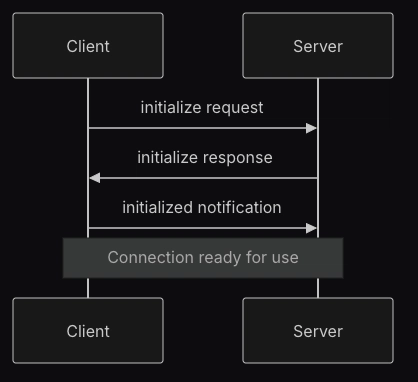
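One way to see how the four message types differ is to look at which JSON-RPC 2.0 fields each one carries. The small Python sketch below sorts a message into one of the four kinds; the `classify` helper is hypothetical and skips full validation.

```python
def classify(message):
    """Return which of the four message kinds a JSON-RPC 2.0 dict represents."""
    if "method" in message:
        # Requests carry an id so the reply can be matched; notifications do not.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error"
    if "result" in message:
        return "result"
    raise ValueError("not a recognisable JSON-RPC 2.0 message")

samples = [
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}},
    {"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
]

kinds = [classify(m) for m in samples]
print(kinds)
```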

Connection Lifecycle

An MCP connection goes through several phases:

Initialisation

- The client sends an initialize request specifying the protocol version and its capabilities.

- The server responds with its version and capabilities.

- The client sends an initialized notification, confirming that it is ready to start normal messaging.
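The three steps above can be sketched as three messages. The field names follow the MCP specification as I understand it, and `2024-11-05` is one published protocol revision. The client and server names, the empty capability sets, and the server simply echoing the client's version are simplifying assumptions for illustration.

```python
PROTOCOL_VERSION = "2024-11-05"  # one published MCP revision; adjust as needed

def initialize_request(request_id):
    """Step 1: the client announces its protocol version and capabilities."""
    return {
        "jsonrpc": "2.0", "id": request_id, "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
        },
    }

def initialize_response(request):
    """Step 2: the server replies with its own version and capabilities."""
    return {
        "jsonrpc": "2.0", "id": request["id"],
        "result": {
            # Assumption: the server accepts whatever version the client asked for.
            "protocolVersion": request["params"]["protocolVersion"],
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "example-server", "version": "0.1.0"},  # hypothetical
        },
    }

def initialized_notification():
    """Step 3: the client confirms it is ready; no id, so no reply is expected."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}

handshake = [initialize_request(1)]
handshake.append(initialize_response(handshake[0]))
handshake.append(initialized_notification())

for step in handshake:
    print(step.get("method", "result"))
```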

Message Exchange

- After initialisation, both parties can send requests and notifications freely.

- Request-Response and one-way notification patterns are supported simultaneously.

Termination

- Either the client or server can terminate the connection cleanly.

- Disconnections may also happen due to transport errors or shutdowns.
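The lifecycle as a whole behaves like a small state machine. The Python sketch below is one hypothetical way to model it. The state names and the `Connection` class are my own and are not taken from any SDK.

```python
from enum import Enum

class State(Enum):
    CONNECTING = "connecting"   # handshake not finished yet
    READY = "ready"             # normal message exchange
    CLOSED = "closed"           # terminated cleanly or by a transport error

class Connection:
    def __init__(self):
        self.state = State.CONNECTING

    def complete_handshake(self):
        if self.state is not State.CONNECTING:
            raise RuntimeError("handshake is only valid while connecting")
        self.state = State.READY

    def send(self, message):
        """Allow requests and notifications only once initialisation is done."""
        if self.state is not State.READY:
            raise RuntimeError(f"cannot send while {self.state.value}")
        return message

    def close(self):
        """Either side may end the connection at any point."""
        self.state = State.CLOSED

conn = Connection()
conn.complete_handshake()
conn.send({"jsonrpc": "2.0", "id": 1, "method": "ping"})
conn.close()
print(conn.state.value)
```

Guarding `send` on the state makes it hard to send normal traffic before the handshake has finished or after the connection has closed.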

Why Is It Important & Is It Really Worth Its Hype?

Makes AI agents more intelligent and useful through context.

Reduces time required for integrating multiple tools.

MCP definitely lives up to the hype. It makes connecting AI models to tools super easy, cutting out all the messy custom work. If you want to build smarter, more flexible AI apps without the usual headaches, MCP is a total game-changer.

Below are several applications and platforms that currently support the Model Context Protocol (MCP):

Claude Desktop – Developed by Anthropic, this is the official MCP client.

Replit – A collaborative coding platform integrating MCP for enhanced AI agent capabilities.

Codeium – An AI-powered code completion tool utilizing MCP.

Sourcegraph – A code search and navigation platform supporting MCP.

Zed – An IDE that integrates MCP for improved AI-assisted development.

Spring AI – A Java framework offering native MCP support for enterprise applications.

Drupal – A content management system with dedicated MCP integration.

Quarkus – A Java-based framework providing MCP support for enterprise integrations.

AWS Bedrock – Amazon's managed service for building and scaling generative AI applications, supporting MCP.

Google Cloud – Offers MCP integration for various AI and cloud services.

Brave Search – A privacy-focused search engine with MCP support.

GitHub – Supports MCP for repository management and interactions.

GitLab – Offers MCP integration for version control and CI/CD pipelines.

Google Drive – Provides MCP support for file management and interactions.

Google Maps – Integrates MCP for location-based AI interactions.

Sentry – An error monitoring platform with MCP support.

Puppeteer – A Node.js library for browser automation, supporting MCP.

Playwright – A browser automation tool with MCP integration.

YouTube Transcript Server – Enables retrieval of YouTube video transcripts via MCP.

Apple Shortcuts – Allows MCP-based automation on Apple devices.

Azure OpenAI Integration – Microsoft's integration of Azure OpenAI services with MCP support.

Web Search MCP Server – Enables free web searching using Google search results via MCP.

Video Editor MCP – Integrates with Video Jungle for video editing through MCP.

Rijksmuseum MCP Server – Provides access to the Rijksmuseum's collection for AI interactions via MCP.

Kubernetes MCP Server – Allows interaction with Kubernetes clusters through MCP.

Netskope NPA MCP Server – Manages Netskope Network Private Access infrastructure through MCP.

iTerm MCP Server – Provides access to iTerm sessions for efficient token use and full terminal control via MCP.

CLI MCP Server – Executes command-line operations securely via MCP.

MCP Shell Server – Implements shell command execution through MCP.

Google Tasks MCP Server – Integrates with Google Tasks for task management via MCP.

iMessage Query MCP Server – Provides access to iMessage database for querying and analysing conversations via MCP.

Glean MCP Server – Integrates with the Glean API, offering search and chat functionalities via MCP.

Gmail AutoAuth MCP Server – Enables Gmail integration in Claude Desktop with auto-authentication support via MCP.

Google Calendar MCP Server – Allows interaction with Google Calendar for event management via MCP.

Grafana MCP Server – Provides access to Grafana instances and related tools via MCP.

Home Assistant MCP Server – Enables natural language control and monitoring of smart home devices via MCP.

Inoyu Apache Unomi MCP Server – Enables user context maintenance through Apache Unomi profile management via MCP.

Integration App MCP Server – Provides tools for various integrations within an Integration App workspace via MCP.

JetBrains MCP Proxy Server – Integrates JetBrains IDE functionalities via MCP.

JSON MCP Server – Enables interaction with JSON data through MCP.

Kagi MCP Server – Integrates search capabilities from the Kagi Search API via MCP.

Keycloak MCP Server – Manages users and realms in Keycloak via MCP.

Linear MCP Server – Integrates with Linear's issue tracking system via MCP.

MCP Code Executor – Allows execution of Python code within a specified Conda environment via MCP.

Atlassian MCP Server – Integrates with Atlassian Cloud products like Confluence and Jira via MCP.

BigQuery MCP Server – Enables interaction with BigQuery data via MCP.

MCP Compass – A discovery and recommendation service to explore MCP servers with natural language queries.

Free USDC Transfer MCP Server – Enables free USDC transfers on Base with Coinbase CDP MPC Wallet integration via MCP.

MCP Google Server – Provides access to Google Custom Search and webpage content extraction via MCP.

mcp-hfspace MCP Server – Connects to Hugging Face Spaces with minimal setup via MCP.

HubSpot MCP Server – Integrates with HubSpot CRM via MCP.

mcp-installer – Installs other MCP servers via MCP.

Kibela MCP Server – Integrates with Kibela API via MCP.

MCP MongoDB Server – Provides access to MongoDB databases via MCP.

Neo4j MCP Clients & Servers – Interacts with Neo4j and Aura accounts using natural language via MCP.

Neo4j Server – Integrates Neo4j graph database with Claude.

Social Media Handles

If you found this article helpful, feel free to connect with me on my social media channels for more insights:

- GitHub: [AmanjotSingh0908]

- LinkedIn: [Amanjot Singh Saini]

- Twitter: [@AmanjotSingh]

Thanks for reading!❣️
