Markov Decision Process vs Reinforcement Learning: What AI Engineers Need to Know in 2025


Markov Decision Processes (MDPs) and Reinforcement Learning (RL) are closely related, and newcomers and seasoned developers alike often conflate them, but the terms aren’t interchangeable. An MDP is a formal model of a sequential decision problem; RL is a family of methods for learning how to act in such a problem. Understanding how they differ, and how they work together, is essential for any AI engineer building decision-making models in 2025.

This guide breaks down their core differences, use cases, and practical significance, especially as demand grows for smarter, autonomous systems across industries.

Understanding the Basics

What is a Markov Decision Process (MDP)?

An MDP is a mathematical framework used to describe decision-making problems where outcomes are partly random and partly under the control of a decision maker. It provides a formal structure for modeling environments in which agents interact over time.

An MDP typically includes:

- A set of states (S)

- A set of actions (A)

- Transition probabilities (P) between states

- A reward function (R)

- A discount factor (γ) for future rewards

It assumes the Markov property: the probability distribution over the next state depends only on the current state and action, not on prior history.
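To make the five components concrete, here is a minimal sketch of a two-state MDP and a planner for it. The state names, action names, probabilities, and rewards are all invented for illustration, and value iteration is just one standard way to solve an MDP whose model is fully known.

```python
# Hypothetical two-state MDP; every name and number here is illustrative.
STATES = ["low", "high"]
ACTIONS = ["wait", "charge"]
GAMMA = 0.9  # discount factor

# P[(state, action)] -> list of (next_state, probability)
P = {
    ("low", "wait"): [("low", 1.0)],
    ("low", "charge"): [("high", 0.8), ("low", 0.2)],
    ("high", "wait"): [("high", 0.7), ("low", 0.3)],
    ("high", "charge"): [("high", 1.0)],
}

# R[(state, action)] -> immediate reward
R = {
    ("low", "wait"): 0.0,
    ("low", "charge"): -1.0,
    ("high", "wait"): 2.0,
    ("high", "charge"): 0.0,
}


def q_value(V, s, a):
    """Expected return of taking action a in state s, then following V."""
    return R[(s, a)] + GAMMA * sum(p * V[s2] for s2, p in P[(s, a)])


def value_iteration(tol=1e-8, max_iters=10_000):
    """Repeat the Bellman optimality backup until the values stop changing."""
    V = {s: 0.0 for s in STATES}
    for _ in range(max_iters):
        new_V = {s: max(q_value(V, s, a) for a in ACTIONS) for s in STATES}
        delta = max(abs(new_V[s] - V[s]) for s in STATES)
        V = new_V
        if delta < tol:
            break
    # Read off the greedy policy from the converged values.
    policy = {s: max(ACTIONS, key=lambda a: q_value(V, s, a)) for s in STATES}
    return V, policy


V, policy = value_iteration()
print(policy)
```

Note that the planner reads `P` and `R` directly and never interacts with an environment. That is what "known model" means in practice.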

Want a deep dive into how MDPs are structured and used in AI? Explore the full explanation here: Markov Decision Process in Artificial Intelligence

What is Reinforcement Learning (RL)?

Reinforcement Learning is a machine learning paradigm that focuses on training agents to make sequences of decisions. It involves learning an optimal policy through trial-and-error interactions with an environment, receiving rewards or penalties as feedback.

An RL system generally consists of:

- An agent (learner/decision-maker)

- An environment (the world with which the agent interacts)

- A policy (agent’s behavior)

- A reward signal

- A value function (expected return)
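The "expected return" in the last item can be written out explicitly. Under the usual discounted-return convention (the symbols G_t, R_{t+k+1}, S_t, and π are standard textbook notation, not taken from this article), the return and the value function of a policy π are typically defined as:

```latex
G_t = \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1},
\qquad
V^{\pi}(s) = \mathbb{E}_{\pi}\left[\, G_t \mid S_t = s \,\right]
```

With γ = 0.9, for example, a reward that arrives two steps later is weighted by 0.9² = 0.81.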

Reinforcement Learning builds upon MDPs by allowing agents to learn optimal strategies (policies) in environments where the transition probabilities or rewards are unknown.
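As a rough illustration of learning without a known model, here is a sketch of tabular Q-learning, one common model-free RL algorithm. The two-state environment, its probabilities and rewards, and the hyperparameters (`alpha`, `epsilon`, `steps`) are all assumptions made for this example. The key point is that the agent only calls `step()` and observes samples; it never reads the transition probabilities.

```python
import random


def step(state, action, rng):
    """Hypothetical environment: returns (next_state, reward) for one move.

    The probabilities below are hidden from the agent, which only sees samples.
    """
    if state == "low":
        if action == "charge":
            return ("high" if rng.random() < 0.8 else "low"), -1.0
        return "low", 0.0
    if action == "wait":
        return ("high" if rng.random() < 0.7 else "low"), 2.0
    return "high", 0.0


def q_learning(steps=50_000, alpha=0.05, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    states, actions = ["low", "high"], ["wait", "charge"]
    Q = {(s, a): 0.0 for s in states for a in actions}
    state = "low"
    for _ in range(steps):
        # Epsilon-greedy: mostly exploit the current estimate, sometimes explore.
        if rng.random() < epsilon:
            action = rng.choice(actions)
        else:
            action = max(actions, key=lambda a: Q[(state, a)])
        next_state, reward = step(state, action, rng)
        # Temporal-difference update toward reward + discounted best next value.
        target = reward + gamma * max(Q[(next_state, a)] for a in actions)
        Q[(state, action)] += alpha * (target - Q[(state, action)])
        state = next_state
    return Q


Q = q_learning()
greedy = {s: max(["wait", "charge"], key=lambda a: Q[(s, a)]) for s in ["low", "high"]}
print(greedy)
```

The update rule uses only the observed `(state, action, reward, next_state)` tuple, which is why the same loop works whether or not anyone can write down the environment's dynamics.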

MDP vs Reinforcement Learning: Key Differences

| Feature | Markov Decision Process (MDP) | Reinforcement Learning (RL) |

|---|---|---|

| Nature | Mathematical framework | Learning paradigm based on MDP |

| Knowledge of environment | Requires full knowledge of transitions and rewards | Can operate without full knowledge |

| Used for | Modeling decision problems | Learning optimal policies in unknown environments |

| Learning involved | No learning, assumes known model | Learning through interaction |

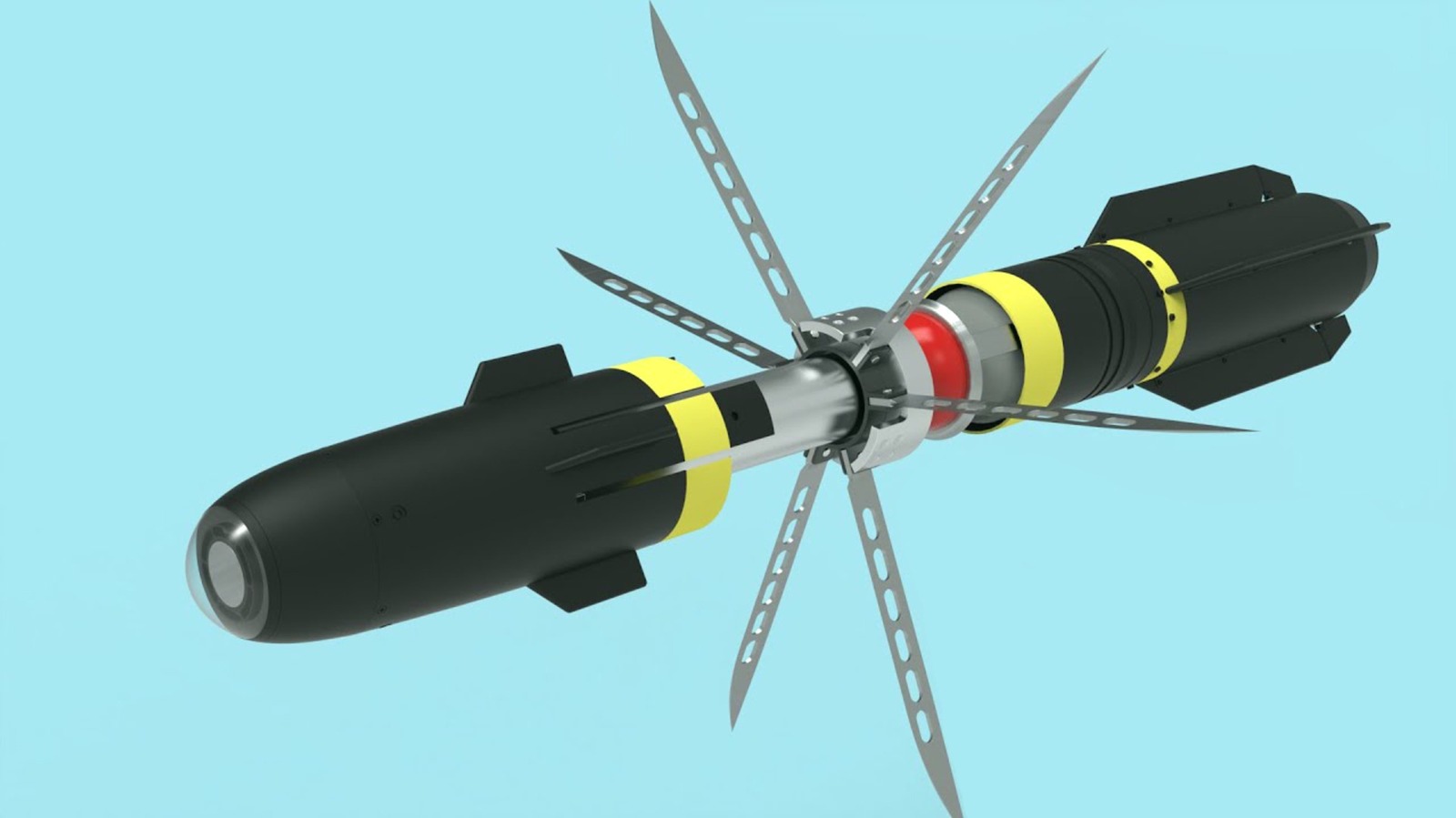

| Example | Planning in robotics with known dynamics | Training a robot using trial-and-error |

Why This Matters in 2025

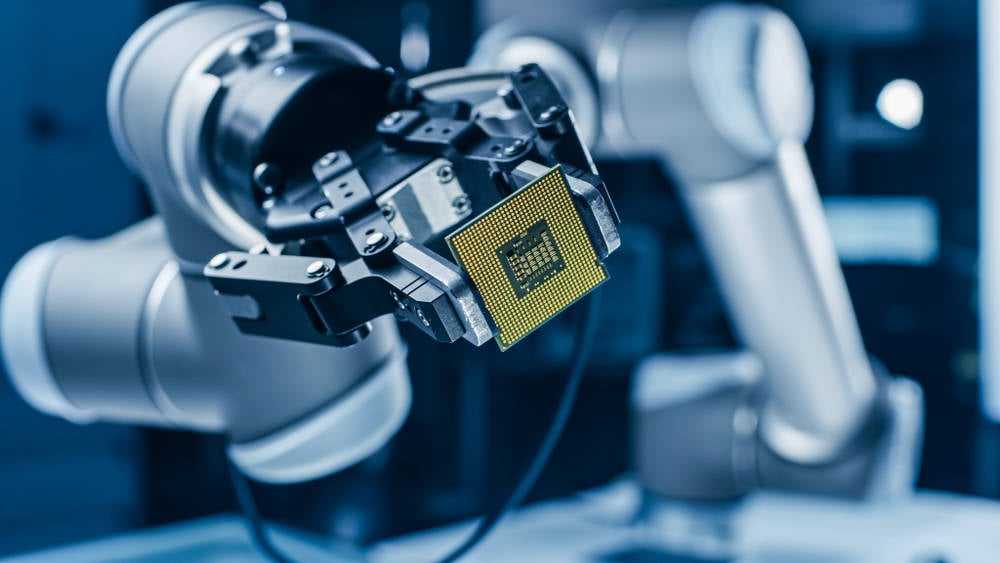

With the increasing deployment of AI in real-world systems like autonomous vehicles, recommendation engines, and robotic process automation, engineers must distinguish when to use MDPs for planning and when to apply RL for learning in uncertain environments.

Key 2025 trends:

- Model-free RL continues to gain popularity due to scalability in unknown environments.

- Model-based RL, which learns or is given an explicit model of the transitions and rewards and then plans with it, is seeing a resurgence in real-time simulations.

- Hybrid approaches are bridging the gap, especially in healthcare, fintech, and logistics.
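One common reading of "model-based" is that the agent first estimates transitions and rewards from experience, then hands that estimate to a planner. The sketch below covers only the estimation half, using simple counting. The environment, the uniform sampling of state-action pairs, and the names `sample_env` and `estimate_model` are assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict


def sample_env(state, action, rng):
    """Hypothetical black-box environment returning (next_state, reward)."""
    if state == "low":
        if action == "charge":
            return ("high" if rng.random() < 0.8 else "low"), -1.0
        return "low", 0.0
    if action == "wait":
        return ("high" if rng.random() < 0.7 else "low"), 2.0
    return "high", 0.0


def estimate_model(num_samples=20_000, seed=0):
    """Estimate transition probabilities and mean rewards by counting samples."""
    rng = random.Random(seed)
    states, actions = ["low", "high"], ["wait", "charge"]
    counts = defaultdict(Counter)      # (s, a) -> Counter of next states
    reward_sums = defaultdict(float)   # (s, a) -> total observed reward
    for _ in range(num_samples):
        s, a = rng.choice(states), rng.choice(actions)
        s2, r = sample_env(s, a, rng)
        counts[(s, a)][s2] += 1
        reward_sums[(s, a)] += r
    P_hat = {
        sa: {s2: n / sum(c.values()) for s2, n in c.items()}
        for sa, c in counts.items()
    }
    R_hat = {sa: reward_sums[sa] / sum(counts[sa].values()) for sa in counts}
    return P_hat, R_hat


P_hat, R_hat = estimate_model()
print(P_hat[("low", "charge")])
```

The estimated `P_hat` and `R_hat` have the same shape as an MDP's transition and reward functions, so any MDP planner can run on them. The quality of the resulting plan depends on how accurate the estimate is.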

Use Cases to Watch in 2025

- Autonomous Vehicles
  - MDP: Used in simulation environments with defined rules.
  - RL: Real-time adaptation in traffic scenarios.

- Supply Chain Optimization
  - MDP: Optimal planning when historical data is reliable.
  - RL: Learning from dynamic market behaviors.

- Personalized Education Platforms
  - MDP: Initial model setup.
  - RL: Adaptive learning paths based on user engagement.

Final Thoughts

MDPs are the formal foundation; Reinforcement Learning is the set of methods for learning to act when the model is unknown, as is common in dynamic, often uncertain, real-world environments. Understanding this distinction empowers AI engineers to build robust, intelligent systems in 2025.

Want to go deeper into how MDPs work, with examples and real-world applications? Check out the full article here: Markov Decision Process in Artificial Intelligence – Applied AI Blog
