Jozu Hub: Your private, on-prem Hugging Face registry

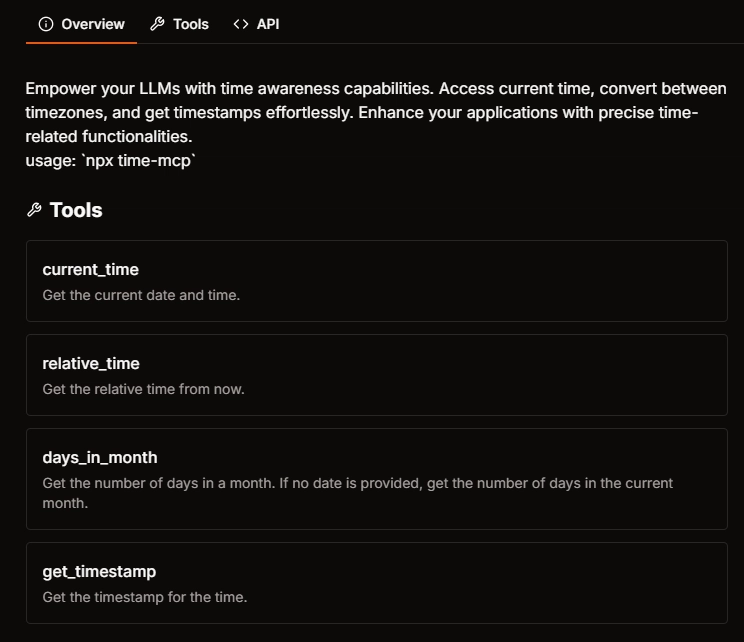


We've covered how to secure and deploy Hugging Face models with Jozu Hub, creating a solid pipeline for model serving with strong security features. We've also examined how to build an MLOps pipeline with Dagger.io and KitOps to move AI projects to production and how KitOps and Ray simplify model scaling.

This guide builds on those ideas to show how to curate and secure models from Hugging Face and host them in your own private model registry. We'll walk through importing a model from a Hugging Face repository, packaging it into a ModelKit, and pushing it to Jozu Hub for secure storage.

When used together, KitOps and Hugging Face can make model development more efficient. The approach enables direct model imports and maintains consistency between model artifacts and their dependencies. Version tracking becomes more straightforward, and the immutable nature of ModelKits helps maintain integrity throughout the development process.

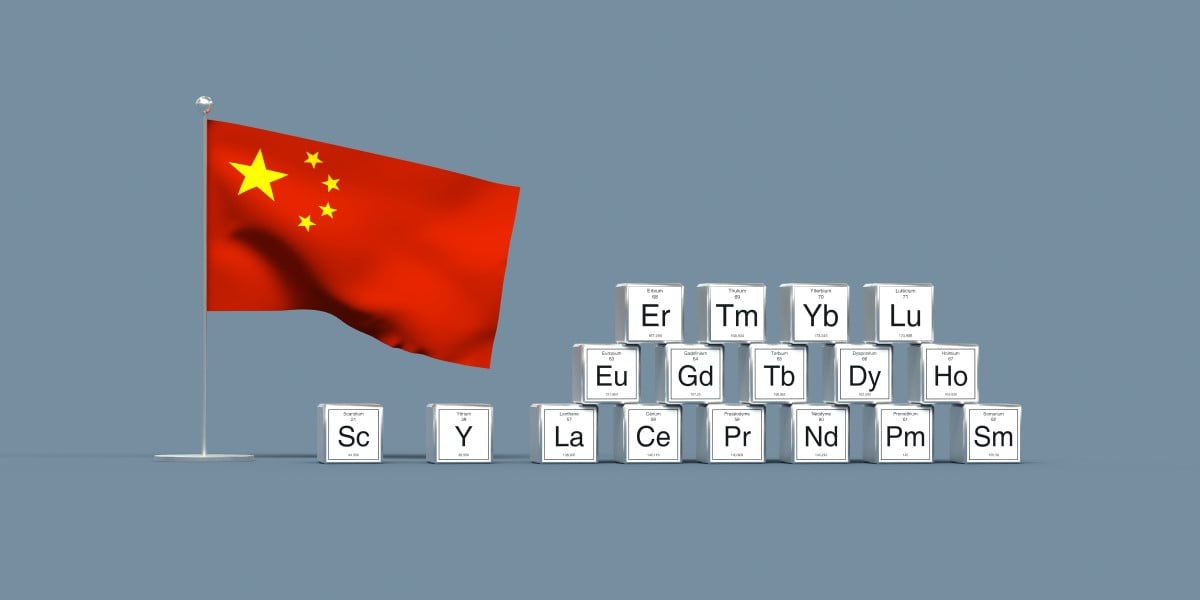

With over 1 million models hosted on Hugging Face, it has become central to model development, but this scale creates challenges. Finding the right model can be difficult, and verifying quality and security isn't always straightforward. Many teams need a private, on-premises solution to host selected models. Jozu Hub offers one approach to creating such an environment for a team's model development lifecycle.

Importing a model from Hugging Face

Prerequisites

To follow along with this tutorial, you will need the following:

- A container registry: You can use Jozu Hub, the GitHub Packages registry, or Docker Hub. This guide uses Jozu Hub, which includes model auditing features.

- KitOps: Check out the guide to installing KitOps.

Step 1: Install KitOps

First, make sure you have the latest version of Kit CLI installed locally. Once installed, run the command below to verify the installation:

kit version

You should see an output like this:

Version: 1.2.1

Commit: 561aa53ec7cfa9bc52ed76edd448228aad57c5ef

Built: 2025-02-18T22:26:11Z

Go version: go1.22.6
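Because the import feature used later in this guide is described as arriving in KitOps v1.0, it can be handy to check the installed version in a script. The following is a minimal sketch, not part of the KitOps tooling: the helper names parse_kit_version and version_at_least are hypothetical, and it assumes the output format shown above plus a sort that supports -V (GNU coreutils and recent macOS do).

```shell
#!/usr/bin/env bash

# Extract the version number from `kit version` output read on stdin.
# Assumes a line of the form "Version: X.Y.Z", as in the sample output.
parse_kit_version() {
  awk '/^Version:/ { print $2; exit }'
}

# Return 0 if version $1 is greater than or equal to version $2.
# Relies on `sort -V` (version sort) to order the two values.
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Typical use (requires the Kit CLI on PATH):
#   installed="$(kit version | parse_kit_version)"
#   version_at_least "$installed" "1.0.0" || echo "Please upgrade KitOps" >&2
```

Keeping the parsing separate from the comparison means each piece can be exercised without the Kit CLI installed.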

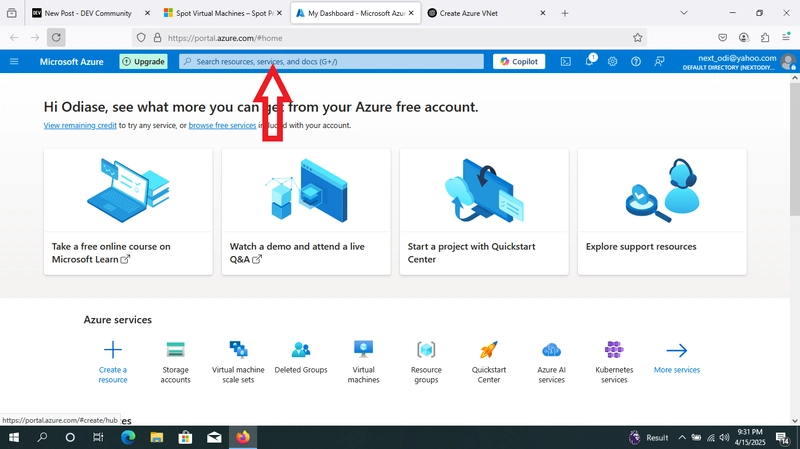

Step 2: Create a Jozu Hub repository

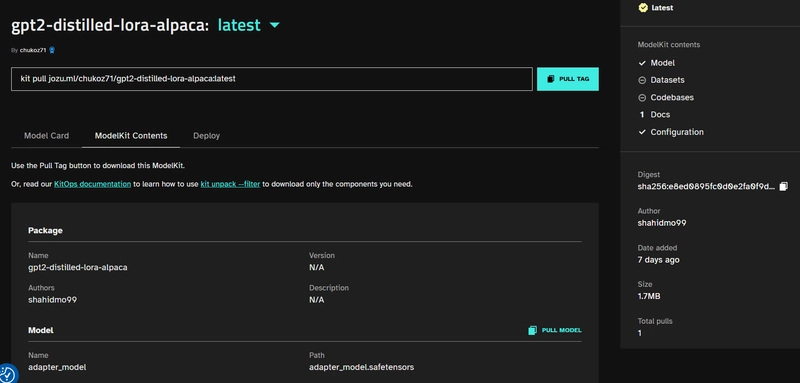

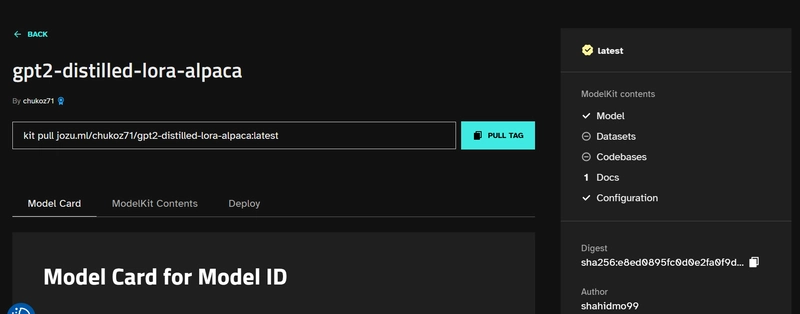

Navigate to Jozu Hub, sign up for free, and create a repository. For demonstration, let's create an empty repository called gpt2-distilled-lora-alpaca.

Tip: You can also import models directly from Hugging Face without installing KitOps locally. Simply click the "Add Repository" button in the Jozu Hub interface and select "Import from Hugging Face" from the dropdown menu. This streamlines the process by handling the import and packaging within the Jozu Hub platform.

After signing up, authenticate with the registry from your local terminal by running kit login jozu.ml and entering your username and password when prompted.

Note: Your username is the email address used to create your Jozu Hub account.

kit login jozu.ml

You'll be prompted for your credentials, followed by a success message:

Username: your-email@companyname.com

Password:

Log in successful
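For CI jobs or other non-interactive shells, the prompt above can be avoided. The sketch below is an illustration only: the function name jozu_login and the JOZU_USERNAME / JOZU_PASSWORD environment variables are hypothetical, and it assumes your Kit CLI version accepts the --username and --password-stdin flags on kit login (confirm with kit login --help before relying on it).

```shell
#!/usr/bin/env bash

# Log in to Jozu Hub without an interactive prompt.
# Reads credentials from JOZU_USERNAME and JOZU_PASSWORD (hypothetical names)
# and pipes the password on stdin so it does not appear in the process list.
jozu_login() {
  if [ -z "${JOZU_USERNAME:-}" ] || [ -z "${JOZU_PASSWORD:-}" ]; then
    echo "JOZU_USERNAME and JOZU_PASSWORD must be set" >&2
    return 1
  fi
  # Assumption: `kit login` supports --username and --password-stdin.
  printf '%s' "$JOZU_PASSWORD" |
    kit login jozu.ml --username "$JOZU_USERNAME" --password-stdin
}

# Typical use:
#   export JOZU_USERNAME="your-email@companyname.com"
#   export JOZU_PASSWORD="..."   # injected from your secret store
#   jozu_login
```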

Step 3: Download a model from a Hugging Face repository

As of KitOps v1.0, the Kit CLI can import a model directly from a Hugging Face repository. Using the kit import command, you can take any model available on Hugging Face and package it into a ModelKit that you can push to container registries such as Jozu Hub and Docker Hub.

When you run the command, kit import shahidmo99/gpt2-distilled-lora-alpaca, the Kit CLI will:

- Download the shahidmo99/gpt2-distilled-lora-alpaca model from the Hugging Face repository locally.

- Generate the configuration needed to package the files in that repository into a ModelKit.

- Package the repository into a locally-stored ModelKit.

All three steps are performed by this single command:

kit import shahidmo99/gpt2-distilled-lora-alpaca

When you run kit import, it first prints the generated Kitfile (the configuration used to package the files into a ModelKit), asks whether you want to edit it, and then downloads and packs the files:

    manifestVersion: 1.0.0
    package:
      name: gpt2-distilled-lora-alpaca
      authors: [shahidmo99]
    model:
      name: adapter_model
      path: adapter_model.safetensors
      parts:
        - path: adapter_config.json
    docs:
      - path: README.md
        description: Readme file

Would you like to edit Kitfile before packing? (y/N): N

Downloading file adapter_model.safetensors

Downloading file README.md

Downloading file adapter_config.json

Packing model to shahidmo99/gpt2-distilled-lora-alpaca:latest

Saved model layer: sha256:d73d11f8de65294b35fa061563a2f7a7d4c4467deb54d26694e45a91181ce644

Saved modelpart layer: sha256:17e7fa1325601f7a934aec82436beee66d5f7a3cd073f61551ce0592060ed487

Saved docs layer: sha256:e3eb3860b8b37efb4bf1dfbc27f384c8e7b444d446066dbc3dbfa67199b45b6e

Saved configuration: sha256:b28858df0f4e33c194bfc35dfbd85ab76f85ec667aa6d51f87ab2bbfae1a002b

Saved manifest to storage: sha256:e8ed0895fc0d0e2fa0f9dfd15d624be01d15b709f3891071e3c7e72b303a8319

Model is packed as shahidmo99/gpt2-distilled-lora-alpaca:latest

Step 4: Push your ModelKit

To push the ModelKit, run the command:

kit push [HuggingFace repository name]:[your tag name] [your registry address]/[your registry user or organization name]/[your repository name]:[your tag name]

In this example, the command pushes a ModelKit tagged latest (your tag name), where:

- jozu.ml → your registry address (Jozu Hub)

- shahidmo99/gpt2-distilled-lora-alpaca → your Hugging Face model repository name

- chukoz71 → your registry user or organization name

- gpt2-distilled-lora-alpaca → your repository name

As a result, the command will look like:

kit push shahidmo99/gpt2-distilled-lora-alpaca:latest jozu.ml/chukoz71/gpt2-distilled-lora-alpaca:latest
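If you script this step, assembling the two references from their parts helps avoid typos in the long command. The following is a small sketch under stated assumptions: the helper names modelkit_source_ref and modelkit_remote_ref are hypothetical and not part of the Kit CLI; they only format strings in the shape described above.

```shell
#!/usr/bin/env bash

# Build the local source reference: <hugging-face-repo>:<tag>
modelkit_source_ref() {
  printf '%s:%s\n' "$1" "$2"
}

# Build the remote reference: <registry>/<user-or-org>/<repository>:<tag>
modelkit_remote_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Typical use (requires the Kit CLI and a prior `kit login`):
#   src="$(modelkit_source_ref shahidmo99/gpt2-distilled-lora-alpaca latest)"
#   dst="$(modelkit_remote_ref jozu.ml chukoz71 gpt2-distilled-lora-alpaca latest)"
#   kit push "$src" "$dst"
```

With the values from this guide, the two helpers produce exactly the source and destination used in the kit push command above.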

After executing the command, you should see the following output:

Pushing localhost/shahidmo99/gpt2-distilled-lora-alpaca:latest to jozu.ml/chukoz71/gpt2-distilled-lora-alpaca:latest

Copying e3eb3860 | 5.5 KiB | done

Copying d73d11f8 | 1.6 MiB | done

Copying b28858df | 765 B | done

Copying 17e7fa13 | 2.5 KiB | done

Copying e8ed0895 | 727 B | done

Pushed sha256:e8ed0895fc0d0e2fa0f9dfd15d624be01d15b709f3891071e3c7e72b303a8319
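Note that the digest on the Pushed line matches the "Saved manifest to storage" digest printed during import, which is a quick way to confirm that the ModelKit in the registry is the one you packed locally. The sketch below automates that comparison. It is an illustration only: extract_digest is a hypothetical helper, and it assumes you have captured the import and push output to text in the format shown in this guide.

```shell
#!/usr/bin/env bash

# Print the sha256 digest from the first stdin line that starts with prefix $1.
extract_digest() {
  grep -m 1 "^$1" | grep -o 'sha256:[0-9a-f]\{64\}'
}

# Typical use, assuming the command output was saved to import.log and push.log:
#   saved="$(extract_digest 'Saved manifest to storage:' < import.log)"
#   pushed="$(extract_digest 'Pushed' < push.log)"
#   [ "$saved" = "$pushed" ] && echo "Digests match" || echo "Digest mismatch" >&2
```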

Alternative Method: Direct Import via Jozu Hub

Jozu Hub also offers a simplified workflow for importing models directly from Hugging Face without a local KitOps installation. The browser-based steps are:

- Log in to your Jozu Hub account

- Click the "Add Repository" button in the top navigation

- Select "Import from Hugging Face" from the dropdown menu

- Enter the Hugging Face model name you wish to import

- Jozu Hub will handle the importing and packaging process automatically

This web-based import feature is particularly useful for teams who want to quickly add models to their private registry without configuring the KitOps CLI locally.

Conclusion

This approach to managing Hugging Face models offers a structured way to handle machine learning models throughout their development lifecycle. The ModelKit packaging method includes code, datasets, and configurations while maintaining a record of changes. This can be helpful for teams that need to track model versions and maintain consistent environments.

For teams working on projects that need to move from experimentation to production, this workflow may help maintain consistency in the deployment process. The method could be useful for various applications, from research projects to enterprise deployments.

For further discussion about KitOps implementation, there's an active community on Discord, and documentation is available on the KitOps website.
