Introduction to Data Engineering Concepts |14| DevOps for Data Engineering

Free Resources Free Apache Iceberg Course Free Copy of “Apache Iceberg: The Definitive Guide” Free Copy of “Apache Polaris: The Definitive Guide” 2025 Apache Iceberg Architecture Guide How to Join the Iceberg Community Iceberg Lakehouse Engineering Video Playlist Ultimate Apache Iceberg Resource Guide As data systems grow more complex and interconnected, the principles of DevOps—long applied to software engineering—have become increasingly relevant to data engineering. Continuous integration, infrastructure as code, testing, and automation aren’t just for deploying apps anymore. They’re essential for delivering reliable, maintainable, and scalable data pipelines. In this post, we’ll explore how DevOps practices translate into the world of data engineering, why they matter, and what tools and techniques help bring them to life in modern data teams. Bridging the Gap Between Code and Data At the heart of DevOps is the idea that development and operations should be integrated. In traditional software development, this means automating the steps from writing code to running it in production. For data engineering, the challenge is similar—but the output isn't always a user-facing app. Instead, it's pipelines, transformations, and datasets that power reports, dashboards, and machine learning models. The core question becomes: how do we ensure that changes to data workflows are tested, deployed, and monitored with the same rigor as application code? The answer lies in adopting DevOps-inspired practices like version control, automated testing, continuous deployment, and infrastructure automation—all tailored to the specifics of data systems. Version Control for Pipelines and Configurations Just like in software engineering, all code that defines your data infrastructure—SQL queries, transformation logic, orchestration DAGs, and even schema definitions—should live in version-controlled repositories. This makes it easier to collaborate, review changes, and roll back when something breaks. Tools like Git, combined with platforms like GitHub or GitLab, provide the foundation. Branching strategies and pull requests help teams manage change in a structured, auditable way. Even configurations—such as data source definitions or schedule timings—can and should be versioned, ideally alongside the pipeline logic they support. Continuous Integration and Testing Data pipelines are code, and they should be tested like code. This includes unit tests for transformation logic, integration tests for full pipeline runs, and data quality checks that assert assumptions about the shape and content of your data. CI pipelines, powered by tools like GitHub Actions, GitLab CI, or Jenkins, can run these tests automatically on each commit or pull request. They ensure that changes don’t break existing functionality or introduce regressions. Testing data workflows is more nuanced than testing application logic. It often involves staging environments with synthetic or sample data, mocking external dependencies, and verifying outputs across time windows. But the goal is the same: catch problems early, not after they hit production. Infrastructure as Code Managing infrastructure manually—whether it’s a Spark cluster, an Airflow deployment, or a cloud storage bucket—doesn’t scale. Infrastructure as code (IaC) provides a way to define your environment in declarative files that can be versioned, reviewed, and deployed automatically. Tools like Terraform, Pulumi, and CloudFormation allow data teams to define compute resources, networking, permissions, and even pipeline configurations as code. Combined with CI/CD, IaC enables repeatable deployments, easier disaster recovery, and consistent environments across dev, staging, and production. IaC also helps in tracking infrastructure changes over time. When something breaks, you can look at the exact commit that introduced the change—not just guess what might have gone wrong. Continuous Deployment for Pipelines Once code is tested and approved, it needs to be deployed. Continuous deployment automates this step, pushing new pipeline definitions or transformation logic into production systems with minimal manual intervention. In practice, this might mean updating DAGs in Airflow, deploying dbt models, or rolling out new configurations to a Kafka stream processor. The process should include validation steps, such as verifying schema compatibility or testing data output in a sandbox environment before it goes live. Feature flags and gradual rollouts—techniques borrowed from application development—can also be applied to data. They allow teams to test changes on a subset of data or users before promoting them system-wide. Monitoring and Incident Response Finally, DevOps emphasizes the importance of monitoring and observability. Data pipelines need the same treatment. Logs, metrics, and alerts should pro

Free Resources

- Free Apache Iceberg Course

- Free Copy of “Apache Iceberg: The Definitive Guide”

- Free Copy of “Apache Polaris: The Definitive Guide”

- 2025 Apache Iceberg Architecture Guide

- How to Join the Iceberg Community

- Iceberg Lakehouse Engineering Video Playlist

- Ultimate Apache Iceberg Resource Guide

As data systems grow more complex and interconnected, the principles of DevOps—long applied to software engineering—have become increasingly relevant to data engineering. Continuous integration, infrastructure as code, testing, and automation aren’t just for deploying apps anymore. They’re essential for delivering reliable, maintainable, and scalable data pipelines.

In this post, we’ll explore how DevOps practices translate into the world of data engineering, why they matter, and what tools and techniques help bring them to life in modern data teams.

Bridging the Gap Between Code and Data

At the heart of DevOps is the idea that development and operations should be integrated. In traditional software development, this means automating the steps from writing code to running it in production. For data engineering, the challenge is similar—but the output isn't always a user-facing app. Instead, it's pipelines, transformations, and datasets that power reports, dashboards, and machine learning models.

The core question becomes: how do we ensure that changes to data workflows are tested, deployed, and monitored with the same rigor as application code?

The answer lies in adopting DevOps-inspired practices like version control, automated testing, continuous deployment, and infrastructure automation—all tailored to the specifics of data systems.

Version Control for Pipelines and Configurations

Just like in software engineering, all code that defines your data infrastructure—SQL queries, transformation logic, orchestration DAGs, and even schema definitions—should live in version-controlled repositories.

This makes it easier to collaborate, review changes, and roll back when something breaks. Tools like Git, combined with platforms like GitHub or GitLab, provide the foundation. Branching strategies and pull requests help teams manage change in a structured, auditable way.

Even configurations—such as data source definitions or schedule timings—can and should be versioned, ideally alongside the pipeline logic they support.

Continuous Integration and Testing

Data pipelines are code, and they should be tested like code. This includes unit tests for transformation logic, integration tests for full pipeline runs, and data quality checks that assert assumptions about the shape and content of your data.

CI pipelines, powered by tools like GitHub Actions, GitLab CI, or Jenkins, can run these tests automatically on each commit or pull request. They ensure that changes don’t break existing functionality or introduce regressions.

Testing data workflows is more nuanced than testing application logic. It often involves staging environments with synthetic or sample data, mocking external dependencies, and verifying outputs across time windows. But the goal is the same: catch problems early, not after they hit production.

Infrastructure as Code

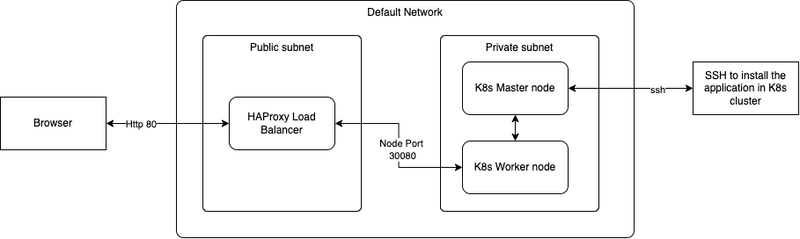

Managing infrastructure manually—whether it’s a Spark cluster, an Airflow deployment, or a cloud storage bucket—doesn’t scale. Infrastructure as code (IaC) provides a way to define your environment in declarative files that can be versioned, reviewed, and deployed automatically.

Tools like Terraform, Pulumi, and CloudFormation allow data teams to define compute resources, networking, permissions, and even pipeline configurations as code. Combined with CI/CD, IaC enables repeatable deployments, easier disaster recovery, and consistent environments across dev, staging, and production.

IaC also helps in tracking infrastructure changes over time. When something breaks, you can look at the exact commit that introduced the change—not just guess what might have gone wrong.

Continuous Deployment for Pipelines

Once code is tested and approved, it needs to be deployed. Continuous deployment automates this step, pushing new pipeline definitions or transformation logic into production systems with minimal manual intervention.

In practice, this might mean updating DAGs in Airflow, deploying dbt models, or rolling out new configurations to a Kafka stream processor. The process should include validation steps, such as verifying schema compatibility or testing data output in a sandbox environment before it goes live.

Feature flags and gradual rollouts—techniques borrowed from application development—can also be applied to data. They allow teams to test changes on a subset of data or users before promoting them system-wide.

Monitoring and Incident Response

Finally, DevOps emphasizes the importance of monitoring and observability. Data pipelines need the same treatment. Logs, metrics, and alerts should provide insight into pipeline health, performance, and failures.

Tools like Prometheus, Grafana, and cloud-native observability platforms can be integrated with orchestration tools to expose runtime metrics. Custom dashboards can show pipeline durations, success rates, and error counts. Alerts can notify teams when jobs fail or when output data violates expectations.

Just as importantly, incidents should feed back into improvement. Postmortems, runbooks, and blameless retrospectives help teams learn from failures and evolve their systems.

Shifting the Culture

Adopting DevOps for data engineering is as much about culture as it is about tools. It means treating data workflows with the same discipline as software systems—building, testing, deploying, and monitoring them in automated, repeatable ways.

This cultural shift leads to faster iterations, fewer outages, and more confidence in the data products that teams rely on. It also reduces the operational load on engineers, freeing them to focus on value creation instead of firefighting.

In the next post, we’ll step back and look at the cloud ecosystem that underpins much of this work. Understanding the role of managed services and cloud-native tools is key to building a modern, agile data platform.

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

![[FREE EBOOKS] Learn Computer Forensics — 2nd edition, AI and Business Rule Engines for Excel Power Users & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From Art School Drop-out to Microsoft Engineer with Shashi Lo [Podcast #170]](https://cdn.hashnode.com/res/hashnode/image/upload/v1746203291209/439bf16b-c820-4fe8-b69e-94d80533b2df.png?#)

(1).jpg?#)

_Inge_Johnsson-Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Apple to Split iPhone Launches Across Fall and Spring in Major Shakeup [Report]](https://www.iclarified.com/images/news/97211/97211/97211-640.jpg)

![Apple to Move Camera to Top Left, Hide Face ID Under Display in iPhone 18 Pro Redesign [Report]](https://www.iclarified.com/images/news/97212/97212/97212-640.jpg)

![Apple Developing Battery Case for iPhone 17 Air Amid Battery Life Concerns [Report]](https://www.iclarified.com/images/news/97208/97208/97208-640.jpg)

![AirPods 4 On Sale for $99 [Lowest Price Ever]](https://www.iclarified.com/images/news/97206/97206/97206-640.jpg)

![[Updated] Samsung’s 65-inch 4K Smart TV Just Crashed to $299 — That’s Cheaper Than an iPad](https://www.androidheadlines.com/wp-content/uploads/2025/05/samsung-du7200.jpg)